Best AI tools for< Deploy Large Language Models On Edge Devices >

20 - AI tool Sites

Sarvam AI

Sarvam AI is an AI application focused on leading transformative research in AI to develop, deploy, and distribute Generative AI applications in India. The platform aims to build efficient large language models for India's diverse linguistic culture and enable new GenAI applications through bespoke enterprise models. Sarvam AI is also developing an enterprise-grade platform for developing and evaluating GenAI apps, while contributing to open-source models and datasets to accelerate AI innovation.

Synthreo

Synthreo is an AI tool that empowers businesses to streamline operations, reduce costs, and drive growth through intelligent AI agents. It provides cutting-edge AI agent solutions that automate routine tasks, enhance decision-making, and create seamless collaboration between human teams and digital labor. Synthreo offers transformative advantages for businesses of all sizes, enabling operational efficiency and strategic growth. The platform implements advanced security measures, complies with industry standards, and upholds ethical AI usage. Businesses can leverage AI-powered digital labor to achieve unprecedented efficiency, innovation, and growth.

AiFA Labs

AiFA Labs is an AI platform that offers a comprehensive suite of generative AI products and services for enterprises. The platform enables businesses to create, manage, and deploy generative AI applications responsibly and at scale. With a focus on governance, compliance, and security, AiFA Labs provides a range of AI tools to streamline business operations, enhance productivity, and drive innovation. From AI code assistance to chat interfaces and data synthesis, AiFA Labs empowers organizations to leverage the power of AI for various use cases across different industries.

Cohere

Cohere is the leading AI platform for enterprise, offering generative AI, search and discovery, and advanced retrieval solutions. Their models are designed to enhance the global workforce, empowering businesses to thrive in the AI era. With features like Cohere Command, Cohere Embed, and Cohere Rerank, the platform enables the development of scalable and efficient AI-powered applications. Cohere focuses on optimizing enterprise data through language-based models, supporting over 100 languages for enhanced accuracy and efficiency.

Predibase

Predibase is a platform for fine-tuning and serving Large Language Models (LLMs). It provides a cost-effective and efficient way to train and deploy LLMs for a variety of tasks, including classification, information extraction, customer sentiment analysis, customer support, code generation, and named entity recognition. Predibase is built on proven open-source technology, including LoRAX, Ludwig, and Horovod.

PromptPoint Playground

PromptPoint Playground is an AI tool designed to help users design, test, and deploy prompts quickly and efficiently. It enables teams to create high-quality LLM outputs through automatic testing and evaluation. The platform allows users to make non-deterministic prompts predictable, organize prompt configurations, run automated tests, and monitor usage. With a focus on collaboration and accessibility, PromptPoint Playground empowers both technical and non-technical users to leverage the power of large language models for prompt engineering.

Azure AI Platform

Azure AI Platform by Microsoft offers a comprehensive suite of artificial intelligence services and tools for developers and businesses. It provides a unified platform for building, training, and deploying AI models, as well as integrating AI capabilities into applications. With a focus on generative AI, multimodal models, and large language models, Azure AI empowers users to create innovative AI-driven solutions across various industries. The platform also emphasizes content safety, scalability, and agility in managing AI projects, making it a valuable resource for organizations looking to leverage AI technologies.

Prisms

Prisms is a no-code platform for building AI-powered apps. It allows users to harness the power of AI without having to write any code. Prisms is built on top of Large Language models including GPT3, DALL-E, and Stable Diffusion. Users can connect the pieces in Prisms to stack together data sources, user inputs, and off-the-shelf building blocks to create their own AI-powered apps. Prisms also makes it easy to deploy AI-powered apps directly from the platform with its pre-built UI. Alternatively, users can build their own frontend and use Prisms as a backend for their AI logic.

FluidStack

FluidStack is a leading GPU cloud platform designed for AI and LLM (Large Language Model) training. It offers unlimited scale for AI training and inference, allowing users to access thousands of fully-interconnected GPUs on demand. Trusted by top AI startups, FluidStack aggregates GPU capacity from data centers worldwide, providing access to over 50,000 GPUs for accelerating training and inference. With 1000+ data centers across 50+ countries, FluidStack ensures reliable and efficient GPU cloud services at competitive prices.

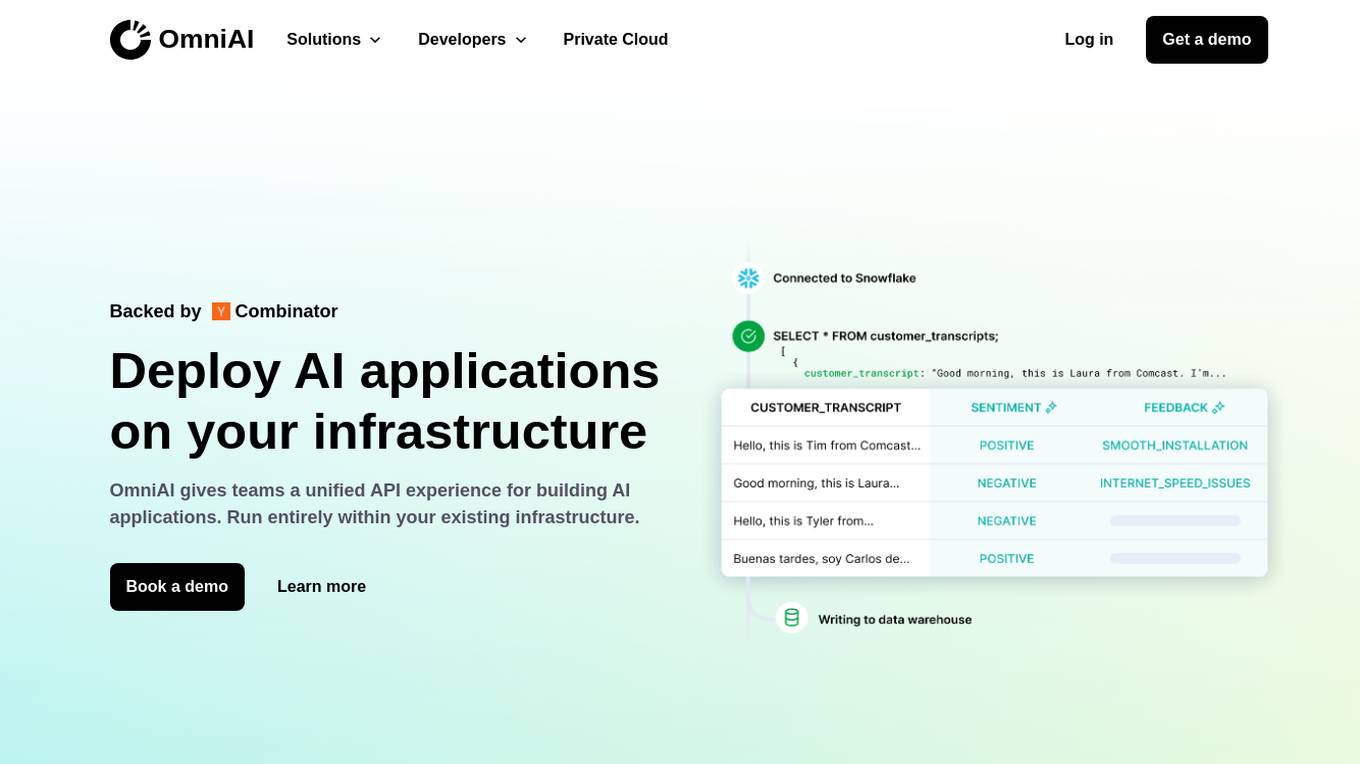

OmniAI

OmniAI is an AI tool that allows teams to deploy AI applications on their existing infrastructure. It provides a unified API experience for building AI applications and offers a wide selection of industry-leading models. With tools like Llama 3, Claude 3, Mistral Large, and AWS Titan, OmniAI excels in tasks such as natural language understanding, generation, safety, ethical behavior, and context retention. It also enables users to deploy and query the latest AI models quickly and easily within their virtual private cloud environment.

Dust

Dust is a customizable and secure AI assistant platform that helps businesses amplify their team's potential. It allows users to deploy the best Large Language Models to their company, connect Dust to their team's data, and empower their teams with assistants tailored to their specific needs. Dust is exceptionally modular and adaptable, tailoring to unique requirements and continuously evolving to meet changing needs. It supports multiple sources of data and models, including proprietary and open-source models from OpenAI, Anthropic, and Mistral. Dust also helps businesses identify their most creative and driven team members and share their experience with AI throughout the company. It promotes collaboration with shared conversations, @mentions in discussions, and Slackbot integration. Dust prioritizes security and data privacy, ensuring that data remains private and that enterprise-grade security measures are in place to manage data access policies.

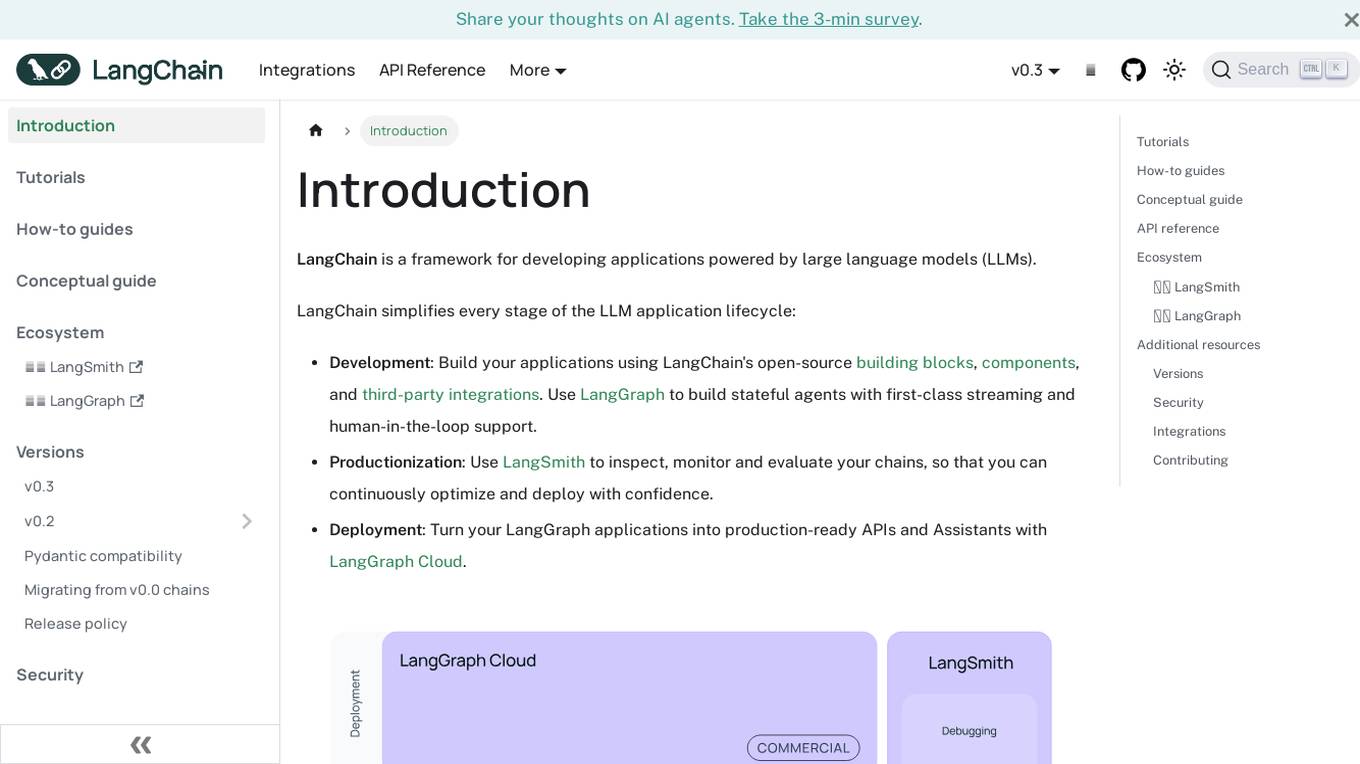

LangChain

LangChain is a framework for developing applications powered by large language models (LLMs). It simplifies every stage of the LLM application lifecycle, including development, productionization, and deployment. LangChain consists of open-source libraries such as langchain-core, langchain-community, and partner packages. It also includes LangGraph for building stateful agents and LangSmith for debugging and monitoring LLM applications.

Langtail

Langtail is a platform that helps developers build, test, and deploy AI-powered applications. It provides a suite of tools to help developers debug prompts, run tests, and monitor the performance of their AI models. Langtail also offers a community forum where developers can share tips and tricks, and get help from other users.

Arcee AI

Arcee AI is a platform that offers a cost-effective, secure, end-to-end solution for building and deploying Small Language Models (SLMs). It allows users to merge and train custom language models by leveraging open source models and their own data. The platform is known for its Model Merging technique, which combines the power of pre-trained Large Language Models (LLMs) with user-specific data to create high-performing models across various industries.

Denvr DataWorks AI Cloud

Denvr DataWorks AI Cloud is a cloud-based AI platform that provides end-to-end AI solutions for businesses. It offers a range of features including high-performance GPUs, scalable infrastructure, ultra-efficient workflows, and cost efficiency. Denvr DataWorks is an NVIDIA Elite Partner for Compute, and its platform is used by leading AI companies to develop and deploy innovative AI solutions.

ConsoleX

ConsoleX is an advanced AI tool that offers a wide range of functionalities to unlock infinite possibilities in the field of artificial intelligence. It provides users with a powerful platform to develop, test, and deploy AI models with ease. With cutting-edge features and intuitive interface, ConsoleX is designed to cater to the needs of both beginners and experts in the AI domain. Whether you are a data scientist, researcher, or developer, ConsoleX empowers you to explore the full potential of AI technology and drive innovation in your projects.

Allganize

Allganize Inc. is a leading provider of enterprise AI solutions. Their platform enables businesses to build and deploy custom AI applications without the need for coding. Allganize's solutions are used by a variety of industries, including financial services, healthcare, and manufacturing.

Langtrace AI

Langtrace AI is an open-source observability tool powered by Scale3 Labs that helps monitor, evaluate, and improve LLM (Large Language Model) applications. It collects and analyzes traces and metrics to provide insights into the ML pipeline, ensuring security through SOC 2 Type II certification. Langtrace supports popular LLMs, frameworks, and vector databases, offering end-to-end observability and the ability to build and deploy AI applications with confidence.

Unsloth

Unsloth is an AI tool designed to make finetuning large language models like Llama-3, Mistral, Phi-3, and Gemma 2x faster, use 70% less memory, and with no degradation in accuracy. The tool provides documentation to help users navigate through training their custom models, covering essentials such as installing and updating Unsloth, creating datasets, running, and deploying models. Users can also integrate third-party tools and utilize platforms like Google Colab.

Fleak AI Workflows

Fleak AI Workflows is a low-code serverless API Builder designed for data teams to effortlessly integrate, consolidate, and scale their data workflows. It simplifies the process of creating, connecting, and deploying workflows in minutes, offering intuitive tools to handle data transformations and integrate AI models seamlessly. Fleak enables users to publish, manage, and monitor APIs effortlessly, without the need for infrastructure requirements. It supports various data types like JSON, SQL, CSV, and Plain Text, and allows integration with large language models, databases, and modern storage technologies.

1 - Open Source AI Tools

distributed-llama

Distributed Llama is a tool that allows you to run large language models (LLMs) on weak devices or make powerful devices even more powerful by distributing the workload and dividing the RAM usage. It uses TCP sockets to synchronize the state of the neural network, and you can easily configure your AI cluster by using a home router. Distributed Llama supports models such as Llama 2 (7B, 13B, 70B) chat and non-chat versions, Llama 3, and Grok-1 (314B).

20 - OpenAI Gpts

Frontend Developer

AI front-end developer expert in coding React, Nextjs, Vue, Svelte, Typescript, Gatsby, Angular, HTML, CSS, JavaScript & advanced in Flexbox, Tailwind & Material Design. Mentors in coding & debugging for junior, intermediate & senior front-end developers alike. Let’s code, build & deploy a SaaS app.

Azure Arc Expert

Azure Arc expert providing guidance on architecture, deployment, and management.

Instructor GCP ML

Formador para la certificación de ML Engineer en GCP, con respuestas y explicaciones detalladas.

Docker and Docker Swarm Assistant

Expert in Docker and Docker Swarm solutions and troubleshooting.

Cloudwise Consultant

Expert in cloud-native solutions, provides tailored tech advice and cost estimates.