Best AI Tools to Assess Performance

20 - AI Tool Sites

Simpleem

Simpleem is an Artificial Emotional Intelligence (AEI) tool that helps users uncover intentions, predict success, and use behavioral insights to improve their interactions. It measures interactions and correlates them with concrete outcomes, analyzing verbal, para-verbal, and non-verbal cues to strengthen customer relationships, track customer rapport, and assess team performance. The tool aims to identify win/lose patterns in behavior, guide users on boosting performance, and help prevent burnout by flagging warning signs early. Simpleem uses proprietary AI models to analyze real-world data and translate behavioral insights into concrete business metrics, and it reports 94% accuracy in success prediction.

Truesight

Goodeye Labs offers Truesight, an AI evaluation tool that lets domain experts assess the performance of AI products without extensive technical expertise. Truesight bridges the gap between domain knowledge and technical implementation, enabling users to evaluate AI-generated content against specific standards and criteria. By putting judgment of AI performance in the hands of domain experts, Truesight aims to streamline evaluation, reduce costs, help teams meet deadlines, and improve the reliability of AI products.

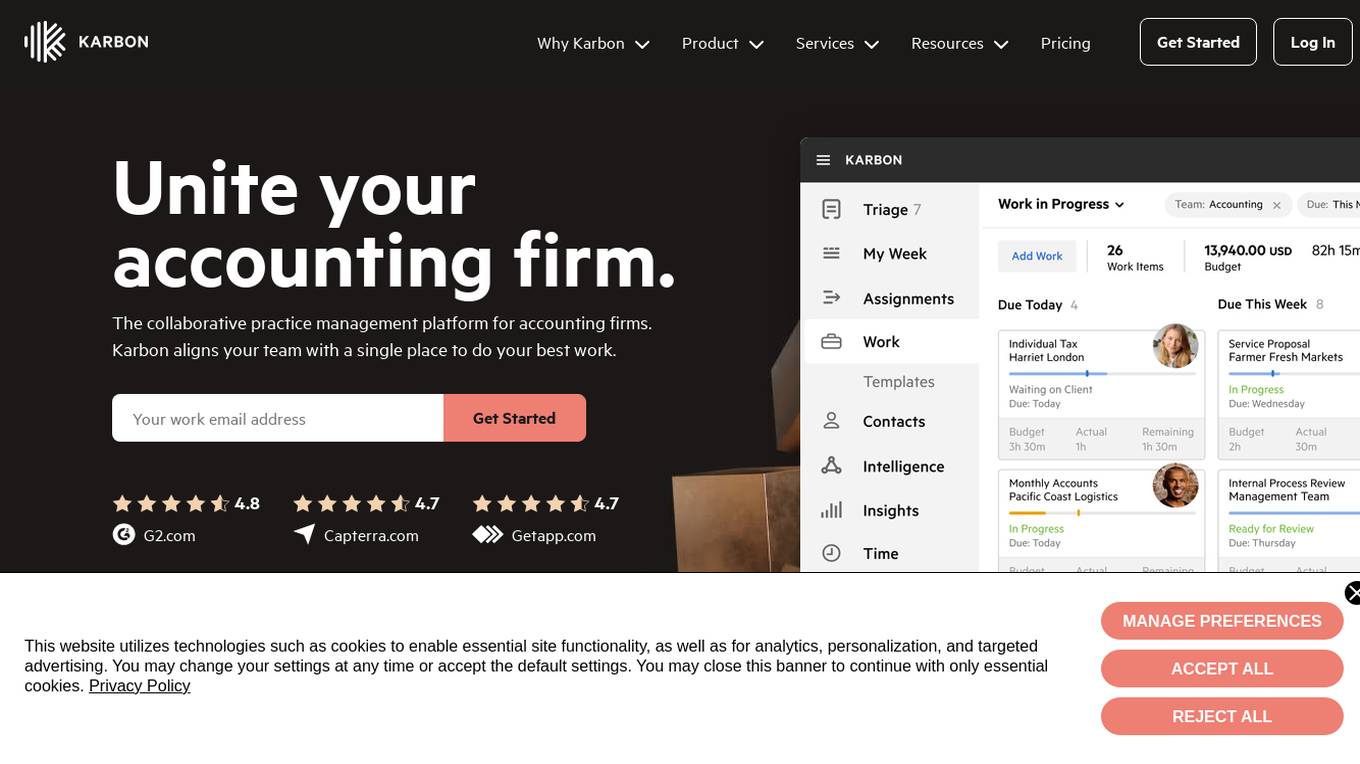

Karbon

Karbon is AI-powered practice management software for accounting firms, designed to increase visibility, control, automation, efficiency, collaboration, and connectivity. Its features include team collaboration, workflow automation, project management, time and budget tracking, billing and payments, reporting and analysis, AI integration, email management, a shared inbox, calendar integration, client management, a client portal, eSignatures, document management, and enterprise-grade security. Karbon helps firms automate tasks, work faster, strengthen client connections, and increase productivity. It also provides group onboarding, guided implementation, and resources for accounting firms such as articles, ebooks, and videos, along with live training, customer support, and a practice excellence scorecard that firms can use to assess their performance. Karbon highlights its AI and GPT integration as a way for users to save time and work more efficiently.

Graphio

Graphio is an AI-driven employee scoring and scenario-building tool that uses AI agents to score employees continuously and in real time, assessing potential, predicting flight risk, and identifying future leaders. It aims to replace subjective evaluations with data-driven insights and reduce bias in talent decisions such as promotions, layoffs, and succession planning. It offers compliance features and user-controlled rules intended to keep assessments accurate, secure, and aligned with legal and regulatory requirements. The platform also emphasizes security, privacy, and personalized coaching to improve employee engagement and reduce turnover.

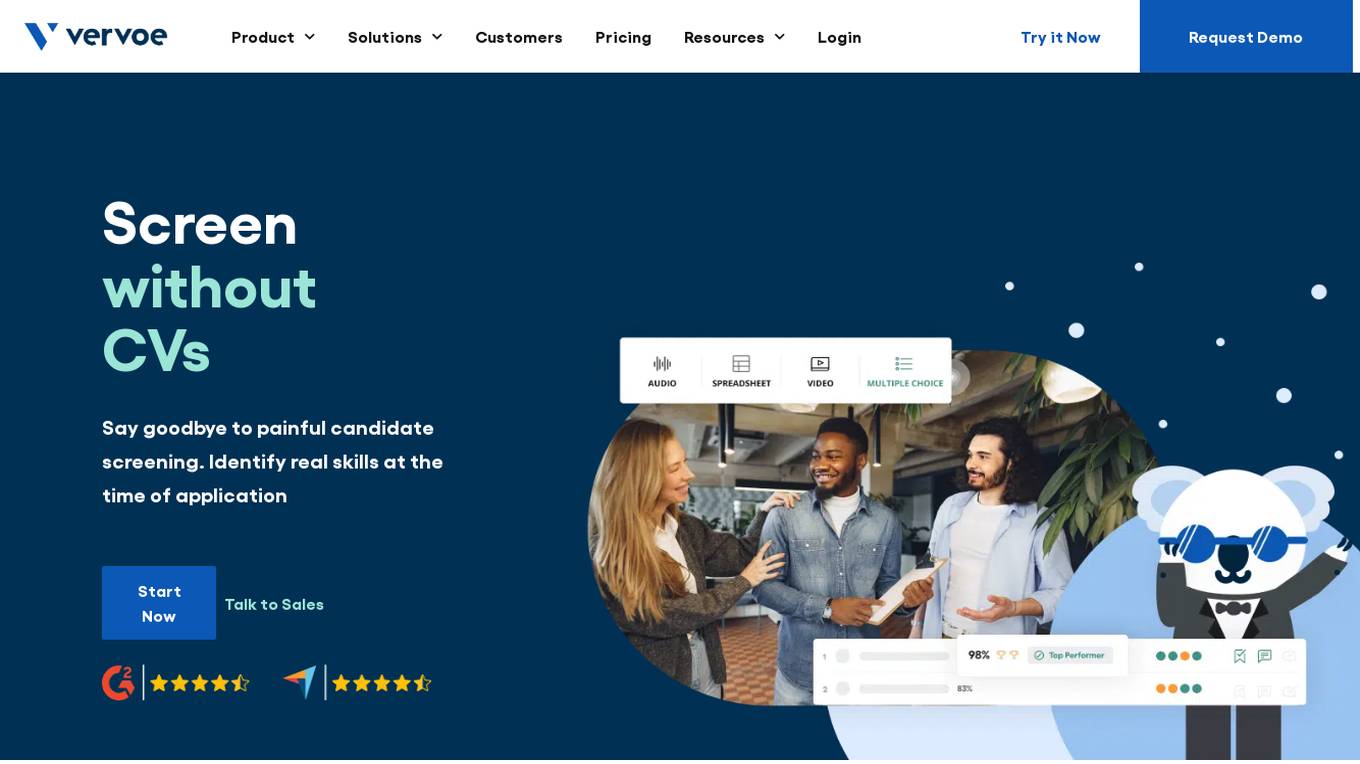

Vervoe

Vervoe is an AI-powered recruitment platform that offers skills-based screening through AI job simulations and assessments. It streamlines interviews, provides standardized templates, and supports team collaboration. Vervoe ranks applicants by performance and produces detailed reports so employers can make data-backed decisions. The platform focuses on task-based evaluation of job-specific skills to improve the accuracy of hiring decisions. Employers can create customized tests or choose from a library of assessments that Vervoe describes as scientifically mapped. Vervoe uses AI to grade and rank candidates efficiently. The platform also supports employer branding, gives candidates feedback, and aims to provide a smooth candidate experience. Vervoe serves a range of industries and company types.

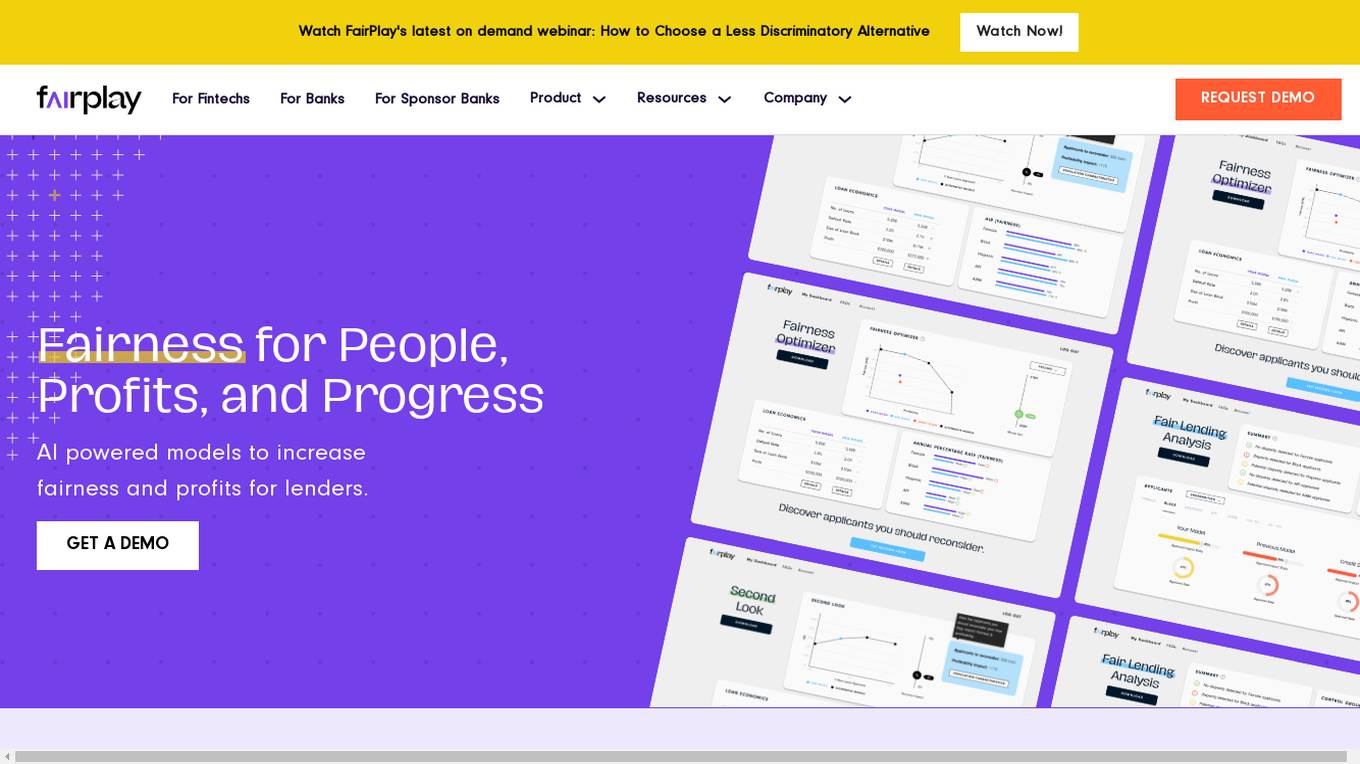

FairPlay

FairPlay is a Fairness-as-a-Service solution for financial institutions, offering AI-powered tools to assess automated decisioning models quickly. It aims to increase both fairness and profits by optimizing marketing, underwriting, and pricing strategies. Its features include Fairness Optimizer, Second Look, Customer Composition, Redline Status, and Proxy Detection. FairPlay helps users identify and overcome tradeoffs between model performance and disparity, assess geographic fairness, de-bias proxies for protected classes, and tune models to reduce disparities without increasing risk. Claimed advantages include improved compliance, speed, and readiness through automation, higher approval rates without added risk, and rigorous Fair Lending analysis for sponsor banks and regulators. Disadvantages include the need for data integration, the potential for bias in AI algorithms, and the technical expertise required to interpret results.

SmallTalk2Me

SmallTalk2Me is an AI-powered simulator designed to help users improve their spoken English. Its features include mock job interviews, IELTS speaking test simulations, and daily stories and courses. The platform uses AI to give instant feedback on performance, helping users identify areas for improvement and track their progress over time.

Underwrite.ai

Underwrite.ai is a platform that uses advances in artificial intelligence and machine learning to give lenders nonlinear, dynamic models of credit risk. It analyzes thousands of data points from credit bureau sources to model credit risk for consumers and small businesses, and the company says its models outperform traditional approaches. Its underwriting methodology targets outcomes such as profitability and customer lifetime value, allowing organizations to improve lending performance without capital investment or lengthy build times. The platform's models learn continuously, adapt to market changes in real time, and return explainable decisions in milliseconds.

Cognii

Cognii is an AI-based educational technology provider offering solutions for K-12, higher education, and corporate training. Its award-winning EdTech product supports personalized learning, intelligent tutoring, open-response assessments, and rich analytics. Cognii's Virtual Learning Assistant engages students in chatbot-style conversations, giving instant feedback and personalized hints and guiding them toward mastery. The platform aims to deliver 21st-century online education with better learning outcomes and lower costs.

iCAD

iCAD is an AI-powered application for cancer detection, focused on breast cancer. Its suite includes Detection, Density Assessment, and Risk Evaluation solutions, which the company says are backed by science, clinical evidence, and proven patient outcomes. iCAD's AI solutions aim to reveal cancers that might otherwise stay hidden, giving patients and clinicians greater certainty and, ultimately, improving patient outcomes and saving more lives.

Welo Data

Welo Data specializes in AI benchmarking, model assessment, and building high-quality training datasets for AI models. Its services include supervised fine-tuning, reinforcement learning from human feedback, data generation, expert evaluations, and a data quality framework to support the development of world-class AI models. Drawing on over 27 years of experience, Welo Data combines language expertise and AI data to deliver training and performance evaluation solutions.

Clarity AI

Clarity AI is an AI-powered technology platform that offers a Sustainability Tech Kit for sustainable investing, shopping, reporting, and benchmarking. The platform provides built-in sustainability technology with customizable data, methodologies, and tools. It is designed to integrate into existing workflows, offering scalable, flexible end-to-end SaaS tools for sustainability use cases. Clarity AI applies AI and machine learning to large volumes of data points with the aim of providing reliable, transparent data coverage. The platform is designed to help users assess, analyze, and report on sustainability efficiently and confidently.

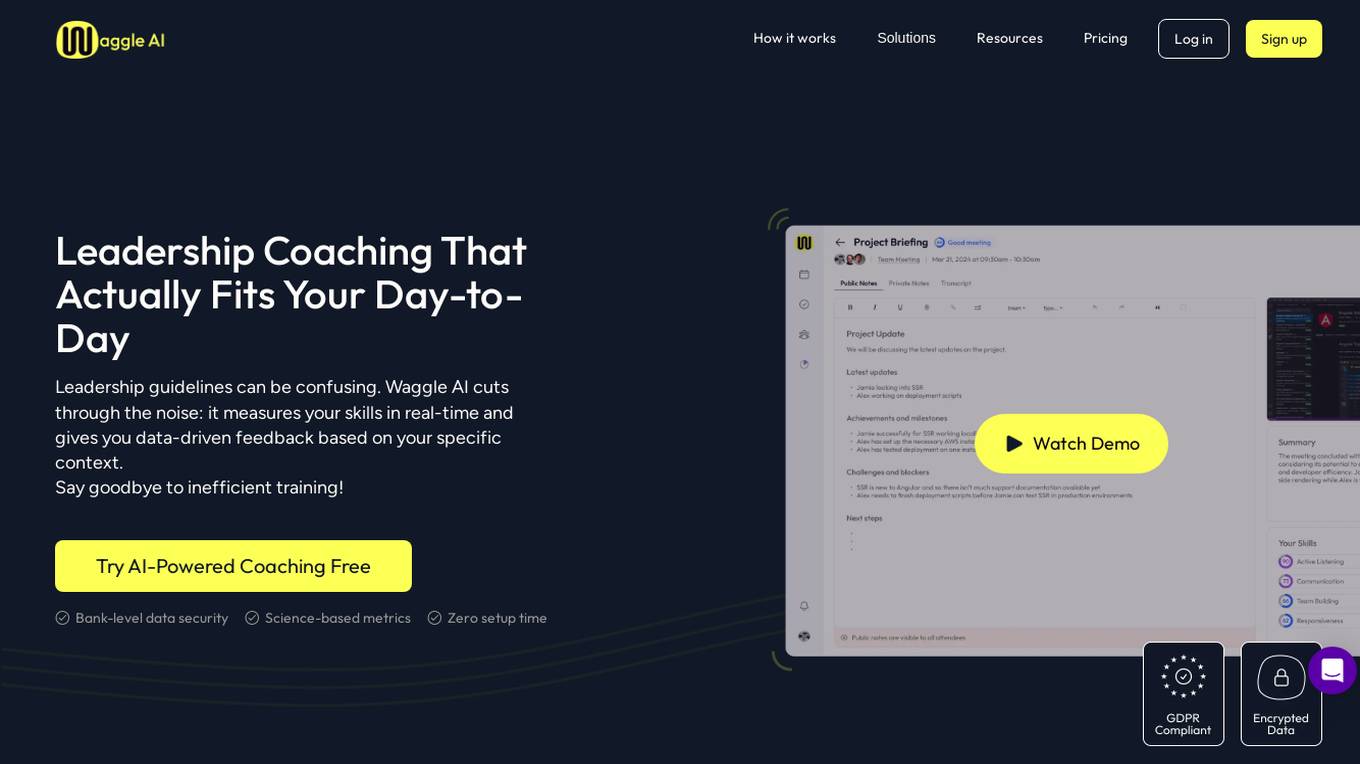

Waggle AI

Waggle AI is an AI-powered coaching tool designed to help leaders and managers improve their skills in real time. It provides personalized feedback, skill insights, and meeting analytics to increase leadership effectiveness. Waggle AI integrates with existing meeting tools and calendars, offering calendar management, meeting preparation, AI note-taking, meeting metrics, and skills assessment. The application aims to accelerate leadership development by nudging users toward best practices, providing data-driven insights, and encouraging continuous improvement.

Gemmo AI

Gemmo AI is a boutique AI firm that builds bespoke AI agents combined with human creativity, insight, and judgment. Its services include AI Pathfinder for assessing opportunities, AI Implementation for deployment, and AI Optimization for performance improvement. Gemmo AI emphasizes agility, value, and fast, measurable results to help businesses integrate advanced AI technologies into their workflows.

Pitch N Hire

Pitch N Hire is AI-powered applicant tracking and assessment software designed to help recruiters make better talent decisions. The platform takes a data-driven approach, using descriptive, predictive, and prescriptive analytics to address talent acquisition challenges. It provides insights into candidate behavior, automates recruiting processes, and connects to a large network of career sites. Its AI data models forecast on-the-job performance, streamline talent pipelines, and support personalized, branded candidate experiences.

Gradescope

Gradescope is an online assessment platform that helps educators deliver and grade assessments. It supports several assignment types, including variable-length assignments, fixed-template assignments, paper-based assignments, and programming projects. Gradescope lets educators give detailed feedback, keep grading consistent with flexible rubrics, and send grades to students with a click. Its per-question and per-rubric statistics help educators understand student performance and adjust their teaching. Gradescope also includes AI-assisted grading features, such as answer grouping, to speed up grading.

ISMS Copilot

ISMS Copilot is an AI-powered assistant designed to simplify ISO 27001 preparation for both experts and beginners. Its features cover ISMS scope definition, risk assessment and treatment, compliance navigation, incident management, business continuity planning, performance tracking, and more. The tool aims to save time, provide precise guidance, and help users work toward ISO 27001 compliance. With a focus on security and confidentiality, ISMS Copilot is aimed at small businesses and information security professionals.

K2 AI

K2 AI is an AI consulting company offering services from ideation to impact, with a focus on AI strategy, implementation, operation, and research. It supports and invests in emerging start-ups and conducts research to advance the field. The company helps executives assess organizational strengths, prioritize AI use cases, develop sustainable AI strategies, and continuously monitor and improve AI solutions. K2 AI also provides executive briefings, model development, and deployment services to accelerate AI initiatives. The company aims to deliver business value through rapid, user-centric, data-driven AI development.

GreetAI

GreetAI is an AI-powered hiring platform that conducts AI video interviews to evaluate applicants efficiently. It generates reports, supports customizable interview questions, and highlights key points to help recruiters make informed decisions. GreetAI's features include interview simulations, job post generation, AI video screenings, and detailed candidate performance metrics.

Galactis.ai

Galactis.ai is an AI-powered IT asset and network monitoring platform for enterprises. It offers real-time visibility, predictive insights, and proactive control across on-premises, cloud, and hybrid environments. The platform helps optimize IT spend, maintain high network uptime, automate operational tasks, and provide a single source of truth for enterprise assets. Galactis says it is used by regulated enterprises for production-grade IT asset management and network operations, with optional AI services delivered under strict governance.

1 - Open Source AI Tool

rageval

Rageval is an evaluation tool for Retrieval-Augmented Generation (RAG) methods. It breaks RAG evaluation into subtasks: query rewriting, document ranking, information compression, evidence verification, answer generation, and result validation. The tool provides metrics for answer correctness and answer groundedness, along with benchmark results on the ASQA and ALCE datasets. Users can install Rageval to assess the performance of RAG models on question-answering tasks.

20 - OpenAI GPTs

Leadership Development Advisor

Guides leadership growth to enhance organizational performance.

I4T Assessor - UNESCO Tech Platform Trust Helper

Helps you evaluate whether tech platforms meet UNESCO's Internet for Trust Guidelines for the Governance of Digital Platforms.

Evaluation Criteria Creator

Simply write any topic (superheroes, vacuums, Pokémon, diamonds…) and I'll provide evaluation criteria you can use.

IQ Test

IQ Test is designed to simulate an IQ testing environment. It provides a formal and objective experience, delivering questions and processing answers in a straightforward manner.

Safaricom Financial Analyst

Analyzes Safaricom's half-year (HY) and full-year (FY) financials, with detailed insights across different years.

Biz Problem Solver

AI-enhanced, expert-driven business problem solving using frameworks such as First Principles, A3, 8D, McKinsey 7S, 4S, DMAIC, Kaizen, Lean Six Sigma, and the 40 TRIZ Principles of Innovation.

Digital Assets @ FS

Consultant on digital assets in financial services, using a pricing study for insights.

UNICORN Binance Suite Assistant

Elegant assistance and expertise for integrating the Unicorn Binance Suite.

HomeScore

Assess a potential home's quality using your own photos and property inspection reports.

Ready for Transformation

Assess your company's real appetite for new technologies or new ways of working.

TRL Explorer

Assess the Technology Readiness Level (TRL) of your projects, get ideas for specific TRLs, and learn how to advance from one TRL to the next.

🎯 CulturePulse Pro Advisor 🌐

Empowers leaders to gauge and enhance company culture. Use advanced analytics to assess, report, and develop a thriving workplace culture. 🚀💼📊

香港地盤安全佬 HK Construction Site Safety Advisor

Upload a site photo to assess potential hazards and get advice from an experienced AI safety officer.