Best AI tools for < Adopt Names >

20 - AI tool Sites

NameBridge

NameBridge is an AI-powered tool that generates connotative Chinese names from English names. It aims to produce names that sound good and carry deep meaning, helping individuals create culturally appropriate Chinese names for business, writing, art, travel, and personal interests. Names are generated instantly and are intended to be unique and culturally significant.

Escape

Escape is a dynamic application security testing (DAST) tool that stands out for its ability to work seamlessly with modern technology stacks, test business logic, and help developers address vulnerabilities efficiently. It offers features like API discovery and security testing, GraphQL security testing, and tailored remediations. Escape provides advantages such as high code coverage improvement, fewer false negatives, time-saving benefits, and application risk reduction. However, it also has disadvantages like the need for manual code remediations and limited support for certain security integrations.

Tenable AI Exposure

Tenable AI Exposure is an AI tool that helps organizations secure and understand their use of AI platforms. It provides visibility, context, and control to manage risks from enterprise AI platforms, enabling security leaders to govern AI usage, enforce policies, and prevent exposures. The tool allows users to track AI platform usage, identify and fix AI misconfigurations, protect against AI exploitation, and deploy quickly with industry-leading security for AI platform use.

Arya.ai

Arya.ai is an AI tool designed for Banks, Insurers, and Financial Services to deploy safe, responsible, and auditable AI applications. It offers a range of AI Apps, ML Observability Tools, and a Decisioning Platform. Arya.ai provides curated APIs, ML explainability, monitoring, and audit capabilities. The platform includes task-specific AI models for autonomous underwriting, claims processing, fraud monitoring, and more. Arya.ai aims to facilitate the rapid deployment and scaling of AI applications while ensuring institution-wide adoption of responsible AI practices.

Team-GPT

Team-GPT is enterprise AI software designed for teams of 2 to 5,000 members. It provides a shared workspace where teams can organize knowledge, collaborate, and master AI. The platform offers features such as folders and subfolders for organizing chats, a prompt library with ready-to-use templates, and adoption reports to measure AI adoption rates. Team-GPT aims to make ChatGPT more accessible and cost-effective for teams by providing pay-per-use pricing and priority access to the OpenAI API.

Weam

Weam is an AI adoption platform designed for digital agencies to supercharge their operations with collaborative AI. It offers a comprehensive suite of tools for simplifying AI implementation, including project management, resource allocation, training modules, and ongoing support to ensure successful AI integration. Weam enables teams to interact and collaborate over their preferred LLMs, facilitating scalability, time-saving, and widespread AI adoption across the organization.

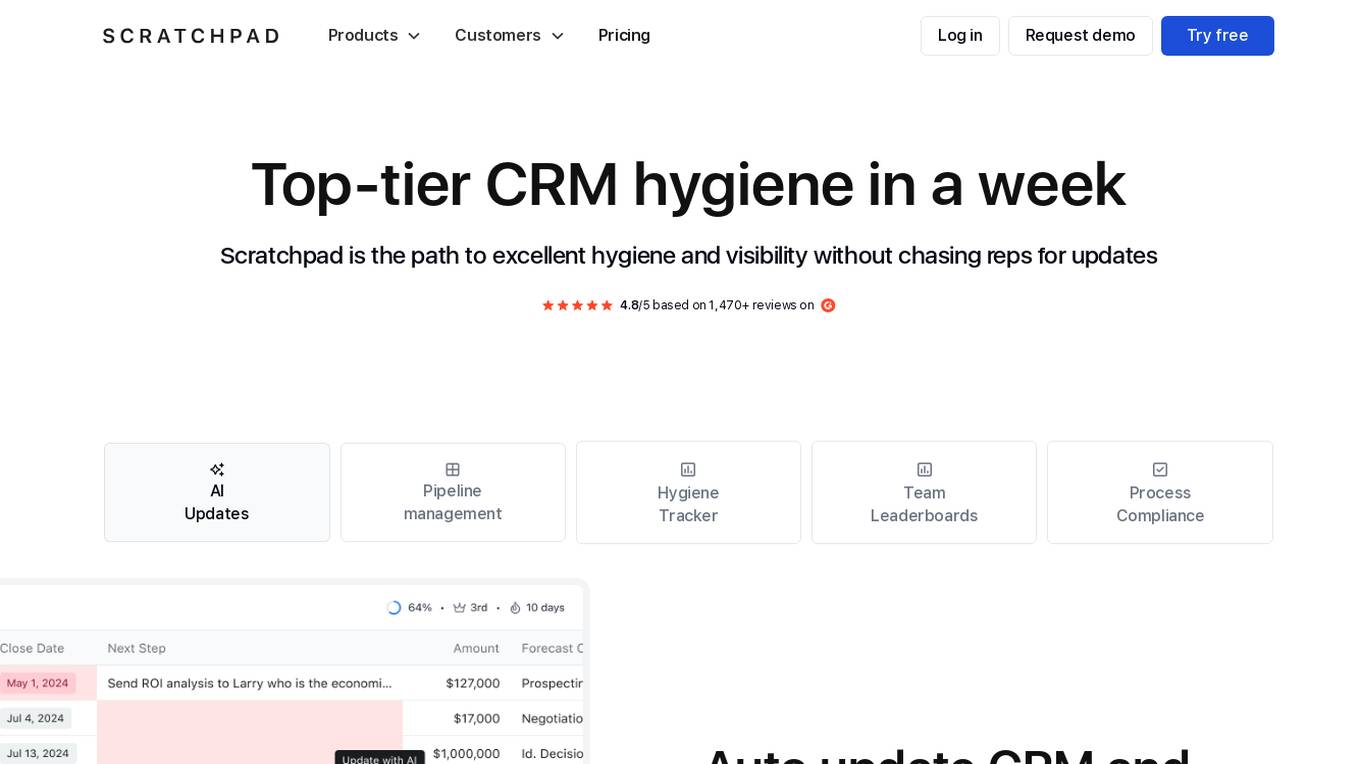

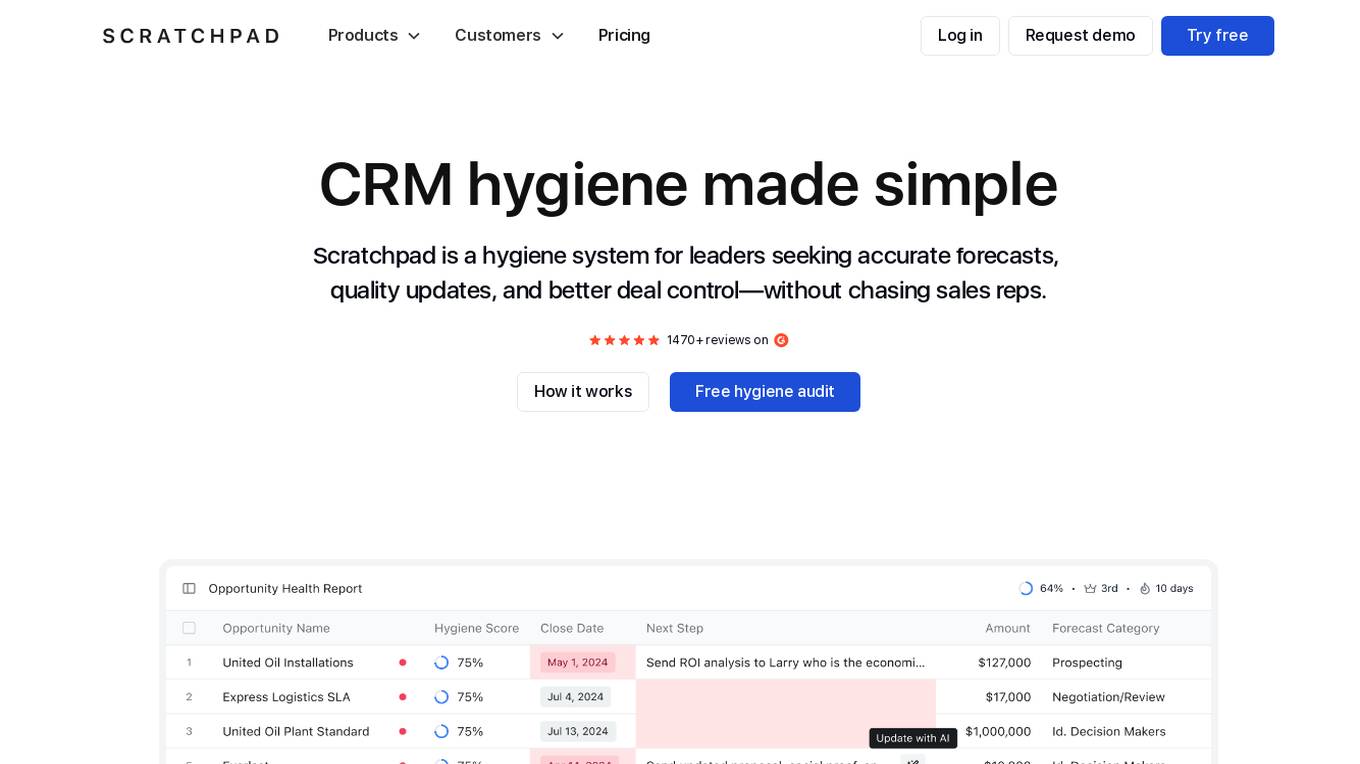

Scratchpad

Scratchpad is an AI-powered workspace designed for sales teams to streamline their sales processes and enhance productivity. It offers features such as AI Sales Assistant, Pipeline Management, Slack Deal Rooms, Automations & Enablement, Deal Inspection, and Sales Forecasting. With Scratchpad, sales teams can automate collaboration, improve Salesforce hygiene, track deal progress, and gain insights into deal movement and pipeline changes. The application aims to simplify sales workflows, provide real-time notes and call summaries, and enhance team collaboration for better performance and efficiency.

Scratchpad

Scratchpad is an AI-powered workspace designed for sales teams to streamline their sales processes and enhance productivity. It offers a range of features such as AI Sales Assistant, Pipeline Management, Deal Rooms, Automations & Enablement, and Deal Inspection. With Scratchpad, sales teams can benefit from real-time notes, call summaries, and transcripts, as well as AI prompts for sales processes. The application aims to simplify sales execution, improve CRM hygiene, and provide better deal control through automation and in-line coaching.

AI Compass for Businesses

The AI Compass for Businesses by BDO Digital is a powerful tool that helps businesses harness the full potential of technology. It provides free reports with recommendations to kickstart the AI journey, enabling companies to leverage AI to increase revenue, reduce expenses, and make better decisions. The tool benchmarks organizations against 15,000 others and offers practical ideas for implementing AI without requiring any contact information. Users can create personalized reports or book AI consultations to further enhance their AI implementation path.

Matrix AI Consulting Services

Matrix AI Consulting Services is an expert AI consultancy firm based in New Zealand, offering bespoke AI consulting services to empower businesses and government entities to embrace responsible AI. With over 24 years of experience in transformative technology, the consultancy provides services ranging from AI business strategy development to seamless integration, change management, training workshops, and governance frameworks. Matrix AI Consulting Services aims to help organizations unlock the full potential of AI, enhance productivity, streamline operations, and gain a competitive edge through the strategic implementation of AI technologies.

SurePath AI

SurePath AI is an AI platform company that governs workforce use of GenAI. It provides solutions for detecting usage, mitigating risks, and controlling enterprise data access. SurePath AI offers a secure path for GenAI adoption by spotting, securing, and streamlining GenAI use. The platform helps prevent data leaks and manage access to public models, private models, and enterprise data. It also provides insights and analytics into user activity, policy enforcement, and potential risks.

Data & Trust Alliance

The Data & Trust Alliance is a group of industry-leading enterprises focusing on the responsible use of data and intelligent systems. They develop practices to enhance trust in data and AI models, ensuring transparency and reliability in deployment processes. The alliance works on projects such as Data Provenance Standards and assessing third-party model trustworthiness to promote innovation and trust in AI applications. They aim to use their members' expertise and influence to produce practical solutions that can be adopted broadly across industries.

Fairo

Fairo is a platform that facilitates Responsible AI Governance, offering tools for reducing AI hallucinations, managing AI agents and assets, evaluating AI systems, and ensuring compliance with various regulations. It provides a comprehensive solution for organizations to align their AI systems ethically and strategically, automate governance processes, and mitigate risks. Fairo aims to make responsible AI transformation accessible to organizations of all sizes, enabling them to build technology that is profitable, ethical, and transformative.

Rebecca Bultsma

Rebecca Bultsma is a trusted and experienced AI educator who aims to make AI simple and ethical for everyday use. She provides resources, speaking engagements, and consulting services to help individuals and organizations understand and integrate AI into their workflows. Rebecca empowers people to work in harmony with AI, leveraging its capabilities to tackle challenges, spark creative ideas, and make a lasting impact. She focuses on making AI easy to understand and promoting ethical adoption strategies.

MetaPals

MetaPals is an AI-powered application that focuses on crafting digital experiences to form genuine emotional bonds with users. It leverages advanced AI technologies and partnerships with institutions like Nanyang Technological University and industry giants like Google AI and Firebase to redefine the way users interact with technology. MetaPals aims to solve the disconnect in the digital age by providing meaningful digital experiences and introducing unique digital companions through collaborations with renowned brands and IPs. The application allows users to adopt digital companions, dive into immersive adventures, and explore the intersection of technology and companionship, setting new standards of gameplay.

Diffblue Cover

Diffblue Cover is an autonomous AI-powered unit test writing tool for Java development teams. It uses next-generation autonomous AI to automate unit testing, freeing up developers to focus on more creative work. Diffblue Cover can write a complete and correct Java unit test every 2 seconds, and it is directly integrated into CI pipelines, unlike AI-powered code suggestions that require developers to check the code for bugs. Diffblue Cover is trusted by the world's leading organizations, including Goldman Sachs, and has been proven to improve quality, lower developer effort, help with code understanding, reduce risk, and increase deployment frequency.

Teraflow.ai

Teraflow.ai is an AI-enablement company that specializes in helping businesses adopt and scale their artificial intelligence models. They offer services in data engineering, ML engineering, AI/UX, and cloud architecture. Teraflow.ai assists clients in fixing data issues, boosting ML model performance, and integrating AI into legacy customer journeys. Their team of experts deploys solutions quickly and efficiently, using modern practices and hyperscaler technology. The company focuses on making AI work by providing fixed-price solutions, building team capabilities, and using agile-scrum structures for innovation. Teraflow.ai also offers certifications in GCP and AWS, and partners with leading tech companies like HashiCorp, AWS, and Microsoft Azure.

AI Readiness Assessment

The AI Readiness Assessment is a comprehensive tool designed to evaluate an organization's readiness to adopt and implement artificial intelligence technologies. It assesses various aspects such as data infrastructure, talent capabilities, organizational culture, and strategic alignment to provide valuable insights and recommendations for successful AI integration. By leveraging advanced algorithms and best practices, the assessment helps businesses identify strengths and areas for improvement in their AI journey.

Zest AI

Zest AI is AI-driven credit underwriting software that offers a complete solution for AI-driven lending. It enables users to build custom machine learning risk models, adopt model assessment and regulatory compliance, and operate performance management and model monitoring. Zest AI helps increase approvals, manage risk, control loss, drive operational efficiency, automate credit decisioning, and improve borrower experience while ensuring fair lending practices. The software is designed to provide fair and transparent credit for everyone, making lending more accessible and inclusive.

Fundraising.AI

Fundraising.AI is an independent collaborative dedicated to promoting the development and use of Responsible and Beneficial Artificial Intelligence for nonprofit fundraising. The platform aims to empower fundraising professionals with the knowledge, tools, and resources to adopt AI technologies responsibly, prioritizing trust, precision, and personalization. Fundraising.AI focuses on providing tools for organizations and AI practitioners to build, buy, and support responsible and trusted AI systems for fundraising purposes.

0 - Open Source AI Tools

20 - OpenAI GPTs

Linda

Personal assistant to Let's Adopt International. Ask me anything about animal rescue, vet sciences, and Let's Adopt.

Mike

In all my interactions, I adopt a rigorously meticulous and thoughtful methodology, ensuring that each response is the product of careful analysis and deliberate consideration.

Rich Habits

Entrepreneurs can get distracted easily and form bad habits. This GPT helps you adopt rich habits and get rich by doing so.

Guess Guru

I play the game 'Guess who I am!' with you. I adopt the identity of a random famous person. Show me you are a true Guess Guru who can discover my new identity using only yes/no questions.

CatGPT

CatGPT makes dark meows and purrs only. I know cat care facts and the secrets of the night.

Adept Online Business Builder

A guide for aspiring online entrepreneurs, offering practical advice on setting up and running a business. Please note: The product is independently developed and not affiliated, endorsed, or sponsored by OpenAI.

Time Converter

Elegantly designed to seamlessly adapt your schedule across multiple time zones.

Your Personal Professional Translator

Translator adept at format-preserved translations and cultural nuances.

AI Entrepreneur

An adept financier overseeing a varied collection of investments, dedicated to recognizing and nurturing innovative business endeavors.