Best AI tools for Vulnerability Researcher


20 - AI tool Sites

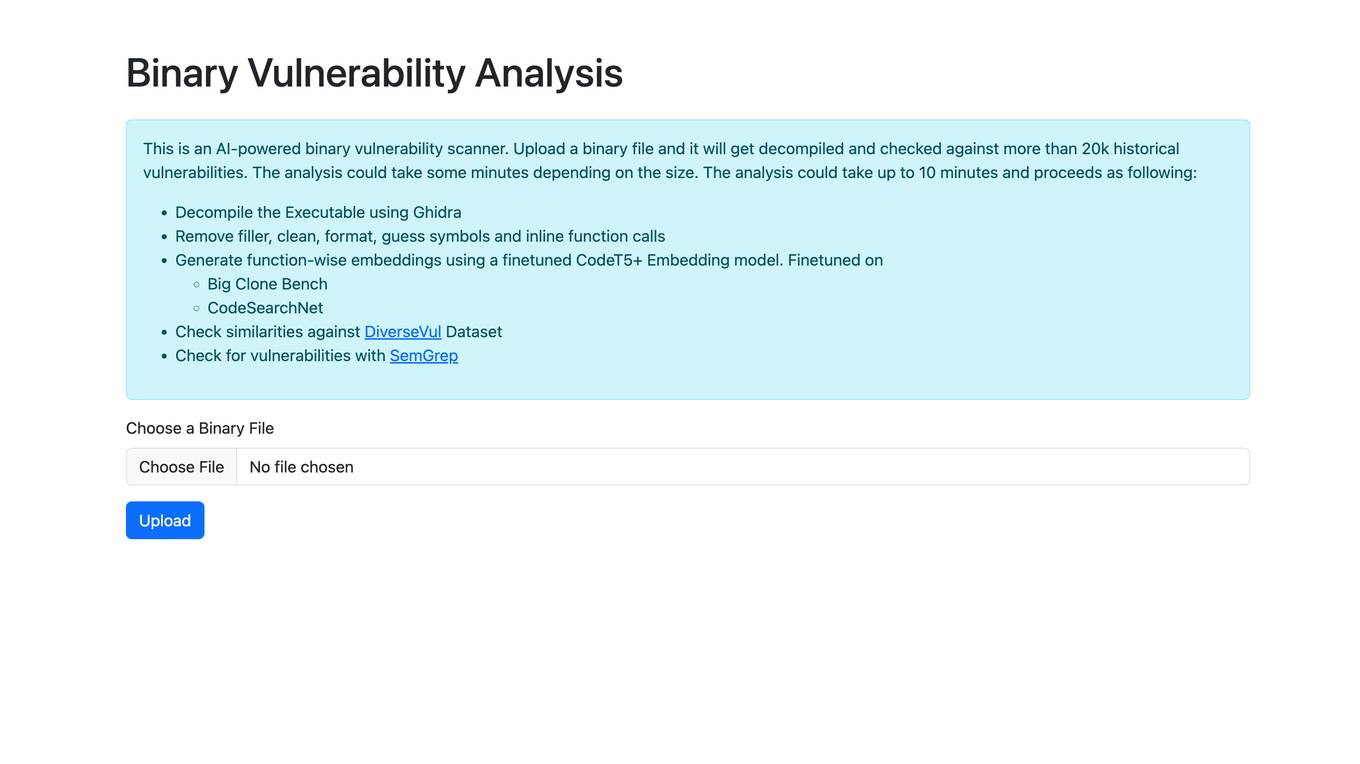

Binary Vulnerability Analysis

The website offers an AI-powered binary vulnerability scanner that lets users upload a binary file for analysis. The tool decompiles the executable, removes filler, cleans and formats the output, and checks it for historical vulnerabilities. It generates function-wise embeddings using a fine-tuned CodeT5+ embedding model and checks them for similarity against the DiverseVul dataset. The tool also uses Semgrep to check the binary file for vulnerabilities.
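The function-wise embedding comparison described above can be pictured with a minimal sketch. It assumes the embeddings are already computed and compares them with cosine similarity; the similarity metric, the `threshold` value, the function names, and the toy vectors are all illustrative assumptions, not details taken from the scanner itself.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def flag_similar_functions(function_embeddings, known_vulnerable, threshold=0.9):
    """Return (function_name, reference_id, score) tuples scoring at or above threshold.

    Both arguments are hypothetical dicts mapping a name to an embedding vector.
    """
    matches = []
    for name, vec in function_embeddings.items():
        for ref_id, ref_vec in known_vulnerable.items():
            score = cosine_similarity(vec, ref_vec)
            if score >= threshold:
                matches.append((name, ref_id, score))
    # Highest-scoring matches first
    return sorted(matches, key=lambda m: m[2], reverse=True)


# Toy 3-dimensional vectors standing in for real model embeddings (assumed data)
functions = {"parse_header": [0.9, 0.1, 0.0], "init_logger": [0.0, 0.2, 0.98]}
reference = {"ref-overflow-001": [1.0, 0.0, 0.0]}

matches = flag_similar_functions(functions, reference, threshold=0.9)
for name, ref_id, score in matches:
    print(f"{name} resembles {ref_id} (score {score:.3f})")
```

In a real pipeline the vectors would come from the embedding model and the reference set would be far larger, so a vector index would likely replace the nested loop.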

OpenBuckets

OpenBuckets is a web application designed to help users find and secure open buckets in cloud storage systems. It provides a simple and efficient way to identify and protect sensitive data that may be exposed due to misconfigured cloud storage settings. With OpenBuckets, users can easily scan their cloud storage accounts for publicly accessible buckets and take necessary actions to safeguard their information.

Huntr

Huntr is the world's first bug bounty platform for AI/ML. It provides a single place for security researchers to submit vulnerabilities, ensuring the security and stability of AI/ML applications, including those powered by Open Source Software (OSS).

Cyble

Cyble is a leading threat intelligence platform offering products and services recognized by top industry analysts. It provides AI-driven cyber threat intelligence solutions for enterprises, governments, and individuals. Cyble's offerings include attack surface management, brand intelligence, dark web monitoring, vulnerability management, takedown and disruption services, third-party risk management, incident management, and more. The platform leverages cutting-edge AI technology to enhance cybersecurity efforts and stay ahead of cyber adversaries.

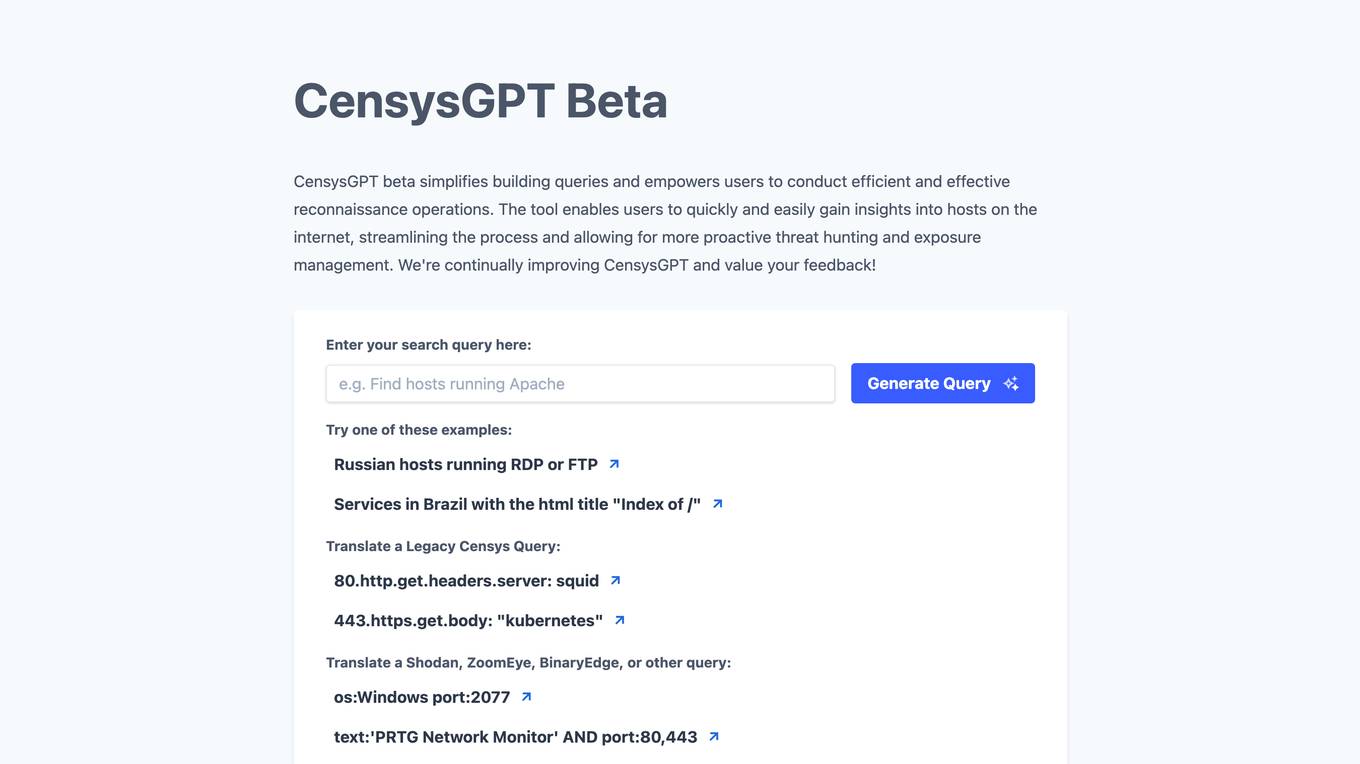

CensysGPT Beta

CensysGPT Beta is a tool that simplifies building queries and empowers users to conduct efficient and effective reconnaissance operations. It enables users to quickly and easily gain insights into hosts on the internet, streamlining the process and allowing for more proactive threat hunting and exposure management.

Protect AI

Protect AI is a comprehensive platform designed to secure AI systems by providing visibility and manageability to detect and mitigate unique AI security threats. The platform empowers organizations to embrace a security-first approach to AI, offering solutions for AI Security Posture Management, ML model security enforcement, AI/ML supply chain vulnerability database, LLM security monitoring, and observability. Protect AI aims to safeguard AI applications and ML systems from potential vulnerabilities, enabling users to build, adopt, and deploy AI models confidently and at scale.

ODIN

ODIN is a powerful internet scanning search engine designed for scanning and cataloging internet assets. It offers enhanced scanning capabilities, faster refresh rates, and comprehensive visibility into open ports. With over 45 modules covering various aspects like HTTP, Elasticsearch, and Redis, ODIN enriches data and provides accurate and up-to-date information. The application uses AI/ML algorithms to detect exposed buckets, files, and potential vulnerabilities. Users can perform granular searches, access exploit information, and integrate effortlessly with ODIN's API, SDKs, and CLI. ODIN allows users to search for hosts, exposed buckets, exposed files, and subdomains, providing detailed insights and supporting diverse threat intelligence applications.

SMshrimant

SMshrimant is a personal website belonging to a Bug Bounty Hunter, Security Researcher, Penetration Tester, and Ethical Hacker. The website showcases the creator's skills and experiences in the field of cybersecurity, including bug hunting, security vulnerability reporting, open-source tool development, and participation in Capture The Flag competitions. Visitors can learn about the creator's projects, achievements, and contact information for inquiries or collaborations.

Glog

Glog is an AI application focused on making software more secure by providing remediation advice for security vulnerabilities in software code based on context. It is capable of automatically fixing vulnerabilities, thus reducing security risks and protecting against cyber attacks. The platform utilizes machine learning and AI to enhance software security and agility, ensuring system reliability, integrity, and safety.

Giskard

Giskard is an automated Red Teaming platform designed to prevent security vulnerabilities and business compliance failures in AI agents. It offers advanced features for detecting AI vulnerabilities, proactive monitoring, and aligning AI testing with real business requirements. The platform integrates with observability stacks, provides enterprise-grade security, and ensures data protection. Giskard is trusted by enterprise AI teams and has been used to detect over 280,000 AI vulnerabilities.

NodeZero™ Platform

Horizon3.ai Solutions offers the NodeZero™ Platform, an AI-powered autonomous penetration testing tool designed to enhance cybersecurity measures. The platform combines expert human analysis by Offensive Security Certified Professionals with automated testing capabilities to streamline compliance processes and proactively identify vulnerabilities. NodeZero empowers organizations to continuously assess their security posture, prioritize fixes, and verify the effectiveness of remediation efforts. With features like internal and external pentesting, rapid response capabilities, AD password audits, phishing impact testing, and attack research, NodeZero is a comprehensive solution for large organizations, ITOps, SecOps, security teams, pentesters, and MSSPs. The platform provides real-time reporting, integrates with existing security tools, reduces operational costs, and helps organizations make data-driven security decisions.

AI QA Monkey

AI QA Monkey is a free website security scanner that offers instant security audits to check website security scores. The tool scans for vulnerabilities such as leaked sensitive data, open ports, passwords, .env files, and WordPress vulnerabilities. It provides a detailed security report with actionable insights and AI-powered fixes. Users can export reports in PDF, JSON, or CSV formats. AI QA Monkey is designed to help businesses improve their security posture and comply with GDPR regulations.

AquilaX

AquilaX is an AI-powered DevSecOps platform that simplifies security and accelerates development processes. It offers a comprehensive suite of security scanning tools, including secret identification, PII scanning, SAST, container scanning, and more. AquilaX is designed to integrate seamlessly into the development workflow, providing fast and accurate results by leveraging AI models trained on extensive datasets. The platform prioritizes developer experience by eliminating noise and false positives, making it a go-to choice for modern Secure-SDLC teams worldwide.

Kindo

Kindo is an AI-powered platform designed for DevSecOps teams to automate tasks, write doctrine, and orchestrate infrastructure responses. It offers AI-powered Runbook automations to streamline workflows, automate tedious tasks, and enhance security controls. Kindo enables users to offload time-consuming tasks to AI Agents, prioritize critical tasks, and monitor AI-related activities for compliance and informed decision-making. The platform provides a comprehensive vantage point for modern infrastructure defense and instrumentation, allowing users to create repeatable processes, automate vulnerability assessment and remediation, and secure multi-cloud IAM configurations.

Snyk

Snyk is a developer security platform powered by DeepCode AI, offering solutions for application security, software supply chain security, and secure AI-generated code. It provides comprehensive vulnerability data, license compliance management, and self-service security education. Snyk integrates AI models trained on security-specific data to secure applications and manage tech debt effectively. The platform ensures developer-first security with one-click security fixes and AI-powered recommendations, enhancing productivity while maintaining security standards.

Qwiet AI

Qwiet AI is a code vulnerability detection platform that accelerates secure coding by uncovering, prioritizing, and generating fixes for top vulnerabilities with a single scan. It offers features such as AI-enhanced SAST, contextual SCA, AI AutoFix, Container Security, SBOM, and Secrets detection. Qwiet AI helps InfoSec teams in companies to accurately pinpoint and autofix risks in their code, reducing false positives and remediation time. The platform provides a unified vulnerability dashboard, prioritizes risks, and offers tailored fix suggestions based on the full context of the code.

CloudDefense.AI

CloudDefense.AI is an industry-leading multi-layered Cloud Native Application Protection Platform (CNAPP) that safeguards cloud infrastructure and cloud-native apps with expertise, precision, and confidence. It offers comprehensive cloud security solutions, vulnerability management, compliance, and application security testing. The platform utilizes advanced AI technology to proactively detect and analyze real-time threats, ensuring robust protection for businesses against cyber threats.

DepsHub

DepsHub is an AI-powered tool designed to simplify dependency updates for software development teams. It offers automatic dependency updates, license checks, and security vulnerability scanning to ensure teams stay secure and up-to-date. With noise-free dependency management, cross-repository overview, license compliance, and security alerts, DepsHub streamlines the process of managing dependencies for teams of any size. The AI-powered engine analyzes library changelogs, release notes, and codebases to automatically update dependencies, including handling breaking changes. DepsHub supports a wide range of languages and frameworks, making it easy for teams to integrate with their favorite technologies and save time by focusing on writing code that matters.

SANS AI Cybersecurity Hackathon

SANS AI Cybersecurity Hackathon is a global virtual competition that challenges participants to design and build AI-driven solutions to secure systems, protect data, and counter emerging cyber threats. The hackathon offers a platform for cybersecurity professionals and students to showcase their creativity and technical expertise, connect with a global community, and make a real-world impact through AI innovation. Participants are required to create open-source solutions addressing pressing cybersecurity challenges by integrating AI, with a focus on areas like threat detection, incident response, vulnerability scanning, security dashboards, digital forensics, and more.

BigBear.ai

BigBear.ai is an AI-powered decision intelligence solutions provider serving industries including Government & Defense, Manufacturing & Warehouse Operations, and Healthcare & Life Sciences. It specializes in optimizing operational efficiency, force deployment, supply chain management, autonomous systems management, and vulnerability detection. Its solutions are designed to improve situational awareness, streamline production processes, and enhance patient care delivery.

2 - Open Source Tools

SinkFinder

SinkFinder + LLM is a semi-automatic vulnerability discovery tool for closed-source systems that performs static code analysis on jar/war/zip files. It uses LLM capabilities to verify path reachability and to assess a trustworthiness score for each path based on the contextual code environment. Users can customize class and jar exclusions, the depth of the recursive search, and other parameters through command-line arguments. The tool generates a rule.json configuration file after each run and requires the DASHSCOPE_API_KEY to be configured for LLM capabilities. It provides detailed logs on high-risk paths, LLM results, and other findings. The Rules.json file contains sink rules for various vulnerability types, with severity levels and corresponding sink methods.
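As a rough illustration of the depth-limited sink search with exclusions described above, the following sketch walks a call graph from an entry method to any configured sink. It is not SinkFinder's implementation: the call graph, the method names, the `find_sink_paths` helper, and the shape of the sink rules are hypothetical and chosen only to show the idea.

```python
def find_sink_paths(call_graph, entry, sinks, max_depth=5, excluded=()):
    """Depth-limited search for call paths from `entry` to any method in `sinks`.

    call_graph: dict mapping a method name to the methods it calls (assumed shape)
    sinks:      dict mapping a sink method name to a severity label (assumed shape)
    excluded:   name prefixes (classes or packages) that are never traversed
    """
    paths = []

    def walk(node, path):
        if node in sinks:
            paths.append(path)
            return
        # len(path) - 1 is the number of calls followed so far
        if len(path) - 1 >= max_depth:
            return
        for callee in call_graph.get(node, ()):
            if callee in path:
                continue  # avoid cycles
            if any(callee.startswith(prefix) for prefix in excluded):
                continue  # honor class/package exclusions
            walk(callee, path + [callee])

    walk(entry, [entry])
    return paths


# Hypothetical call graph and sink rules, for illustration only
call_graph = {
    "UploadController.handle": ["FileService.save", "org.apache.log.Logger.info"],
    "FileService.save": ["java.lang.Runtime.exec"],
    "org.apache.log.Logger.info": ["java.lang.Runtime.exec"],
}
sinks = {"java.lang.Runtime.exec": "HIGH"}

paths = find_sink_paths(
    call_graph,
    "UploadController.handle",
    sinks,
    max_depth=3,
    excluded=("org.apache.log.",),
)
for path in paths:
    print(" -> ".join(path), f"[{sinks[path[-1]]}]")
```

Each path found this way would then be a candidate for the LLM-based reachability check; lowering `max_depth` trades coverage for speed.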

finite-monkey-engine

FiniteMonkey is an advanced vulnerability mining engine powered purely by GPT, requiring no prior knowledge base or fine-tuning. Its effectiveness significantly surpasses most current related research approaches. The tool is task-driven and prompt-driven, focusing on prompt design and leveraging 'deception' and hallucination as key mechanics. It has helped identify vulnerabilities worth over $60,000 in bounties. Setup requires a PostgreSQL database, OpenAI API access, and a Python environment. It supports scanning languages such as Solidity, Rust, Python, Move, Cairo, Tact, Func, Java, and Fake Solidity. FiniteMonkey is best suited for logic vulnerability mining in real projects and is not recommended for academic vulnerability testing. GPT-4-turbo is recommended for optimal results, with an average scan time of 2-3 hours for medium projects. The tool provides a detailed guide to scanning results and implementation tips for users.

20 - OpenAI GPTs

NVD - CVE Research Assistant

Expert in CVEs and cybersecurity vulnerabilities, providing precise information from the National Vulnerability Database.

Solidity Sage

Your personal Ethereum magician — Simply ask a question or provide a code sample for insights into vulnerabilities, gas optimizations, and best practices. Don't be shy to ask about tooling and legendary attacks.

Phoenix Vulnerability Intelligence GPT

Expert in analyzing vulnerabilities with a ransomware focus, with intelligence powered by Phoenix Security.

WVA

Web Vulnerability Academy (WVA) is an interactive tutor designed to introduce users to web vulnerabilities while also providing them with opportunities to assess and enhance their knowledge through testing.

VulnGPT

Your ally in navigating the CVE deluge. Expert insights for prioritizing and remediating vulnerabilities.

Security Testing Advisor

Ensures software security through comprehensive testing techniques.

🛡️ CodeGuardian Pro+ 🛡️

Your AI-powered sentinel for code! Scans for vulnerabilities, offers security tips, and educates on best practices in cybersecurity. 🔍🔐