Best AI tools for Safety Methodology Analyst

20 - AI Tool Sites

Plus

Plus is an AI-based autonomous driving software company that focuses on developing solutions for driver assist and autonomous driving technologies. The company offers a suite of autonomous driving solutions designed for integration with various hardware platforms and vehicle types, ranging from perception software to highly automated driving systems. Plus aims to transform the transportation industry by providing high-performance, safe, and affordable autonomous driving vehicles at scale.

European Agency for Safety and Health at Work

The European Agency for Safety and Health at Work (EU-OSHA) is an EU agency that provides information, statistics, legislation, and risk assessment tools on occupational safety and health (OSH). The agency's mission is to make Europe's workplaces safer, healthier, and more productive.

Voxel's Safety Intelligence Platform

Voxel's Safety Intelligence Platform is an AI-driven site intelligence platform that empowers safety and operations leaders to make strategic decisions. It provides real-time visibility into critical safety practices, offers custom insights through on-demand dashboards, facilitates risk management with collaborative tools, and promotes a sustainable safety culture. The platform helps enterprises reduce risks, increase efficiency, and enhance workforce safety through innovative AI technology.

Center for AI Safety (CAIS)

The Center for AI Safety (CAIS) is a research and field-building nonprofit based in San Francisco. Their mission is to reduce societal-scale risks associated with artificial intelligence (AI) by conducting impactful research, building the field of AI safety researchers, and advocating for safety standards. They offer resources such as a compute cluster for AI/ML safety projects, a blog with in-depth examinations of AI safety topics, and a newsletter providing updates on AI safety developments. CAIS focuses on technical and conceptual research to address the risks posed by advanced AI systems.

Center for AI Safety (CAIS)

The Center for AI Safety (CAIS) is a research and field-building nonprofit organization based in San Francisco. They conduct research and advocacy projects and provide resources to reduce societal-scale risks associated with artificial intelligence (AI). CAIS focuses on technical AI safety research and field-building projects, and offers a compute cluster for AI/ML safety projects. They aim to develop and use AI safely to benefit society, addressing inherent risks and advocating for safety standards.

AI Safety Initiative

The AI Safety Initiative is a premier coalition of trusted experts that aims to develop and deliver essential AI guidance and tools for organizations to deploy safe, responsible, and compliant AI solutions. Through vendor-neutral research, training programs, and global industry experts, the initiative provides authoritative AI best practices and tools. It offers certifications, training, and resources to help organizations navigate the complexities of AI governance, compliance, and security. The initiative focuses on AI technology, risk, governance, compliance, controls, and organizational responsibilities.

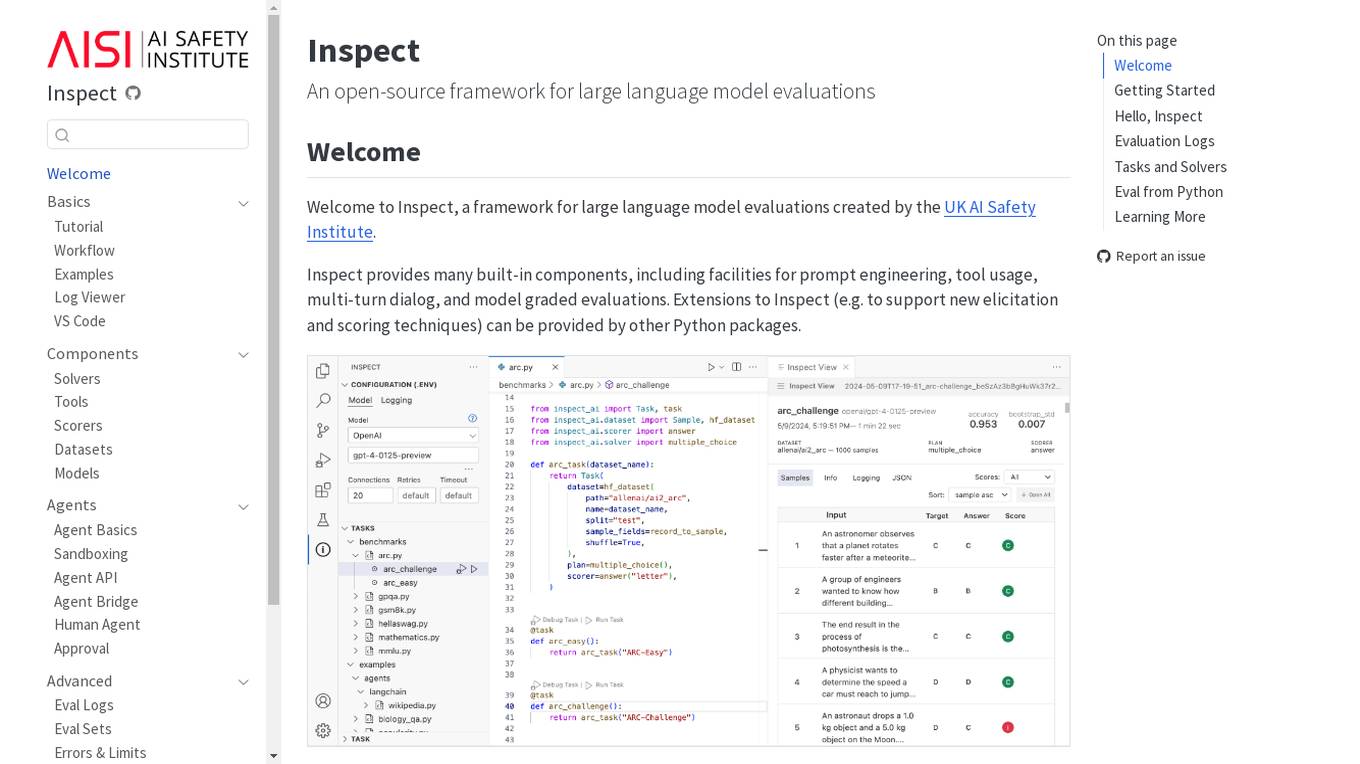

Inspect

Inspect is an open-source framework for large language model evaluations created by the UK AI Safety Institute. It provides built-in components for prompt engineering, tool usage, multi-turn dialog, and model graded evaluations. Users can explore various solvers, tools, scorers, datasets, and models to create advanced evaluations. Inspect supports extensions for new elicitation and scoring techniques through Python packages.

viAct.ai

viAct.ai is an AI monitoring tool that redefines workplace safety by combining automated AI monitoring with real-time alerts. The tool helps safety leaders prevent risks sooner, streamline compliance, and protect what matters most in industries such as construction, oil & gas, mining, and manufacturing. viAct.ai offers proprietary scenario-based Vision AI, a privacy-by-design approach, and plug & play integration, and is trusted by industry leaders for its award-winning workplace safety solutions.

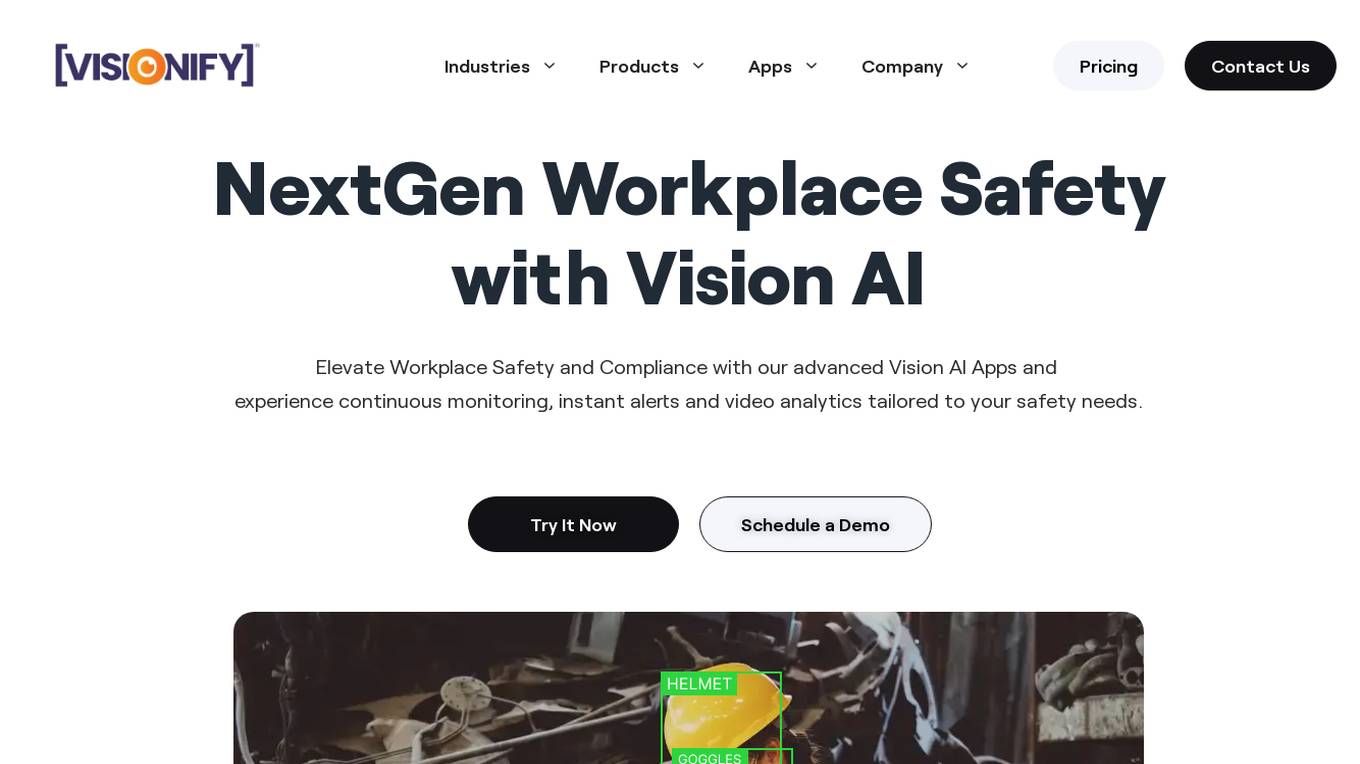

Visionify.ai

Visionify.ai is an advanced Vision AI application designed to enhance workplace safety and compliance through AI-driven surveillance. The platform offers over 60 Vision AI scenarios for hazard warnings, worker health, compliance policies, environment monitoring, vehicle monitoring, and suspicious activity detection. Visionify.ai empowers EHS professionals with continuous monitoring, real-time alerts, proactive hazard identification, and privacy-focused data security measures. The application transforms ordinary cameras into vigilant protectors, providing instant alerts and video analytics tailored to safety needs.

SWMS AI

SWMS AI is an AI-powered safety risk assessment tool that helps businesses streamline compliance and improve safety. It leverages a vast knowledge base of occupational safety resources, codes of practice, risk assessments, and safety documents to generate risk assessments tailored specifically to a project, trade, and industry. SWMS AI can be customized to a company's policies to align its AI's document generation capabilities with proprietary safety standards and requirements.

Kami Home

Kami Home is an AI-powered security application that provides effortless safety and security for homes. It offers smart alerts, secure cloud video storage, and a Pro Security Alarm system with 24/7 emergency response. The application uses AI-vision to detect humans, vehicles, and animals, ensuring that users receive custom alerts for relevant activities. With features like Fall Detect for seniors living at home, Kami Home aims to protect families and provide peace of mind through advanced technology.

Turing AI

Turing AI is a cloud-based video security system powered by artificial intelligence. It offers a range of AI-powered video surveillance products and solutions to enhance safety, security, and operations. The platform provides smart video search capabilities, real-time alerts, instant video sharing, and hardware offerings compatible with various cameras. With flexible licensing options and integration with third-party devices, Turing AI is trusted by customers across industries for its robust and innovative approach to cloud video security.

Frontier Model Forum

The Frontier Model Forum (FMF) is a collaborative effort among leading AI companies to advance AI safety and responsibility. The FMF brings together technical and operational expertise to identify best practices, conduct research, and support the development of AI applications that meet society's most pressing needs. The FMF's core objectives include advancing AI safety research, identifying best practices, collaborating across sectors, and helping AI meet society's greatest challenges.

SEA.AI

SEA.AI is an AI tool that provides Machine Vision for Safety at Sea. It utilizes the latest camera technology combined with artificial intelligence to detect and classify objects on the surface of the water, including unsignalled craft, floating obstacles, buoys, kayaks, and persons overboard. The application offers various solutions for sailing, commercial, motor, maritime surveillance, search & rescue, and government sectors. SEA.AI aims to enhance safety and convenience for sailors by leveraging AI technology for early detection of potential hazards at sea.

Recognito

Recognito is a leading facial recognition technology provider, offering the NIST FRVT Top 1 Face Recognition Algorithm. Their high-performance biometric technology is used by police forces and security services to enhance public safety, manage individual movements, and improve audience analytics for businesses. Recognito's software goes beyond object detection to provide detailed user role descriptions and develop user flows. The application enables rapid face and body attribute recognition, video analytics, and artificial intelligence analysis. With a focus on security, living, and business improvements, Recognito helps create safer and more prosperous cities.

DisplayGateGuard

DisplayGateGuard is an AI-powered brand safety and suitability provider that helps advertisers choose the right placements, isolate fraudulent websites, and enhance brand safety. By leveraging artificial intelligence, the platform offers curated inclusion and exclusion lists to provide deeper insights into the environments and contexts where ads are shown, ensuring campaigns reach the right audience effectively.

EdgeDX

EdgeDX is a leading provider of Edge AI Video Analysis Solutions, specializing in security and surveillance, construction and logistics safety, efficient store management, public safety management, and intelligent transportation systems. The application offers over 50 intuitive AI apps capable of advanced human behavior analysis, supports various protocols and VMS, and provides features such as a P2P-based mobile alarm viewer, LTE & GPS support, and internal recording to an M.2 NVMe SSD. EdgeDX aims to protect customer assets, ensure safety, and enable seamless integration with AI Bridge for easy and efficient implementation.

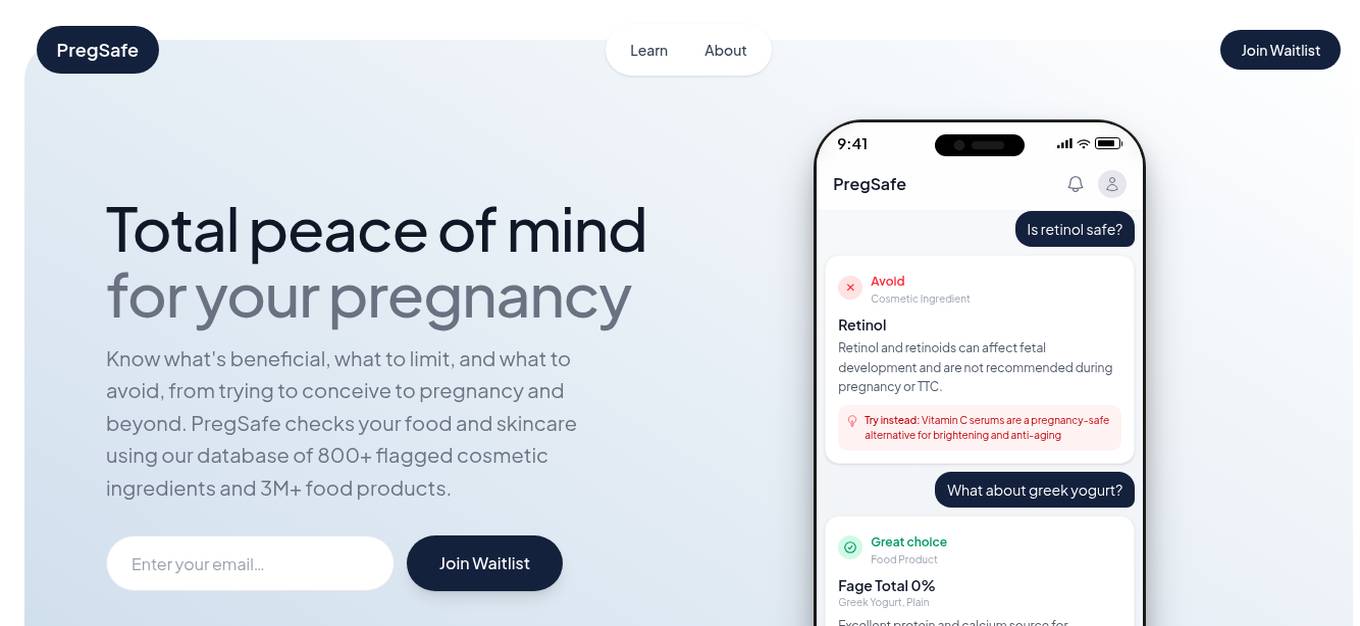

PregSafe

PregSafe is a pregnancy safety companion that empowers users to make informed decisions about the safety of food and skincare products during pregnancy and fertility. It offers a database of 800+ flagged cosmetic ingredients and 3M+ food products to help users identify what's beneficial, what to limit, and what to avoid. PregSafe is the world's first AI assistant for pregnancy and fertility safety, providing science-backed insights and practical guidance to reduce exposure to harmful ingredients and support long-term health for both mother and baby.

Anthropic

Anthropic is an AI safety and research company based in San Francisco. Its interdisciplinary team has experience across ML, physics, policy, and product. Together, they generate research and create reliable, beneficial AI systems.

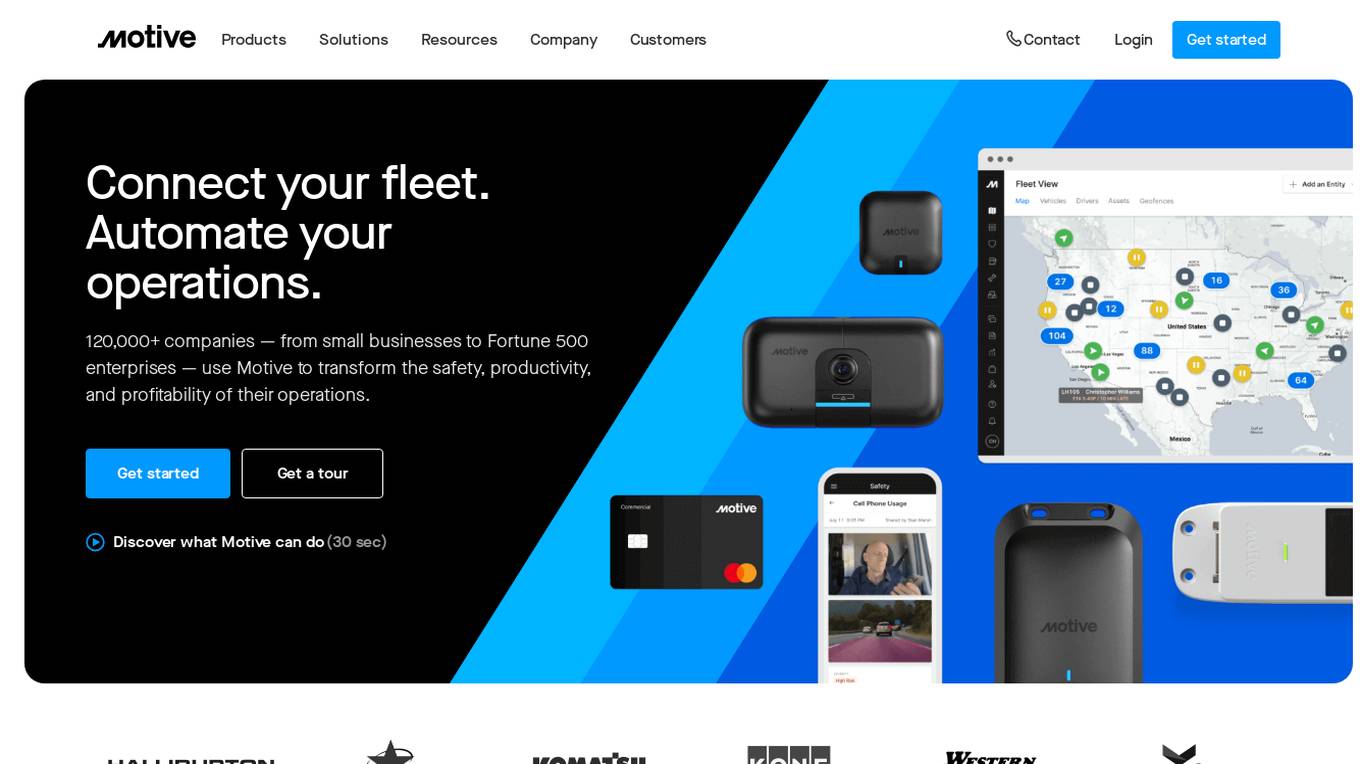

Motive

Motive is an all-in-one fleet management platform that provides businesses with a variety of tools to help them improve safety, efficiency, and profitability. Motive's platform includes features such as AI-powered dashcams, ELD compliance, GPS fleet tracking, equipment monitoring, and fleet card management. Motive's platform is used by over 120,000 companies, including small businesses and Fortune 500 enterprises.

0 - Open Source Tools

20 - OpenAI GPTs

Canadian Film Industry Safety Expert

Film studio safety expert guiding on regulations and practices

The Building Safety Act Bot (Beta)

Simplifying the BSA for your project. Created by www.arka.works

Brand Safety Audit

Get a detailed risk analysis for public relations, marketing, and internal communications, identifying challenges and negative impacts to refine your messaging strategy.

GPT Safety Liaison

A liaison GPT for AI safety emergencies, connecting users to OpenAI experts.

Travel Safety Advisor

Up-to-date travel safety advisor using web data, avoids subjective advice.

香港地盤安全佬 HK Construction Site Safety Advisor

Upload a site photo to assess potential hazards and seek advice from an experienced AI Safety Officer.

Emergency Training

Provides emergency training assistance with a focus on safety and clear guidelines.

Dog Safe: Can My Dog Eat This?

Your expert guide to dog safety. Find out what's safe for dogs to eat. You may be surprised!