Best AI tools for Remote Work

20 - AI tool Sites

workverse.space

The website workverse.space appears to be serving a privacy error tied to its security certificate. The certificate is issued for *.up.railway.app rather than workverse.space, which triggers a certificate common-name-invalid error: the browser warns that the connection may not be private and that attackers could steal information. Users can proceed to workverse.space only at their own risk, as the site's security cannot be verified. The page also includes information about certificate transparency, security enhancements, and privacy policies.

Capacitor

Capacitor is an AI-powered remote working system designed to optimize workforce performance. It is an all-in-one collaboration platform for remote teams, offering features such as time tracking, A.I. assistance, capacity planning, payroll system, project management, and HR system. With Capacitor, organizations can visualize productivity, make better executive decisions, and manage tasks, time-burn, and resources efficiently.

JobsRemote.ai

JobsRemote.ai is a free artificial intelligence-based platform that offers a curated selection of remote job-friendly tools to enhance the remote work experience. Users can browse thousands of handpicked tools suitable for remote jobs, ensuring high-quality recommendations without ads, scams, or junk listings. The platform focuses on providing legitimate and suitable job listings from top companies worldwide, streamlining the application process for job seekers. With a user-friendly interface and personalized recommendations, JobsRemote.ai aims to connect remote professionals with quality remote job opportunities efficiently.

TubePro

TubePro is a digital platform designed to provide tools and insights for remote workers, freelancers, and digital professionals. It offers a range of resources to enhance productivity, communication, and digital skills. TubePro aims to support individuals in optimizing their work-from-home experience by offering guides, tutorials, and reviews of various apps and software. Stay connected with the latest trends and tools in the digital workspace through TubePro.

Reworked

Reworked is a leading online community for professionals in the fields of employee experience, digital workplace, and talent management. It provides news, research, and events on the latest trends and best practices in these areas. Reworked also offers a variety of resources for members, including a podcast, awards program, and research library.

HowsThisGoing

HowsThisGoing is an AI-powered application designed to streamline team communication and productivity by enabling users to set up standups in Slack within seconds. The platform offers features such as automatic standups, AI summaries, custom tests, analytics & reporting, and workflow scheduling. Users can easily create workflows, generate AI reports, and track team performance efficiently. HowsThisGoing provides unlimited benefits at a flat price, making it a cost-effective solution for teams of all sizes.

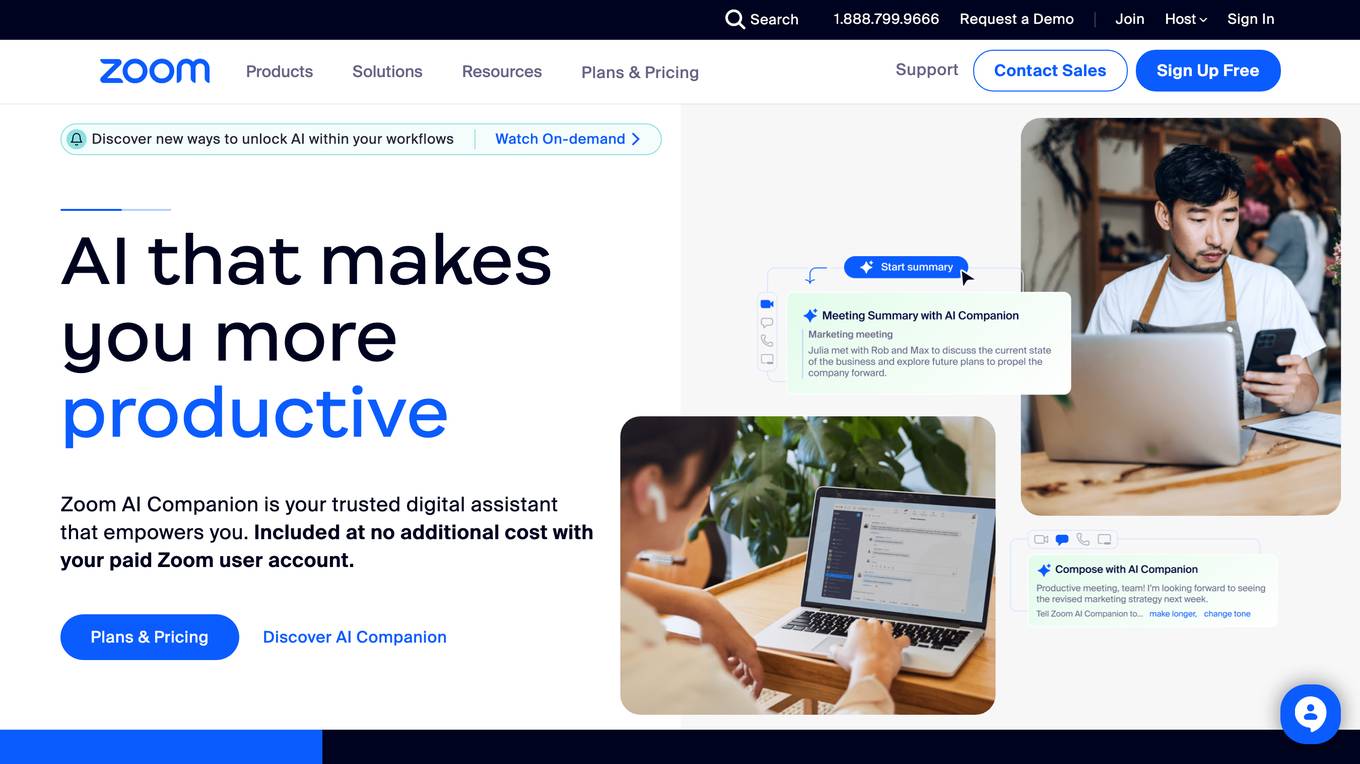

Zoom

Zoom is a cloud-based video conferencing service that allows users to virtually connect with others for meetings, webinars, and other events. It offers a range of features such as video and audio conferencing, screen sharing, chat, and recording. Zoom also provides additional tools for collaboration, such as a whiteboard, breakout rooms, and polling. The platform is designed to be user-friendly and accessible from various devices, including computers, smartphones, and tablets.

Allwork.Space

Allwork.Space is an online platform that provides resources and information on the future of work. It covers topics such as artificial intelligence, remote work, coworking, and workplace design. The website also features a marketplace where users can find office space and remote jobs.

TripOffice.com

TripOffice.com is a travel website that offers workation options for travelers looking to work comfortably from anywhere. With over 50,000 hotels and apartments in 120+ countries, users can find the best workation accommodations with workspaces. The website provides a user-friendly platform to search and book accommodations for workation trips, catering to digital nomads, remote workers, and business travelers.

Naft AI

Naft AI is an upcoming AI tool designed to facilitate remote-first/hybrid work environments by providing scalable coordination solutions. The tool focuses on enhancing the onboarding experience for customers, aiming to streamline processes and improve efficiency in a remote work setting.

Spot

Spot is a virtual office platform designed to bring remote teams together in a more engaging and interactive way. It combines the features of popular communication tools like Slack and Zoom with elements that mimic the in-person office experience, such as spontaneous interactions, shared spaces for collaboration, and virtual celebrations. Spot aims to enhance team connectivity, productivity, and overall work experience by providing a platform that bridges the gap between remote work and traditional office dynamics.

FlexOS

FlexOS is a media brand that provides interviews, analysis, and buying guides to help business leaders stay ahead in the future of work. It covers topics such as leadership, artificial intelligence, remote work, hybrid work, human resources, collaboration, communication, engagement, productivity, and happiness at work.

Gushwork

Gushwork is an AI-assisted SEO tool designed to help businesses scale their online presence by combining human expertise with AI technology. The platform offers services such as AI-powered keyword and topic mapping, real human marketers to vet content, and automated organic presence building. Gushwork aims to increase website traffic, organic sales, and overall online visibility through data-driven strategies and technical SEO optimizations. The tool is purpose-built for consumer brands, SaaS, B2B services, and local consumer services, with a focus on integration and partnerships for seamless operations.

Zoom

Zoom is a popular video conferencing platform that allows users to host virtual meetings, webinars, and online events. It provides a seamless way for individuals and businesses to connect remotely, collaborate effectively, and communicate in real-time. With features like screen sharing, chat functionality, and recording options, Zoom has become a go-to solution for remote work, distance learning, and social gatherings. The platform prioritizes user experience, security, and reliability, making it a versatile tool for various communication needs.

Leapmax

Leapmax is workforce analytics software designed to enhance operational efficiency by improving employee productivity, ensuring data security, facilitating communication and collaboration, and managing compliance. The application offers features such as productivity management, data security, remote team collaboration, reporting management, and network health monitoring. Its advantages include AI-based user detection, real-time activity tracking, remote co-browsing, a collaboration suite, and actionable analytics. Its drawbacks include a reliance on employee monitoring, potential privacy concerns, and a dependency on internet connectivity. The application is commonly used by contact centers, outsourcers, enterprises, and back offices for tasks such as productivity monitoring, app usage tracking, communication and collaboration, compliance management, and remote workforce monitoring.

Verificient

Verificient Technologies Inc specializes in biometrics, computer vision, and machine learning to deliver solutions for continuous identity verification and remote monitoring. Its flagship product, Proctortrack, is an identity verification and automated digital remote proctoring solution that helps institutions of higher education ensure the integrity of their high-stakes online assessments.

Personify

Personify is a virtual camera platform that allows users to create and use avatars in video meetings. The platform offers a variety of features, including the ability to create custom avatars, import avatars from other platforms, and use a variety of backgrounds and effects. Personify is compatible with all major video conferencing software, including Zoom, Microsoft Teams, and Google Meet.

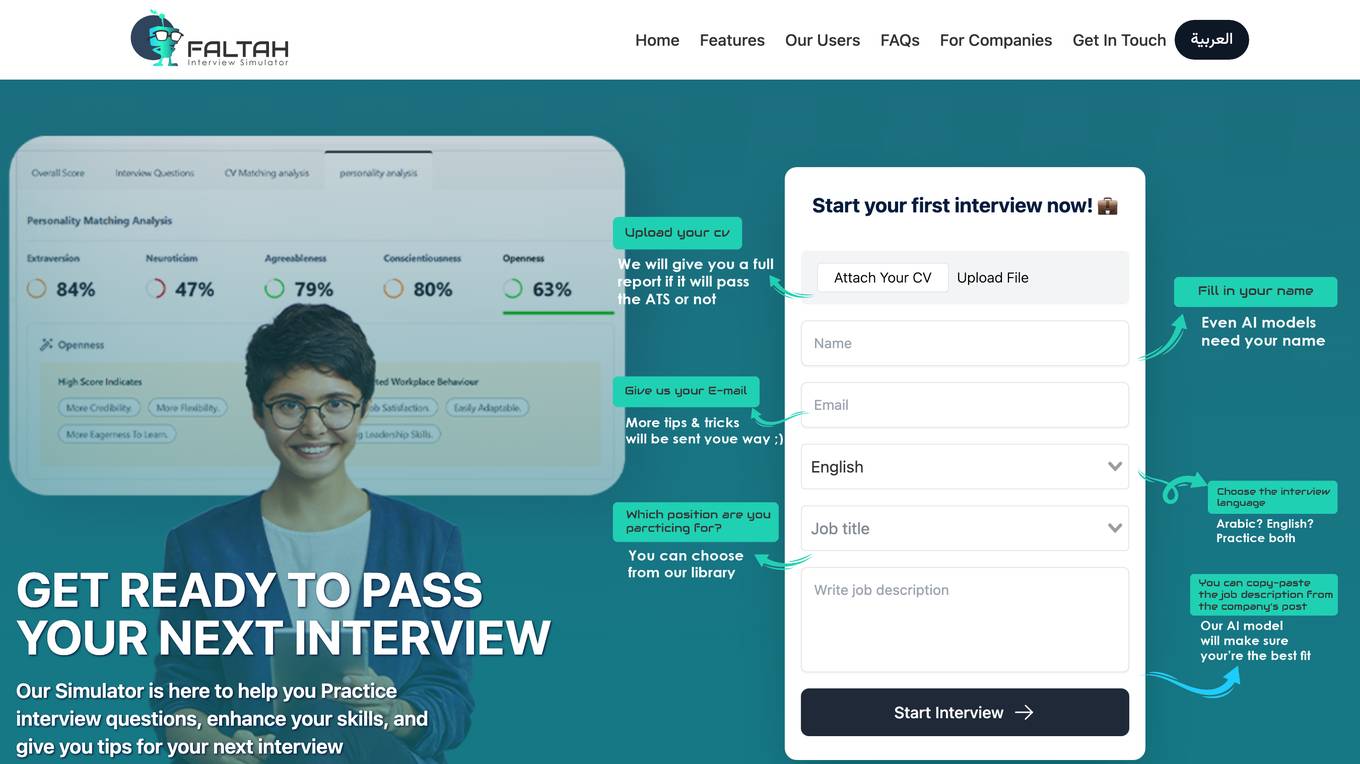

FALTAH

FALTAH is an AI-powered Interview Simulation tool designed to help users practice interview questions, enhance their skills, and receive tips for their next interview. The tool utilizes artificial intelligence to provide personalized feedback, performance reports, personality analysis, and CV parsing. It aims to assist students, job seekers, and remote workers in improving their interview skills and increasing their job prospects.

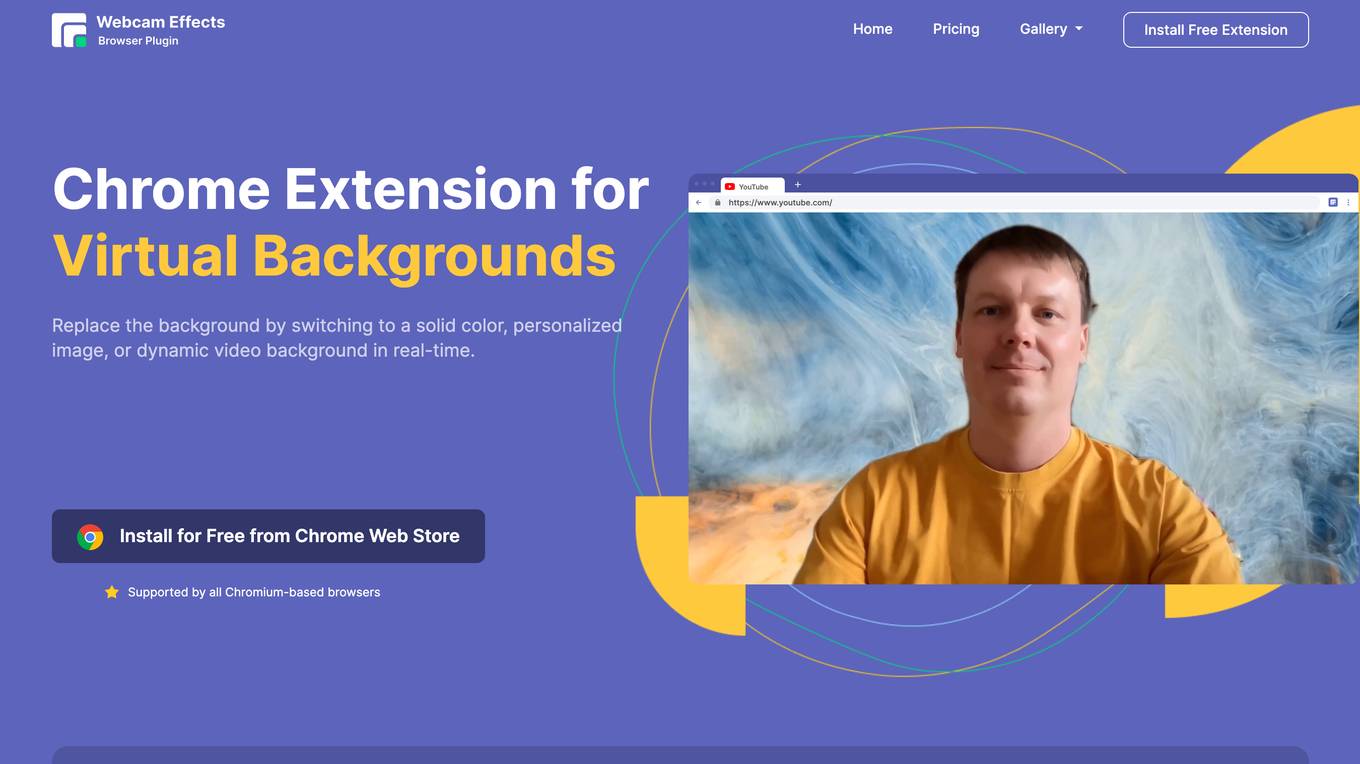

Webcam Effects Chrome Plugin

Webcam Effects Chrome Plugin is an AI-powered tool that offers a range of features to enhance online video conversations. It allows users to replace or blur the webcam background and to record a single source or the whole tab in the browser for any browser-based video streaming. The plugin supports features like background blur, virtual backgrounds, smart zoom, emoji, and Giphy integration. It aims to provide users with a professional and engaging video call experience by leveraging advanced AI technology directly within the browser.

0 - Open Source Tools

20 - OpenAI GPTs

Remote Buddy Bot

Remote job expert aiding in job search, interview prep, and remote work advice.

Co-WorkingGPT

Find co-working spaces worldwide, and get remote work advice and digital nomad hacks.

Hybrid Workplace Navigator

Advises organizations on optimizing hybrid work models, blending remote and in-office strategies.

OE Buddy

Assistant for multi-job remote workers, aiding in task management and communication.

Digital Nomad Lifestyle Guide

Advises on embracing a digital nomad lifestyle, covering aspects like remote work, travel planning, and living abroad.

Nomad List

NomadGPT helps you become a digital nomad and finds you the best places in the world to live and work remotely.

100 Remote Ways to Make Money

Expert on online freelancing, digital marketing, and e-commerce.

Coworking Guide

Coworking Guide is your AI-powered expert in finding the ideal coworking space, tailored to your unique needs. Discover the latest trends, unique amenities, and tips for maximizing your coworking experience with a friendly, approachable guide.

Time Converter

Elegantly designed to seamlessly adapt your schedule across multiple time zones.

The Savvy Digital Nomad

A friendly guide for digital nomad life, offering country comparisons and lifestyle tips.

Leadership for Remote Teams

An advanced remote leadership coach with dynamic updates and expanded scenarios.

Remote Tech Jobs

Expert in finding remote tech jobs from all sources. Results will also include rates where available.