Best AI tools for Information Extraction Specialists

20 - AI Tool Sites

NuShift Inc

NuShift Inc offers ELMR-T, an AI-powered solution for converting data into actionable knowledge in the maintenance and engineering domain. ELMR-T combines machine learning, machine translation, speech recognition, question answering, and information extraction to give maintenance teams AI-driven insights. It is designed to support data-driven decision-making, improve user interaction, and increase efficiency by delivering precise, relevant results.

Daxtra

Daxtra is an AI-powered recruitment technology tool that helps staffing and recruiting professionals find, parse, match, and engage the best candidates quickly. Its suite of products integrates with existing ATS or CRM systems and automates recruitment processes such as candidate data loading, CV/resume formatting, information extraction, and job matching. Daxtra serves corporate employers, vendors, job boards, and social media partners, and provides a comprehensive set of developer components for building recruitment workflows.

Isomeric

Isomeric uses AI to semantically understand unstructured text and extract specific data from it. It converts messy, unstructured text into machine-readable JSON that users can then use to extract insights, process data, and deliver results. Whether the input comes from web scraping, a browser extension, or general information extraction, Isomeric is intended to help users scale their data-gathering pipelines efficiently.

deepset

deepset offers enterprise-level AI products and solutions for AI teams. Its deepset Cloud platform, built with Haystack, supports fast and accurate prototyping, building, and launching of advanced AI applications. The platform covers the AI application development lifecycle, providing processes, tools, and expertise for moving from prototype to production. With deepset Cloud, users can tune their solutions for accuracy, performance, and cost, deploy applications at any scale with one click, and experiment freely with new models and configurations. Teams can also draw on deepset's AI engineers for guidance and support.

Koncile

Koncile is an AI-powered OCR solution that automates data extraction from various documents. It combines advanced OCR technology with large language models to transform unstructured data into structured information. Koncile can extract data from invoices, accounting documents, identity documents, and more, offering features like categorization, enrichment, and database integration. The tool is designed to streamline document management processes and accelerate data processing. Koncile is suitable for businesses of all sizes, providing flexible subscription plans and enterprise solutions tailored to specific needs.

Inkwise

Inkwise is an AI-powered platform that helps users write expert-level documents by extracting key information from uploaded files and integrating it into new content. Features include smart content extraction, predictive writing, document templates, and AI chat with uploaded files. Inkwise analyzes uploaded documents, extracts the relevant data, and inserts it into customizable templates. It is aimed at professionals in academia, accounting, finance, corporate treasury, corporate tax, product management, procurement, legal, and marketing.

PYQ

PYQ is an AI-powered platform that helps businesses automate document-related tasks, such as data extraction, form filling, and system integration. It uses natural language processing (NLP) and machine learning (ML) to understand the content of documents and perform tasks accordingly. PYQ's platform is designed to be easy to use, with pre-built automations for common use cases. It also offers custom automation development services for more complex needs.

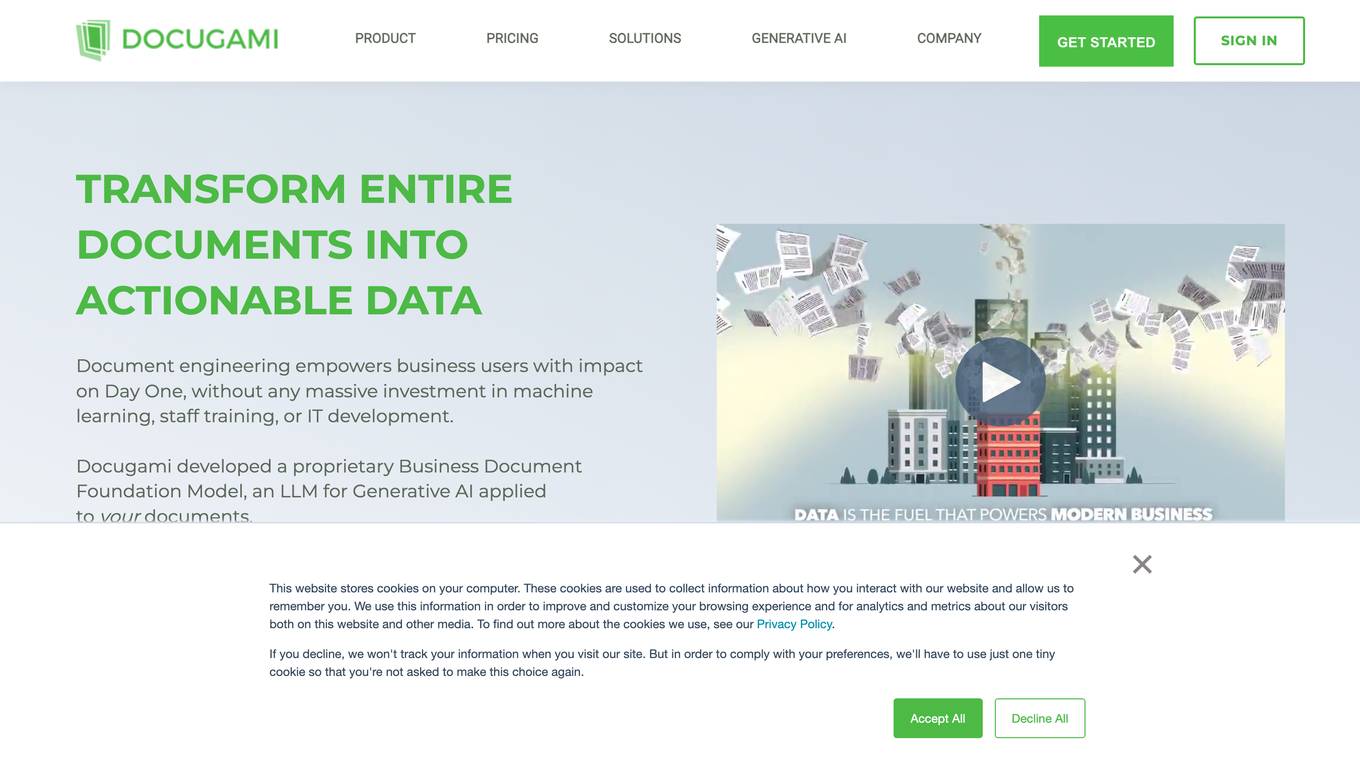

Docugami

Docugami is an AI-powered document engineering platform that enables business users to extract, analyze, and automate data from many types of documents. It aims to deliver immediate impact without requiring extensive machine learning investment or IT development. Docugami's proprietary Business Document Foundation Model and generative AI technology transform unstructured text and tables into structured information, helping users uncover insights, increase productivity, and ensure compliance.

Docugami

Docugami is an AI-powered document engineering platform that enables business users to extract, analyze, and automate data from various types of documents. It empowers users with immediate impact without the need for extensive machine learning investments or IT development. Docugami's proprietary Business Document Foundation Model leverages Generative AI to transform unstructured text into structured information, allowing users to unlock insights and drive business processes efficiently.

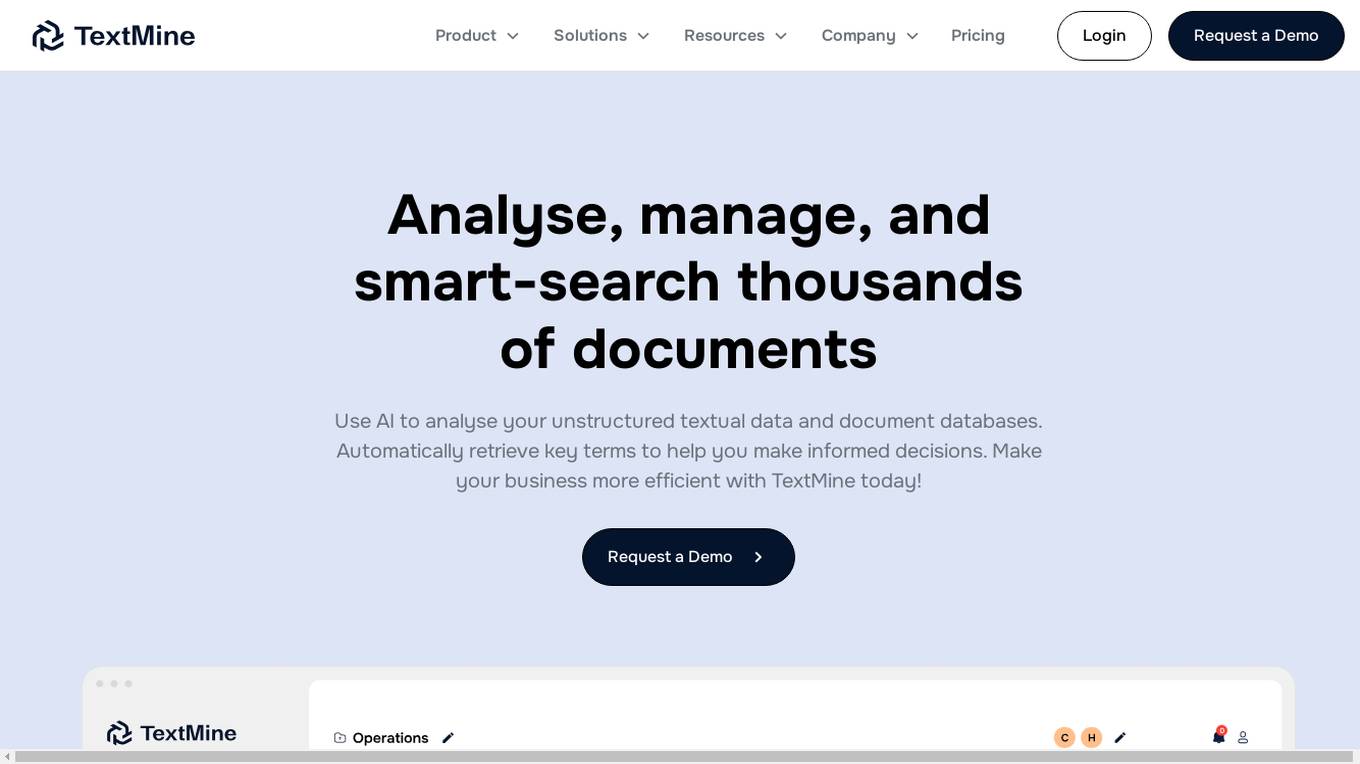

TextMine

TextMine is an AI-powered knowledge base that helps businesses analyze, manage, and search thousands of documents. It uses AI to analyze unstructured text and document databases, automatically retrieving key terms to support informed decisions. Its features include a document vault for storing and managing documents, a categorization system for organizing them, and a data extraction tool for pulling insights from them. By automating manual data entry and information retrieval, TextMine can help businesses save time and money and improve efficiency.

Pentest Copilot

Pentest Copilot by BugBase is an ethical hacking assistant that guides users through each step of an engagement, from analyzing web apps to obtaining root shells. It aims to eliminate redundant research, automates payload and command generation, and provides contextual analysis to save time. The application assists with data extraction, privilege escalation, lateral movement, and covering tracks. Features include secure VPN integration, full control over sessions, parallel command processing, and a choice between local and cloud execution, so users do not need to install Kali Linux.

Ragie

Ragie is a fully managed RAG-as-a-Service platform for developers. It offers easy-to-use APIs and SDKs for getting started quickly, along with advanced features such as LLM re-ranking, a summary index, entity extraction, flexible filtering, and hybrid semantic and keyword search. Through its connectors, Ragie links directly to popular data sources such as Google Drive, Notion, and Confluence. The platform handles data ingestion, chunking, indexing, and retrieval for AI applications. Ragie is backed by Craft Ventures.

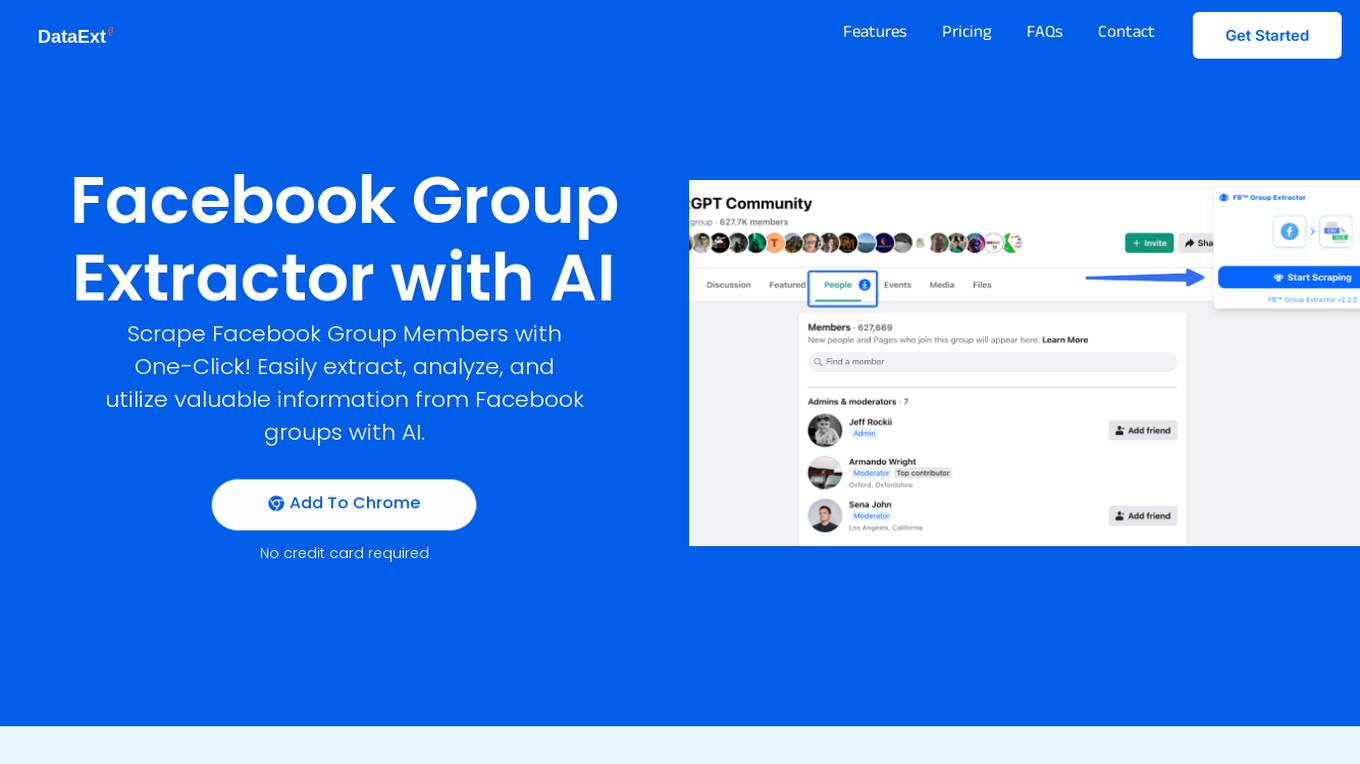

FB Group Extractor

FB Group Extractor is an AI-powered tool for scraping Facebook group members' data with one click. It lets users extract, analyze, and use information from Facebook groups. Features include data extraction, behavioral analytics for personalized ads, content enhancement, and user research. The tool claims more than 10,000 satisfied users and is aimed at businesses looking to improve their marketing strategies and customer insights.

Automaited

Automaited offers Ada, an AI agent that automates order processing. Ada handles orders from receipt to ERP entry, extracting, validating, and transferring data to ensure accuracy. Because Ada integrates directly with ERP systems, it aims to make order processing faster, less error-prone, and more cost-efficient, freeing users to focus on customer satisfaction. Automaited also builds tailored automations, which the company says can make operational processes up to 70% more efficient while reducing error rates.

SkipWatch

SkipWatch is an AI-powered YouTube summarizer that generates video summaries, letting users get the key information from a video in seconds. By extracting the essential content, it aims to save time, speed up learning, and help users overcome language barriers. SkipWatch is installed as a Chrome extension.

Horseman

Horseman is an AI-powered crawling companion for crawling the web in a highly configurable way. Its features include GPT integration, AI-assisted snippet creation, and insights generation. It is aimed at frontend developers, performance analysts, digital agencies, accessibility experts, SEO specialists, and JavaScript engineers. Horseman runs on Windows, macOS, and Linux and offers a large library of snippets for tasks such as sentiment analysis and content extraction, letting users automate website interactions and extract information.

Habsy

Habsy is an AI-powered business card scanner application designed for individuals and teams to effortlessly scan, enrich, and manage business cards. It offers features like fast scanning, batch processing, easy contact organization, eco-friendly networking, accurate data extraction, and security and privacy measures. Habsy aims to make networking smarter, paperless, and more efficient for modern professionals by providing a seamless experience for capturing and utilizing contact information.

Woy AI Tools

Woy AI Tools offers a free online image-to-text converter that claims over 99% accuracy and automatically recognizes more than 100 languages. Users upload an image and receive the text it contains. The tool has a simple, user-friendly interface and emphasizes user privacy and data protection. It uses machine learning and OCR technology, with recognition algorithms that are continuously optimized for clear, high-resolution images.

Schemawriter.ai

Schemawriter.ai is an AI software platform that automatically generates optimized schema markup and content. It draws on many external APIs, including several Google APIs, and on complex mathematical algorithms to produce entity lists and content that correlate with high Google rankings. The platform connects directly to Wikipedia and Wikidata through their APIs to supply accurate information about the content and entities on a webpage. Schemawriter.ai simplifies editing schema, generating advanced schema files, and optimizing page content, with the aim of fast and lasting on-page SEO gains.

Nanonets

Nanonets is an AI-powered platform for intelligent document processing and workflow automation. It helps businesses extract information from unstructured data, automate repetitive tasks, and make faster, better-informed decisions. It offers solutions for industries and functions such as finance and accounting, supply chain and operations, human resources, customer support, and legal. Nanonets' AI agents and no-code platform let businesses streamline complex processes, reduce manual effort, and, according to the company, achieve measurable ROI in a short period.

1 - Open Source Tools

OneKE

OneKE is a flexible, dockerized system for schema-guided knowledge extraction. It can extract information from the web and from raw PDF books across multiple domains, such as science and news. It uses a collaborative multi-agent approach and includes a user-customizable knowledge base for tailored extraction.

OneKE supports a range of IE tasks, data sources, LLMs, and extraction methods, and its knowledge base is configurable. Users can start from examples using YAML, Python, or a Web UI, and can perform Named Entity Recognition, Relation Extraction, Event Extraction, Triple Extraction, and Open Domain IE. Supported sources include plain text input as well as HTML, PDF, Word, TXT, and JSON files. For extraction, users can choose among models including OpenAI, DeepSeek, LLaMA, Qwen, ChatGLM, MiniCPM, and OneKE. Extraction is organized around a Schema Agent, an Extraction Agent, and a Reflection Agent. The tool also supports schema repository and case repository management, and provides solutions for network issues.

Contributors to the project include Ningyu Zhang, Haofen Wang, Yujie Luo, Xiangyuan Ru, Kangwei Liu, Lin Yuan, Mengshu Sun, Lei Liang, Zhiqiang Zhang, Jun Zhou, Lanning Wei, Da Zheng, and Huajun Chen.

20 - OpenAI GPTs

Website Speed Reader

Expert in website summarization, providing clear and concise summaries. You can also ask it to find specific information on the site.

Alien meaning?

What do the lyrics of "Alien" mean? "Alien" by P. Sears and J. Sears, from the album Modern Times (1981).

Create a Business 1-Pager Snippet v2

1) Input a URL or attachment, or copy and paste a bunch of info about your biz. 2) I will return a summary of what's important. 3) Use what I give you in other prompts, such as marketing strategy, content ideas, or competitive analysis.

Business Card Digitizer

Simply take a photo of your business cards and upload it to the chat. I'll take it from there!

Procedure Extraction and Formatting

Extracts procedures from manuals and formats them into templates.

Data Extractor Pro

Expert in data extraction and context-driven analysis. Can read most file types, including PDF, XLSX, Word, TXT, CSV, and EML.

The Librarian

A digital librarian who identifies books from photos and provides detailed information.

Dissertation & Thesis GPT

An Ivy League scholar GPT equipped to understand your research needs, formulate comprehensive literature review strategies, and extract pertinent information from a wide range of academic databases and journals. I'll then compose a peer-review-quality paper with citations.

Information Framework Assistant

A SID framework companion for understanding and utilizing the Information Framework.

Gecko Tech Male Menopause Information

Information on male menopause, providing insights and explanations.