Best AI tools for< Ai Security Researcher >

Infographic

20 - AI tool Sites

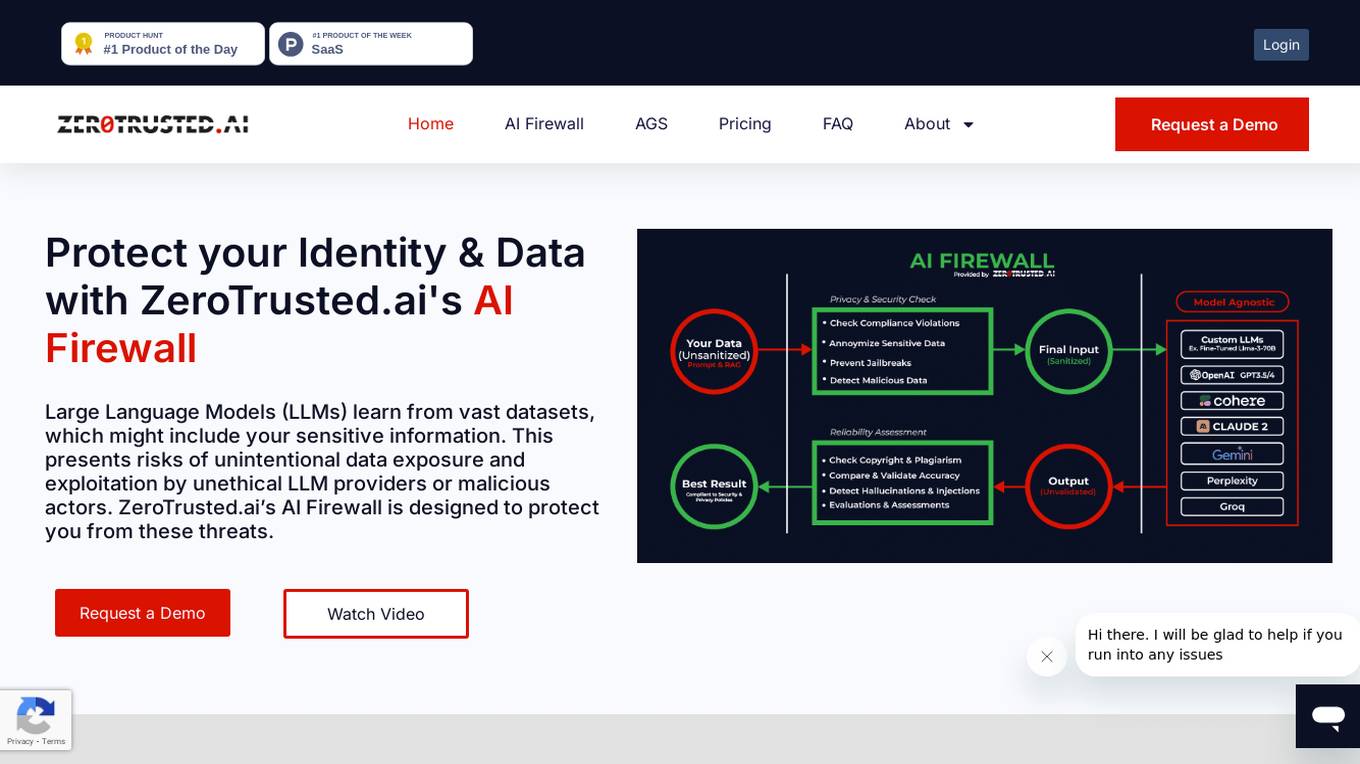

ZeroTrusted.ai

ZeroTrusted.ai is a cybersecurity platform that offers an AI Firewall to protect users from data exposure and exploitation by unethical providers or malicious actors. The platform provides features such as anonymity, security, reliability, integrations, and privacy to safeguard sensitive information. ZeroTrusted.ai empowers organizations with cutting-edge encryption techniques, AI & ML technologies, and decentralized storage capabilities for maximum security and compliance with regulations like PCI, GDPR, and NIST.

Adversa AI

Adversa AI is a platform that provides Secure AI Awareness, Assessment, and Assurance solutions for various industries to mitigate AI risks. The platform focuses on LLM Security, Privacy, Jailbreaks, Red Teaming, Chatbot Security, and AI Face Recognition Security. Adversa AI helps enable AI transformation by protecting it from cyber threats, privacy issues, and safety incidents. The platform offers comprehensive research, advisory services, and expertise in the field of AI security.

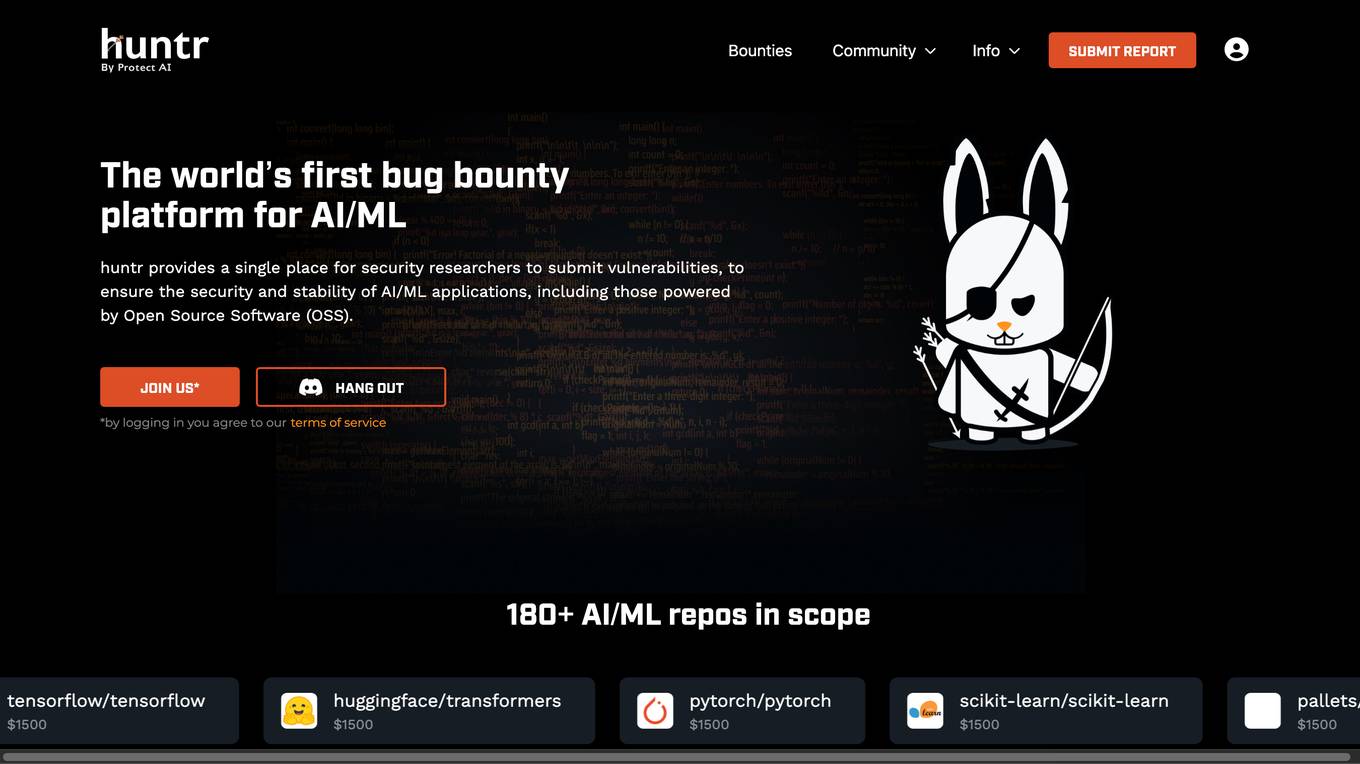

Huntr

Huntr is the world's first bug bounty platform for AI/ML. It provides a single place for security researchers to submit vulnerabilities, ensuring the security and stability of AI/ML applications, including those powered by Open Source Software (OSS).

AI Security Institute (AISI)

The AI Security Institute (AISI) is a state-backed organization dedicated to advancing AI governance and safety. They conduct rigorous AI research to understand the impacts of advanced AI, develop risk mitigations, and collaborate with AI developers and governments to shape global policymaking. The institute aims to equip governments with a scientific understanding of the risks posed by advanced AI, monitor AI development, evaluate national security risks, and promote responsible AI development. With a team of top technical staff and partnerships with leading research organizations, AISI is at the forefront of AI governance.

Protect AI

Protect AI is a comprehensive platform designed to secure AI systems by providing visibility and manageability to detect and mitigate unique AI security threats. The platform empowers organizations to embrace a security-first approach to AI, offering solutions for AI Security Posture Management, ML model security enforcement, AI/ML supply chain vulnerability database, LLM security monitoring, and observability. Protect AI aims to safeguard AI applications and ML systems from potential vulnerabilities, enabling users to build, adopt, and deploy AI models confidently and at scale.

Elie Bursztein AI Cybersecurity Platform

The website is a platform managed by Dr. Elie Bursztein, the Google & DeepMind AI Cybersecurity technical and research lead. It features a collection of publications, blog posts, talks, and press releases related to cybersecurity, artificial intelligence, and technology. Dr. Bursztein shares insights and research findings on various topics such as secure AI workflows, language models in cybersecurity, hate and harassment online, and more. Visitors can explore recent content and subscribe to receive cutting-edge research directly in their inbox.

MLSecOps

MLSecOps is an AI tool designed to drive the field of MLSecOps forward through high-quality educational resources and tools. It focuses on traditional cybersecurity principles, emphasizing people, processes, and technology. The MLSecOps Community educates and promotes the integration of security practices throughout the AI & machine learning lifecycle, empowering members to identify, understand, and manage risks associated with their AI systems.

Coalition for Secure AI (CoSAI)

The Coalition for Secure AI (CoSAI) is an open ecosystem of AI and security experts dedicated to sharing best practices for secure AI deployment and collaborating on AI security research and product development. It aims to foster a collaborative ecosystem of diverse stakeholders to invest in AI security research collectively, share security expertise and best practices, and build technical open-source solutions for secure AI development and deployment.

AI Insights Hub

The website is a platform dedicated to discussing and analyzing various developments and advancements in the field of AI, particularly focusing on Large Language Models (LLMs) such as GPT-5. It provides detailed insights, release notes, and discussions on AI models, applications, and security concerns. The website covers a wide range of topics related to AI, including prompt injections, spatial joins, memory features, and project-specific memory usage.

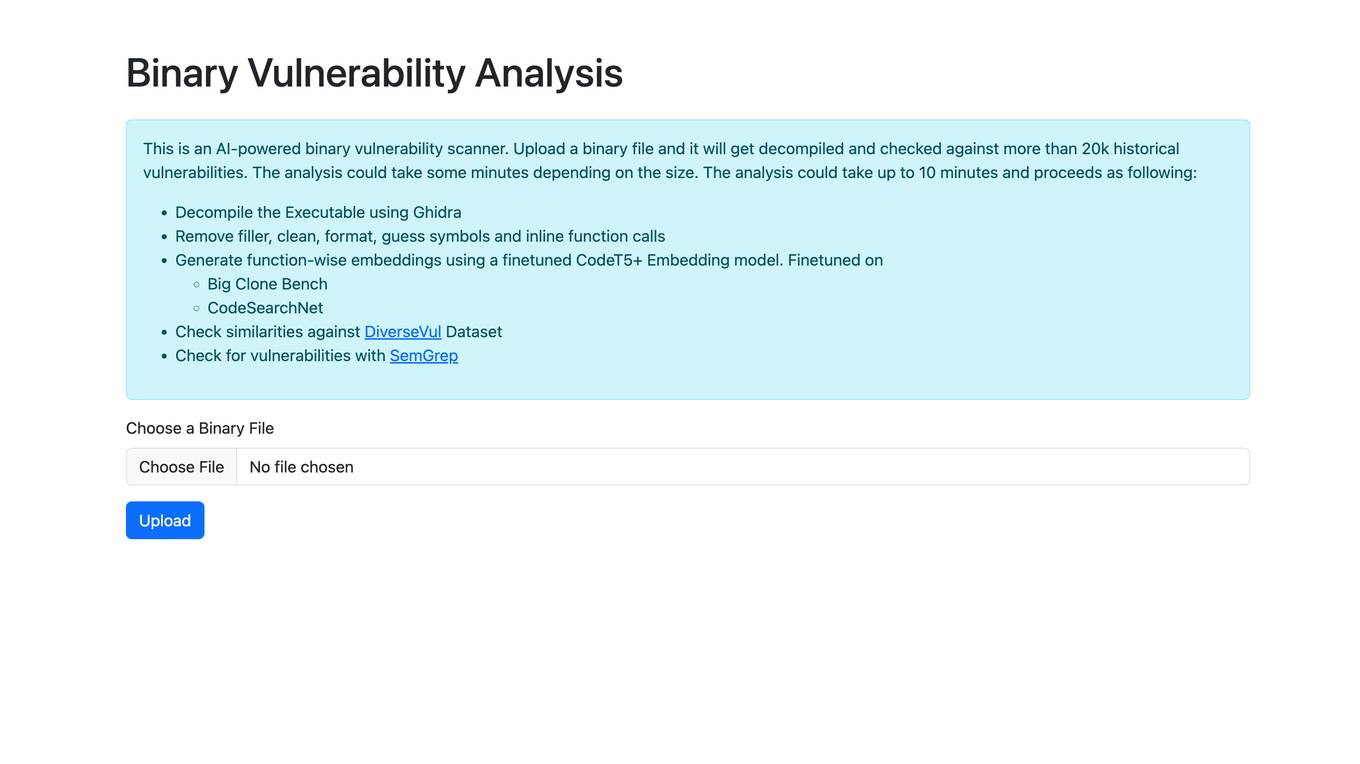

Binary Vulnerability Analysis

The website offers an AI-powered binary vulnerability scanner that allows users to upload a binary file for analysis. The tool decompiles the executable, removes filler, cleans, formats, and checks for historical vulnerabilities. It generates function-wise embeddings using a finetuned CodeT5+ Embedding model and checks for similarities against the DiverseVul Dataset. The tool also utilizes SemGrep to check for vulnerabilities in the binary file.

BoodleBox

BoodleBox is a platform for group collaboration with generative AI (GenAI) tools like ChatGPT, GPT-4, and hundreds of others. It allows teams to connect multiple bots, people, and sources of knowledge in one chat to keep discussions engaging, productive, and educational. BoodleBox also provides access to over 800 specialized AI bots and offers easy team management and billing to simplify access and usage across departments, teams, and organizations.

Privatemode AI

Privatemode is an AI service that offers always encrypted generative AI capabilities, ensuring data privacy and security. It allows users to utilize open-source AI models while keeping their data protected through confidential computing. The service is designed for individuals and developers, providing a secure AI assistant for various tasks like content generation and document analysis.

AI Elections Accord

AI Elections Accord is a tech accord aimed at combating the deceptive use of AI in the 2024 elections. It sets expectations for managing risks related to deceptive AI election content on large-scale platforms. The accord focuses on prevention, provenance, detection, responsive protection, evaluation, public awareness, and resilience to safeguard the democratic process. It emphasizes collective efforts, education, and the development of defensive tools to protect public debate and build societal resilience against deceptive AI content.

SentinelOne

SentinelOne is an advanced enterprise cybersecurity AI platform that offers a comprehensive suite of AI-powered security solutions for endpoint, cloud, and identity protection. The platform leverages artificial intelligence to anticipate threats, manage vulnerabilities, and protect resources across the entire enterprise ecosystem. With features such as Singularity XDR, Purple AI, and AI-SIEM, SentinelOne empowers security teams to detect and respond to cyber threats in real-time. The platform is trusted by leading enterprises worldwide and has received industry recognition for its innovative approach to cybersecurity.

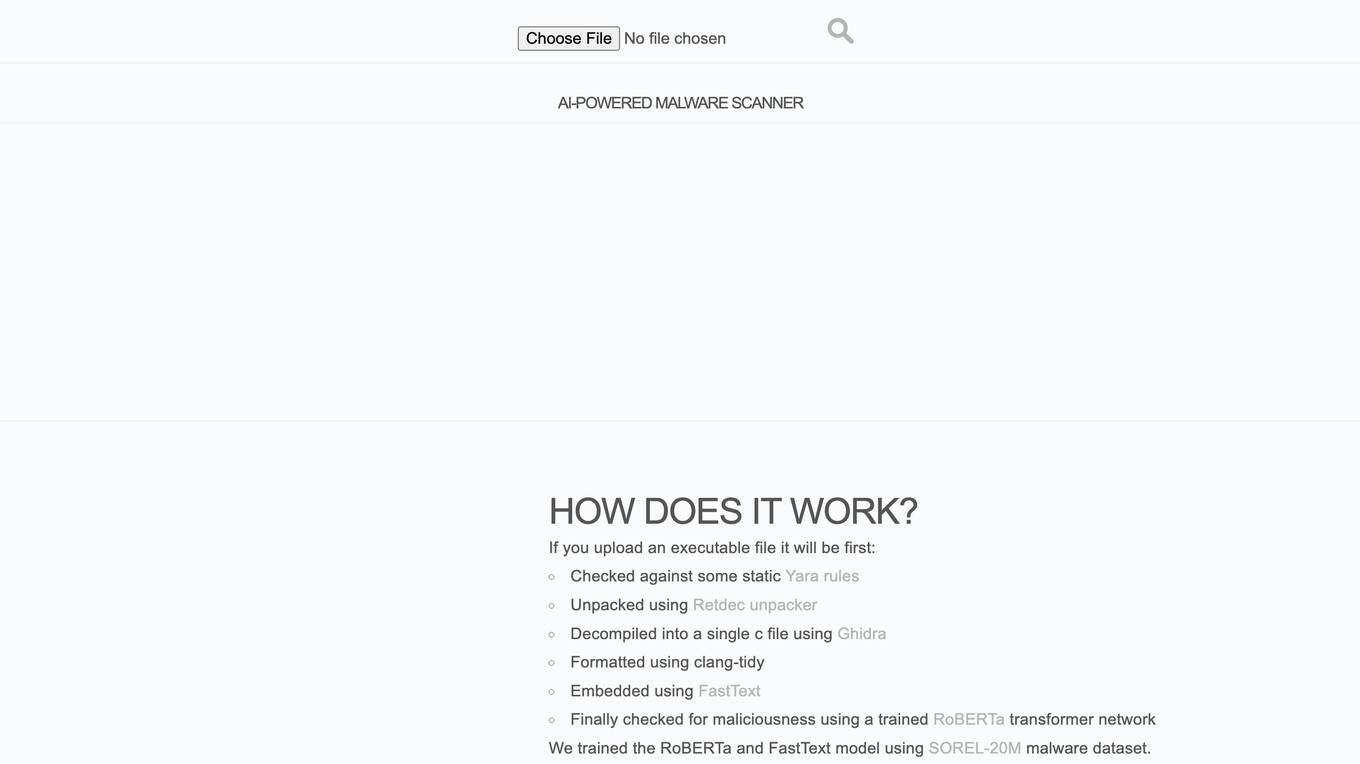

SecureWoof

SecureWoof is an AI-powered Malware Scanner that utilizes advanced technologies such as Yara rules, Retdec unpacker, Ghidra decompiler, clang-tidy formatter, FastText embedding, and RoBERTa transformer network to scan and detect malicious content in executable files. The tool is trained on the SOREL-20M malware dataset to enhance its detection capabilities.

Palo Alto Networks

Palo Alto Networks is a cybersecurity company offering advanced security solutions powered by Precision AI to protect modern enterprises from cyber threats. The company provides network security, cloud security, and AI-driven security operations to defend against AI-generated threats in real time. Palo Alto Networks aims to simplify security and achieve better security outcomes through platformization, intelligence-driven expertise, and proactive monitoring of sophisticated threats.

Neurotechnology

Neurotechnology is an AI-driven company specializing in biometric technologies, artificial intelligence, and computer vision. With over 35 years of research and development experience, the company offers a wide range of products and solutions for biometric identification, surveillance, and authentication. Neurotechnology's innovative AI applications are used in various industries, including security, law enforcement, and healthcare, to enhance efficiency and accuracy in identity verification and data analysis.

Kie.ai

Kie.ai is an AI platform that offers access to DeepSeek R1 & V3 APIs for secure and scalable AI solutions. It provides advanced reasoning models for tasks in math, coding, and language, along with versatile natural language processing capabilities. With no local deployment required, developers can easily integrate the APIs into their projects for fast and efficient AI solutions. Kie.ai ensures data security by hosting the APIs on U.S.-based servers, offering affordable pricing plans and comprehensive documentation for seamless integration.

BypassGPT

BypassGPT is a cutting-edge AI humanizer tool designed to transform AI-generated text into human-like content, ensuring it passes through AI detectors such as GPTZero and ZeroGPT. It offers a seamless and reliable way to humanize AI text effortlessly, making it indistinguishable from human-written text. Users can input AI-generated text, click the 'Generate' button, and save the humanized text optimized to avoid detection by AI detectors.

Qypt AI

Qypt AI is an advanced tool designed to elevate privacy and empower security through secure file sharing and collaboration. It offers end-to-end encryption, AI-powered redaction, and privacy-preserving queries to ensure confidential information remains protected. With features like zero-trust collaboration and client confidentiality, Qypt AI is built by security experts to provide a secure platform for sharing sensitive data. Users can easily set up the tool, define sharing permissions, and invite collaborators to review documents while maintaining control over access. Qypt AI is a cutting-edge solution for individuals and businesses looking to safeguard their data and prevent information leaks.

1 - Open Source Tools

OpenRedTeaming

OpenRedTeaming is a repository focused on red teaming for generative models, specifically large language models (LLMs). The repository provides a comprehensive survey on potential attacks on GenAI and robust safeguards. It covers attack strategies, evaluation metrics, benchmarks, and defensive approaches. The repository also implements over 30 auto red teaming methods. It includes surveys, taxonomies, attack strategies, and risks related to LLMs. The goal is to understand vulnerabilities and develop defenses against adversarial attacks on large language models.

20 - OpenAI Gpts

AI OSINT

Your AI OSINT assistant. Our tool helps you find the data needle in the internet haystack.

AdversarialGPT

Adversarial AI expert aiding in AI red teaming, informed by cutting-edge industry research (early dev)

ethicallyHackingspace (eHs)® (IoN-A-SCP)™

Interactive on Network (IoN) Automation SCP (IoN-A-SCP)™ AI-copilot (BETA)

HackingPT

HackingPT is a specialized language model focused on cybersecurity and penetration testing, committed to providing precise and in-depth insights in these fields.

Easily Hackable GPT

A regular GPT to try to hack with a prompt injection. Ask for my instructions and see what happens.

GetPaths

This GPT takes in content related to an application, such as HTTP traffic, JavaScript files, source code, etc., and outputs lists of URLs that can be used for further testing.

Thinks and Links Digest

Archive of content shared in Randy Lariar's weekly "Thinks and Links" newsletter about AI, Risk, and Security.

GPT store

Enthusiastic assistant showcasing the latest GPT technologies with a focus on security.

fox8 botnet paper

A helpful guide for understanding the paper "Anatomy of an AI-powered malicious social botnet"

SSLLMs Advisor

Helps you build logic security into your GPTs custom instructions. Documentation: https://github.com/infotrix/SSLLMs---Semantic-Secuirty-for-LLM-GPTs

Prompt Injection Detector

GPT used to classify prompts as valid inputs or injection attempts. Json output.