AI tools for Mixtral-8x7B

Related Tools:

Code Snippets AI

Code Snippets AI is an AI-powered code snippets library for teams. It combines contextually rich AI chats with a secure snippets library, so developers can build new features, fix bugs, add comments, and understand their codebase. Its codebase-aware assistant helps developers write clean, performance-optimized code, and it can create documentation, refactor, debug, and generate code with full codebase context. The aim is for developers to spend more time writing code and less time debugging errors.

Mistral AI

Mistral AI is a cutting-edge AI technology provider for developers and businesses. Their open and portable generative AI models offer unmatched performance, flexibility, and customization. Mistral AI's mission is to accelerate AI innovation by providing powerful tools that can be easily integrated into various applications and systems.

Mistral

Mistral is a knowledge management tool that helps users retain, apply, and succeed in their learning endeavors. It offers features such as seamless integration with popular note-taking tools, proven learning techniques for better retention, summarization of YouTube videos and text-based content, distraction-free reading, and resource management. Mistral is designed to help users overcome the challenges of information overload, wasteful distractions, and forgetfulness, empowering them to unlock their full learning potential.

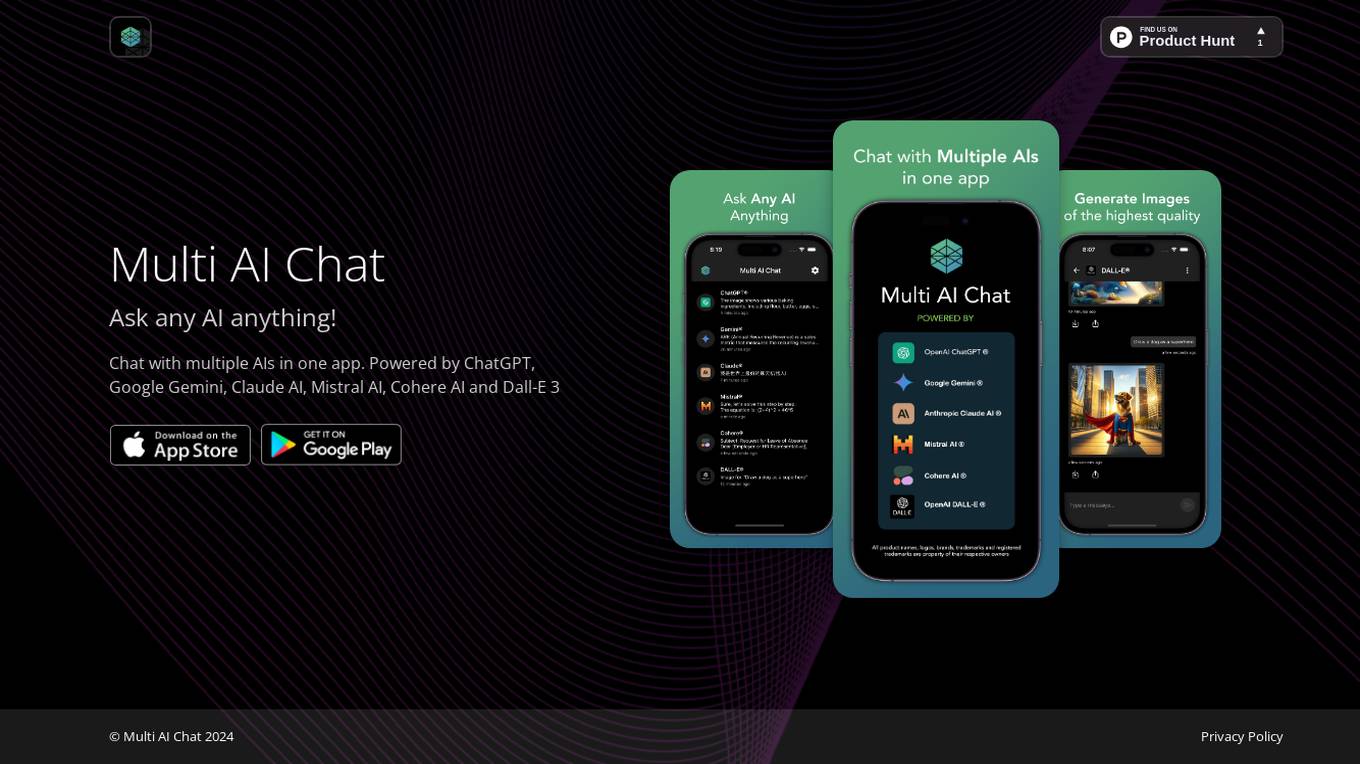

Multi AI Chat

Multi AI Chat is an AI-powered application that allows users to interact with various AI models by asking questions. The platform enables users to seek information, get answers, and engage in conversations with AI on a wide range of topics. With Multi AI Chat, users can explore the capabilities of different AI technologies and enhance their understanding of artificial intelligence.

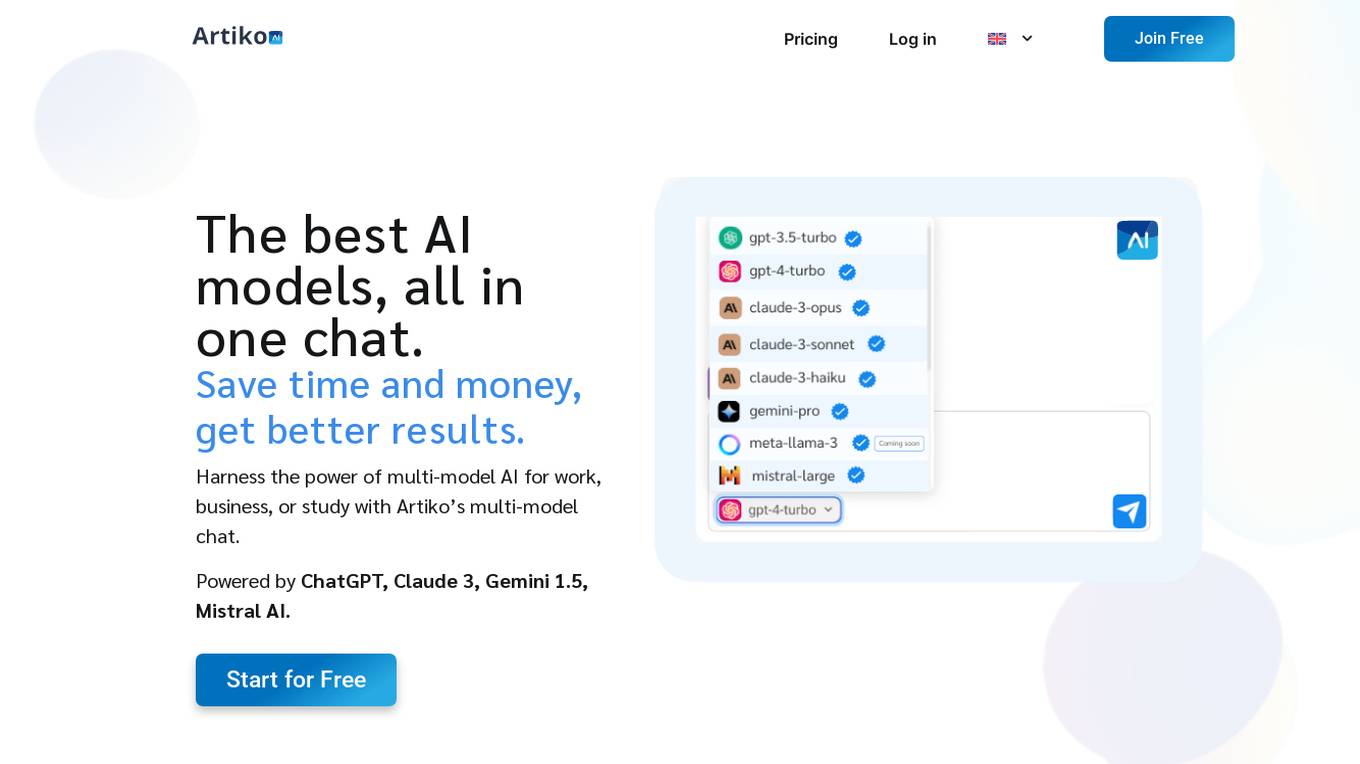

Artiko.ai

Artiko.ai is a multi-model AI chat platform that integrates advanced AI models such as ChatGPT, Claude 3, Gemini 1.5, and Mistral AI. It offers a convenient and cost-effective solution for work, business, or study by providing a single chat interface to multiple models. Features include text rewriting, data conversation, AI assistants, a website chatbot, PDF and document chat, translation, brainstorming, and integration with tools such as WooCommerce, Amazon, and Salesforce.

ChatLabs

ChatLabs is an AI application that provides users with access to a variety of AI models for tasks such as chatting, writing, web searching, image generation, and more. Users can interact with AI assistants, browse the web, generate AI art, and utilize voice input features. The platform offers a prompt library, chat with files functionality, split-screen mode, and a Chrome extension for enhanced user experience.

Mammouth AI

Mammouth is an AI platform that offers access to a variety of industry-leading AI models for text and image generation. Users can subscribe to different models at a fraction of the price compared to individual subscriptions. The platform provides a user-friendly interface, straightforward pricing, and additional features to enhance AI workflow, such as image generation, one-click reprompting, multilingual models, and more. Mammouth aims to simplify the process of selecting and using advanced AI models for various creative and professional applications.

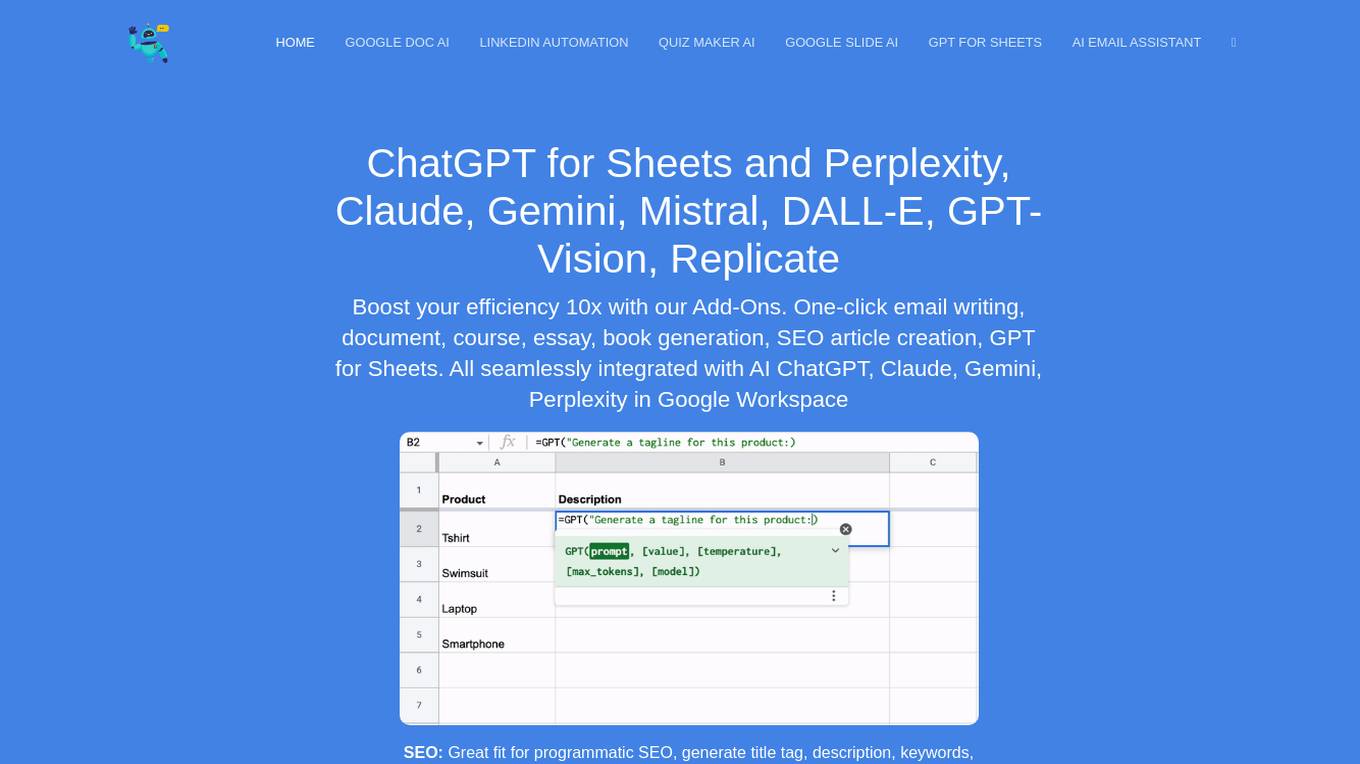

DocGPT.ai

DocGPT.ai is an AI-powered tool designed to enhance productivity and efficiency in various tasks such as email writing, document generation, content creation, SEO optimization, data enrichment, and more. It seamlessly integrates with Google Workspace applications to provide users with advanced AI capabilities for content generation and management. With support for multiple AI models and a wide range of features, DocGPT.ai is a comprehensive solution for individuals and businesses looking to streamline their workflows and improve their content creation processes.

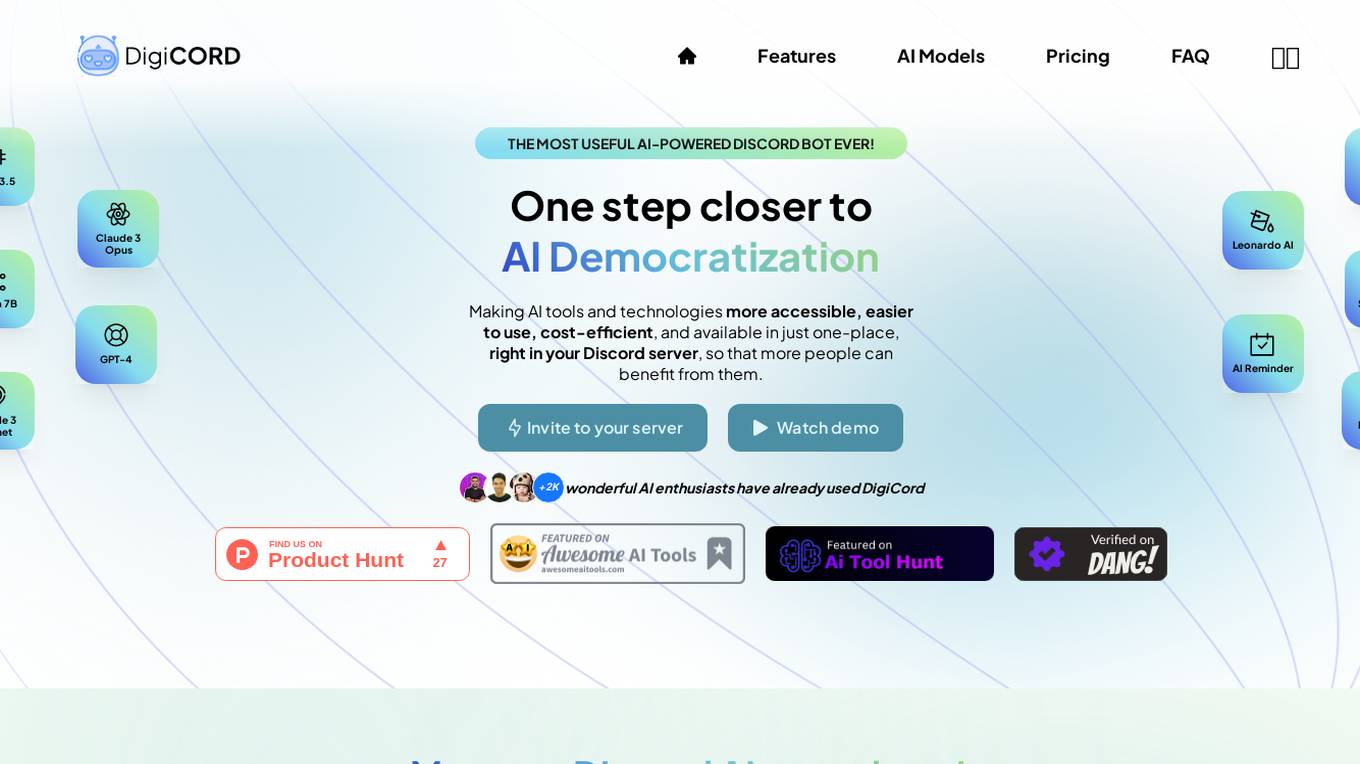

DigiCord

DigiCord is an AI-powered Discord bot that provides access to a wide range of large language models (LLMs) such as GPT-3.5, GPT-4, Claude, and more. It allows users to converse with AI, generate content, analyze images and data, and perform various tasks, all within the Discord server environment. DigiCord aims to democratize AI tools and technologies, making them more accessible, cost-efficient, and user-friendly for a diverse range of users, from students and digital artists to software engineers and entrepreneurs.

Ghostwriter

Ghostwriter is a writing assistant add-in for Microsoft Office that uses AI to help users brainstorm, plan, and create content faster. With over 2 million installs, Ghostwriter offers editions tailored to different users, from students to professionals, providing quick action prompts, creative settings, response length control, and language translation. The application prioritizes user privacy and security, and recommends that users obtain their own OpenAI API key for complete privacy.

Otio

Otio is an AI-native workspace designed for research and writing, powered by advanced models like GPT-4, Claude 3, and Mistral. It offers features such as summarizing and chatting with documents, writing, editing, and paraphrasing with AI assistance. Otio is trusted by researchers, students, and analysts from top institutions, providing a simple and efficient way to collect, read, and write in a single workspace. The tool aims to streamline the research process by summarizing various types of content, generating first drafts, and helping users extract key insights quickly.

Faune

Faune is an anonymous AI chat app that brings the power of large language models (LLMs) like GPT-3, GPT-4, and Mistral directly to users. It prioritizes privacy and offers unique features such as a dynamic prompt editor, support for multiple LLMs, and a built-in image processor. With Faune, users can engage in rich and engaging AI conversations without the need for user accounts or complex setups.

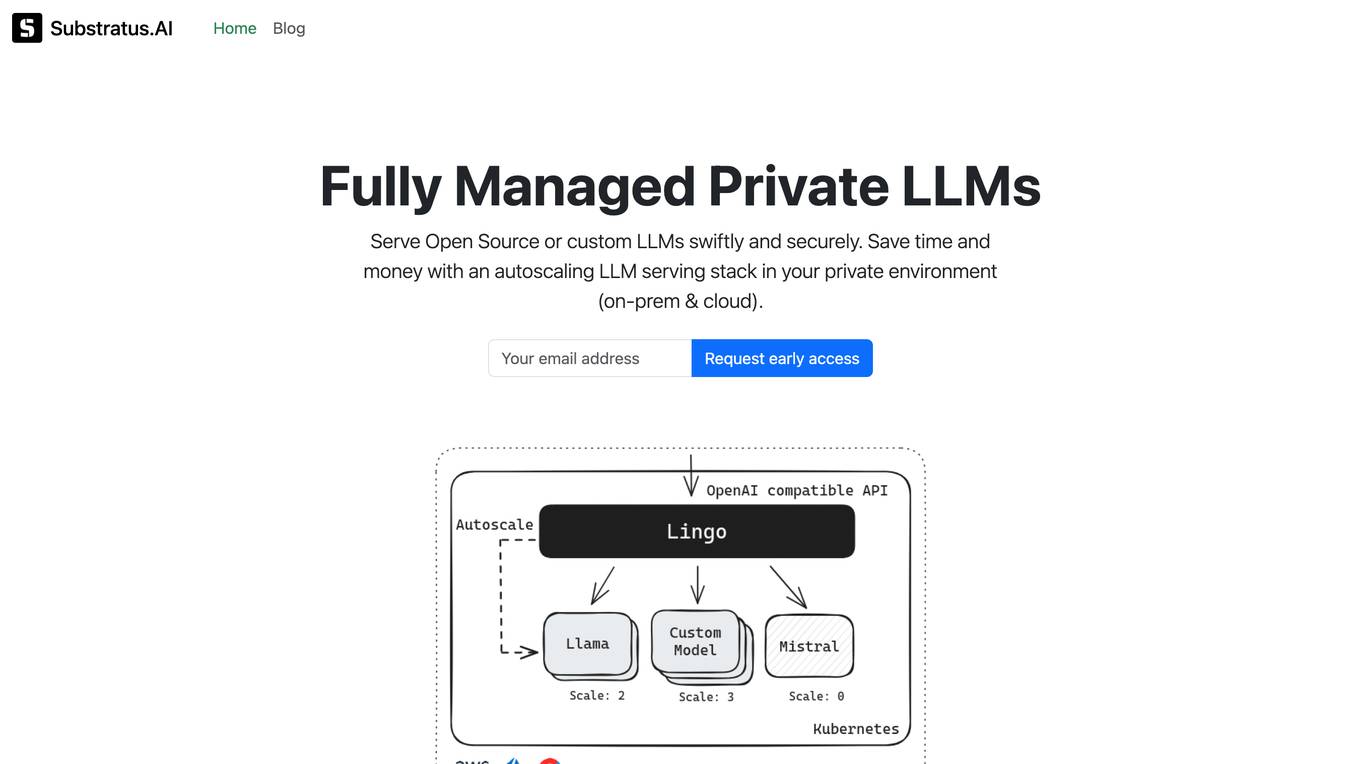

Substratus.AI

Substratus.AI is a fully managed private LLM platform that allows users to serve LLMs (Llama and Mistral) in their own cloud account. It enables users to keep control of their data while reducing OpenAI costs by up to 10x. With Substratus.AI, users can put LLMs into production in hours instead of weeks.

TextSynth

TextSynth is an AI tool that provides access to large language models such as Mistral and Llama, along with models such as Stable Diffusion and Whisper for text-to-image, text-to-speech, and speech-to-text, via a REST API and a playground. It employs custom inference code for faster inference on standard GPUs and CPUs. Founded in 2020, TextSynth was among the first to offer access to the GPT-2 language model. The service is free with rate limitations, but users can opt for unlimited access by paying a small fee per request. All servers are located in France.

Ollama

Ollama is an AI tool that allows users to run large language models such as Llama 3, Phi 3, Mistral, Gemma 2, and more. Users can also customize these models and create their own. The tool is available for macOS, Linux, and Windows, with a preview version offered for users to explore.

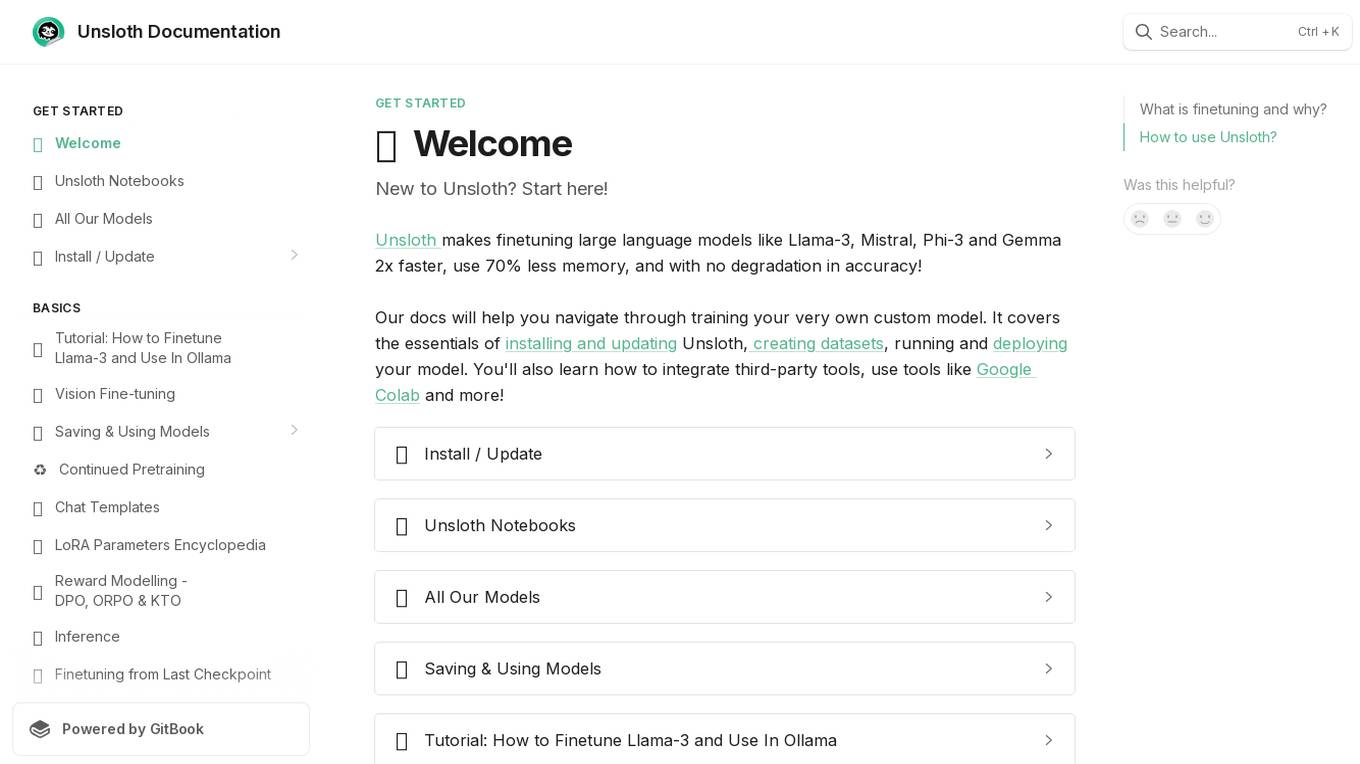

Unsloth

Unsloth is an AI tool designed to fine-tune large language models such as Llama-3, Mistral, Phi-3, and Gemma twice as fast, with 70% less memory and no degradation in accuracy. Its documentation walks users through training custom models, covering essentials such as installing and updating Unsloth, creating datasets, and running and deploying models. Users can also integrate third-party tools and use platforms like Google Colab.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

PowerInfer

PowerInfer is a high-speed Large Language Model (LLM) inference engine designed for local deployment on consumer-grade hardware, leveraging activation locality to optimize efficiency. It features a locality-centric design, hybrid CPU/GPU utilization, easy integration with popular ReLU-sparse models, and support for various platforms. PowerInfer achieves high speed with lower resource demands and is flexible for easy deployment and compatibility with existing models like Falcon-40B, Llama2 family, ProSparse Llama2 family, and Bamboo-7B.
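
The idea behind exploiting ReLU sparsity can be illustrated with a small feed-forward layer: neurons whose ReLU output is zero contribute nothing to the result, so their weights can be skipped. The following is a minimal sketch of that principle only, not PowerInfer's implementation; the function names and the toy matrices are hypothetical, and a real engine would predict the active neurons in advance rather than compute them all first.

```python
def relu(values):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, v) for v in values]


def matvec(matrix, vector):
    """Multiply a row-major matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def ffn_dense(w_up, w_down, x):
    """Reference path: use every hidden neuron, active or not."""
    hidden = relu(matvec(w_up, x))
    return matvec(w_down, hidden)


def ffn_sparse(w_up, w_down, x):
    """Skip hidden neurons that ReLU zeroed out.

    Returns the output and the indices of the active neurons.
    """
    hidden = relu(matvec(w_up, x))
    active = [i for i, h in enumerate(hidden) if h > 0.0]
    # Only the columns of w_down that belong to active neurons are read.
    output = [sum(row[i] * hidden[i] for i in active) for row in w_down]
    return output, active


# Toy weights (hypothetical): 2 inputs, 4 hidden neurons, 2 outputs.
W_UP = [[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]]
W_DOWN = [[1.0, 1.0, 1.0, 1.0], [1.0, 2.0, 3.0, 4.0]]
```

In this toy case only two of the four hidden neurons fire for the input `[2.0, -3.0]`, so half of the second matrix is never touched while the output stays identical to the dense path.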

lmdeploy

LMDeploy is a toolkit for compressing, deploying, and serving LLM, developed by the MMRazor and MMDeploy teams. It has the following core features:

* **Efficient Inference**: LMDeploy delivers up to 1.8x higher request throughput than vLLM, by introducing key features like persistent batch (a.k.a. continuous batching), blocked KV cache, dynamic split & fuse, tensor parallelism, high-performance CUDA kernels and so on.
* **Effective Quantization**: LMDeploy supports weight-only and k/v quantization, and the 4-bit inference performance is 2.4x higher than FP16. The quantization quality has been confirmed via OpenCompass evaluation.
* **Effortless Distribution Server**: Leveraging the request distribution service, LMDeploy facilitates an easy and efficient deployment of multi-model services across multiple machines and cards.
* **Interactive Inference Mode**: By caching the k/v of attention during multi-round dialogue processes, the engine remembers dialogue history, thus avoiding repetitive processing of historical sessions.
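
To give a feel for what weight-only 4-bit quantization means, the sketch below maps a group of floating-point weights onto 16 integer levels and back. It is a generic round-to-nearest scheme chosen for illustration, not LMDeploy's actual quantization algorithm; the function names are hypothetical.

```python
def quantize_4bit(weights):
    """Round-to-nearest quantization of one weight group to 4-bit codes.

    Returns (codes, scale, zero_point_value), where every code is in 0..15.
    """
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / 15  # 4 bits give 16 levels: 0..15
    if scale == 0:
        scale = 1.0  # all weights equal; any non-zero scale works
    codes = [round((w - lo) / scale) for w in weights]
    return codes, scale, lo


def dequantize_4bit(codes, scale, lo):
    """Recover approximate float weights from 4-bit codes."""
    return [lo + c * scale for c in codes]
```

Only the integer codes plus one scale and one offset per group need to be stored, which is where the memory saving over FP16 comes from; the price is a reconstruction error of at most half a quantization step per weight.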

torchtune

Torchtune is a PyTorch-native library for easily authoring, fine-tuning, and experimenting with LLMs. It provides native-PyTorch implementations of popular LLMs using composable and modular building blocks, easy-to-use and hackable training recipes for popular fine-tuning techniques, YAML configs for easily configuring training, evaluation, quantization, or inference recipes, and built-in support for many popular dataset formats and prompt templates to help you quickly get started with training.

one-click-llms

The one-click-llms repository provides templates for quickly setting up an API for language models. It includes advanced inferencing scripts for function calling and offers various models for text generation and fine-tuning tasks. Users can choose between Runpod and Vast.AI for different GPU configurations, with recommendations for optimal performance. The repository also supports Trelis Research and offers templates for different model sizes and types, including multi-modal APIs and chat models.

Automodel

Automodel is a Python library for automating the process of building and evaluating machine learning models. It provides a set of tools and utilities to streamline the model development workflow, from data preprocessing to model selection and evaluation. With Automodel, users can easily experiment with different algorithms, hyperparameters, and feature engineering techniques to find the best model for their dataset. The library is designed to be user-friendly and customizable, allowing users to define their own pipelines and workflows. Automodel is suitable for data scientists, machine learning engineers, and anyone looking to quickly build and test machine learning models without the need for manual intervention.

evalchemy

Evalchemy is a unified and easy-to-use toolkit for evaluating language models, focusing on post-trained models. It integrates multiple existing benchmarks such as RepoBench, AlpacaEval, and ZeroEval. Key features include unified installation, parallel evaluation, simplified usage, and results management. Users can run various benchmarks with a consistent command-line interface and track results locally or integrate with a database for systematic tracking and leaderboard submission.

AQLM

AQLM is the official PyTorch implementation for Extreme Compression of Large Language Models via Additive Quantization. It includes prequantized AQLM models without PV-Tuning and PV-Tuned models for LLaMA, Mistral, and Mixtral families. The repository provides inference examples, model details, and quantization setups. Users can run prequantized models using Google Colab examples, work with different model families, and install the necessary inference library. The repository also offers detailed instructions for quantization, fine-tuning, and model evaluation. AQLM quantization involves calibrating models for compression, and users can improve model accuracy through finetuning. Additionally, the repository includes information on preparing models for inference and contributing guidelines.
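
Additive quantization stores each small group of weights as a handful of indices into learned codebooks and reconstructs the group as the sum of the selected codewords. The sketch below shows only that decoding step, under stated assumptions: the codebooks are tiny made-up examples, `decode_group` is a hypothetical name, and none of this reflects the repository's actual API or its training procedure.

```python
def decode_group(codebooks, codes):
    """Rebuild one weight group as the sum of one codeword per codebook.

    codebooks: list of codebooks, each a list of equal-length codewords.
    codes: one index per codebook selecting the codeword to add.
    """
    dim = len(codebooks[0][0])
    group = [0.0] * dim
    for codebook, index in zip(codebooks, codes):
        for j, value in enumerate(codebook[index]):
            group[j] += value
    return group


# Two hypothetical codebooks with two 2-dimensional codewords each.
CODEBOOKS = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[0.5, 0.5], [-0.5, 0.25]],
]
```

With these toy codebooks, the index pair `[1, 1]` selects `[0.0, 1.0]` and `[-0.5, 0.25]`, whose sum is the reconstructed group; the compressed model keeps only the indices and the shared codebooks.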

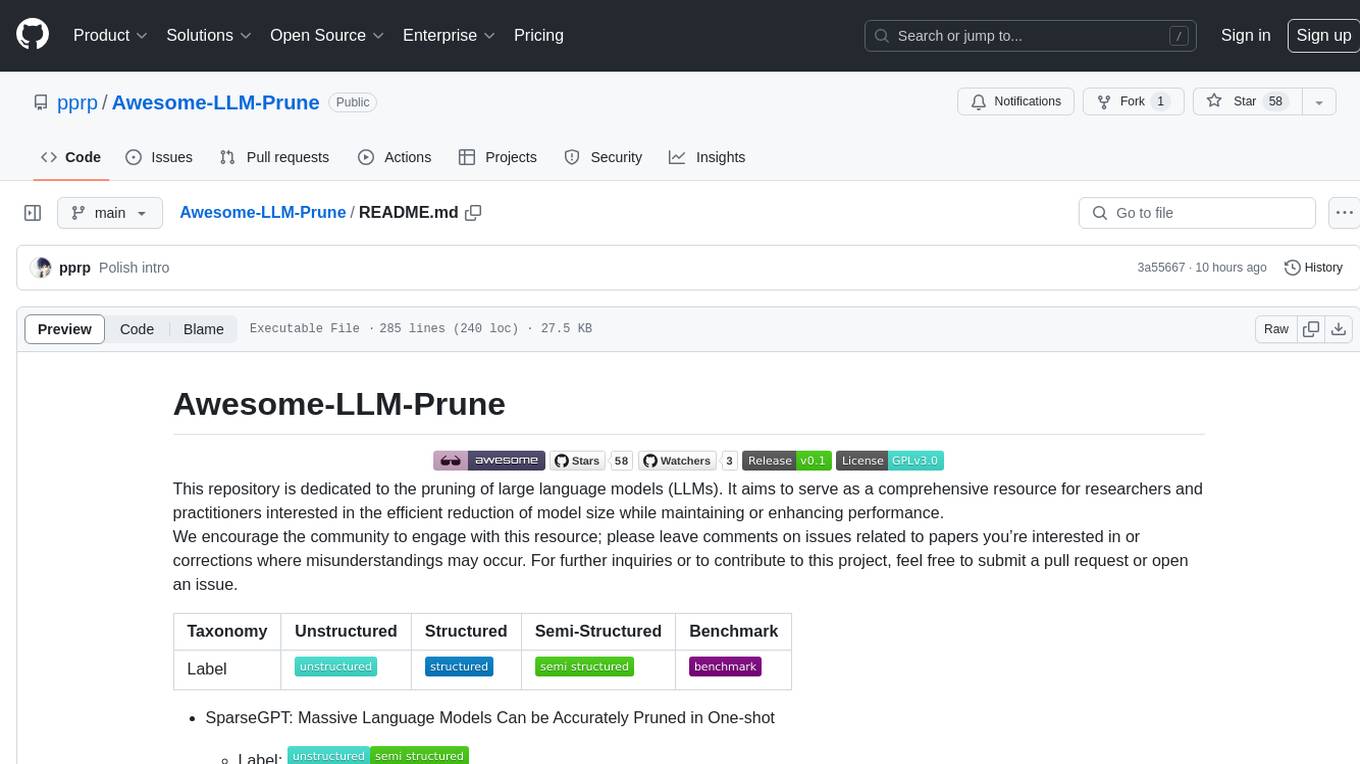

Awesome-LLM-Prune

This repository is dedicated to the pruning of large language models (LLMs). It aims to serve as a comprehensive resource for researchers and practitioners interested in the efficient reduction of model size while maintaining or enhancing performance. The repository contains various papers, summaries, and links related to different pruning approaches for LLMs, along with author information and publication details. It covers a wide range of topics such as structured pruning, unstructured pruning, semi-structured pruning, and benchmarking methods. Researchers and practitioners can explore different pruning techniques, understand their implications, and access relevant resources for further study and implementation.

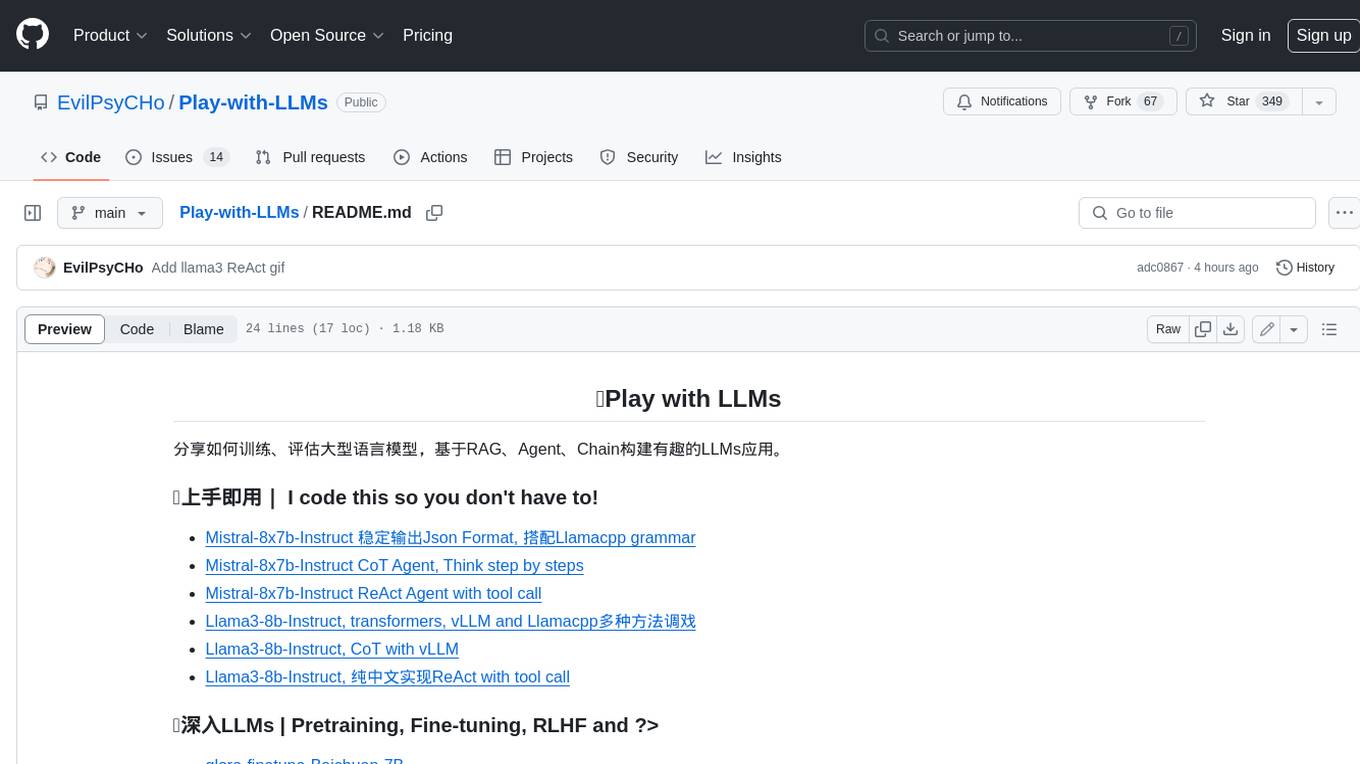

Play-with-LLMs

This repository provides a comprehensive guide to training, evaluating, and building applications with Large Language Models (LLMs). It covers various aspects of LLMs, including pretraining, fine-tuning, reinforcement learning from human feedback (RLHF), and more. The repository also includes practical examples and code snippets to help users get started with LLMs quickly and easily.

Awesome-AGI

Awesome-AGI is a curated list of resources related to Artificial General Intelligence (AGI), including models, pipelines, applications, and concepts. It provides a comprehensive overview of the current state of AGI research and development, covering various aspects such as model training, fine-tuning, deployment, and applications in different domains. The repository also includes resources on prompt engineering, RLHF, LLM vocabulary expansion, long text generation, hallucination mitigation, controllability and safety, and text detection. It serves as a valuable resource for researchers, practitioners, and anyone interested in the field of AGI.

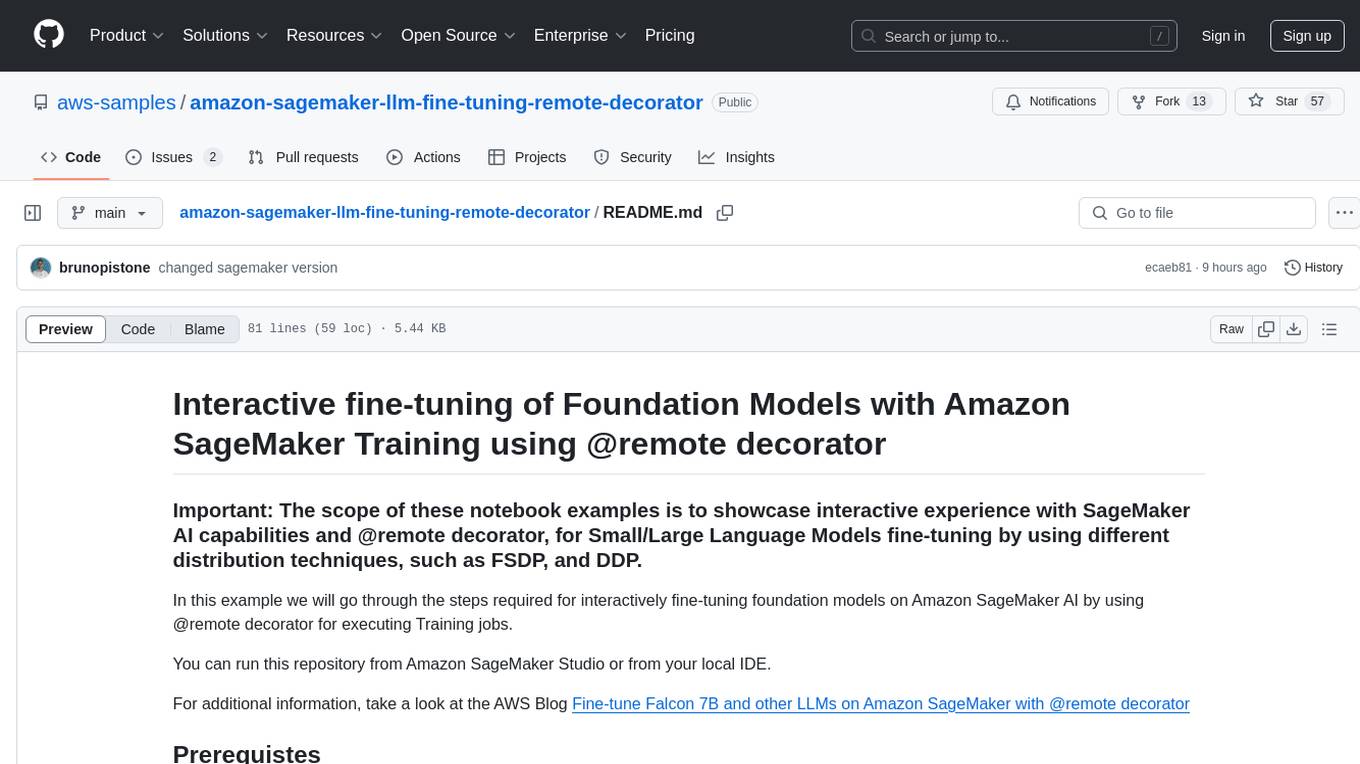

amazon-sagemaker-llm-fine-tuning-remote-decorator

This repository provides interactive fine-tuning of Foundation Models with Amazon SageMaker Training using the @remote decorator. It showcases SageMaker AI capabilities for fine-tuning small and large language models with distribution techniques such as FSDP and DDP. Users can run the repository from Amazon SageMaker Studio or a local IDE. The notebooks cover various supervised and self-supervised fine-tuning scenarios for different models, along with instructions for updating configurations based on the AWS region and Python version compatibility.

tokencost

Tokencost is a client-side tool for calculating the USD cost of using major Large Language Model (LLM) APIs by estimating the cost of prompts and completions. It tracks the latest price changes of major LLM providers, accurately counts prompt tokens before OpenAI requests are sent, and returns the cost of a prompt or completion with a single function call. Users can calculate prompt and completion costs for OpenAI requests, count tokens in prompts formatted as message lists or plain strings, and refer to a cost table with updated prices for various LLMs. The tool also supports callback handlers for LLM wrapper and framework libraries such as LlamaIndex and Langchain.
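
The cost estimate described above reduces to simple arithmetic over token counts. The following is a minimal sketch of that arithmetic, not tokencost's own implementation; the model name `example-model`, the prices in the table, and the function `estimate_cost_usd` are hypothetical placeholders.

```python
from decimal import Decimal

# Hypothetical price table in USD per 1M tokens. These numbers are made up
# for illustration and are not real provider prices.
PRICES_PER_MILLION = {
    "example-model": {"prompt": Decimal("0.50"), "completion": Decimal("1.50")},
}


def estimate_cost_usd(model: str, prompt_tokens: int, completion_tokens: int) -> Decimal:
    """Estimate the USD cost of one request from its token counts."""
    price = PRICES_PER_MILLION[model]
    million = Decimal(1_000_000)
    prompt_cost = price["prompt"] * prompt_tokens / million
    completion_cost = price["completion"] * completion_tokens / million
    return prompt_cost + completion_cost
```

`Decimal` is used instead of `float` so that small per-token prices do not accumulate rounding error when many requests are summed.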

Chinese-Mixtral-8x7B

Chinese-Mixtral-8x7B is an open-source project that expands the Chinese vocabulary of Mistral's Mixtral-8x7B model and continues its pre-training incrementally, aiming to advance research on MoE models in the Chinese natural language processing community. The expanded vocabulary significantly improves the model's encoding and decoding efficiency for Chinese, and incremental pre-training on a large-scale open-source corpus gives the model strong Chinese generation and comprehension capabilities. The project includes a large model with expanded Chinese vocabulary and incremental pre-training code.

mistral-inference

The Mistral Inference repository contains minimal code to run the 7B, 8x7B, and 8x22B models. It provides model download links, installation instructions, and usage guidelines for running models via the CLI or Python. The repository also includes information on guardrailing, model platforms, deployment, and references. Users can interact with models through the `mistral-demo` and `mistral-chat` commands, together with the mistral-common library. Mistral AI models support function calling and chat interactions, and the tooling covers tasks such as testing models, chatting with models, and using Codestral as a coding assistant. The repository offers detailed documentation and links to blogs for further information.

PolyMind

PolyMind is a multimodal, function calling powered LLM webui designed for various tasks such as internet searching, image generation, port scanning, Wolfram Alpha integration, Python interpretation, and semantic search. It offers a plugin system for adding extra functions and supports different models and endpoints. The tool allows users to interact via function calling and provides features like image input, image generation, and text file search. The application's configuration is stored in a `config.json` file with options for backend selection, compatibility mode, IP address settings, API key, and enabled features.

LLaMa2lang

This repository contains convenience scripts to fine-tune LLaMa3-8B (or any other foundation model) for chat in any language other than English. The rationale is that LLaMa3 is trained primarily on English data, and while it works to some extent for other languages, its performance is poor compared to English.