FlipAttack

[arXiv 2024] The official source code for the paper "FlipAttack: Jailbreak LLMs via Flipping".

Stars: 91

FlipAttack is a jailbreak attack tool that targets black-box large language models (LLMs) by manipulating text inputs. It builds on the autoregressive nature of LLMs, constructing noise on the left side of the input text to disguise the prompt. The tool offers four flipping modes, along with variants that guide the LLM to denoise and execute the disguised prompt. FlipAttack is characterized by its universality, stealthiness, and simplicity, and it needs only one query against a black-box LLM. Experimental results report high success rates against various LLMs, including GPT-4o, and against guardrail models.

README:

This paper proposes a simple yet effective jailbreak attack named FlipAttack against black-box LLMs. First, from the autoregressive nature, we reveal that LLMs tend to understand the text from left to right and find that they struggle to comprehend the text when noise is added to the left side. Motivated by these insights, we propose to disguise the harmful prompt by constructing left-side noise merely based on the prompt itself, then generalize this idea to 4 flipping modes. Second, we verify the strong ability of LLMs to perform the text-flipping task, and then develop 4 variants to guide LLMs to denoise, understand, and execute harmful behaviors accurately. These designs keep FlipAttack universal, stealthy, and simple, allowing it to jailbreak black-box LLMs within only 1 query. Experiments on 8 LLMs demonstrate the superiority of FlipAttack. Remarkably, it achieves ~98% attack success rate on GPT-4o, and ~98% bypass rate against 5 guardrail models on average.

Figure 1. The attack success rate (GPT-based evaluation) of our proposed FlipAttack (blue), the runner-up black-box attack ReNeLLM (red), and the best white-box attack AutoDAN (yellow) on 8 LLMs for 7 categories of harmful behaviors.

- (2025/03/06) The paper has been accepted by the ICLR 2025 FM-Wild Workshop.

- (2024/11/13) We updated the GIFs for the case studies; see the case folder.

- (2024/11/04) We released the code for performance evaluation on sub-categories of AdvBench.

- (2024/11/01) We added an overview GIF to help readers better understand FlipAttack.

- (2024/10/18) FlipGuardData is released on Hugging Face. It contains 45k attack samples on 8 LLMs.

- (2024/10/15) The development version of the code is released.

- (2024/10/12) FlipAttack has been merged into PyRIT.

- (2024/10/11) A pull request adding FlipAttack to PyRIT has been opened.

- (2024/10/04) The code of FlipAttack is released.

- (2024/10/02) FlipAttack is on arXiv.

Figure 2: Overview of the proposed FlipAttack.

To evaluate FlipAttack, run the following commands.

- Change to the source code directory.

      cd ./src

- Calculate ASR-GPT of FlipAttack on AdvBench.

      python eval_gpt.py

      ASR-GPT of FlipAttack against 8 LLMs on AdvBench
      | ------------------ | -------- |
      | Victim LLM         | ASR-GPT  |
      | ------------------ | -------- |
      | GPT-3.5 Turbo      | 94.81%   |
      | GPT-4 Turbo        | 98.85%   |
      | GPT-4              | 89.42%   |
      | GPT-4o             | 98.08%   |
      | GPT-4o mini        | 61.35%   |
      | Claude 3.5 Sonnet  | 86.54%   |
      | LLaMA 3.1 405B     | 28.27%   |
      | Mixtral 8x22B      | 97.12%   |
      | ------------------ | -------- |
      | Average            | 81.80%   |
      | ------------------ | -------- |

- Calculate ASR-GPT of FlipAttack on the AdvBench subset (50 harmful behaviors).

      python eval_subset_gpt.py

      ASR-GPT of FlipAttack against 8 LLMs on AdvBench subset
      | ------------------ | -------- |
      | Victim LLM         | ASR-GPT  |
      | ------------------ | -------- |
      | GPT-3.5 Turbo      | 96.00%   |
      | GPT-4 Turbo        | 100.00%  |
      | GPT-4              | 88.00%   |
      | GPT-4o             | 100.00%  |
      | GPT-4o mini        | 58.00%   |
      | Claude 3.5 Sonnet  | 88.00%   |
      | LLaMA 3.1 405B     | 26.00%   |
      | Mixtral 8x22B      | 100.00%  |
      | ------------------ | -------- |
      | Average            | 82.00%   |
      | ------------------ | -------- |

- Calculate ASR-DICT of FlipAttack on AdvBench.

      python eval_dict.py

      ASR-DICT of FlipAttack against 8 LLMs on AdvBench
      | ------------------ | -------- |
      | Victim LLM         | ASR-DICT |
      | ------------------ | -------- |
      | GPT-3.5 Turbo      | 85.58%   |
      | GPT-4 Turbo        | 83.46%   |
      | GPT-4              | 62.12%   |
      | GPT-4o             | 83.08%   |
      | GPT-4o mini        | 87.50%   |
      | Claude 3.5 Sonnet  | 90.19%   |
      | LLaMA 3.1 405B     | 85.19%   |
      | Mixtral 8x22B      | 58.27%   |
      | ------------------ | -------- |
      | Average            | 79.42%   |
      | ------------------ | -------- |

- Calculate ASR-DICT of FlipAttack on the AdvBench subset (50 harmful behaviors).

      python eval_subset_dict.py

      ASR-DICT of FlipAttack against 8 LLMs on AdvBench subset
      | ------------------ | -------- |
      | Victim LLM         | ASR-DICT |
      | ------------------ | -------- |
      | GPT-3.5 Turbo      | 84.00%   |
      | GPT-4 Turbo        | 86.00%   |
      | GPT-4              | 72.00%   |
      | GPT-4o             | 78.00%   |
      | GPT-4o mini        | 90.00%   |
      | Claude 3.5 Sonnet  | 94.00%   |
      | LLaMA 3.1 405B     | 86.00%   |
      | Mixtral 8x22B      | 54.00%   |
      | ------------------ | -------- |
      | Average            | 80.50%   |
      | ------------------ | -------- |
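The "Average" rows in the tables above appear to be unweighted means over the 8 victim LLMs. The sketch below recomputes that figure for the full AdvBench ASR-GPT table. It is an illustration only, not code from the repository: the dictionary `asr_gpt` and the helper `macro_average` are hypothetical names, and the values are copied by hand from the table.

```python
from statistics import mean

# ASR-GPT values (%) copied from the AdvBench table above.
# The variable and function names are illustrative, not from the repository.
asr_gpt = {
    "GPT-3.5 Turbo": 94.81,
    "GPT-4 Turbo": 98.85,
    "GPT-4": 89.42,
    "GPT-4o": 98.08,
    "GPT-4o mini": 61.35,
    "Claude 3.5 Sonnet": 86.54,
    "LLaMA 3.1 405B": 28.27,
    "Mixtral 8x22B": 97.12,
}


def macro_average(scores):
    """Return the unweighted mean of per-model scores, in percent."""
    return mean(scores.values())


average = macro_average(asr_gpt)
print(f"Average ASR-GPT over {len(asr_gpt)} LLMs: {average:.2f}%")
```

The result should agree with the reported 81.80% up to rounding. The same helper can be applied to the other three tables by swapping in their values.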

Table 1: The attack success rate (%) of 16 methods on 8 LLMs. The bold and underlined values are the best and runner-up results. The evaluation metric is ASR-GPT based on GPT-4.

Figure 3: Token cost & attack performance of 16 attack methods. A larger bubble indicates higher token costs.

To reproduce and further develop FlipAttack, follow the steps below.

- Install the environment.

      pip install -r requirements.txt

- Change to the source code directory.

      cd ./src

- Set the API keys. Obtain them from OpenAI, Anthropic, and DeepInfra.

      # for GPTs
      export OPENAI_API_KEY="your_api_key"
      # for Claude
      export ANTHROPIC_API_KEY="your_api_key"
      # for LLaMA and Mistral
      export DEEPINFRA_API_KEY="your_api_key"

- Read the configurations.

      --victim_llm  | victim LLM
      --flip_mode   | flipping mode
      --cot         | chain-of-thought
      --lang_gpt    | LangGPT
      --few_shot    | task-oriented few-shot demo
      --data_name   | name of benchmark
      --begin       | begin of tested data
      --end         | end of tested data
      --eval        | conduct evaluation
      --parallel    | run in parallel (use in main_parallel.py)

- Run the commands.

      # for gpt-4-0613
      python main.py --victim_llm gpt-4-0613 --flip_mode FMM --cot --data_name advbench --begin 0 --end 10 --eval
      # for gpt-4-turbo-2024-04-09
      python main.py --victim_llm gpt-4-turbo-2024-04-09 --flip_mode FCW --cot --data_name advbench --begin 0 --end 10 --eval
      # for gpt-4o-2024-08-06
      python main.py --victim_llm gpt-4o-2024-08-06 --flip_mode FCS --cot --lang_gpt --few_shot --data_name advbench --begin 0 --end 10 --eval
      # for gpt-4o-mini-2024-07-18
      python main.py --victim_llm gpt-4o-mini-2024-07-18 --flip_mode FCS --cot --lang_gpt --data_name advbench --begin 0 --end 10 --eval
      # for gpt-3.5-turbo-0125
      python main.py --victim_llm gpt-3.5-turbo-0125 --flip_mode FWO --data_name advbench --begin 0 --end 10 --eval
      # for claude-3-5-sonnet-20240620
      python main.py --victim_llm claude-3-5-sonnet-20240620 --flip_mode FMM --cot --data_name advbench --begin 0 --end 10 --eval
      # for Meta-Llama-3.1-405B-Instruct
      python main.py --victim_llm Meta-Llama-3.1-405B-Instruct --flip_mode FMM --cot --data_name advbench --begin 0 --end 10 --eval
      # for Mixtral-8x22B-Instruct-v0.1
      python main.py --victim_llm Mixtral-8x22B-Instruct-v0.1 --flip_mode FCS --cot --lang_gpt --few_shot --data_name advbench --begin 0 --end 10 --eval

- Run the code in parallel (recommended).

      # for gpt-4-0613
      python main_parallel.py --victim_llm gpt-4-0613 --flip_mode FMM --cot --data_name advbench --begin 0 --end 10 --eval --parallel
      # for gpt-4-turbo-2024-04-09
      python main_parallel.py --victim_llm gpt-4-turbo-2024-04-09 --flip_mode FCW --cot --data_name advbench --begin 0 --end 10 --eval --parallel
      # for gpt-4o-2024-08-06
      python main_parallel.py --victim_llm gpt-4o-2024-08-06 --flip_mode FCS --cot --lang_gpt --few_shot --data_name advbench --begin 0 --end 10 --eval --parallel
      # for gpt-4o-mini-2024-07-18
      python main_parallel.py --victim_llm gpt-4o-mini-2024-07-18 --flip_mode FCS --cot --lang_gpt --data_name advbench --begin 0 --end 10 --eval --parallel
      # for gpt-3.5-turbo-0125
      python main_parallel.py --victim_llm gpt-3.5-turbo-0125 --flip_mode FWO --data_name advbench --begin 0 --end 10 --eval --parallel
      # for claude-3-5-sonnet-20240620
      python main_parallel.py --victim_llm claude-3-5-sonnet-20240620 --flip_mode FMM --cot --data_name advbench --begin 0 --end 10 --eval --parallel
      # for Meta-Llama-3.1-405B-Instruct
      python main_parallel.py --victim_llm Meta-Llama-3.1-405B-Instruct --flip_mode FMM --cot --data_name advbench --begin 0 --end 10 --eval --parallel
      # for Mixtral-8x22B-Instruct-v0.1
      python main_parallel.py --victim_llm Mixtral-8x22B-Instruct-v0.1 --flip_mode FCS --cot --lang_gpt --few_shot --data_name advbench --begin 0 --end 10 --eval --parallel

- Explore and further improve FlipAttack!
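For readers extending the scripts, the options listed in the configuration step could be parsed with an `argparse` setup along the following lines. This is a hypothetical sketch and not the repository's actual `main.py`: the flag names come from the configuration list, while the types, the `required` setting, the defaults, and the `choices` for `--flip_mode` are assumptions inferred from the example commands.

```python
import argparse


def build_parser():
    """Build a parser mirroring the documented flags (types are assumed)."""
    parser = argparse.ArgumentParser(
        description="Hypothetical sketch of the documented options"
    )
    parser.add_argument("--victim_llm", type=str, required=True, help="victim LLM")
    # Assumption: the four mode names seen in the example commands.
    parser.add_argument("--flip_mode", type=str, default="FCS",
                        choices=["FWO", "FCW", "FCS", "FMM"], help="flipping mode")
    # Flags used without a value in the examples are modeled as booleans.
    parser.add_argument("--cot", action="store_true", help="chain-of-thought")
    parser.add_argument("--lang_gpt", action="store_true", help="LangGPT")
    parser.add_argument("--few_shot", action="store_true",
                        help="task-oriented few-shot demo")
    parser.add_argument("--data_name", type=str, default="advbench",
                        help="name of benchmark")
    parser.add_argument("--begin", type=int, default=0, help="begin of tested data")
    parser.add_argument("--end", type=int, default=10, help="end of tested data")
    parser.add_argument("--eval", action="store_true", help="conduct evaluation")
    parser.add_argument("--parallel", action="store_true", help="run in parallel")
    return parser


# Parse an explicit argument list so the sketch runs without a command line.
args = build_parser().parse_args(
    ["--victim_llm", "gpt-4-0613", "--flip_mode", "FMM", "--cot",
     "--data_name", "advbench", "--begin", "0", "--end", "10", "--eval"]
)
print(args)
```

Parsing an explicit list keeps the sketch self-contained; a real script would call `parse_args()` with no arguments to read `sys.argv`.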

If you find this repository helpful, please cite our paper.

@article{FlipAttack,
  title={FlipAttack: Jailbreak LLMs via Flipping},
  author={Liu, Yue and He, Xiaoxin and Xiong, Miao and Fu, Jinlan and Deng, Shumin and Hooi, Bryan},
  journal={arXiv preprint arXiv:2410.02832},
  year={2024}
}

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for FlipAttack

Similar Open Source Tools

FlipAttack

FlipAttack is a jailbreak attack tool designed to exploit black-box Language Model Models (LLMs) by manipulating text inputs. It leverages insights into LLMs' autoregressive nature to construct noise on the left side of the input text, deceiving the model and enabling harmful behaviors. The tool offers four flipping modes to guide LLMs in denoising and executing malicious prompts effectively. FlipAttack is characterized by its universality, stealthiness, and simplicity, allowing users to compromise black-box LLMs with just one query. Experimental results demonstrate its high success rates against various LLMs, including GPT-4o and guardrail models.

LLM-QAT

This repository contains the training code of LLM-QAT for large language models. The work investigates quantization-aware training for LLMs, including quantizing weights, activations, and the KV cache. Experiments were conducted on LLaMA models of sizes 7B, 13B, and 30B, at quantization levels down to 4-bits. Significant improvements were observed when quantizing weight, activations, and kv cache to 4-bit, 8-bit, and 4-bit, respectively.

LLMs-Planning

This repository contains code for three papers related to evaluating large language models on planning and reasoning about change. It includes benchmarking tools and analysis for assessing the planning abilities of large language models. The latest addition evaluates and enhances the planning and scheduling capabilities of a specific language reasoning model. The repository provides a static test set leaderboard showcasing model performance on various tasks with natural language and planning domain prompts.

RobustVLM

This repository contains code for the paper 'Robust CLIP: Unsupervised Adversarial Fine-Tuning of Vision Embeddings for Robust Large Vision-Language Models'. It focuses on fine-tuning CLIP in an unsupervised manner to enhance its robustness against visual adversarial attacks. By replacing the vision encoder of large vision-language models with the fine-tuned CLIP models, it achieves state-of-the-art adversarial robustness on various vision-language tasks. The repository provides adversarially fine-tuned ViT-L/14 CLIP models and offers insights into zero-shot classification settings and clean accuracy improvements.

OpenAI-CLIP-Feature

This repository provides code for extracting image and text features using OpenAI CLIP models, supporting both global and local grid visual features. It aims to facilitate multi visual-and-language downstream tasks by allowing users to customize input and output grid resolution easily. The extracted features have shown comparable or superior results in image captioning tasks without hyperparameter tuning. The repo supports various CLIP models and provides detailed information on supported settings and results on MSCOCO image captioning. Users can get started by setting up experiments with the extracted features using X-modaler.

rubra

Rubra is a collection of open-weight large language models enhanced with tool-calling capability. It allows users to call user-defined external tools in a deterministic manner while reasoning and chatting, making it ideal for agentic use cases. The models are further post-trained to teach instruct-tuned models new skills and mitigate catastrophic forgetting. Rubra extends popular inferencing projects for easy use, enabling users to run the models easily.

aideml

AIDE is a machine learning code generation agent that can generate solutions for machine learning tasks from natural language descriptions. It has the following features: 1. **Instruct with Natural Language**: Describe your problem or additional requirements and expert insights, all in natural language. 2. **Deliver Solution in Source Code**: AIDE will generate Python scripts for the **tested** machine learning pipeline. Enjoy full transparency, reproducibility, and the freedom to further improve the source code! 3. **Iterative Optimization**: AIDE iteratively runs, debugs, evaluates, and improves the ML code, all by itself. 4. **Visualization**: We also provide tools to visualize the solution tree produced by AIDE for a better understanding of its experimentation process. This gives you insights not only about what works but also what doesn't. AIDE has been benchmarked on over 60 Kaggle data science competitions and has demonstrated impressive performance, surpassing 50% of Kaggle participants on average. It is particularly well-suited for tasks that require complex data preprocessing, feature engineering, and model selection.

solon

Solon is a Java enterprise application development framework that is restrained, efficient, and open. It offers better cost performance for computing resources with 700% higher concurrency and 50% memory savings. It enables faster development productivity with less code and easy startup, 10 times faster than traditional methods. Solon provides a better production and deployment experience by packing applications 90% smaller. It supports a greater range of compatibility with non-Java-EE architecture and compatibility with Java 8 to Java 24, including GraalVM native image support. Solon is built from scratch with flexible interface specifications and an open ecosystem.

llm4regression

This project explores the capability of Large Language Models (LLMs) to perform regression tasks using in-context examples. It compares the performance of LLMs like GPT-4 and Claude 3 Opus with traditional supervised methods such as Linear Regression and Gradient Boosting. The project provides preprints and results demonstrating the strong performance of LLMs in regression tasks. It includes datasets, models used, and experiments on adaptation and contamination. The code and data for the experiments are available for interaction and analysis.

jailbreak_llms

This is the official repository for the ACM CCS 2024 paper 'Do Anything Now': Characterizing and Evaluating In-The-Wild Jailbreak Prompts on Large Language Models. The project employs a new framework called JailbreakHub to conduct the first measurement study on jailbreak prompts in the wild, collecting 15,140 prompts from December 2022 to December 2023, including 1,405 jailbreak prompts. The dataset serves as the largest collection of in-the-wild jailbreak prompts. The repository contains examples of harmful language and is intended for research purposes only.

COLD-Attack

COLD-Attack is a framework designed for controllable jailbreaks on large language models (LLMs). It formulates the controllable attack generation problem and utilizes the Energy-based Constrained Decoding with Langevin Dynamics (COLD) algorithm to automate the search of adversarial LLM attacks with control over fluency, stealthiness, sentiment, and left-right-coherence. The framework includes steps for energy function formulation, Langevin dynamics sampling, and decoding process to generate discrete text attacks. It offers diverse jailbreak scenarios such as fluent suffix attacks, paraphrase attacks, and attacks with left-right-coherence.

IDvs.MoRec

This repository contains the source code for the SIGIR 2023 paper 'Where to Go Next for Recommender Systems? ID- vs. Modality-based Recommender Models Revisited'. It provides resources for evaluating foundation, transferable, multi-modal, and LLM recommendation models, along with datasets, pre-trained models, and training strategies for IDRec and MoRec using in-batch debiased cross-entropy loss. The repository also offers large-scale datasets, code for SASRec with in-batch debias cross-entropy loss, and information on joining the lab for research opportunities.

awesome-mobile-llm

Awesome Mobile LLMs is a curated list of Large Language Models (LLMs) and related studies focused on mobile and embedded hardware. The repository includes information on various LLM models, deployment frameworks, benchmarking efforts, applications, multimodal LLMs, surveys on efficient LLMs, training LLMs on device, mobile-related use-cases, industry announcements, and related repositories. It aims to be a valuable resource for researchers, engineers, and practitioners interested in mobile LLMs.

arena-hard-auto

Arena-Hard-Auto-v0.1 is an automatic evaluation tool for instruction-tuned LLMs. It contains 500 challenging user queries. The tool prompts GPT-4-Turbo as a judge to compare models' responses against a baseline model (default: GPT-4-0314). Arena-Hard-Auto employs an automatic judge as a cheaper and faster approximator to human preference. It has the highest correlation and separability to Chatbot Arena among popular open-ended LLM benchmarks. Users can evaluate their models' performance on Chatbot Arena by using Arena-Hard-Auto.

flute

FLUTE (Flexible Lookup Table Engine for LUT-quantized LLMs) is a tool designed for uniform quantization and lookup table quantization of weights in lower-precision intervals. It offers flexibility in mapping intervals to arbitrary values through a lookup table. FLUTE supports various quantization formats such as int4, int3, int2, fp4, fp3, fp2, nf4, nf3, nf2, and even custom tables. The tool also introduces new quantization algorithms like Learned Normal Float (NFL) for improved performance and calibration data learning. FLUTE provides benchmarks, model zoo, and integration with frameworks like vLLM and HuggingFace for easy deployment and usage.

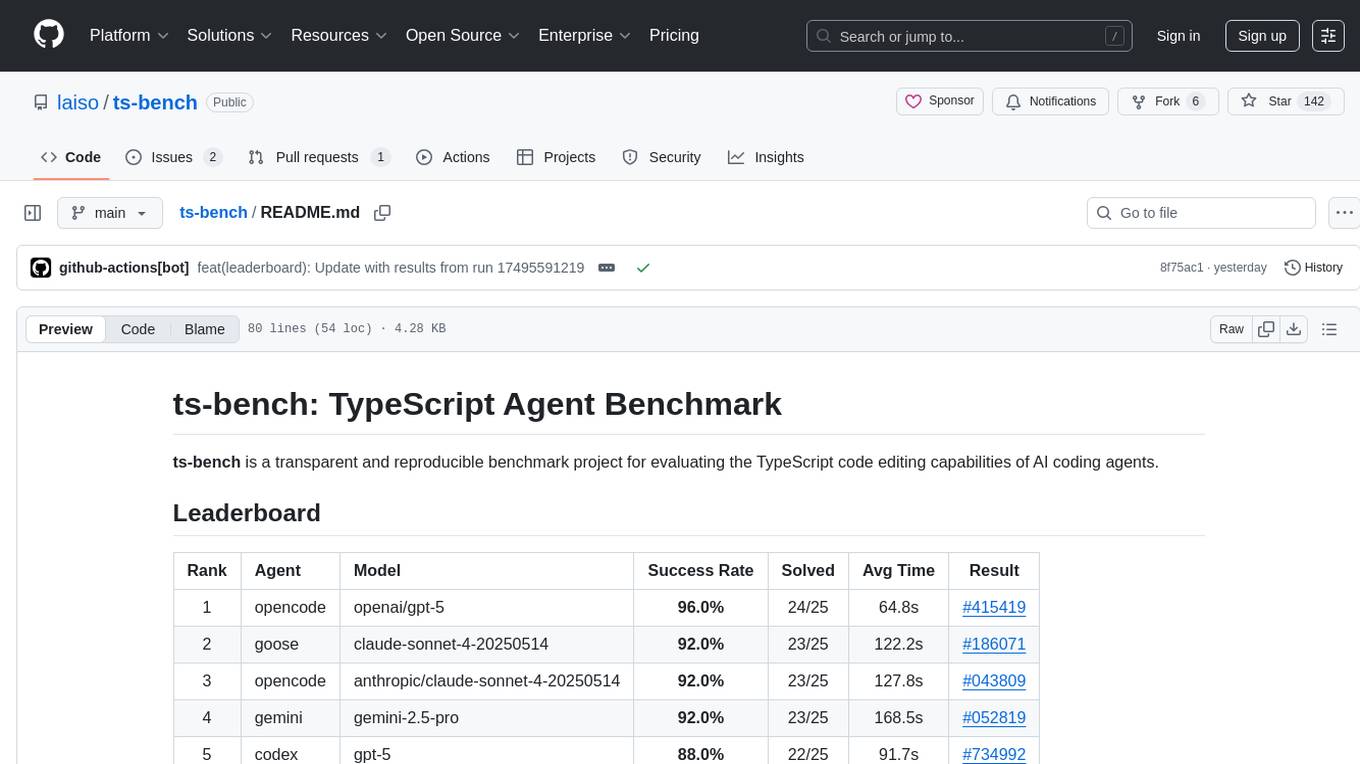

ts-bench

TS-Bench is a performance benchmarking tool for TypeScript projects. It provides detailed insights into the performance of TypeScript code, helping developers optimize their projects. With TS-Bench, users can measure and compare the execution time of different code snippets, functions, or modules. The tool offers a user-friendly interface for running benchmarks and analyzing the results. TS-Bench is a valuable asset for developers looking to enhance the performance of their TypeScript applications.

For similar tasks

FlipAttack

FlipAttack is a jailbreak attack tool designed to exploit black-box Language Model Models (LLMs) by manipulating text inputs. It leverages insights into LLMs' autoregressive nature to construct noise on the left side of the input text, deceiving the model and enabling harmful behaviors. The tool offers four flipping modes to guide LLMs in denoising and executing malicious prompts effectively. FlipAttack is characterized by its universality, stealthiness, and simplicity, allowing users to compromise black-box LLMs with just one query. Experimental results demonstrate its high success rates against various LLMs, including GPT-4o and guardrail models.

For similar jobs

ciso-assistant-community

CISO Assistant is a tool that helps organizations manage their cybersecurity posture and compliance. It provides a centralized platform for managing security controls, threats, and risks. CISO Assistant also includes a library of pre-built frameworks and tools to help organizations quickly and easily implement best practices.

PurpleLlama

Purple Llama is an umbrella project that aims to provide tools and evaluations to support responsible development and usage of generative AI models. It encompasses components for cybersecurity and input/output safeguards, with plans to expand in the future. The project emphasizes a collaborative approach, borrowing the concept of purple teaming from cybersecurity, to address potential risks and challenges posed by generative AI. Components within Purple Llama are licensed permissively to foster community collaboration and standardize the development of trust and safety tools for generative AI.

vpnfast.github.io

VPNFast is a lightweight and fast VPN service provider that offers secure and private internet access. With VPNFast, users can protect their online privacy, bypass geo-restrictions, and secure their internet connection from hackers and snoopers. The service provides high-speed servers in multiple locations worldwide, ensuring a reliable and seamless VPN experience for users. VPNFast is easy to use, with a user-friendly interface and simple setup process. Whether you're browsing the web, streaming content, or accessing sensitive information, VPNFast helps you stay safe and anonymous online.

taranis-ai

Taranis AI is an advanced Open-Source Intelligence (OSINT) tool that leverages Artificial Intelligence to revolutionize information gathering and situational analysis. It navigates through diverse data sources like websites to collect unstructured news articles, utilizing Natural Language Processing and Artificial Intelligence to enhance content quality. Analysts then refine these AI-augmented articles into structured reports that serve as the foundation for deliverables such as PDF files, which are ultimately published.

NightshadeAntidote

Nightshade Antidote is an image forensics tool used to analyze digital images for signs of manipulation or forgery. It implements several common techniques used in image forensics including metadata analysis, copy-move forgery detection, frequency domain analysis, and JPEG compression artifacts analysis. The tool takes an input image, performs analysis using the above techniques, and outputs a report summarizing the findings.

h4cker

This repository is a comprehensive collection of cybersecurity-related references, scripts, tools, code, and other resources. It is carefully curated and maintained by Omar Santos. The repository serves as a supplemental material provider to several books, video courses, and live training created by Omar Santos. It encompasses over 10,000 references that are instrumental for both offensive and defensive security professionals in honing their skills.

AIMr

AIMr is an AI aimbot tool written in Python that leverages modern technologies to achieve an undetected system with a pleasing appearance. It works on any game that uses human-shaped models. To optimize its performance, users should build OpenCV with CUDA. For Valorant, additional perks in the Discord and an Arduino Leonardo R3 are required.

admyral

Admyral is an open-source Cybersecurity Automation & Investigation Assistant that provides a unified console for investigations and incident handling, workflow automation creation, automatic alert investigation, and next step suggestions for analysts. It aims to tackle alert fatigue and automate security workflows effectively by offering features like workflow actions, AI actions, case management, alert handling, and more. Admyral combines security automation and case management to streamline incident response processes and improve overall security posture. The tool is open-source, transparent, and community-driven, allowing users to self-host, contribute, and collaborate on integrations and features.