screeps-starter-rust

Starter Rust AI for Screeps, the JavaScript-based MMO game

Stars: 121

screeps-starter-rust is a Rust AI starter kit for Screeps: World, a JavaScript-based MMO game. It utilizes the screeps-game-api bindings from the rustyscreeps organization and wasm-pack for building Rust code to WebAssembly. The example includes Rollup for bundling javascript, Babel for transpiling code, and screeps-api Node.js package for deployment. Users can refer to the Rust version of game APIs documentation at https://docs.rs/screeps-game-api/. The tool supports most crates on crates.io, except those interacting with OS APIs.

README:

Starter Rust AI for Screeps: World, the JavaScript-based MMO game.

This uses the screeps-game-api bindings from the rustyscreeps organization.

wasm-pack is used for building the Rust code to WebAssembly. This example uses Rollup to

bundle the resulting javascript, Babel to transpile generated code for compatibility with older

Node.js versions running on the Screeps servers, and the screeps-api Node.js package to deploy.

Documentation for the Rust version of the game APIs is at https://docs.rs/screeps-game-api/.

Almost all crates on https://crates.io/ are usable (only things which interact with OS apis are broken).

# Install rustup: https://rustup.rs/

# Install wasm-pack

cargo install wasm-pack

# Install wasm-opt

cargo install wasm-opt

# Install Node.js for build steps - versions 16 through 22 have been tested, any should work

# nvm is recommended but not required to manage the install, follow instructions at:

# Mac/Linux: https://github.com/nvm-sh/nvm

# Windows: https://github.com/coreybutler/nvm-windows

# Installs Node.js at version 20

# (all versions within LTS support should work;

# 20 is recommended due to some observed problems on Windows systems using 22)

nvm install 20

nvm use 20

# Clone the starter

git clone https://github.com/rustyscreeps/screeps-starter-rust.git

cd screeps-starter-rust

# note: if you customize the name of the crate, you'll need to update the MODULE_NAME

# variable in the js_src/main.js file and the module import with the updated name, as well

# as the "name" in the package.json

# Install dependencies for JS build

npm install

# Copy the example config, and set up at least one deployment mode.

cp .example-screeps.yaml .screeps.yaml

nano .screeps.yaml

# compile for a configured server but don't upload

npm run deploy -- --server ptr --dryrun

# compile and upload to a configured server

npm run deploy -- --server mmoVersions of screeps-game-api at 0.22 or higher are no longer compatible with the

cargo-screeps tool for building and deployment; the transpile step being handled by Babel is

required to transform the generated JS into code that the game servers can load.

To migrate an existing bot to using the new JavaScript translation layer and deploy script:

- Create a

.screeps.yamlwith the relevant settings from yourscreeps.tomlfile applied to the new.example-screeps.yamlexample file in this repo. - Add to your

.gitignore:.screeps.yaml,node_modules, anddist - Create a

package.jsoncopied from the one in this repo and make appropriate customizations.- Importantly, if you've modified your module name from

screeps-starter-rustto something else, you need to update thenamefield inpackage.jsonto be your bot's name.

- Importantly, if you've modified your module name from

- Install Node.js (from the quickstart steps above), then run

npm installfrom within the bot directory to install the required packages. - Copy the

deploy.jsscript over to a newjs_toolsdirectory. - Add

main.jsto a newjs_srcdirectory, either moved from your existingjavascriptdir and updated, or freshly copied.- If updating, you'll need to change:

- Import formatting, particularly for the wasm module.

- wasm module initialization has changed, requiring two calls to first compile the module, then to initialize the instance of the module.

- Whether updating or copying fresh, if you've modified your bot name from

screeps-starter-rustyou'll need to update the bot package import andMODULE_NAMEat the beginning ofmain.jsto be your updated bot name.

- If updating, you'll need to change:

- Update your

Cargo.tomlwith version0.22forscreeps-game-api - Run

npm run deploy -- --server ptr --dryrunto compile for PTR, remove the--dryrunto deploy

If you encounter an error like the following:

Error: Not Authorized

at ScreepsAPI.req (PATH_TO_YOUR_BOT/node_modules/screeps-api/dist/ScreepsAPI.js:1212:17)

at process.processTicksAndRejections (node:internal/process/task_queues:105:5)

at async ScreepsAPI.auth (PATH_TO_YOUR_BOT/node_modules/screeps-api/dist/ScreepsAPI.js:1162:17)

at async ScreepsAPI.fromConfig (PATH_TO_YOUR_BOT/node_modules/screeps-api/dist/ScreepsAPI.js:1394:9)

at async upload (PATH_TO_YOUR_BOT/js_tools/deploy.js:148:17)

at async run (PATH_TO_YOUR_BOT/js_tools/deploy.js:163:3

Then the password in your .screeps.yaml file is getting picked up as something aside from a string. Passwords sent to the server must be a string. Wrap it in quotes: password: "12345"

If you encounter an error like the following:

Error: Unknown module 'bot-name-here'

at Object.requireFn (<runtime>:20897:23)

at Object.module.exports.loop (main:933:33)

at __mainLoop:1:52

at __mainLoop:2:3

at Object.exports.evalCode (<runtime>:15381:76)

at Object.exports.run (<runtime>:20865:24)

You need to make sure you update your package.json name field to be your bot name.

If you encounter an error like the following:

CompileError: WebAssembly.Module(): Compiling wasm function #327:core::unicode::printable::check::h9ddbb57eb721c858 failed: Invalid opcode (enable with --experimental-wasm-se) @+257876

at Object.module.exports.loop (main:934:35)

at __mainLoop:1:52

at __mainLoop:2:3

at Object.exports.evalCode (<runtime>:15381:76)

at Object.exports.run (<runtime>:20865:24)

You need to update your Cargo.toml to include the --signext-lowering flag for wasm-opt. For example:

[package.metadata.wasm-pack.profile.release]

wasm-opt = ["-O4", "--signext-lowering"]

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for screeps-starter-rust

Similar Open Source Tools

screeps-starter-rust

screeps-starter-rust is a Rust AI starter kit for Screeps: World, a JavaScript-based MMO game. It utilizes the screeps-game-api bindings from the rustyscreeps organization and wasm-pack for building Rust code to WebAssembly. The example includes Rollup for bundling javascript, Babel for transpiling code, and screeps-api Node.js package for deployment. Users can refer to the Rust version of game APIs documentation at https://docs.rs/screeps-game-api/. The tool supports most crates on crates.io, except those interacting with OS APIs.

hash

HASH is a self-building, open-source database which grows, structures and checks itself. With it, we're creating a platform for decision-making, which helps you integrate, understand and use data in a variety of different ways.

desktop

ComfyUI Desktop is a packaged desktop application that allows users to easily use ComfyUI with bundled features like ComfyUI source code, ComfyUI-Manager, and uv. It automatically installs necessary Python dependencies and updates with stable releases. The app comes with Electron, Chromium binaries, and node modules. Users can store ComfyUI files in a specified location and manage model paths. The tool requires Python 3.12+ and Visual Studio with Desktop C++ workload for Windows. It uses nvm to manage node versions and yarn as the package manager. Users can install ComfyUI and dependencies using comfy-cli, download uv, and build/launch the code. Troubleshooting steps include rebuilding modules and installing missing libraries. The tool supports debugging in VSCode and provides utility scripts for cleanup. Crash reports can be sent to help debug issues, but no personal data is included.

llm-ollama

LLM-ollama is a plugin that provides access to models running on an Ollama server. It allows users to query the Ollama server for a list of models, register them with LLM, and use them for prompting, chatting, and embedding. The plugin supports image attachments, embeddings, JSON schemas, async models, model aliases, and model options. Users can interact with Ollama models through the plugin in a seamless and efficient manner.

HuggingFaceGuidedTourForMac

HuggingFaceGuidedTourForMac is a guided tour on how to install optimized pytorch and optionally Apple's new MLX, JAX, and TensorFlow on Apple Silicon Macs. The repository provides steps to install homebrew, pytorch with MPS support, MLX, JAX, TensorFlow, and Jupyter lab. It also includes instructions on running large language models using HuggingFace transformers. The repository aims to help users set up their Macs for deep learning experiments with optimized performance.

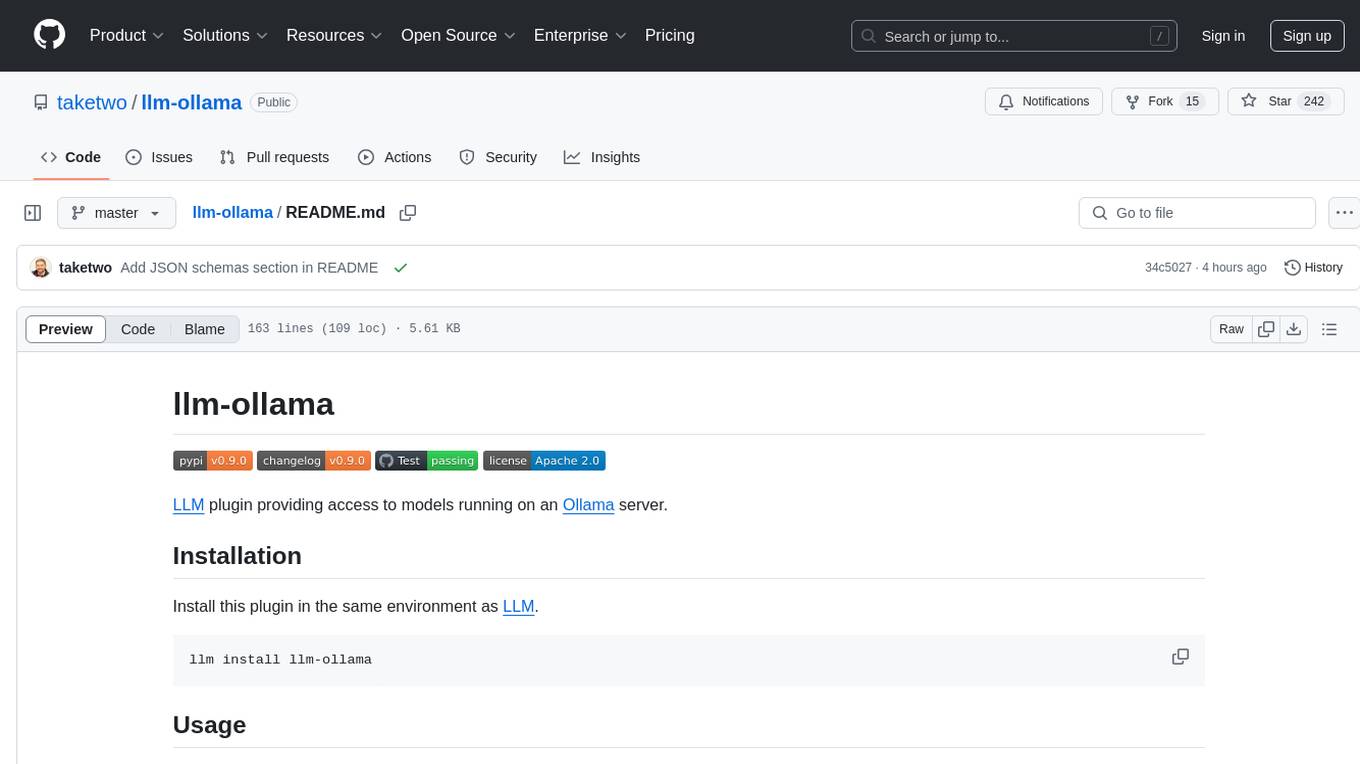

moly

Moly is an AI LLM client written in Rust, showcasing the capabilities of the Makepad UI toolkit and Project Robius, a framework for multi-platform application development in Rust. It is currently in beta, allowing users to build and run Moly on macOS, Linux, and Windows. The tool provides packaging support for different platforms, such as `.app`, `.dmg`, `.deb`, AppImage, pacman, and `.exe` (NSIS). Users can easily set up WasmEdge using `moly-runner` and leverage `cargo` commands to build and run Moly. Additionally, Moly offers pre-built releases for download and supports packaging for distribution on Linux, Windows, and macOS.

WindowsAgentArena

Windows Agent Arena (WAA) is a scalable Windows AI agent platform designed for testing and benchmarking multi-modal, desktop AI agents. It provides researchers and developers with a reproducible and realistic Windows OS environment for AI research, enabling testing of agentic AI workflows across various tasks. WAA supports deploying agents at scale using Azure ML cloud infrastructure, allowing parallel running of multiple agents and delivering quick benchmark results for hundreds of tasks in minutes.

vector-inference

This repository provides an easy-to-use solution for running inference servers on Slurm-managed computing clusters using vLLM. All scripts in this repository run natively on the Vector Institute cluster environment. Users can deploy models as Slurm jobs, check server status and performance metrics, and shut down models. The repository also supports launching custom models with specific configurations. Additionally, users can send inference requests and set up an SSH tunnel to run inference from a local device.

frontend

Nuclia frontend apps and libraries repository contains various frontend applications and libraries for the Nuclia platform. It includes components such as Dashboard, Widget, SDK, Sistema (design system), NucliaDB admin, CI/CD Deployment, and Maintenance page. The repository provides detailed instructions on installation, dependencies, and usage of these components for both Nuclia employees and external developers. It also covers deployment processes for different components and tools like ArgoCD for monitoring deployments and logs. The repository aims to facilitate the development, testing, and deployment of frontend applications within the Nuclia ecosystem.

ai-starter-kit

SambaNova AI Starter Kits is a collection of open-source examples and guides designed to facilitate the deployment of AI-driven use cases for developers and enterprises. The kits cover various categories such as Data Ingestion & Preparation, Model Development & Optimization, Intelligent Information Retrieval, and Advanced AI Capabilities. Users can obtain a free API key using SambaNova Cloud or deploy models using SambaStudio. Most examples are written in Python but can be applied to any programming language. The kits provide resources for tasks like text extraction, fine-tuning embeddings, prompt engineering, question-answering, image search, post-call analysis, and more.

vim-ollama

The 'vim-ollama' plugin for Vim adds Copilot-like code completion support using Ollama as a backend, enabling intelligent AI-based code completion and integrated chat support for code reviews. It does not rely on cloud services, preserving user privacy. The plugin communicates with Ollama via Python scripts for code completion and interactive chat, supporting Vim only. Users can configure LLM models for code completion tasks and interactive conversations, with detailed installation and usage instructions provided in the README.

web-codegen-scorer

Web Codegen Scorer is a tool designed to evaluate the quality of web code generated by Large Language Models (LLMs). It allows users to make evidence-based decisions related to AI-generated code by iterating on system prompts, comparing code quality from different models, and monitoring code quality over time. The tool focuses specifically on web code and offers various features such as configuring evaluations, specifying system instructions, using built-in checks for code quality, automatically repairing issues, and viewing results with an intuitive report viewer UI.

yoyak

Yoyak is a small CLI tool powered by LLM for summarizing and translating web pages. It provides shell completion scripts for bash, fish, and zsh. Users can set the model they want to use and summarize web pages with the 'yoyak summary' command. Additionally, translation to other languages is supported using the '-l' option with ISO 639-1 language codes. Yoyak supports various models for summarization and translation tasks.

nextjs-openai-doc-search

This starter project is designed to process `.mdx` files in the `pages` directory to use as custom context within OpenAI Text Completion prompts. It involves building a custom ChatGPT style doc search powered by Next.js, OpenAI, and Supabase. The project includes steps for pre-processing knowledge base, storing embeddings in Postgres, performing vector similarity search, and injecting content into OpenAI GPT-3 text completion prompt.

torchchat

torchchat is a codebase showcasing the ability to run large language models (LLMs) seamlessly. It allows running LLMs using Python in various environments such as desktop, server, iOS, and Android. The tool supports running models via PyTorch, chatting, generating text, running chat in the browser, and running models on desktop/server without Python. It also provides features like AOT Inductor for faster execution, running in C++ using the runner, and deploying and running on iOS and Android. The tool supports popular hardware and OS including Linux, Mac OS, Android, and iOS, with various data types and execution modes available.

termax

Termax is an LLM agent in your terminal that converts natural language to commands. It is featured by: - Personalized Experience: Optimize the command generation with RAG. - Various LLMs Support: OpenAI GPT, Anthropic Claude, Google Gemini, Mistral AI, and more. - Shell Extensions: Plugin with popular shells like `zsh`, `bash` and `fish`. - Cross Platform: Able to run on Windows, macOS, and Linux.

For similar tasks

screeps-starter-rust

screeps-starter-rust is a Rust AI starter kit for Screeps: World, a JavaScript-based MMO game. It utilizes the screeps-game-api bindings from the rustyscreeps organization and wasm-pack for building Rust code to WebAssembly. The example includes Rollup for bundling javascript, Babel for transpiling code, and screeps-api Node.js package for deployment. Users can refer to the Rust version of game APIs documentation at https://docs.rs/screeps-game-api/. The tool supports most crates on crates.io, except those interacting with OS APIs.

For similar jobs

DotRecast

DotRecast is a C# port of Recast & Detour, a navigation library used in many AAA and indie games and engines. It provides automatic navmesh generation, fast turnaround times, detailed customization options, and is dependency-free. Recast Navigation is divided into multiple modules, each contained in its own folder: - DotRecast.Core: Core utils - DotRecast.Recast: Navmesh generation - DotRecast.Detour: Runtime loading of navmesh data, pathfinding, navmesh queries - DotRecast.Detour.TileCache: Navmesh streaming. Useful for large levels and open-world games - DotRecast.Detour.Crowd: Agent movement, collision avoidance, and crowd simulation - DotRecast.Detour.Dynamic: Robust support for dynamic nav meshes combining pre-built voxels with dynamic objects which can be freely added and removed - DotRecast.Detour.Extras: Simple tool to import navmeshes created with A* Pathfinding Project - DotRecast.Recast.Toolset: All modules - DotRecast.Recast.Demo: Standalone, comprehensive demo app showcasing all aspects of Recast & Detour's functionality - Tests: Unit tests Recast constructs a navmesh through a multi-step mesh rasterization process: 1. First Recast rasterizes the input triangle meshes into voxels. 2. Voxels in areas where agents would not be able to move are filtered and removed. 3. The walkable areas described by the voxel grid are then divided into sets of polygonal regions. 4. The navigation polygons are generated by re-triangulating the generated polygonal regions into a navmesh. You can use Recast to build a single navmesh, or a tiled navmesh. Single meshes are suitable for many simple, static cases and are easy to work with. Tiled navmeshes are more complex to work with but better support larger, more dynamic environments. Tiled meshes enable advanced Detour features like re-baking, hierarchical path-planning, and navmesh data-streaming.

bots

The 'bots' repository is a collection of guides, tools, and example bots for programming bots to play video games. It provides resources on running bots live, installing the BotLab client, debugging bots, testing bots in simulated environments, and more. The repository also includes example bots for games like EVE Online, Tribal Wars 2, and Elvenar. Users can learn about developing bots for specific games, syntax of the Elm programming language, and tools for memory reading development. Additionally, there are guides on bot programming, contributing to BotLab, and exploring Elm syntax and core library.

Half-Life-Resurgence

Half-Life-Resurgence is a recreation and expansion project that brings NPCs, entities, and weapons from the Half-Life series into Garry's Mod. The goal is to faithfully recreate original content while also introducing new features and custom content envisioned by the community. Users can expect a wide range of NPCs with new abilities, AI behaviors, and weapons, as well as support for playing as any character and replacing NPCs in Half-Life 1 & 2 campaigns.

SwordCoastStratagems

Sword Coast Stratagems (SCS) is a mod that enhances Baldur's Gate games by adding over 130 optional components focused on improving monster AI, encounter difficulties, cosmetic enhancements, and ease-of-use tweaks. This repository serves as an archive for the project, with updates pushed only when new releases are made. It is not a collaborative project, and bug reports or suggestions should be made at the Gibberlings 3 forums. The mod is designed for offline workflow and should be downloaded from official releases.

LambsDanger

LAMBS Danger FSM is an open-source mod developed for Arma3, aimed at enhancing the AI behavior by integrating buildings into the tactical landscape, creating distinct AI states, and ensuring seamless compatibility with vanilla, ACE3, and modded assets. Originally created for the Norwegian gaming community, it is now available on Steam Workshop and GitHub for wider use. Users are allowed to customize and redistribute the mod according to their requirements. The project is licensed under the GNU General Public License (GPLv2) with additional amendments.

beehave

Beehave is a powerful addon for Godot Engine that enables users to create robust AI systems using behavior trees. It simplifies the design of complex NPC behaviors, challenging boss battles, and other advanced setups. Beehave allows for the creation of highly adaptive AI that responds to changes in the game world and overcomes unexpected obstacles, catering to both beginners and experienced developers. The tool is currently in development for version 3.0.

thinker

Thinker is an AI improvement mod for Alpha Centauri: Alien Crossfire that enhances single player challenge and gameplay with features like improved production/movement AI, visual changes on map rendering, more config options, resolution settings, and automation features. It includes Scient's patches and requires the GOG version of Alpha Centauri with the official Alien Crossfire patch version 2.0 installed. The mod provides additional DLL features developed in C++ for a richer gaming experience.

MobChip

MobChip is an all-in-one Entity AI and Bosses Library for Minecraft 1.13 and above. It simplifies the implementation of Minecraft's native entity AI into plugins, offering documentation, API usage, and utilities for ease of use. The library is flexible, using Reflection and Abstraction for modern functionality on older versions, and ensuring compatibility across multiple Minecraft versions. MobChip is open source, providing features like Bosses Library, Pathfinder Goals, Behaviors, Villager Gossip, Ender Dragon Phases, and more.