parsee-core

Retrieval of fully structured data made easy. Use LLMs or custom models. Specialized in PDFs and HTML files. Extensive support for tabular data extraction and multimodal queries.


Parsee AI is a high-level open source data extraction and structuring framework specialized for extracting data from the financial domain, though it can be used for other use cases as well. It aims to make the structuring of data from unstructured sources like PDFs, HTML files, and images as easy as possible. Parsee can be used locally in Python environments or through a hosted version for cloud-based jobs. It supports the extraction of tables, numbers, and other data elements, with the ability to create custom extraction templates and run jobs using different models.

README:

Parsee AI is a high-level open source data extraction and structuring framework created by SimFin. Our goal is to make the structuring of data from the most common sources of unstructured data (mainly PDFs, HTML files and images) as easy as possible.

Parsee can be used entirely from your local Python environment. We also provide a hosted version of Parsee, where you can edit and share your extraction templates and also run jobs in the cloud: app.parsee.ai.

Parsee is specialized for extracting data from the financial domain (tables, numbers etc.) but can be used for other use cases as well.

While Parsee has first-class support for LLMs, it is deliberately not limited to them: at least as of today, custom non-LLM models usually outperform LLMs in speed, accuracy and cost-efficiency, provided a large enough dataset is available. At SimFin, for example, we use Parsee to extract data from hundreds of documents daily, entirely without LLMs, as we have already built up a substantial dataset for our tasks.

For handling tables from PDFs and images properly, we also released our open source PDF table extraction package: https://github.com/parsee-ai/parsee-pdf-reader

Recommended install with poetry: https://python-poetry.org/docs/

poetry add parsee-core

Alternatively:

pip install parsee-core

Goal:

Given we have some invoices, we want to:

- extract the invoice total: not just the number, but also the currency attached to it.

- extract the issuer of the invoice

import os

from parsee.templates.helpers import StructuringItem, MetaItem, create_template

from parsee.extraction.models.helpers import *

from parsee.converters.main import load_document, from_text

from parsee.extraction.run import run_job_with_single_model

from parsee.utils.enums import *

question_to_be_answered = "What is the invoice total?"

output_type = OutputType.NUMERIC

meta_currency_question = "What is the currency?"

meta_currency_output_type = OutputType.LIST # we want the model to use a pre-defined item from a list, this is basically a classification

meta_currency_list_values = ["USD", "EUR", "Other"] # any list of strings can be used here

meta_item = MetaItem(meta_currency_question, meta_currency_output_type, list_values=meta_currency_list_values)

invoice_total = StructuringItem(question_to_be_answered, output_type, meta_info=[meta_item])

Let's also define an item for the issuer of the invoice:

invoice_issuer = StructuringItem("Who is the issuer of the invoice?", OutputType.ENTITY)

job_template = create_template([invoice_total, invoice_issuer])
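The list output type used for the currency above effectively turns the meta question into a classification over a fixed set of strings. As a rough illustration of that idea, independent of Parsee itself, the sketch below maps a raw answer onto one of the predefined values. The helper name `to_list_value` and the "n/a" fallback are assumptions made for this example; Parsee's own handling may differ.

```python
from typing import List


def to_list_value(raw_answer: str, list_values: List[str], fallback: str = "n/a") -> str:
    """Return the predefined value matching raw_answer (case-insensitive), else fallback."""
    normalized = raw_answer.strip().lower()
    for value in list_values:
        if value.lower() == normalized:
            return value
    return fallback


# Same list as meta_currency_list_values above.
currencies = ["USD", "EUR", "Other"]
print(to_list_value(" usd ", currencies))  # USD
print(to_list_value("GBP", currencies))    # n/a
```

Restricting answers to a closed list keeps downstream code simple, since every value can be compared with plain string equality.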

As an alternative to extraction templates, you can also run your own custom prompts; see tutorial 10 for more.

In the following we will use the Llama 3 model from Meta via Ollama:

ollama_model = ollama_config("llama3")

If you intend to use a model with multimodal capabilities, such as GPT-4 or Claude 3, you can enable multimodal queries by setting the multimodal setting to True. You can also specify the maximum number of images passed to the model and the maximum size of each image:

gpt_model = gpt_config(os.getenv("OPENAI_KEY"), None, openai_model_name="gpt-4-turbo", multimodal=True, max_images=3, max_image_size=2000)

or for Anthropic models:

anthropic_model = anthropic_config(os.getenv("ANTHROPIC_KEY"), "claude-3-opus-20240229", None, multimodal=True, max_images=1, max_image_size=800)

Parsee converts all data (strings, file contents etc.) to a standardized format; the class for this is called StandardDocumentFormat.

a) We can create a document directly from a string:

input_string = "The invoice total amounts to 12,5 Euros and is due on Feb 28th 2024. Invoice to: Some company LLC. Thanks for using the services of CloudCompany Inc."

document = from_text(input_string)

b) We can also load files and convert them into the StandardDocumentFormat with the converters included in Parsee. Let's use an actual PDF invoice now:

file_path = "../tests/fixtures/Midjourney_Invoice-DBD682ED-0005.pdf"

document = load_document(file_path)

_, _, answers_open_source_model = run_job_with_single_model(document, job_template, ollama_model)

If we look at the answers of the model we get the following:

answers_open_source_model[0].class_value

>> '11.9'

answers_open_source_model[0].meta[0].class_value

>> 'USD'
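To inspect all answers at once rather than indexing them one by one, a small loop over the result list is enough. The sketch below relies only on the two attributes shown above (`class_value` and `meta`). The `Answer` dataclass is a hypothetical stand-in so the snippet runs without Parsee installed; the real answer objects likely carry more fields, and the issuer string is a placeholder rather than actual model output.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Answer:
    # Hypothetical stand-in mirroring only the attributes used in this README.
    class_value: str
    meta: List["Answer"] = field(default_factory=list)


def summarize(answers: List[Answer]) -> List[str]:
    """Render each answer as 'value (meta1, meta2, ...)', or just 'value' without meta."""
    lines = []
    for answer in answers:
        meta_values = ", ".join(m.class_value for m in answer.meta)
        if meta_values:
            lines.append(f"{answer.class_value} ({meta_values})")
        else:
            lines.append(answer.class_value)
    return lines


# The first entry reuses the values shown above; the second is a placeholder.
example_answers = [
    Answer("11.9", meta=[Answer("USD")]),
    Answer("Example Issuer Inc."),
]
print("\n".join(summarize(example_answers)))
```

With the real result list, the same function could be called as `summarize(answers_open_source_model)`, assuming the objects expose the attributes in the same way.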

We can also use a different model to run the same extraction:

# enter your key manually here or load from an .env file

open_ai_api_key = os.getenv("OPENAI_KEY")

gpt_model = gpt_config(open_ai_api_key)

_, _, answers_gpt = run_job_with_single_model(document, job_template, gpt_model)

Tutorials:

- Extraction Templates & Basics: Python Code.

- Meta Items: Python Code.

- Table Extraction: Python Code.

- Loading Templates from Parsee Cloud: Python Code.

- Saving Templates: Python Code.

- Datasets: Python Code.

- Model Evaluations: Python Code.

- Langchain Integration: Python Code.

- Multimodal Models: Python Code.

- Using Parsee Cloud to Load Images for Multimodal Applications: Python Code.

- Custom Prompts: Python Code.

- Document Chat (asking questions about the content of more than one file): Python Code.

In the following, we will only focus on the "general questions" items of the extraction templates. The logic for table detection/structuring items is quite similar and we will add some more explanations for them in the future.

In the most basic sense, every question you define under the "general questions" category can have exactly one answer.

If no answer can be found for a question (or meta item), the answer can always be "n/a", meaning that parsing the values was not successful or the model did not have an answer. In that sense, all outputs are "nullable", but a null is represented by the string value "n/a".
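Because missing values arrive as the string "n/a" rather than as a real null, downstream code usually needs a small conversion step. The sketch below assumes numeric answers are returned as strings such as '11.9', as in the example output earlier; the helper name `to_optional_float` is hypothetical and not part of Parsee.

```python
from typing import Optional

NOT_AVAILABLE = "n/a"  # string used for missing answers, as described above


def to_optional_float(class_value: str) -> Optional[float]:
    """Map 'n/a' to None and any other value to a float."""
    if class_value.strip().lower() == NOT_AVAILABLE:
        return None
    return float(class_value)


print(to_optional_float("11.9"))  # 11.9
print(to_optional_float("n/a"))   # None
```

Converting early keeps the "n/a" sentinel out of arithmetic and comparisons later on.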

A question can have more than one answer only when there is a meta item defined, which will create an "axis" along which the model can give different answers to the same question.

For the question: What is the invoice total? (output type numeric)

If there is no meta item defined, the model can answer this question only with one number (because of the numeric output type) or with "n/a".

e.g.

- Invoice total: 10.0

OR

- Invoice total: 21.5

OR

- Invoice total: n/a

If you want the model to return more than one answer per document, because a single document may contain several valid answers to the same question, you can add a meta item.

If we define a meta item "invoice date" and attach it to the invoice total, the model can now theoretically give several answers for the same document, differentiated by their meta ID:

e.g.

- (first answer) Invoice total: 10.0 as per 2022-03-01

AND

- (second answer) Invoice total: 24.0 as per 2022-06-01

So you can imagine the meta items as a sort of "key": as long as the meta values of two items differ, their keys differ as well. All output values can be thought of as key-value pairs.
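The key-value view can be made concrete with a plain dictionary keyed by the question plus its meta values. This is only an illustration of the idea, not Parsee's internal representation; the function `index_answers` and the tuple layout are assumptions made for the example.

```python
from typing import Dict, List, Tuple

# Key: (question, meta values); value: the answer as a string.
AnswerKey = Tuple[str, Tuple[str, ...]]


def index_answers(rows: List[Tuple[str, Tuple[str, ...], str]]) -> Dict[AnswerKey, str]:
    """Build a dict keyed by question plus meta values; reject duplicate keys."""
    indexed: Dict[AnswerKey, str] = {}
    for question, meta_values, value in rows:
        key = (question, meta_values)
        if key in indexed:
            raise ValueError(f"duplicate answer for key {key}")
        indexed[key] = value
    return indexed


# The two answers from the invoice date example above.
rows = [
    ("Invoice total", ("2022-03-01",), "10.0"),
    ("Invoice total", ("2022-06-01",), "24.0"),
]
indexed = index_answers(rows)
print(indexed[("Invoice total", ("2022-06-01",))])  # 24.0
```

Two answers with identical meta values would produce the same key, which is why the sketch treats that case as an error.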


Alternative AI tools for parsee-core

Similar Open Source Tools

parsee-core

Parsee AI is a high-level open source data extraction and structuring framework specialized for the extraction of data from a financial domain, but can be used for other use-cases as well. It aims to make the structuring of data from unstructured sources like PDFs, HTML files, and images as easy as possible. Parsee can be used locally in Python environments or through a hosted version for cloud-based jobs. It supports the extraction of tables, numbers, and other data elements, with the ability to create custom extraction templates and run jobs using different models.

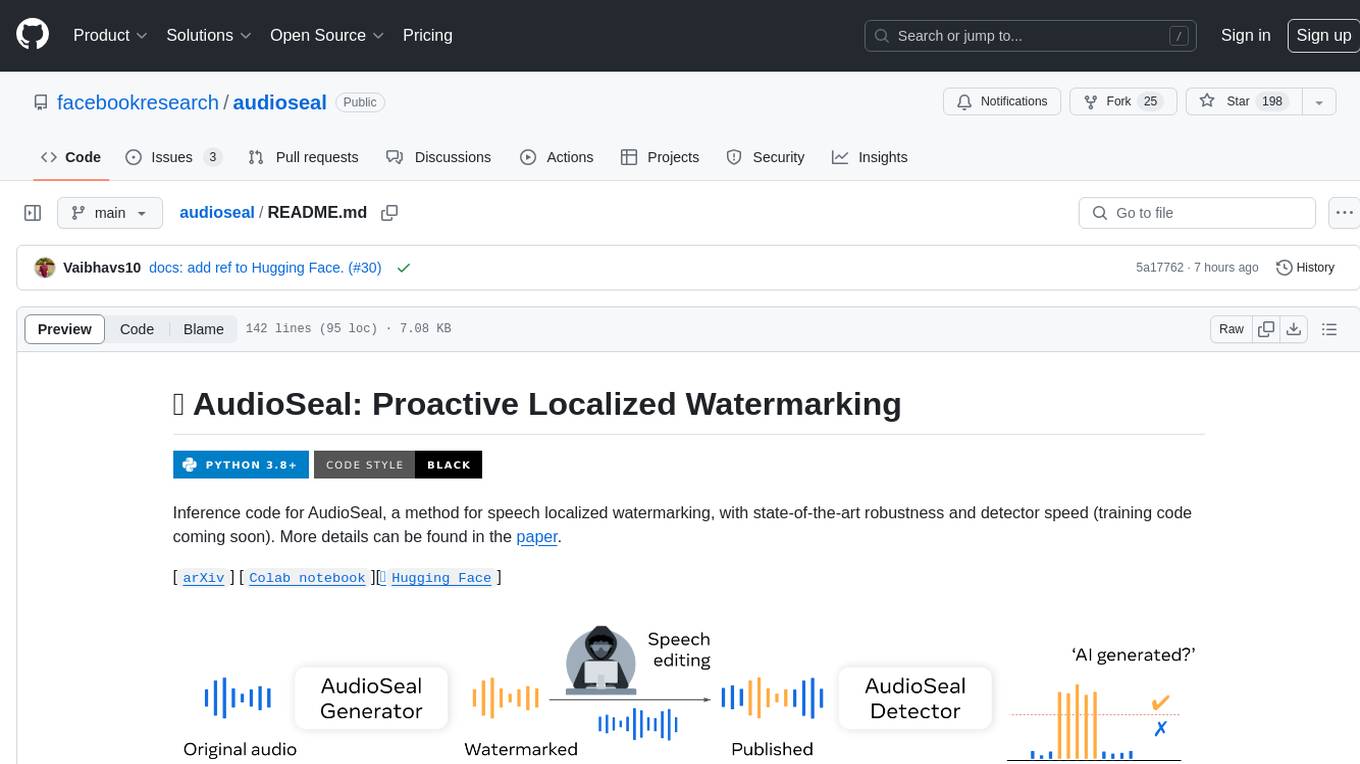

audioseal

AudioSeal is a method for speech localized watermarking, designed with state-of-the-art robustness and detector speed. It jointly trains a generator to embed a watermark in audio and a detector to detect watermarked fragments in longer audios, even in the presence of editing. The tool achieves top-notch detection performance at the sample level, generates minimal alteration of signal quality, and is robust to various audio editing types. With a fast, single-pass detector, AudioSeal surpasses existing models in speed, making it ideal for large-scale and real-time applications.

kafka-ml

Kafka-ML is a framework designed to manage the pipeline of Tensorflow/Keras and PyTorch machine learning models on Kubernetes. It enables the design, training, and inference of ML models with datasets fed through Apache Kafka, connecting them directly to data streams like those from IoT devices. The Web UI allows easy definition of ML models without external libraries, catering to both experts and non-experts in ML/AI.

uncheatable_eval

Uncheatable Eval is a tool designed to assess the language modeling capabilities of LLMs on real-time, newly generated data from the internet. It aims to provide a reliable evaluation method that is immune to data leaks and cannot be gamed. The tool supports the evaluation of Hugging Face AutoModelForCausalLM models and RWKV models by calculating the sum of negative log probabilities on new texts from various sources such as recent papers on arXiv, new projects on GitHub, news articles, and more. Uncheatable Eval ensures that the evaluation data is not included in the training sets of publicly released models, thus offering a fair assessment of the models' performance.

hackingBuddyGPT

hackingBuddyGPT is a framework for testing LLM-based agents for security testing. It aims to create common ground truth by creating common security testbeds and benchmarks, evaluating multiple LLMs and techniques against those, and publishing prototypes and findings as open-source/open-access reports. The initial focus is on evaluating the efficiency of LLMs for Linux privilege escalation attacks, but the framework is being expanded to evaluate the use of LLMs for web penetration-testing and web API testing. hackingBuddyGPT is released as open-source to level the playing field for blue teams against APTs that have access to more sophisticated resources.

LangChain

LangChain is a C# implementation of the LangChain library, which provides a composable way to build applications with LLMs (Large Language Models). It offers a variety of features, including:

- A unified interface for interacting with different LLMs, such as OpenAI's GPT-3 and Microsoft's Azure OpenAI Service

- A set of pre-built chains that can be used to perform common tasks, such as question answering, summarization, and translation

- A flexible API that allows developers to create their own custom chains

- A growing community of developers and users who are contributing to the project

LangChain is still under development, but it is already being used to build a variety of applications, including chatbots, search engines, and writing assistants. As the project continues to mature, it is expected to become an increasingly valuable tool for developers who want to build applications with LLMs.

aici

The Artificial Intelligence Controller Interface (AICI) lets you build Controllers that constrain and direct output of a Large Language Model (LLM) in real time. Controllers are flexible programs capable of implementing constrained decoding, dynamic editing of prompts and generated text, and coordinating execution across multiple, parallel generations. Controllers incorporate custom logic during the token-by-token decoding and maintain state during an LLM request. This allows diverse Controller strategies, from programmatic or query-based decoding to multi-agent conversations to execute efficiently in tight integration with the LLM itself.

cameratrapai

SpeciesNet is an ensemble of AI models designed for classifying wildlife in camera trap images. It consists of an object detector that finds objects of interest in wildlife camera images and an image classifier that classifies those objects to the species level. The ensemble combines these two models using heuristics and geographic information to assign each image to a single category. The models have been trained on a large dataset of camera trap images and are used for species recognition in the Wildlife Insights platform.

zippy

ZipPy is a research repository focused on fast AI detection using compression techniques. It aims to provide a faster approximation for AI detection that is embeddable and scalable. The tool uses LZMA and zlib compression ratios to indirectly measure the perplexity of a text, allowing for the detection of low-perplexity text. By seeding a compression stream with AI-generated text and comparing the compression ratio of the seed data with the sample appended, ZipPy can identify similarities in word choice and structure to classify text as AI or human-generated.

suql

SUQL (Structured and Unstructured Query Language) is a tool that augments SQL with free text primitives for building chatbots that can interact with relational data sources containing both structured and unstructured information. It seamlessly integrates retrieval models, large language models (LLMs), and traditional SQL to provide a clean interface for hybrid data access. SUQL supports optimizations to minimize expensive LLM calls, scalability to large databases with PostgreSQL, and general SQL operations like JOINs and GROUP BYs.

pydantic-ai

PydanticAI is a Python agent framework designed to make it less painful to build production grade applications with Generative AI. It is built by the Pydantic Team and supports various AI models like OpenAI, Anthropic, Gemini, Ollama, Groq, and Mistral. PydanticAI seamlessly integrates with Pydantic Logfire for real-time debugging, performance monitoring, and behavior tracking of LLM-powered applications. It is type-safe, Python-centric, and offers structured responses, dependency injection system, and streamed responses. PydanticAI is in early beta, offering a Python-centric design to apply standard Python best practices in AI-driven projects.

CoLLM

CoLLM is a novel method that integrates collaborative information into Large Language Models (LLMs) for recommendation. It converts recommendation data into language prompts, encodes them with both textual and collaborative information, and uses a two-step tuning method to train the model. The method incorporates user/item ID fields in prompts and employs a conventional collaborative model to generate user/item representations. CoLLM is built upon MiniGPT-4 and utilizes pretrained Vicuna weights for training.

llms

The 'llms' repository is a comprehensive guide on Large Language Models (LLMs), covering topics such as language modeling, applications of LLMs, statistical language modeling, neural language models, conditional language models, evaluation methods, transformer-based language models, practical LLMs like GPT and BERT, prompt engineering, fine-tuning LLMs, retrieval augmented generation, AI agents, and LLMs for computer vision. The repository provides detailed explanations, examples, and tools for working with LLMs.

Graph-CoT

This repository contains the source code and datasets for Graph Chain-of-Thought: Augmenting Large Language Models by Reasoning on Graphs accepted to ACL 2024. It proposes a framework called Graph Chain-of-thought (Graph-CoT) to enable Language Models to traverse graphs step-by-step for reasoning, interaction, and execution. The motivation is to alleviate hallucination issues in Language Models by augmenting them with structured knowledge sources represented as graphs.

Generative-AI-Pharmacist

Generative AI Pharmacist is a project showcasing the use of generative AI tools to create an animated avatar named Macy, who delivers medication counseling in a realistic and professional manner. The project utilizes tools like Midjourney for image generation, ChatGPT for text generation, ElevenLabs for text-to-speech conversion, and D-ID for creating a photorealistic talking avatar video. The demo video featuring Macy discussing commonly-prescribed medications demonstrates the potential of generative AI in healthcare communication.

neutone_sdk

The Neutone SDK is a tool designed for researchers to wrap their own audio models and run them in a DAW using the Neutone Plugin. It simplifies the process by allowing models to be built using PyTorch and minimal Python code, eliminating the need for extensive C++ knowledge. The SDK provides support for buffering inputs and outputs, sample rate conversion, and profiling tools for model performance testing. It also offers examples, notebooks, and a submission process for sharing models with the community.


For similar jobs

databerry

Chaindesk is a no-code platform that allows users to easily set up a semantic search system for personal data without technical knowledge. It supports loading data from various sources such as raw text, web pages, files (Word, Excel, PowerPoint, PDF, Markdown, Plain Text), and upcoming support for web sites, Notion, and Airtable. The platform offers a user-friendly interface for managing datastores, querying data via a secure API endpoint, and auto-generating ChatGPT Plugins for each datastore. Chaindesk utilizes a Vector Database (Qdrant), OpenAI's text-embedding-ada-002 for embeddings, and has a chunk size of 1024 tokens. The technology stack includes Next.js, Joy UI, LangchainJS, PostgreSQL, Prisma, and Qdrant, inspired by the ChatGPT Retrieval Plugin.

OAD

OAD is a powerful open-source tool for analyzing and visualizing data. It provides a user-friendly interface for exploring datasets, generating insights, and creating interactive visualizations. With OAD, users can easily import data from various sources, clean and preprocess data, perform statistical analysis, and create customizable visualizations to communicate findings effectively. Whether you are a data scientist, analyst, or researcher, OAD can help you streamline your data analysis workflow and uncover valuable insights from your data.

sqlcoder

Defog's SQLCoder is a family of state-of-the-art large language models (LLMs) designed for converting natural language questions into SQL queries. It is reported to outperform models such as gpt-4 and gpt-4-turbo on SQL generation tasks. SQLCoder has been trained on more than 20,000 human-curated questions based on 10 different schemas, and the model weights are licensed under CC BY-SA 4.0. Users can interact with SQLCoder through the 'transformers' library and run queries using the 'sqlcoder launch' command in the terminal. The tool has been tested on NVIDIA GPUs with more than 16GB VRAM and Apple Silicon devices with some limitations. SQLCoder offers a demo on their website and supports quantized versions of the model for consumer GPUs with sufficient memory.

TableLLM

TableLLM is a large language model designed for efficient tabular data manipulation tasks in real office scenarios. It can generate code solutions or direct text answers for tasks like insert, delete, update, query, merge, and chart operations on tables embedded in spreadsheets or documents. The model has been fine-tuned based on CodeLlama-7B and 13B, offering two scales: TableLLM-7B and TableLLM-13B. Evaluation results show its performance on benchmarks like WikiSQL, Spider, and self-created table operation benchmark. Users can use TableLLM for code and text generation tasks on tabular data.

mlcraft

Synmetrix (prev. MLCraft) is an open source data engineering platform and semantic layer for centralized metrics management. It provides a complete framework for modeling, integrating, transforming, aggregating, and distributing metrics data at scale. Key features include data modeling and transformations, semantic layer for unified data model, scheduled reports and alerts, versioning, role-based access control, data exploration, caching, and collaboration on metrics modeling. Synmetrix leverages Cube (Cube.js) for flexible data models that consolidate metrics from various sources, enabling downstream distribution via a SQL API for integration into BI tools, reporting, dashboards, and data science. Use cases include data democratization, business intelligence, embedded analytics, and enhancing accuracy in data handling and queries. The tool speeds up data-driven workflows from metrics definition to consumption by combining data engineering best practices with self-service analytics capabilities.

data-scientist-roadmap2024

The Data Scientist Roadmap2024 provides a comprehensive guide to mastering essential tools for data science success. It includes programming languages, machine learning libraries, cloud platforms, and concepts categorized by difficulty. The roadmap covers a wide range of topics from programming languages to machine learning techniques, data visualization tools, and DevOps/MLOps tools. It also includes web development frameworks and specific concepts like supervised and unsupervised learning, NLP, deep learning, reinforcement learning, and statistics. Additionally, it delves into DevOps tools like Airflow and MLFlow, data visualization tools like Tableau and Matplotlib, and other topics such as ETL processes, optimization algorithms, and financial modeling.

VMind

VMind is an open-source solution for intelligent visualization, providing an intelligent chart component based on LLM by VisActor. It allows users to create chart narrative works with natural language interaction, edit charts through dialogue, and export narratives as videos or GIFs. The tool is easy to use, scalable, supports various chart types, and offers one-click export functionality. Users can customize chart styles, specify themes, and aggregate data using LLM models. VMind aims to enhance efficiency in creating data visualization works through dialogue-based editing and natural language interaction.

quadratic

Quadratic is a modern multiplayer spreadsheet application that integrates Python, AI, and SQL functionalities. It aims to streamline team collaboration and data analysis by enabling users to pull data from various sources and utilize popular data science tools. The application supports building dashboards, creating internal tools, mixing data from different sources, exploring data for insights, visualizing Python workflows, and facilitating collaboration between technical and non-technical team members. Quadratic is built with Rust + WASM + WebGL to ensure seamless performance in the browser, and it offers features like WebGL Grid, local file management, Python and Pandas support, Excel formula support, multiplayer capabilities, charts and graphs, and team support. The tool is currently in Beta with ongoing development for additional features like JS support, SQL database support, and AI auto-complete.