openvino_build_deploy

Pre-built components and code samples to help you build and deploy production-grade AI applications with the OpenVINO™ Toolkit from Intel

Stars: 171

The OpenVINO Build and Deploy repository provides pre-built components and code samples to accelerate the development and deployment of production-grade AI applications across various industries. With the OpenVINO Toolkit from Intel, users can enhance the capabilities of both Intel and non-Intel hardware to meet specific needs. The repository includes AI reference kits, interactive demos, workshops, and step-by-step instructions for building AI applications. Additional resources such as Jupyter notebooks and a Medium blog are also available. The repository is maintained by the AI Evangelist team at Intel, who provide guidance on real-world use cases for the OpenVINO toolkit.

README:

Welcome to the Build and Deploy AI Solutions repository! This repository contains pre-built components and code samples designed to accelerate the development and deployment of production-grade AI applications across various industries, including retail, healthcare, gaming, manufacturing, and more. With the OpenVINO™ Toolkit from Intel, you can enhance the capabilities of both Intel and non-Intel hardware to meet your specific needs.

Material and dependencies are self-contained, so you can get started building easily.

- ➡️ Repo Contents

- 📚 Additional Resources

- 🌳 How to Contribute

- ❓ Troubleshooting and Resources

- 🧑‍💻 Team

AI Reference Kits: Production-Ready OpenVINO Code

Start developing your AI applications, including deep learning and Gen AI, on top of our code samples and conference demos.

Each AI Reference Kit includes:

- Detailed documentation

- Pre-configured demos

- Code samples to seamlessly integrate AI functionalities into your projects.

Interactive Demos: End-to-end Examples with Simple Setup

This directory contains interactive demos aimed at demonstrating how OpenVINO performs as an AI optimization and inference engine (cloud, client, and edge).
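To make the "cloud, client, and edge" point concrete, here is a minimal sketch of choosing an inference device and compiling a model with the OpenVINO Python API. It is not taken from the demos in this repository. The preference order `NPU`, `GPU`, `CPU`, the helper names `pick_device` and `compile_for_best_device`, and the model path are illustrative assumptions. The code assumes the `openvino` package is installed for the compile step.

```python
from typing import Sequence

# Hypothetical preference order, chosen for illustration only.
PREFERRED_DEVICES = ("NPU", "GPU", "CPU")


def pick_device(available: Sequence[str],
                preferred: Sequence[str] = PREFERRED_DEVICES) -> str:
    """Return the first preferred device that is available, else "AUTO"."""
    for name in preferred:
        # Device names can carry an index suffix such as "GPU.0".
        if any(dev == name or dev.startswith(name + ".") for dev in available):
            return name
    # "AUTO" lets OpenVINO choose a device on its own.
    return "AUTO"


def compile_for_best_device(model_path: str):
    """Read a model file and compile it for the chosen device."""
    # Imported lazily so pick_device can be used without OpenVINO installed.
    import openvino as ov

    core = ov.Core()
    device = pick_device(core.available_devices)
    model = core.read_model(model_path)
    return core.compile_model(model, device)
```

On a machine with OpenVINO installed, `compile_for_best_device("model.xml")` would return a compiled model for whichever device the helper picks, where `model.xml` stands in for any model file you have converted to OpenVINO IR.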

Desktop Applications: User-Friendly AI Applications with OpenVINO

Discover interactive desktop applications using OpenVINO as an engine for AI inference.

Notebooks: Jupyter Notebooks with OpenVINO code

Explore Jupyter notebooks that demonstrate how to use OpenVINO to build and deploy AI solutions.

Workshops: Guided Content to Get Started Building

Explore guided content our team created and demonstrated at events.

Wiki: Step-by-step Instructions

Explore instructions on setup for the AI PC, including performance monitoring and more.

Additional Resources

Jump-start your projects and apps with our Jupyter notebooks, which can be found in this repository.

For the latest details and updates on the OpenVINO toolkit, visit our blog.

How to Contribute

If you want to contribute to the OpenVINO toolkit, please read this article.

Troubleshooting and Resources

- Open a discussion topic

- Create an issue

- Learn more about OpenVINO

- Explore OpenVINO’s documentation

Team

Hello, we're the AI Evangelist team at Intel! We're a group of AI experts spread across the globe, and we aim to guide you through real-world use cases for the OpenVINO toolkit. Feel free to reach out to any of us on LinkedIn, or choose the one closest to you!

- Raymond Lo: Global Lead (Santa Clara – HQ, California). PhD in Computer Engineering. 10+ years as Entrepreneur Executive & Evangelist.

- Adrian Boguszewski: Deep Learning Expert (Gdansk, Poland). MSc in Computer Science. 5+ years as DL Engineer.

- Anisha Udayakumar: AI Innovation Consultant (Bengaluru, India). BTech in Civil Engineering. 5+ years as an Innovation Consultant.

- Zhuo Wu: Professor and Research Scientist (Shanghai, China). PhD in Electronics. 15+ years as a Researcher and Professor.

- Ezequiel (Eze) Lanza: Open Source AI Evangelist (Toronto, Canada). MSc in Data Science. 10+ years as AI/ML Engineer and Open Source Evangelist.

Alternative AI tools for openvino_build_deploy

Similar Open Source Tools

arcade-ai

Arcade AI is a developer-focused tooling and API platform designed to enhance the capabilities of LLM applications and agents. It simplifies the process of connecting agentic applications with user data and services, allowing developers to concentrate on building their applications. The platform offers prebuilt toolkits for interacting with various services, supports multiple authentication providers, and provides access to different language models. Users can also create custom toolkits and evaluate their tools using Arcade AI. Contributions are welcome, and self-hosting is possible with the provided documentation.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

GenerativeAIExamples

NVIDIA Generative AI Examples are state-of-the-art examples that are easy to deploy, test, and extend. All examples run on the high performance NVIDIA CUDA-X software stack and NVIDIA GPUs. These examples showcase the capabilities of NVIDIA's Generative AI platform, which includes tools, frameworks, and models for building and deploying generative AI applications.

ai-app-lab

ai-app-lab provides Arkitect, a high-code Python SDK for enterprise developers with professional development capabilities. It provides a toolset and workflow set for developing large model applications tailored to specific business scenarios. The SDK offers highly customizable application orchestration, quality business tools, one-stop development and hosting services, security enhancements, and AI prototype application code examples. It caters to complex enterprise development scenarios, enabling the creation of highly customized intelligent applications for various industries.

cedar-OS

Cedar OS is an open-source framework that bridges the gap between AI agents and React applications, enabling the creation of AI-native applications where agents can interact with the application state like users. It focuses on providing intuitive and powerful ways for humans to interact with AI through features like full state integration, real-time streaming, voice-first design, and flexible architecture. Cedar OS offers production-ready chat components, agentic state management, context-aware mentions, voice integration, spells & quick actions, and fully customizable UI. It differentiates itself by offering a true AI-native architecture, developer-first experience, production-ready features, and extensibility. Built with TypeScript support, Cedar OS is designed for developers working on ambitious AI-native applications.

Disciplined-AI-Software-Development

Disciplined AI Software Development is a comprehensive repository that provides guidelines and best practices for developing AI software in a disciplined manner. It covers topics such as project organization, code structure, documentation, testing, and deployment strategies to ensure the reliability, scalability, and maintainability of AI applications. The repository aims to help developers and teams navigate the complexities of AI development by offering practical advice and examples to follow.

awesome-ai-apps

This repository is a comprehensive collection of practical examples, tutorials, and recipes for building powerful LLM-powered applications. From simple chatbots to advanced AI agents, these projects serve as a guide for developers working with various AI frameworks and tools. Powered by Nebius AI Studio - your one-stop platform for building and deploying AI applications.

jadx-ai-mcp

JADX-AI-MCP is a plugin for the JADX decompiler that integrates with Model Context Protocol (MCP) to provide live reverse engineering support with LLMs like Claude. It allows for quick analysis, vulnerability detection, and AI code modification, all in real time. The tool combines JADX-AI-MCP and JADX MCP SERVER to analyze Android APKs. It offers various prompts for code understanding, vulnerability detection, reverse engineering helpers, static analysis, AI code modification, and documentation. The tool is part of the Zin MCP Suite and aims to connect all Android reverse engineering and APK modification tools with a single MCP server.

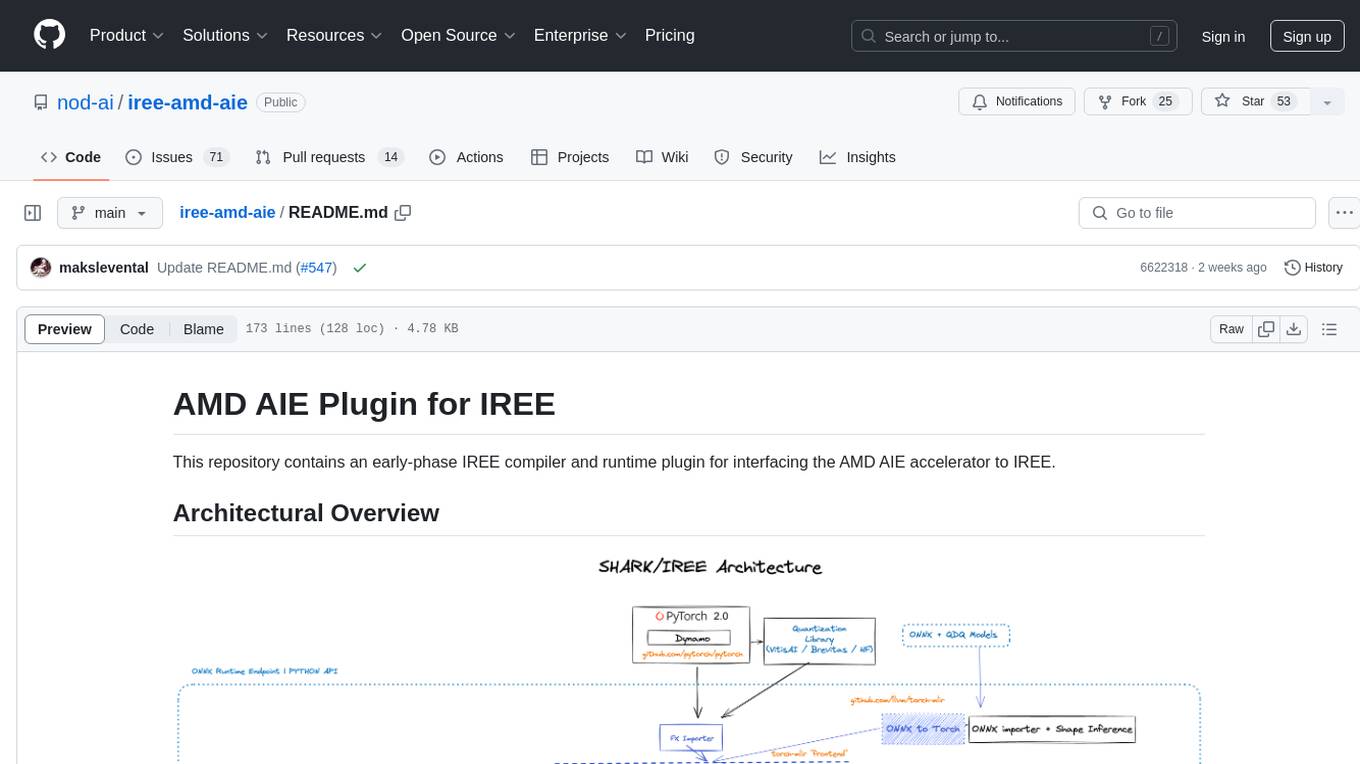

iree-amd-aie

This repository contains an early-phase IREE compiler and runtime plugin for interfacing the AMD AIE accelerator to IREE. It provides architectural overview, developer setup instructions, building guidelines, and runtime driver setup details. The repository focuses on enabling the integration of the AMD AIE accelerator with IREE, offering developers the tools and resources needed to build and run applications leveraging this technology.

deepflow

DeepFlow is an open-source project that provides deep observability for complex cloud-native and AI applications. It offers Zero Code data collection with eBPF for metrics, distributed tracing, request logs, and function profiling. DeepFlow is integrated with SmartEncoding to achieve Full Stack correlation and efficient access to all observability data. With DeepFlow, cloud-native and AI applications automatically gain deep observability, removing the burden of developers continually instrumenting code and providing monitoring and diagnostic capabilities covering everything from code to infrastructure for DevOps/SRE teams.

koog

Koog is a Kotlin-based framework for building and running AI agents entirely in idiomatic Kotlin. It allows users to create agents that interact with tools, handle complex workflows, and communicate with users. Key features include pure Kotlin implementation, MCP integration, embedding capabilities, custom tool creation, ready-to-use components, intelligent history compression, powerful streaming API, persistent agent memory, comprehensive tracing, flexible graph workflows, modular feature system, scalable architecture, and multiplatform support.

ramalama

The Ramalama project simplifies working with AI by utilizing OCI containers. It automatically detects GPU support, pulls necessary software in a container, and runs AI models. Users can list, pull, run, and serve models easily. The tool aims to support various GPUs and platforms in the future, making AI setup hassle-free.

ktransformers

KTransformers is a flexible Python-centric framework designed to enhance the user's experience with advanced kernel optimizations and placement/parallelism strategies for Transformers. It provides a Transformers-compatible interface, RESTful APIs compliant with OpenAI and Ollama, and a simplified ChatGPT-like web UI. The framework aims to serve as a platform for experimenting with innovative LLM inference optimizations, focusing on local deployments constrained by limited resources and supporting heterogeneous computing opportunities like GPU/CPU offloading of quantized models.

amazon-sagemaker-generativeai

Repository for training and deploying Generative AI models, including text-text, text-to-image generation, prompt engineering playground and chain of thought examples using SageMaker Studio. The tool provides a platform for users to experiment with generative AI techniques, enabling them to create text and image outputs based on input data. It offers a range of functionalities for training and deploying models, as well as exploring different generative AI applications.

open-ai

Open AI is a powerful tool for artificial intelligence research and development. It provides a wide range of machine learning models and algorithms, making it easier for developers to create innovative AI applications. With Open AI, users can explore cutting-edge technologies such as natural language processing, computer vision, and reinforcement learning. The platform offers a user-friendly interface and comprehensive documentation to support users in building and deploying AI solutions. Whether you are a beginner or an experienced AI practitioner, Open AI offers the tools and resources you need to accelerate your AI projects and stay ahead in the rapidly evolving field of artificial intelligence.

For similar tasks

python-tutorial-notebooks

This repository contains Jupyter-based tutorials for NLP, ML, AI in Python for classes in Computational Linguistics, Natural Language Processing (NLP), Machine Learning (ML), and Artificial Intelligence (AI) at Indiana University.

open-parse

Open Parse is a Python library for visually discerning document layouts and chunking them effectively. It is designed to fill the gap in open-source libraries for handling complex documents. Unlike text splitting, which converts a file to raw text and slices it up, Open Parse visually analyzes documents for superior LLM input. It also supports basic markdown for parsing headings, bold, and italics, and has high-precision table support, extracting tables into clean Markdown formats with accuracy that surpasses traditional tools. Open Parse is extensible, allowing users to easily implement their own post-processing steps. It is also intuitive, with great editor support and completion everywhere, making it easy to use and learn.

MoonshotAI-Cookbook

The MoonshotAI-Cookbook provides example code and guides for accomplishing common tasks with the MoonshotAI API. To run these examples, you'll need a MoonshotAI account and associated API key. Most code examples are written in Python, though the concepts can be applied in any language.

AHU-AI-Repository

This repository is dedicated to the learning and exchange of resources for the School of Artificial Intelligence at Anhui University. Notes will be published on this website first: https://www.aoaoaoao.cn and will be synchronized to the repository regularly.

modern_ai_for_beginners

This repository provides a comprehensive guide to modern AI for beginners, covering both theoretical foundations and practical implementation. It emphasizes the importance of understanding both the mathematical principles and the code implementation of AI models. The repository includes resources on PyTorch, deep learning fundamentals, mathematical foundations, transformer-based LLMs, diffusion models, software engineering, and full-stack development. It also features tutorials on natural language processing with transformers, reinforcement learning, and practical deep learning for coders.

Building-AI-Applications-with-ChatGPT-APIs

This repository is for the book 'Building AI Applications with ChatGPT APIs' published by Packt. It provides code examples and instructions for mastering ChatGPT, Whisper, and DALL-E APIs through building innovative AI projects. Readers will learn to develop AI applications using ChatGPT APIs, integrate them with frameworks like Flask and Django, create AI-generated art with DALL-E APIs, and optimize ChatGPT models through fine-tuning.

examples

This repository contains a collection of sample applications and Jupyter Notebooks for hands-on experience with Pinecone vector databases and common AI patterns, tools, and algorithms. It includes production-ready examples for review and support, as well as learning-optimized examples for exploring AI techniques and building applications. Users can contribute, provide feedback, and collaborate to improve the resource.

lingoose

LinGoose is a modular Go framework designed for building AI/LLM applications. It offers the flexibility to import only the necessary modules, abstracts features for customization, and provides a comprehensive solution for developing AI/LLM applications from scratch. The framework simplifies the process of creating intelligent applications by allowing users to choose preferred implementations or create their own. LinGoose empowers developers to leverage its capabilities to streamline the development of cutting-edge AI and LLM projects.

For similar jobs

djl-demo

The Deep Java Library (DJL) is a framework-agnostic Java API for deep learning. It provides a unified interface to popular deep learning frameworks such as TensorFlow, PyTorch, and MXNet. DJL makes it easy to develop deep learning applications in Java, and it can be used for a variety of tasks, including image classification, object detection, natural language processing, and speech recognition.

thinc

Thinc is a lightweight deep learning library that offers an elegant, type-checked, functional-programming API for composing models, with support for layers defined in other frameworks such as PyTorch, TensorFlow and MXNet. You can use Thinc as an interface layer, a standalone toolkit or a flexible way to develop new models.

veScale

veScale is a PyTorch Native LLM Training Framework. It provides a set of tools and components to facilitate the training of large language models (LLMs) using PyTorch. veScale includes features such as 4D parallelism, fast checkpointing, and a CUDA event monitor. It is designed to be scalable and efficient, and it can be used to train LLMs on a variety of hardware platforms.

Awesome-LLM-Inference

Awesome-LLM-Inference is a curated list of LLM inference papers with code; see its contents for more details. The repo is still updated frequently, and stars and PRs are welcome.

Awesome-Quantization-Papers

This repo contains a comprehensive list of papers on model quantization for efficient deep learning, drawn from AI conferences, journals, and arXiv. As a highlight, the papers are categorized by model structure and application scenario, and the quantization methods are labeled with keywords.

ai_all_resources

This repository is a compilation of excellent ML and DL tutorials created by various individuals and organizations. It covers a wide range of topics, including machine learning fundamentals, deep learning, computer vision, natural language processing, reinforcement learning, and more. The resources are organized into categories, making it easy to find the information you need. Whether you're a beginner or an experienced practitioner, you're sure to find something valuable in this repository.

TornadoVM

TornadoVM is a plug-in to OpenJDK and GraalVM that allows programmers to automatically run Java programs on heterogeneous hardware. TornadoVM targets OpenCL, PTX and SPIR-V compatible devices which include multi-core CPUs, dedicated GPUs (Intel, NVIDIA, AMD), integrated GPUs (Intel HD Graphics and ARM Mali), and FPGAs (Intel and Xilinx).