nodetool

Local-First Agent OS

Stars: 127

NodeTool is a platform designed for AI enthusiasts, developers, and creators, providing a visual interface to access a variety of AI tools and models. It simplifies access to advanced AI technologies, offering resources for content creation, data analysis, automation, and more. With features like a visual editor, seamless integration with leading AI platforms, model manager, and API integration, NodeTool caters to both newcomers and experienced users in the AI field.

README:

🎯 Privacy by design • 🔓 Own your stack • 🚀 Production ready

NodeTool lets you design agents that work with your data. Use any model to analyze data, generate visuals, or automate workflows.

- Vision

- Mission

- How It Works

- Principles

- What NodeTool Is

- What NodeTool Is Not

- Who It’s For

- Roadmap

- Promise

- Quick Start

- Bring Your Own Providers

- Install Node Packs in the App

- Community

- 🛠️ Development Setup

- Run Backend & Web UI

- Testing

- Troubleshooting

- Contributing

- License

- Get in Touch

Get from idea to production in three simple steps:

- 🏗️ Build — Drag nodes to create your workflow—no coding required.

- ⚡ Run — Test locally. Your data stays on your machine by default.

- 🚀 Deploy — Ship with one command to RunPod or your own cloud.

Principles

- Local‑first.

- Open and portable.

- Powerful node system. Small, composable units.

- Transparency. See every step while it runs.

- Fast on your hardware. Optimized for MPS or CUDA acceleration.

What NodeTool Is

- Visual graph editor + runnable runtime (desktop + headless)

- Execute via CLI, API, WebSocket

- Local models (Llama.cpp/HF) + optional cloud (OpenAI/Anthropic/Replicate)

- Deploy to laptop/server, Runpod, Google Cloud, AWS

What NodeTool Is Not

- Managed SaaS, SLAs, multi‑tenant

- Compliance‑grade policy/audit

- Autoscaling orchestrator

- One‑click content toy

Indie hackers, game devs, AI enthusiasts, creative professionals, agencies, and studios who want to build and run their own pipelines.

Roadmap

- Creative pipelines: templates for thumbnails, sprites, ad variants, research briefs.

- Timeline & Snapshots: run history, diffs, and easy rollback.

- Render Queue & A/B: batch runs and seeded comparisons.

- Packaging: one‑click bundles for sharing with collaborators/clients; community packs registry.

| Platform | Download | Requirements |
|---|---|---|
| Windows | Download Installer | Nvidia GPU recommended, 20 GB free space |
| macOS | Download Installer | M1+ Apple Silicon |
| Linux | Download AppImage | Nvidia GPU recommended |

- Download and install NodeTool

- Launch the app

- Download models

- Start with a template or create from scratch

- Drag, connect, run—see results instantly

Connect to any AI provider. Your keys, your costs, your choice.

✅ Integrated Providers: OpenAI • Anthropic • Hugging Face • Groq • Together • Replicate • Cohere • + 8 more

Set provider API keys in Settings → Providers.

Text Generation

- Ollama

- Huggingface Llama.cpp and GGUF

- HuggingFace Hub Inference providers

- OpenAI

- Gemini

- Anthropic

- and many others

Text-to-Image

- Flux Dev, Flux Schnell (Huggingface, FAL, Replicate)

- Flux V 1 Pro (FAL, Replicate)

- Flux Subject (FAL)

- Flux Lora, Flux Lora TTI, Flux Lora Inpainting (FAL)

- Flux 360 (Replicate)

- Flux Black Light (Replicate)

- Flux Canny Dev/Pro (Replicate)

- Flux Cinestill (Replicate)

- Flux Depth Dev/Pro (Replicate)

- Flux Dev (Replicate)

- Flux Dev Lora (Replicate)

- Stable Diffusion XL (Huggingface, Replicate, FAL)

- Stable Diffusion XL Turbo (Replicate, FAL)

- Stable Diffusion Upscaler (HuggingFace)

- AuraFlow v0.3, Bria V1/V1 Fast/V1 HD, Fast SDXL (FAL)

- Fast LCMDiffusion, Fast Lightning SDXL, Fast Turbo Diffusion (FAL)

- Hyper SDXL (FAL)

- Ideogram V 2, Ideogram V 2 Turbo (FAL)

- Illusion Diffusion (FAL)

- Kandinsky, Kandinsky 2.2 (Replicate)

- Zeroscope V 2 XL (Huggingface, Replicate)

- Ad Inpaint (Replicate)

- Consistent Character (Replicate)

Image Processing

- black-forest-labs/FLUX.1-Kontext-dev (nodetool-base)

- google/vit-base-patch16-224 (image classification, nodetool-base)

- openmmlab/upernet-convnext-small (image segmentation, nodetool-base)

- Diffusion Edge (edge detection, FAL)

- Bria Background Remove/Replace/Eraser/Expand/GenFill/ProductShot (FAL)

- Robust Video Matting (video background removal, Replicate)

- nlpconnect/vit-gpt2-image-captioning (image captioning, HuggingFace)

Audio Generation

- microsoft/speecht5_tts (TTS, nodetool-base)

- F5-TTS, E2-TTS (TTS, FAL)

- PlayAI Dialog TTS (dialog TTS, FAL)

- MMAudio V2 (music and audio generation, FAL)

- ElevenLabs TTS models (ElevenLabs)

- Stable Audio (text-to-audio, FAL & HuggingFace)

- AudioLDM, AudioLDM2 (text-to-audio, HuggingFace)

- DanceDiffusion (music generation, HuggingFace)

- MusicGen (music generation, Replicate)

- Music 01 (music generation with vocals, Replicate)

- facebook/musicgen-small/medium/large/melody (music generation, HuggingFace)

- facebook/musicgen-stereo-small/large (stereo music generation, HuggingFace)

Audio Processing

- Audio To Waveform (audio visualization, Replicate)

Video Generation

- Hotshot-XL (text-to-GIF, Replicate)

- HunyuanVideo, LTX-Video (text-to-video, Replicate)

- Kling Text To Video V 2, Kling Video V 2 (FAL)

- Pixverse Image To Video, Pixverse Text To Video, Pixverse Text To Video Fast (FAL)

- Wan Pro Image To Video, Wan Pro Text To Video (FAL)

- Wan V 2.1 1.3B Text To Video (FAL)

- Cog Video X (FAL)

- Haiper Image To Video (FAL)

- Wan 2.1 1.3B (text-to-video, Replicate)

- Wan 2.1 I2V 480p (image-to-video, Replicate)

- Video 01, Video 01 Live (video generation, Replicate)

- Ray (video interpolation, Replicate)

- Wan-AI/Wan2.2-I2V-A14B-Diffusers (image-to-video, HuggingFace)

- Wan-AI/Wan2.1-I2V-14B-480P-Diffusers (image-to-video, HuggingFace)

- Wan-AI/Wan2.1-I2V-14B-720P-Diffusers (image-to-video, HuggingFace)

- Wan-AI/Wan2.2-T2V-A14B-Diffusers (text-to-video, HuggingFace)

- Wan-AI/Wan2.1-T2V-14B-Diffusers (text-to-video, HuggingFace)

- Wan-AI/Wan2.2-TI2V-5B-Diffusers (text+image-to-video, HuggingFace)

Text Processing

- facebook/bart-large-cnn (summarization, nodetool-base)

- distilbert/distilbert-base-uncased-finetuned-sst-2-english (text classification, nodetool-base)

- google-t5/t5-base (text processing, nodetool-base)

- facebook/bart-large-mnli (zero-shot classification, HuggingFace)

- distilbert-base-uncased/cased-distilled-squad (question answering, HuggingFace)

Speech Recognition

- superb/hubert-base-superb-er (audio classification, nodetool-base)

- openai/whisper-large-v3 (speech recognition, nodetool-base)

- openai/whisper-large-v3-turbo/large-v2/medium/small (speech recognition, HuggingFace)

Install and manage packs directly from the desktop app.

- Open Package Manager: Launch the Electron desktop app, then open the Package Manager from the Tools menu.

- Browse and search packages: Use the top search box to filter by package name, description, or repo id.

- Search nodes across packs: Use the “Search nodes” field to find nodes by title, description, or type. You can install the required pack directly from node results.

Open source on GitHub. Star and contribute.

💬 Join Discord — Share workflows and get help from the community

🌟 Star on GitHub — Help others discover NodeTool

🚀 Contribute — Help shape the future of visual AI development

Follow these steps to set up a local development environment for the entire NodeTool platform, including the UI, backend services, and the core library (nodetool-core). If you are primarily interested in contributing to the core library itself, please also refer to the nodetool-core repository for its specific development setup using Poetry.

- Python 3.11: Required for the backend.

- Conda: Download and install from miniconda.org.

- Node.js (Latest LTS): Required for the frontend. Download and install from nodejs.org.

# Create or update the Conda environment from environment.yml

conda env update -f environment.yml --prune

conda activate nodetool

Windows shortcut: run pwsh -File setup_windows.ps1 to perform all steps (Conda env, Python installs, npm bootstraps) and start the backend, web, and Electron processes.

The script clones or updates nodetool-core, nodetool-base, and nodetool-huggingface one level up from the nodetool repository before installing them in editable mode.

macOS/Linux shortcut: run ./scripts/setup_unix.sh for the equivalent automation.
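The sibling checkout that the setup scripts are described as performing can be pictured with a short Python sketch. This is not the scripts' actual code: the function name `sibling_repo_plan`, the `git pull --ff-only` update step, and the assumption that all three repositories live under `https://github.com/nodetool-ai/` (the URL pattern used by the install commands in this README) are illustrative. The sketch only builds the command list and executes nothing.

```python
from pathlib import Path

# Repositories the setup scripts are described as cloning or updating.
SIBLING_REPOS = ["nodetool-core", "nodetool-base", "nodetool-huggingface"]
# Assumed URL pattern, taken from the install commands in this README.
GITHUB_ORG = "https://github.com/nodetool-ai"


def sibling_repo_plan(repo_root):
    """Return the commands a setup run would need, without executing them."""
    parent = Path(repo_root).resolve().parent  # "one level up" from nodetool
    plan = []
    for name in SIBLING_REPOS:
        target = parent / name
        if target.is_dir():
            # Existing checkout: update in place (hypothetical choice of flags).
            plan.append(["git", "-C", str(target), "pull", "--ff-only"])
        else:
            plan.append(["git", "clone", f"{GITHUB_ORG}/{name}", str(target)])
        # Editable install so local changes are picked up without reinstalling.
        plan.append(["uv", "pip", "install", "-e", str(target)])
    return plan


if __name__ == "__main__":
    for command in sibling_repo_plan("."):
        print(" ".join(command))
```

Printing the plan first makes it easy to see which directories would be touched before running anything.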

These are the essential packages to run NodeTool.

Make sure to activate the conda environment.

# Install nodetool-core and nodetool-base

# On macOS / Linux / Windows:

uv pip install git+https://github.com/nodetool-ai/nodetool-core

uv pip install git+https://github.com/nodetool-ai/nodetool-base

If you're working in this monorepo and want live-editable installs:

# From the repository root

conda activate nodetool

uv pip install -e ./nodetool-core

uv pip install -e ./nodetool-base

NodeTool's functionality is extended via packs. Install only the ones you need.

NOTE:

- Activate the conda environment first

- Use uv for faster installs.

Prefer the in‑app Package Manager for a guided experience. See Install Node Packs in the App. The commands below are for advanced/CI usage.

# List available packs (optional)

nodetool package list -a

# Example: Install packs for specific integrations

uv pip install git+https://github.com/nodetool-ai/nodetool-fal

uv pip install git+https://github.com/nodetool-ai/nodetool-replicate

uv pip install git+https://github.com/nodetool-ai/nodetool-elevenlabs

Note: Some packs like nodetool-huggingface may require specific PyTorch versions or CUDA drivers.

Use --index-url to install the PyTorch build that matches your CUDA version:

- Check your CUDA version:

nvidia-smi

- Install PyTorch with CUDA support first:

# For CUDA 11.8

uv pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

# For CUDA 12.1-12.3 (most common)

uv pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121

# For CUDA 12.4+

uv pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu124

- Install GPU-dependent packs:

# Use --extra-index-url to access both PyPI and PyTorch packages

uv pip install --extra-index-url https://download.pytorch.org/whl/cu121 git+https://github.com/nodetool-ai/nodetool-huggingface

- Verify GPU support:

python -c "import torch; print(f'CUDA available: {torch.cuda.is_available()}')"
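A slightly fuller version of the one-line check above can be sketched as follows. It is an illustration rather than part of NodeTool: the function name `gpu_summary` is hypothetical, and the sketch relies only on `torch.__version__`, `torch.version.cuda`, `torch.cuda.is_available()`, and `torch.backends.mps.is_available()`. It also reports the CUDA version the wheel was built against, which is `None` on CPU-only builds.

```python
import importlib.util


def gpu_summary():
    """Describe the installed PyTorch build; never raises if torch is missing."""
    if importlib.util.find_spec("torch") is None:
        return "PyTorch is not installed in this environment"

    import torch  # imported lazily so the check above can run without it

    lines = [
        f"torch version: {torch.__version__}",
        # None here means a CPU-only wheel was installed.
        f"built for CUDA: {torch.version.cuda}",
        f"CUDA available: {torch.cuda.is_available()}",
    ]
    # The MPS backend (Apple Silicon) only exists in newer PyTorch releases.
    mps = getattr(torch.backends, "mps", None)
    if mps is not None:
        lines.append(f"MPS available: {mps.is_available()}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(gpu_summary())
```

If "built for CUDA" prints `None`, the installed wheel is a CPU-only build regardless of what `nvidia-smi` shows.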

If you see "bitsandbytes compiled without GPU support", reinstall it:

uv pip uninstall bitsandbytes

uv pip install bitsandbytes

If PyTorch reports a CPU-only build, make sure you used the correct index URL in the PyTorch install step above.

Use --extra-index-url (not --index-url) when installing from git repositories to avoid missing dependencies.
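The version-to-URL choices above can be captured in a small helper, for example in a CI script. This is a sketch under stated assumptions: the names `torch_index_url` and `pack_install_command` are hypothetical, the mapping covers only the three CUDA ranges listed in this README, and anything else (for example CUDA 12.0) raises an error instead of guessing.

```python
import re

PYTORCH_WHEELS = "https://download.pytorch.org/whl"


def torch_index_url(cuda_version):
    """Map a CUDA version such as "12.2" to the wheel index listed above."""
    match = re.fullmatch(r"(\d+)\.(\d+)", cuda_version.strip())
    if match is None:
        raise ValueError(f"expected MAJOR.MINOR, got {cuda_version!r}")
    version = (int(match.group(1)), int(match.group(2)))
    if version >= (12, 4):
        tag = "cu124"
    elif version >= (12, 1):
        tag = "cu121"
    elif version == (11, 8):
        tag = "cu118"
    else:
        raise ValueError(f"no wheel index listed here for CUDA {cuda_version}")
    return f"{PYTORCH_WHEELS}/{tag}"


def pack_install_command(pack, cuda_version=None):
    """Build (but do not run) the uv command for a pack from the nodetool-ai org."""
    command = ["uv", "pip", "install"]
    if cuda_version is not None:
        # --extra-index-url keeps PyPI available for the pack's other dependencies.
        command += ["--extra-index-url", torch_index_url(cuda_version)]
    command.append(f"git+https://github.com/nodetool-ai/{pack}")
    return command


if __name__ == "__main__":
    print(" ".join(pack_install_command("nodetool-huggingface", "12.1")))
```

Returning the command as a list keeps it ready for `subprocess.run` without shell quoting concerns.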

Ensure the nodetool Conda environment is active.

Option A: Run Backend with Web UI (for Development)

This command starts the backend server:

# On macOS / Linux / Windows:

nodetool serve --reload

Run the frontend from the web folder:

cd web

npm install

npm start

Access the UI in your browser at http://localhost:3000.

Option B: Run with Electron App

This provides the full desktop application experience.

Configure Conda Path:

Ensure your settings.yaml file points to your Conda environment path:

- macOS/Linux: ~/.config/nodetool/settings.yaml

- Windows: %APPDATA%/nodetool/settings.yaml

CONDA_ENV: /path/to/your/conda/envs/nodetool # e.g., /Users/me/miniconda3/envs/nodetool

Build Frontends: You only need to do this once or when frontend code changes.

# Build the main web UI

cd web

npm install

npm run build

cd ..

# Build the apps UI (if needed)

cd apps

npm install

npm run build

cd ..

# Build the Electron UI

cd electron

npm install

npm run build

cd ..

Start Electron:

cd electron

npm start # launches the desktop app using the previously built UI

The Electron app will launch, automatically starting the backend and frontend.
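If the Electron app cannot find the Conda environment, it can help to confirm which settings.yaml is being read and what CONDA_ENV it contains. The sketch below follows the two file locations given for Option B. The function names are hypothetical, and the parser is deliberately tiny: it handles only a single top-level `CONDA_ENV: value` line, where a real YAML library would handle quoting and nesting properly.

```python
import os
import sys
from pathlib import Path
from typing import Optional


def settings_path(platform=sys.platform, env=None):
    """Return the settings.yaml location described for each platform."""
    env = os.environ if env is None else env
    if platform.startswith("win"):
        return Path(env["APPDATA"]) / "nodetool" / "settings.yaml"
    home = env.get("HOME") or str(Path.home())
    return Path(home) / ".config" / "nodetool" / "settings.yaml"


def read_conda_env(text) -> Optional[str]:
    """Extract the CONDA_ENV value from settings text, or None if absent."""
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "CONDA_ENV":
            # Drop a trailing "# comment" and surrounding quotes.
            value = value.split(" #", 1)[0].strip().strip("'\"")
            return value or None
    return None


if __name__ == "__main__":
    path = settings_path()
    print(f"settings file: {path}")
    if path.is_file():
        print(f"CONDA_ENV: {read_conda_env(path.read_text(encoding='utf-8'))}")
    else:
        print("settings file not found")
```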

# Python tests

pytest -q

# Web UI

cd web

npm test

npm run lint

npm run typecheck

# Electron

cd electron

npm run lint

npm run typecheck
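To run every check in one pass, the commands above can be wrapped in a small Python driver. This is a convenience sketch, not a script shipped with NodeTool: `CHECKS` and `run_checks` are hypothetical names, and the directories are assumed to be relative to the repository root. On Windows, `npm` may need to be resolved to `npm.cmd` (for example with `shutil.which`) before it is passed to `subprocess.run`.

```python
import subprocess
from pathlib import Path

# (directory relative to the repository root, command) for each check above.
CHECKS = [
    (".", ["pytest", "-q"]),
    ("web", ["npm", "test"]),
    ("web", ["npm", "run", "lint"]),
    ("web", ["npm", "run", "typecheck"]),
    ("electron", ["npm", "run", "lint"]),
    ("electron", ["npm", "run", "typecheck"]),
]


def run_checks(repo_root, checks=CHECKS, run=subprocess.run):
    """Run each check in its directory and return the ones that failed."""
    failed = []
    for subdir, argv in checks:
        result = run(argv, cwd=Path(repo_root) / subdir)
        if result.returncode != 0:
            failed.append(f"{subdir}: {' '.join(argv)}")
    return failed


if __name__ == "__main__":
    failures = run_checks(".")
    for failure in failures:
        print(f"FAILED {failure}")
    raise SystemExit(1 if failures else 0)
```

The `run` parameter exists so the driver can be exercised with a stand-in function instead of launching real processes.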

- Node/npm versions: use Node.js LTS (≥18). If switching versions: rm -rf node_modules && npm install

- Port in use (3000/8000): stop other processes or choose another port for the web UI.

- CLI not found (nodetool): ensure the Conda env is active and packages are installed; restart your shell.

- GPU/PyTorch issues: follow the CUDA-specific steps above and prefer --extra-index-url for mixed sources.
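For the "port in use" case, a quick way to see whether something is already listening on the two ports the list mentions is a plain socket probe. This is a generic sketch using only the Python standard library; the helper name `port_in_use` is hypothetical and nothing here is specific to NodeTool.

```python
import socket


def port_in_use(port, host="127.0.0.1", timeout=0.5):
    """Return True if a TCP connection to host:port succeeds."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        probe.settimeout(timeout)
        # connect_ex returns 0 on success instead of raising an exception.
        return probe.connect_ex((host, port)) == 0


if __name__ == "__main__":
    for port in (3000, 8000):
        state = "in use" if port_in_use(port) else "free"
        print(f"port {port}: {state}")
```

A port reported as "in use" before starting NodeTool belongs to another process, which needs to be stopped or worked around as described in the list.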

We welcome community contributions!

- Fork the repository

- Create a feature branch (git checkout -b feature/amazing-feature)

- Commit your changes (git commit -m 'Add amazing feature')

- Push to the branch (git push origin feature/amazing-feature)

- Open a Pull Request

Please follow our contribution guidelines and code of conduct.

AGPL-3.0 — True ownership, zero compromise.

Tell us what's missing and help shape NodeTool

✉️ Got ideas or just want to say hi?

[email protected]

👥 Built by makers, for makers

Matthias Georgi: [email protected]

David Bührer: [email protected]

📖 Documentation: docs.nodetool.ai

🐛 Issues: GitHub Issues

NodeTool — Build agents visually, deploy anywhere. Privacy first. ❤️

Alternative AI tools for nodetool

Similar Open Source Tools

layra

LAYRA is the world's first visual-native AI automation engine that sees documents like a human, preserves layout and graphical elements, and executes arbitrarily complex workflows with full Python control. It empowers users to build next-generation intelligent systems with no limits or compromises. Built for Enterprise-Grade deployment, LAYRA features a modern frontend, high-performance backend, decoupled service architecture, visual-native multimodal document understanding, and a powerful workflow engine.

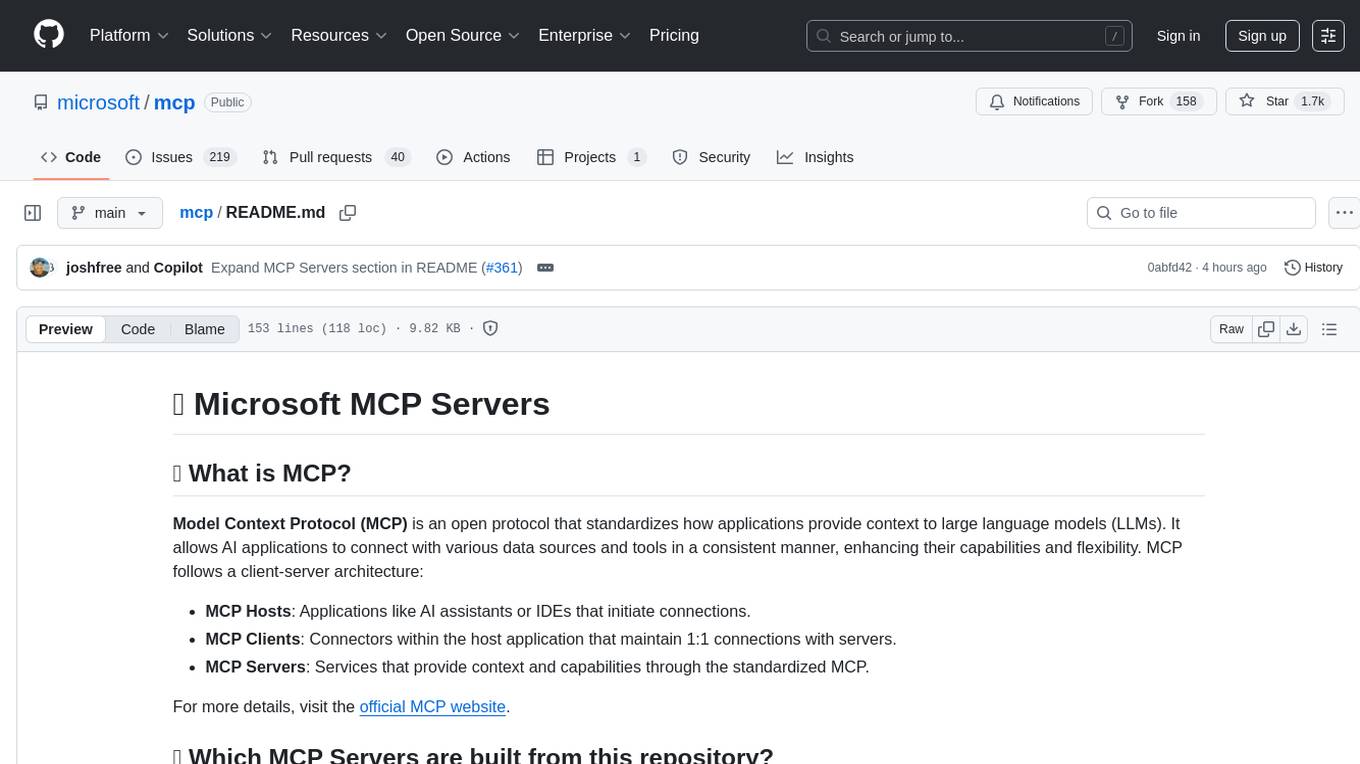

mcp

Model Context Protocol (MCP) is an open protocol that standardizes how applications provide context to large language models (LLMs). It allows AI applications to connect with various data sources and tools in a consistent manner, enhancing their capabilities and flexibility. This repository contains core libraries, test frameworks, engineering systems, pipelines, and tooling for Microsoft MCP Server contributors to unify engineering investments and reduce duplication and divergence. For more details, visit the official MCP website.

opcode

opcode is a powerful desktop application built with Tauri 2 that serves as a command center for interacting with Claude Code. It offers a visual GUI for managing Claude Code sessions, creating custom agents, tracking usage, and more. Users can navigate projects, create specialized AI agents, monitor usage analytics, manage MCP servers, create session checkpoints, edit CLAUDE.md files, and more. The tool bridges the gap between command-line tools and visual experiences, making AI-assisted development more intuitive and productive.

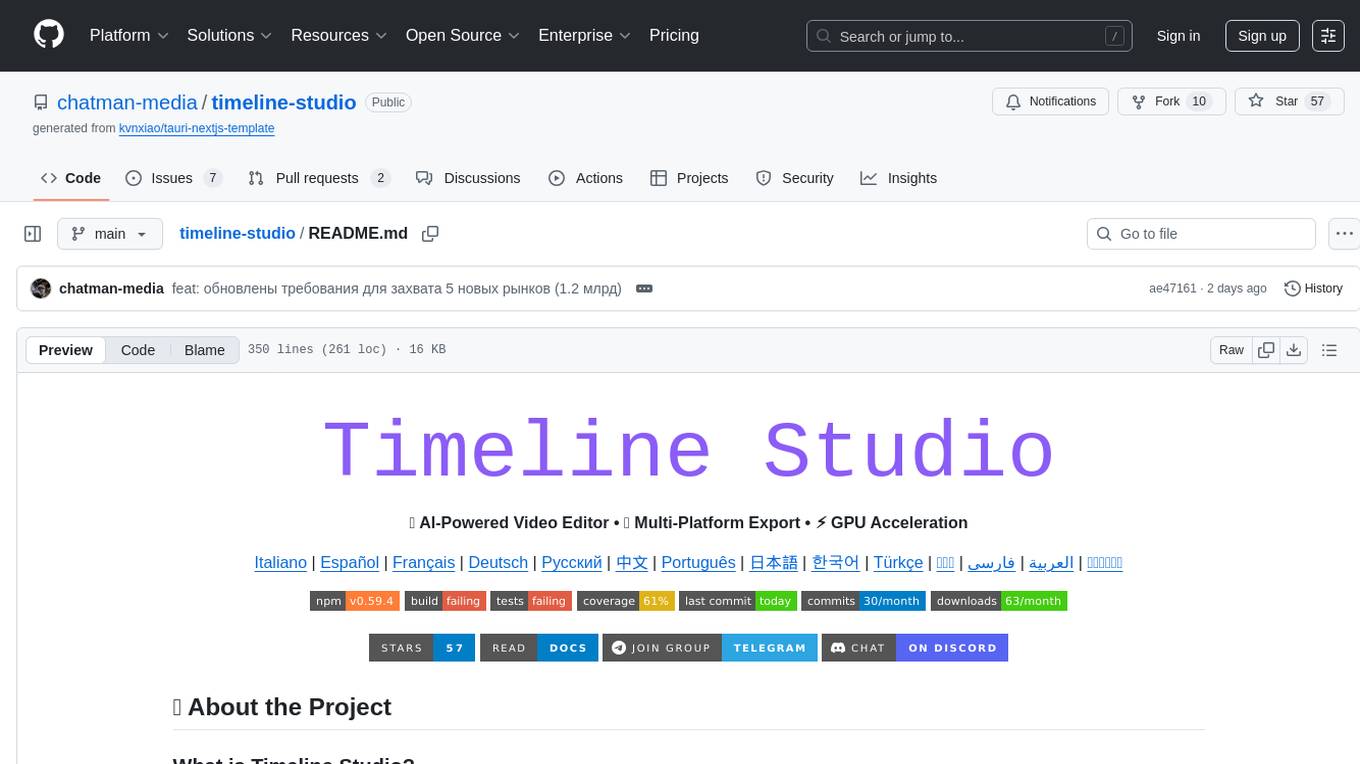

timeline-studio

Timeline Studio is a next-generation professional video editor with AI integration that automates content creation for social media. It combines the power of desktop applications with the convenience of web interfaces. With 257 AI tools, GPU acceleration, plugin system, multi-language interface, and local processing, Timeline Studio offers complete video production automation. Users can create videos for various social media platforms like TikTok, YouTube, Vimeo, Telegram, and Instagram with optimized versions. The tool saves time, understands trends, provides professional quality, and allows for easy feature extension through plugins. Timeline Studio is open source, transparent, and offers significant time savings and quality improvements for video editing tasks.

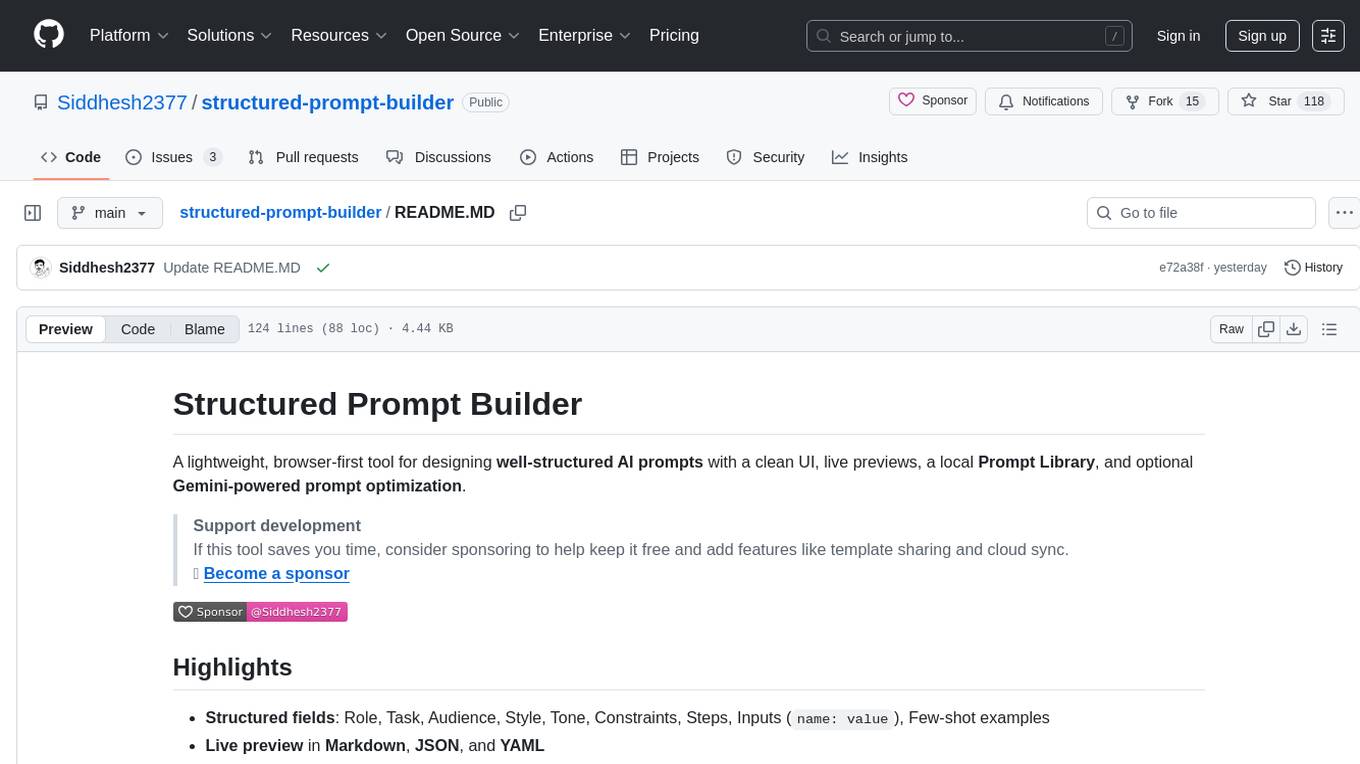

structured-prompt-builder

A lightweight, browser-first tool for designing well-structured AI prompts with a clean UI, live previews, a local Prompt Library, and optional Gemini-powered prompt optimization. It supports structured fields like Role, Task, Audience, Style, Tone, Constraints, Steps, Inputs, and Few-shot examples. Users can copy/download prompts in Markdown, JSON, and YAML formats, and utilize model parameters like Temperature, Top-p, Max tokens, Presence & Frequency penalties. The tool also features a Local Prompt Library for saving, loading, duplicating, and deleting prompts, as well as a Gemini Optimizer for cleaning grammar/clarity without altering the schema. It offers dark/light friendly styles and a focused reading mode for long prompts.

AutoAgents

AutoAgents is a multi-agent framework built in Rust that enables the creation of intelligent, autonomous agents powered by Large Language Models (LLMs) and Ractor. Designed for performance, safety, and scalability, AutoAgents provides a foundation for building complex AI systems that can reason, act, and collaborate. With AutoAgents you can create Cloud Native Agents, Edge Native Agents, and Hybrid Models. It is extensible enough that other ML models can be combined into complex pipelines using the actor framework.

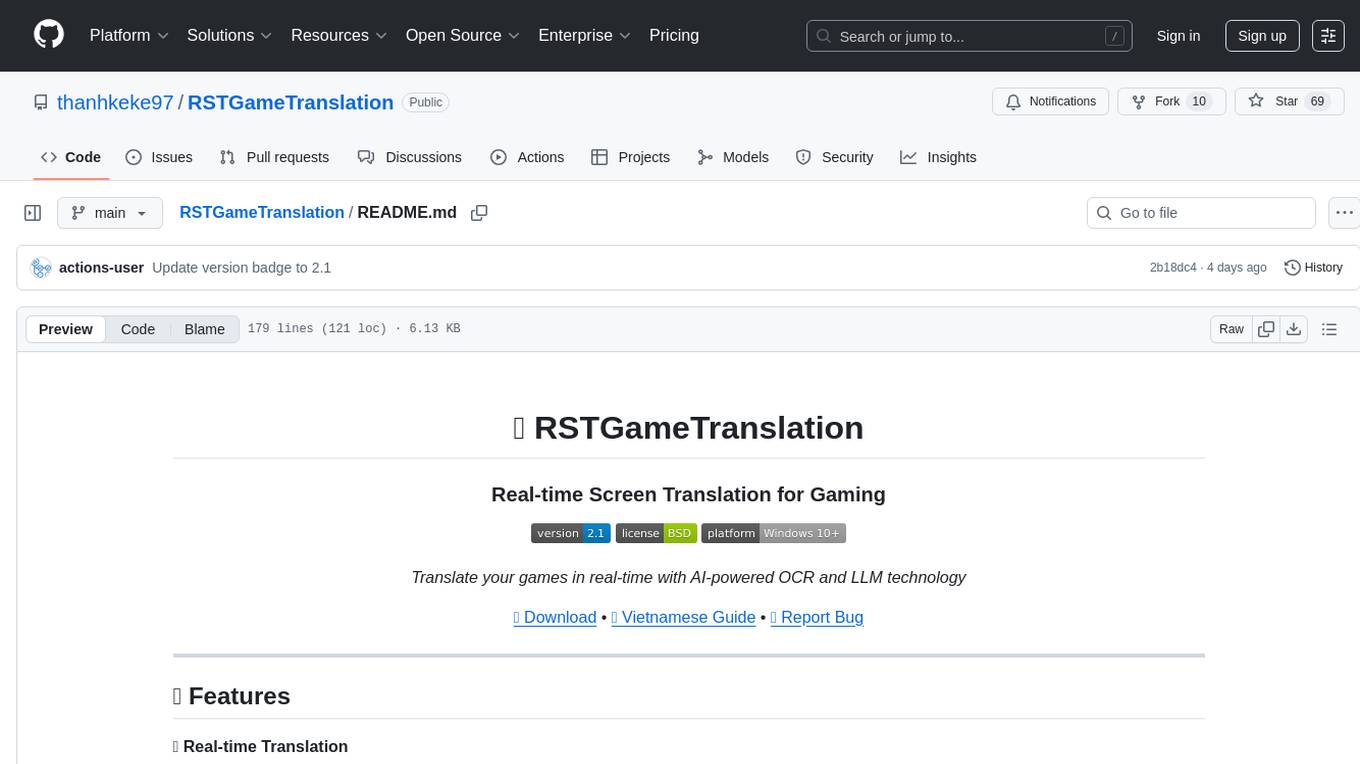

RSTGameTranslation

RSTGameTranslation is a tool designed for translating game text into multiple languages efficiently. It provides a user-friendly interface for game developers to easily manage and localize their game content. With RSTGameTranslation, developers can streamline the translation process, ensuring consistency and accuracy across different language versions of their games. The tool supports various file formats commonly used in game development, making it versatile and adaptable to different project requirements. Whether you are working on a small indie game or a large-scale production, RSTGameTranslation can help you reach a global audience by making localization a seamless and hassle-free experience.

evi-run

evi-run is a powerful, production-ready multi-agent AI system built on Python using the OpenAI Agents SDK. It offers instant deployment, ultimate flexibility, built-in analytics, Telegram integration, and scalable architecture. The system features memory management, knowledge integration, task scheduling, multi-agent orchestration, custom agent creation, deep research, web intelligence, document processing, image generation, DEX analytics, and Solana token swap. It supports flexible usage modes like private, free, and pay mode, with upcoming features including NSFW mode, task scheduler, and automatic limit orders. The technology stack includes Python 3.11, OpenAI Agents SDK, Telegram Bot API, PostgreSQL, Redis, and Docker & Docker Compose for deployment.

aegra

Aegra is a self-hosted AI agent backend platform that provides LangGraph power without vendor lock-in. Built with FastAPI + PostgreSQL, it offers complete control over agent orchestration for teams looking to escape vendor lock-in, meet data sovereignty requirements, enable custom deployments, and optimize costs. Aegra is Agent Protocol compliant and perfect for teams seeking a free, self-hosted alternative to LangGraph Platform with zero lock-in, full control, and compatibility with existing LangGraph Client SDK.

neuropilot

NeuroPilot is an open-source AI-powered education platform that transforms study materials into interactive learning resources. It provides tools like contextual chat, smart notes, flashcards, quizzes, and AI podcasts. Supported by various AI models and embedding providers, it offers features like WebSocket streaming, JSON or vector database support, file-based storage, and configurable multi-provider setup for LLMs and TTS engines. The technology stack includes Node.js, TypeScript, Vite, React, TailwindCSS, JSON database, multiple LLM providers, and Docker for deployment. Users can contribute to the project by integrating AI models, adding mobile app support, improving performance, enhancing accessibility features, and creating documentation and tutorials.

cc-sdd

The cc-sdd repository provides a tool for AI-Driven Development Life Cycle with Spec-Driven Development workflows for Claude Code and Gemini CLI. It includes powerful slash commands, Project Memory for AI learning, structured AI-DLC workflow, Spec-Driven Development methodology, and Kiro IDE compatibility. Ideal for feature development, code reviews, technical planning, and maintaining development standards. The tool supports multiple coding agents, offers an AI-DLC workflow with quality gates, and allows for advanced options like language and OS selection, preview changes, safe updates, and custom specs directory. It integrates AI-Driven Development Life Cycle, Project Memory, Spec-Driven Development, supports cross-platform usage, multi-language support, and safe updates with backup options.

claude-007-agents

Claude Code Agents is an open-source AI agent system designed to enhance development workflows by providing specialized AI agents for orchestration, resilience engineering, and organizational memory. These agents offer specialized expertise across technologies, AI system with organizational memory, and an agent orchestration system. The system includes features such as engineering excellence by design, advanced orchestration system, Task Master integration, live MCP integrations, professional-grade workflows, and organizational intelligence. It is suitable for solo developers, small teams, enterprise teams, and open-source projects. The system requires a one-time bootstrap setup for each project to analyze the tech stack, select optimal agents, create configuration files, set up Task Master integration, and validate system readiness.

astrsk

astrsk is a tool that pushes the boundaries of AI storytelling by offering advanced AI agents, customizable response formatting, and flexible prompt editing for immersive roleplaying experiences. It provides complete AI agent control, a visual flow editor for conversation flows, and ensures 100% local-first data storage. The tool is true cross-platform with support for various AI providers and modern technologies like React, TypeScript, and Tailwind CSS. Coming soon features include cross-device sync, enhanced session customization, and community features.

shimmy

Shimmy is a 5.1MB single-binary local inference server providing OpenAI-compatible endpoints for GGUF models. It offers fast, reliable AI inference with sub-second responses, zero configuration, and automatic port management. Perfect for developers seeking privacy, cost-effectiveness, speed, and easy integration with popular tools like VSCode and Cursor. Shimmy is designed to be invisible infrastructure that simplifies local AI development and deployment.

lighteval

LightEval is a lightweight LLM evaluation suite that Hugging Face has been using internally with the recently released LLM data processing library datatrove and LLM training library nanotron. We're releasing it with the community in the spirit of building in the open. Note that it is still at an early stage, so don't expect 100% stability. In case of problems or questions, feel free to open an issue!

For similar tasks

Awesome-AITools

This repo collects AI-related utilities. Categories: ChatGPT and other closed-source LLMs; AI search engines; open-source LLMs; GPT/LLM applications; LLM training platforms; applications that integrate multiple LLMs; AI agents; writing; programming development; translation; AI conversation or AI voice conversation; image creation; speech recognition; text to speech; voice processing; AI-generated music or sound effects; speech translation; video creation; video content summary; OCR (optical character recognition).

NSMusicS

NSMusicS is a local music application intended to support multiple platforms, with AI capabilities and multimodal features. Its goal is to integrate functions such as artificial intelligence, streaming, music library management, and cross-platform support; it can be understood as similar to Navidrome but with more features. It aims to become a plugin-based application that covers almost all music functions.

biniou

biniou is a self-hosted webui for various GenAI (generative artificial intelligence) tasks. It allows users to generate multimedia content using AI models and chatbots on their own computer, even without a dedicated GPU. The tool can work offline once deployed and required models are downloaded. It offers a wide range of features for text, image, audio, video, and 3D object generation and modification. Users can easily manage the tool through a control panel within the webui, with support for various operating systems and CUDA optimization. biniou is powered by Huggingface and Gradio, providing a cross-platform solution for AI content generation.

generative-ai-js

Generative AI JS is a JavaScript library that provides tools for creating generative art and music using artificial intelligence techniques. It allows users to generate unique and creative content by leveraging machine learning models. The library includes functions for generating images, music, and text based on user input and preferences. With Generative AI JS, users can explore the intersection of art and technology, experiment with different creative processes, and create dynamic and interactive content for various applications.

pictureChange

The 'pictureChange' repository is a plugin that supports image processing using Baidu AI, stable diffusion webui, and suno music composition AI. It also allows for file summarization and image summarization using AI. The plugin supports various stable diffusion models, administrator control over group chat features, concurrent control, and custom templates for image and text generation. It can be deployed on WeChat enterprise accounts, personal accounts, and public accounts.

Generative-AI-Indepth-Basic-to-Advance

Generative AI Indepth Basic to Advance is a repository focused on providing tutorials and resources related to generative artificial intelligence. The repository covers a wide range of topics from basic concepts to advanced techniques in the field of generative AI. Users can find detailed explanations, code examples, and practical demonstrations to help them understand and implement generative AI algorithms. The goal of this repository is to help beginners get started with generative AI and to provide valuable insights for more experienced practitioners.

ai-enhanced-audio-book

The ai-enhanced-audio-book repository contains AI-enhanced audio plugins developed using C++, JUCE, libtorch, RTNeural, and other libraries. It showcases neural networks learning to emulate guitar amplifiers through waveforms. Users can visit the official website for more information and obtain a copy of the book from the publisher Taylor and Francis/ Routledge/ Focal.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.