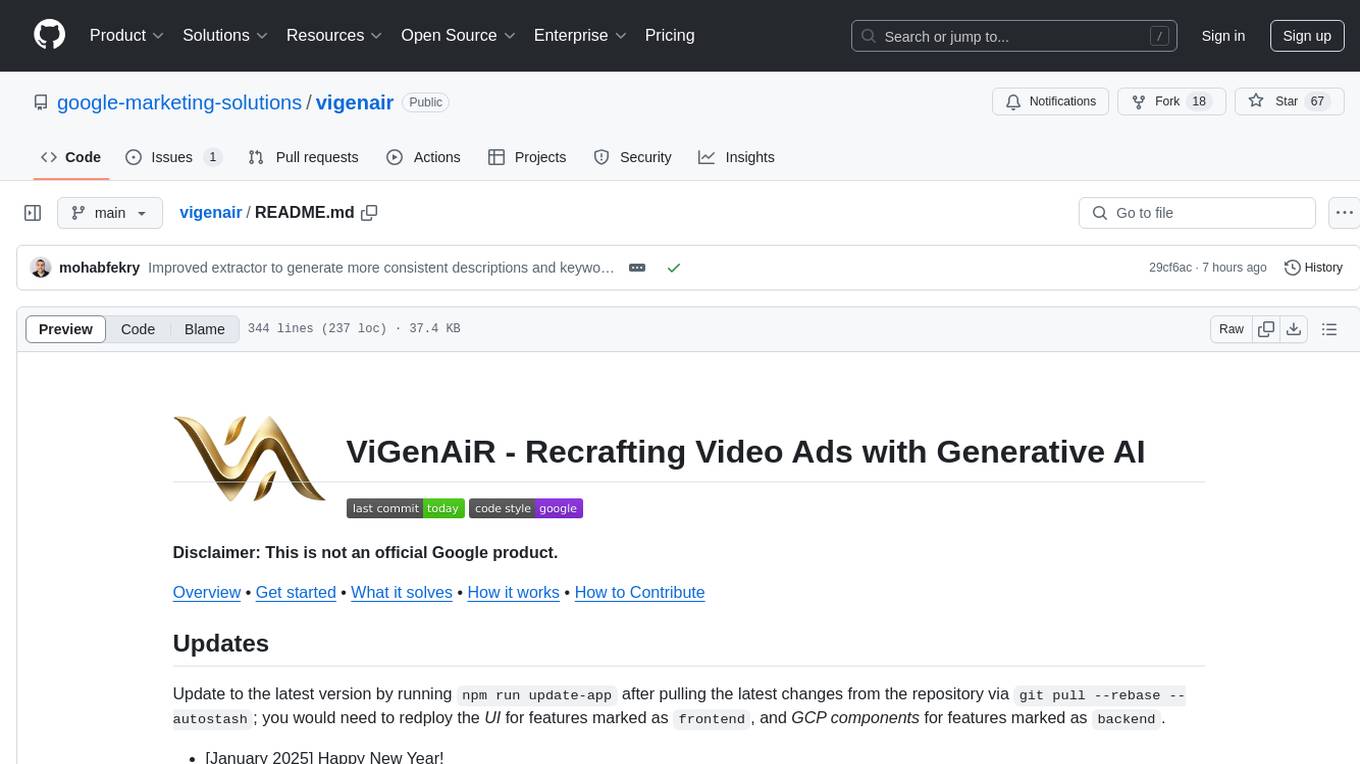

vigenair

Recrafting Video Ads with Generative AI

Stars: 83

ViGenAiR is a tool that harnesses the power of Generative AI models on Google Cloud Platform to automatically transform long-form Video Ads into shorter variants, targeting different audiences. It generates video, image, and text assets for Demand Gen and YouTube video campaigns. Users can steer the model towards generating desired videos, conduct A/B testing, and benefit from various creative features. The tool offers benefits like diverse inventory, compelling video ads, creative excellence, user control, and performance insights. ViGenAiR works by analyzing video content, splitting it into coherent segments, and generating variants following Google's best practices for effective ads.

README:

Disclaimer: This is not an official Google product.

Overview • Get started • What it solves • How it works • How to Contribute

Update to the latest version by running npm run update-app after pulling the latest changes from the repository via git pull --rebase --autostash; you will need to redeploy the UI for features marked as frontend, and GCP components for features marked as backend.

- [February 2025]
  - frontend: You can now choose objective-specific ABCDs (Awareness, Consideration, Action, or Shorts) in the Advanced settings section of variants generation. Read more here.
- [January 2025] Happy New Year!
  - frontend: You can now input your own guidelines for evaluation and scoring of generated variants using the Advanced settings section. Read more here.
  - backend: Added functionality to identify key frames using Gemini and extract them as additional Demand Gen image assets.
  - backend: Improved the extraction process to maintain consistency across the generated descriptions and keywords per segment.
- [December 2024]
  - frontend: Added functionality to generate Demand Gen text assets in a desired target language. Read more here.
  - frontend: Added possibility to load previously rendered videos via a new dropdown. You can now also specify a name for each render job which will be displayed alongside the render timestamp. Read more here.
  - frontend: Added checkbox to select/deselect all segments during variants preview.
  - frontend: During variants generation, users can now preview the total duration of their variant directly as they are selecting/deselecting segments.
  - frontend: The ABCDs evaluation section per variant may now additionally include recommendations on how to improve the video's content to make it more engaging.
  - frontend: Improved user instruction following for the variants generation prompt and simplified the input process; you no longer need a separate checkbox to include or exclude elements - just specify your requirements directly in the prompt.
  - frontend+backend: Added support for Gemini 2.0.
- [November 2024]
  - frontend+backend: General bug fixes and performance improvements.
  - frontend+backend: Added possibility to select the timing for audio and music overlays. Read more here.
- [October 2024]
- [September 2024]
  - backend: You can now process any video of any length or size - even beyond the Google Cloud Video Intelligence API limits of 50 GB size and up to 3h video length.
- [August 2024]
  - Updated the pricing section and Cloud calculator example to use the new (cheaper) pricing for Gemini 1.5 Flash.
  - frontend: You can now manually move the Smart Framing crop area to better capture the point of interest. Read more here.
  - frontend: You can now share a page of the Web App containing your rendered videos and all associated image & text assets via a dedicated link. Read more here.
  - frontend+backend: Performance improvements for processing videos that are 10 minutes or longer.
- [July 2024]
  - frontend+backend: We now render non-blurred vertical and square formats by dynamically framing the most prominent part of the video. Read more here.
  - frontend+backend: You can now reorder segments by dragging & dropping them during the variants preview. Read more here.
  - frontend+backend: The UI now supports upload and processing of all video MIME types supported by Gemini.
  - backend: The Demand Gen text assets generation prompt has been adjusted to better adhere to the "Punctuation & Symbols" policy.
- [June 2024]
  - frontend: Enhanced file upload process to support >20MB files and up to browser-specific limits (~2-4GB).
  - frontend+backend: Improved variants generation and Demand Gen text assets generation prompt.

- [May 2024]: Launch! 🚀

ViGenAiR (pronounced vision-air) harnesses the power of multimodal Generative AI models on Google Cloud Platform (GCP) to automatically transform long-form Video Ads into shorter variants, in multiple ad formats, targeting different audiences. It is your AI-powered creative partner, generating video, image and text assets to power Demand Gen and YouTube video campaigns. ViGenAiR is an acronym for Video Generation via Ads Recrafting, and is more colloquially referred to as Vigenair. Check out the tool's sizzle reel on YouTube by clicking on the image below:

Note: Looking to take action on your Demand Gen insights and recommendations? Try Demand Gen Pulse, a Looker Studio Dashboard that gives you a single source of truth for your Demand Gen campaigns across accounts. It surfaces creative best practices and flags when they are not adopted, and provides additional insights including audience performance and conversion health.

Please make sure you have fulfilled all prerequisites mentioned under Requirements first.

- Make sure your system has an up-to-date installation of Node.js and npm.
- Install clasp by running `npm install @google/clasp -g`, then login via `clasp login`.
- Navigate to the Apps Script Settings page and enable the Apps Script API.
- Make sure your system has an up-to-date installation of the gcloud CLI, then login via `gcloud auth login`.
- Make sure your system has an up-to-date installation of `git` and use it to clone this repository: `git clone https://github.com/google-marketing-solutions/vigenair`.
- Navigate to the directory where the source code lives: `cd vigenair`.
- Run `npm start`:
  - First, enter your GCP Project ID.
  - Then select whether you would like to deploy GCP components (defaults to `Yes`) and the UI (also defaults to `Yes`).
    - When deploying GCP components, you will be prompted to enter an optional Cloud Function region (defaults to `us-central1`) and an optional GCS location (defaults to `us`).
    - When deploying the UI, you will be asked if you are a Google Workspace user and if you want others in your Workspace domain to access your deployed web app (defaults to `No`). By default, the web app is only accessible by you, and that is controlled by the web app access settings in the project's manifest file, which defaults to `MYSELF`. If you answer `Yes` here, this value will be changed to `DOMAIN` to allow other individuals within your organisation to access the web app without having to deploy it themselves.

The npm start script will then proceed to perform the deployments you requested (GCP, UI, or both), where GCP is deployed first, followed by the UI. For GCP, the script will first create a bucket named <gcp_project_id>-vigenair (if it doesn't already exist), then enable all necessary Cloud APIs and set up the right access roles, before finally deploying the vigenair Cloud Function to your Cloud project. The script will then deploy the Angular UI web app to a new Apps Script project, outputting the URL of the web app at the end of the deployment process, which you can use to run the app.

See How Vigenair Works for more details on the different components of the solution.

Note: If using a completely new GCP project with no prior deployments of Cloud Run / Cloud Functions, you may receive Eventarc permission denied errors when deploying the `vigenair` Cloud Function for the very first time. Please wait a few minutes (up to seven) for all necessary permissions to propagate before retrying the `npm start` command.

The npm start and npm run update-app scripts manage deployments for you; a new deployment is always created and existing ones get archived, so that the version of the web app you use has the latest changes from your local copy of this repository. If you would like to manually manage deployments, you may do so by navigating to the Apps Script home page, locating and selecting the ViGenAiR project, then managing deployments via the Deploy button/dropdown in the top-right corner of the page.

You need the following to use Vigenair:

- Google account: required to access the Vigenair web app.

- GCP project

- All users running Vigenair must be granted the Vertex AI User and the Storage Object User roles on the associated GCP project.

The Vigenair setup and deployment script will create the following components:

- A Google Cloud Storage (GCS) bucket named `<gcp_project_id>-vigenair`
- A Cloud Function (2nd gen) named `vigenair` that fulfills both the Extractor and Combiner services. Refer to deploy.sh for specs.
- An Apps Script deployment for the frontend web app.

If you will also be deploying Vigenair, you need to have the following additional roles on the associated GCP project:

- `Storage Admin` for the entire project OR `Storage Legacy Bucket Writer` on the `<gcp_project_id>-vigenair` bucket. See IAM Roles for Cloud Storage for more information.
- `Cloud Functions Developer` to deploy and manage Cloud Functions. See IAM Roles for Cloud Functions for more information.
- `Project IAM Admin` to be able to run the commands that set up roles and policy bindings in the deployment script. See IAM access control for more information.

Current Video Ads creative solutions, both within YouTube / Google Ads as well as open source, primarily focus on 4 of the 5 keys to effective advertising - Brand, Targeting, Reach and Recency. Those 4 pillars contribute to only ~50% of the potential marketing ROI, with the 5th pillar - Creative - capturing a whopping ~50% all on its own.

Vigenair focuses on the Creative pillar to help potentially unlock ~50% ROI while solving a huge pain point for most advertisers: the generation of high-quality video assets in different durations and Video Ad formats, powered by Google's multimodal Generative AI - Gemini.

- Inventory: Horizontal, vertical and square Video assets in different durations allow advertisers to tap into virtually ALL Google-owned sources of inventory.

- Campaigns: Shorter, more compelling Video Ads that still capture the meaning and storyline of their original ads - ideal for Social and Awareness/Consideration campaigns.

- Creative Excellence: Coherent Videos (e.g. dialogue doesn't get cut mid-scene, videos don't end abruptly, etc.) that follow Google's best practices for effective video ads, including dynamic square and vertical aspect ratio framing, and (coming soon) creative direction rules for camera angles and scene cutting.

- User Control: Users can steer the model towards generating their desired videos (via prompts and/or manual scene selection).

- Performance: Built-in A/B testing provides a basis for automatically identifying the best variants tailored to the advertiser's target audiences.

Vigenair's frontend is an Angular Progressive Web App (PWA) hosted on Google Apps Script and accessible via a web app deployment. Users must authenticate with a Google account in order to use the Vigenair web app. Backend services are hosted on Cloud Functions 2nd gen, and are triggered via Cloud Storage (GCS). Decoupling the UI and core services via GCS significantly reduces authentication overhead and effectively implements separation of concerns between the frontend and backend layers.

Vigenair uses Gemini on Vertex AI to holistically understand and analyse the content and storyline of a Video Ad, automatically splitting it into coherent audio/video segments that are then used to generate different shorter variants and Ad formats. Vigenair analyses the spoken dialogue in a video (if present), the visually changing shots, on-screen entities such as any identified logos and/or text, and background music and effects. It then uses all of this information to combine sections of the video together that are coherent; segments that won't be cut mid-dialogue nor mid-scene, and that are semantically and contextually related to one another. These coherent A/V segments serve as the building blocks for both GenAI and user-driven recombination.

The generated variants may follow the original Ad's storyline - and thus serve as mid-funnel reminder campaigns of the original Ad for Awareness and/or Consideration - or introduce whole new storylines altogether, all while following Google's best practices for effective video ads.

- Vigenair will not work well for all types of videos. Try it out with an open mind :)

- Users cannot delete previously analysed videos via the UI; they must do this directly in GCS.

- The current audio analysis and understanding tech is unable to differentiate between voice-over and any singing voices in the video. The Analyse voice-over checkbox in the UI's Video selection card can be used to counteract this; uncheck the checkbox for videos where there is no voice-over, rather just background song and/or effects.

- When generating video variants, segments selected by the LLM might not follow user prompt instructions, and the overall variant might not follow the desired target duration. It is recommended to review and potentially modify the preselected segments of the variant before adding it to the render queue.

- When previewing generated video variants, audio overlay settings are not applied; they are only available for fully rendered variants.

- Resizing text overlays / supers when cropping videos into vertical and square formats is currently not supported.

The diagram below shows how Vigenair's components interact and communicate with one another.

Users upload or select videos they have previously analysed via the UI's Video selection card (step #2 is skipped for already analysed videos).

- The Load existing video dropdown pulls all processed videos from the associated GCS bucket when the page loads, and updates the list whenever users interact with the dropdown.

- The My videos only toggle filters the list to only those videos uploaded by the current user - this is particularly relevant for Google Workspace users, where the associated GCP project and GCS bucket are shared among users within the same organisation.

- The Analyse voice-over checkbox, which is checked by default, can be used to skip the process of transcribing and analysing any voice-over or speech in the video. Uncheck this checkbox for videos where there is only background music / song or effects.

- Uploads get stored in GCS in separate folders following this format: `<input_video_filename>(--n)--<timestamp>--<encoded_user_id>`.
  - `input_video_filename`: The name of the video file as it was stored on the user's file system.
  - Optional `--n` suffix to the filename: For those videos where the Analyse voice-over checkbox was unchecked.
  - `timestamp`: Current timestamp in microseconds (e.g. 1234567890123)
  - `encoded_user_id`: Base64 encoded version of the user's email - if available - otherwise Apps Script's temp User ID.
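The folder naming format above can be sketched in a few lines of Python. This is only an illustration, not Vigenair's actual implementation: the function names `build_folder_name` and `parse_folder_name`, the `VideoFolder` tuple and the use of the standard Base64 alphabet are all assumptions made for the example.

```python
import base64
from typing import NamedTuple


class VideoFolder(NamedTuple):
    """Parsed parts of a video folder name (hypothetical helper type)."""
    filename: str
    analyse_voice_over: bool
    timestamp: int
    user_id: str


def build_folder_name(filename: str, timestamp: int, user_id: str,
                      analyse_voice_over: bool = True) -> str:
    """Builds '<input_video_filename>(--n)--<timestamp>--<encoded_user_id>'."""
    # Assumption: standard Base64 alphabet, which never contains '-'.
    encoded = base64.b64encode(user_id.encode("utf-8")).decode("ascii")
    suffix = "" if analyse_voice_over else "--n"
    return f"{filename}{suffix}--{timestamp}--{encoded}"


def parse_folder_name(folder: str) -> VideoFolder:
    """Splits a folder name back into its parts, reading from the right."""
    rest, timestamp, encoded = folder.rsplit("--", 2)
    analyse_voice_over = not rest.endswith("--n")
    filename = rest if analyse_voice_over else rest[: -len("--n")]
    user_id = base64.b64decode(encoded).decode("utf-8")
    return VideoFolder(filename, analyse_voice_over, int(timestamp), user_id)
```

Splitting from the right keeps the sketch tolerant of `--` inside the original filename. A file whose own name ends in `--n` would still be misread as having voice-over analysis disabled.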

New uploads into GCS trigger the Extractor service Cloud Function, which extracts all video information and stores the results on GCS (input.vtt, analysis.json and data.json).

- First, background music and voice-over (if available) are separated via the spleeter library, and the voice-over is transcribed.
- Transcription is done via the faster-whisper library and the output is stored in an `input.vtt` file, along with a `language.txt` file containing the video's primary language, in the same folder as the input video.
- Video analysis is done via the Cloud Video Intelligence API, where visual shots, detected objects - with tracking, labels, people and faces, and recognised logos and any on-screen text within the input video are extracted. The output is stored in an `analysis.json` file in the same folder as the input video.
- Finally, coherent audio/video segments are created using the transcription and video intelligence outputs and then cut into individual video files and stored on GCS in an `av_segments_cuts` subfolder under the root video folder. These cuts are then annotated via Gemini, which provides a description and a set of associated keywords / topics per segment. The fully annotated segments (including all information from the Video Intelligence API) are then compiled into a `data.json` file that is stored in the same folder as the input video.

The UI continuously queries GCS for updates while showing a preview of the uploaded video.

- Once the `input.vtt` is available, a transcription track is embedded onto the video preview.
- Once the `analysis.json` is available, object tracking results are displayed as bounding boxes directly on the video preview. These can be toggled on/off via the first button in the top-left toggle group - which is set to on by default.
- Vertical and square format previews are also generated, and can be displayed via the second and third buttons of the toggle group, respectively. The previews are generated by dynamically moving the vertical/square crop area to capture the most prominent element in each frame.
- Smart framing is controlled via weights that can be modified via the fourth button of the toggle group to increase or decrease the prominence score of each element, and therefore skew the crop area towards it. You can regenerate the crop area previews via the button in the settings dialog as shown below.
- You can also manually move the crop area in case the smart framing weights were insufficient in capturing your desired point of interest. This is possible by doing the following:
  - Select the desired format (square / vertical) from the toggle group and play the video.
  - Pause the video at the point where you would like to manually move the crop area.
  - Click on the "Move crop area" button that will appear above the video once paused.
  - Drag the crop area left or right as desired.
  - Save the new position of the crop area by clicking on the "Save adjusted crop area" button.
  - The crop area will be adjusted automatically for all preceding and subsequent video frames that had the same undesired position.
- Once the `data.json` is available, the extracted A/V Segments are displayed along with a set of user controls.
- Clicking on the link icon in the top-right corner of the "Video editing" panel will open the Cloud Storage browser UI and navigate to the associated video folder.
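To make the smart framing weights described above more concrete, here is a minimal sketch of how a weighted prominence score could steer a crop window. The scoring formula (bounding-box area multiplied by a per-type weight), the `Element` type and the element labels are assumptions for illustration; the README does not specify how Vigenair computes prominence.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Element:
    """A detected on-screen element with a normalised bounding box (hypothetical)."""
    kind: str      # e.g. "face", "logo", "text" (illustrative labels)
    x: float       # left edge in [0, 1]
    y: float       # top edge in [0, 1]
    width: float   # fraction of frame width
    height: float  # fraction of frame height


def crop_left_edge(elements: List[Element], weights: Dict[str, float],
                   crop_width: float) -> float:
    """Returns the normalised left edge of a crop window of the given width."""
    if not elements:
        return (1.0 - crop_width) / 2  # nothing detected: centre the crop

    def prominence(element: Element) -> float:
        # Assumed score: larger and more heavily weighted elements win.
        return element.width * element.height * weights.get(element.kind, 1.0)

    best = max(elements, key=prominence)
    centre = best.x + best.width / 2
    left = centre - crop_width / 2
    # Keep the crop window inside the frame.
    return min(max(left, 0.0), 1.0 - crop_width)
```

For a 9:16 crop of a 16:9 frame, `crop_width` would be `81 / 256` of the frame width. Raising the weight of one element type moves the crop towards elements of that type, even when a larger element of another type is present.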

Users are now ready for combination. They can view the A/V segments and generate / iterate on variants via a preview while modifying user controls, adding desired variants to the render queue.

- A/V segments are displayed in two ways:
  - In the video preview view: A single frame of each segment, cut mid-segment, is displayed in a filmstrip and scrolls into view while the user is previewing the video, indicating the segment that is currently playing. Clicking on a segment will also automatically seek to it in the video preview.
  - A detailed segments list view: This shows additional information per segment: the segment's duration, description and extracted keywords.
- User Controls for video variant generation:
  - Users are presented with an optional prompt which they can use to steer the output towards focusing on - or excluding - certain aspects, like certain entities or topics in the input video, or target audience of the resulting video variant.
  - Users may also use the Target duration slider to set their desired target duration.
  - The expandable Advanced settings section (collapsed by default) contains a dropdown to choose the YouTube ABCDs evaluation objective (Awareness, Consideration, Action, or Shorts), along with an additional Evaluation prompt that users can optionally modify. This prompt contains the criteria upon which the generated variant should be evaluated, which defaults to the Awareness ABCDs. Users can input details about their own brand and creative guidelines here, either alongside or instead of the ABCDs, and may click the reset button next to the prompt to reset the input back to the default ABCDs value. We recommend using Markdown syntax to emphasize information and provide a more concise structure for Gemini.
  - Users can then click `Generate` to generate variants accordingly, which will query Gemini with a detailed script of the video to generate potential variants that fulfill the optional user-provided prompts and target duration.
- Generated variants are displayed in tabs - one per tab - and both the video preview and segments list views are updated to preselect the A/V segments of the variant currently being viewed. Clicking on the video's play button in the video preview mode will preview only those preselected segments.

Each variant has the following information:

- A title which is displayed in the variant's tab.

- A duration, which is also displayed in the variant's tab.

- The total duration is also displayed below the segments list, so that users can preview the variant's duration as they select/deselect segments.

- The list of A/V segments that make up the variant.

- A description of the variant and what is happening in it.

- An LLM-generated Score, from 1-5, representing how well the variant adheres to the input rules and guidelines. Users are strongly encouraged to update this section of the generation prompt in config.ts to refer to their own brand voice and creative guidelines.

- Reasoning for the provided score, with examples of adherence / non-adherence.

Variants are sorted in descending order, first by the proximity of their duration to the user's target duration, and then by score for variants with the same duration.
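The ordering described above amounts to a two-level sort key. The sketch below assumes a simple `Variant` record with a duration in seconds and a numeric score; the type and field names are illustrative and are not taken from the Vigenair source.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Variant:
    """Illustrative variant record (field names are assumptions)."""
    title: str
    duration: float  # seconds
    score: float     # LLM-generated score from 1 to 5


def sort_variants(variants: List[Variant], target_duration: float) -> List[Variant]:
    """Closest to the target duration first; ties broken by higher score."""
    return sorted(
        variants,
        key=lambda v: (abs(v.duration - target_duration), -v.score),
    )
```

Negating the score inside the key sorts it in descending order while the distance to the target duration is sorted ascending, so the best match ends up first.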

-

Vigenair supports different rendering settings for the audio of the generated videos. The image below describes the supported options and how they differ:

Furthermore, if Music or All audio overlay is selected, the user can additionally decide how the overlay should be done via one of the following options:

- Variant start (default): Audio will start from the beginning of the first segment in the variant.

- Video start: Audio will start from the beginning of the original video, regardless of when the variant starts.

- Video end: Audio will end with the ending of the original video, regardless of when the variant ends.

- Variant end: Audio will end with the ending of the last segment in the variant.

- Whether to fade out audio at the end of generated videos. When selected, videos will be faded out for `1s` (configured by the `CONFIG_DEFAULT_FADE_OUT_DURATION` environment variable for the Combiner service).
- Whether to generate Demand Gen campaign text and image assets alongside the variant or not.
- Which formats (horizontal, vertical and square) assets to render. Defaults to rendering horizontal assets only.
- Users can also select the individual segments that each variant is composed of. This selection is available in both the video preview and segments list views. Please note that switching between variant tabs will clear any changes to the selection.
- Users may also change the order of segments via the Reorder segments toggle, allowing the preview and rendering of more advanced and customised variations. Please note that reordering segments will reset the variant playback, and switching the toggle off will restore the original order.
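The four audio overlay timing options listed above (Variant start, Video start, Video end and Variant end) can be read as choosing which slice of the original audio track is laid over the variant. The sketch below follows that reading; it is an interpretation rather than the Combiner's actual logic, and `OverlayType` and `overlay_window` are hypothetical names.

```python
from enum import Enum
from typing import List, Tuple


class OverlayType(Enum):
    VARIANT_START = "variant_start"
    VIDEO_START = "video_start"
    VIDEO_END = "video_end"
    VARIANT_END = "variant_end"


def overlay_window(segments: List[Tuple[float, float]], video_duration: float,
                   overlay: OverlayType) -> Tuple[float, float]:
    """Returns (start, end) in seconds of the original audio to overlay.

    `segments` holds the (start, end) times of the variant's segments
    in playback order.
    """
    variant_duration = sum(end - start for start, end in segments)
    if overlay is OverlayType.VARIANT_START:
        start = segments[0][0]                    # start of first segment
    elif overlay is OverlayType.VIDEO_START:
        start = 0.0                               # start of original video
    elif overlay is OverlayType.VIDEO_END:
        start = video_duration - variant_duration  # end with original video
    else:
        start = segments[-1][1] - variant_duration  # end with last segment
    start = max(start, 0.0)
    return start, min(start + variant_duration, video_duration)
```

For a 60s video and a variant built from the segments 10-15s and 40-50s, the Variant start option would overlay the audio from 10s to 25s, while Video end would overlay 45s to 60s.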

Users may also choose to skip the variants generation and rendering process and directly display previously rendered videos using the "Load rendered videos" dropdown.

The values displayed in the dropdown represent the optional custom name that the user may have provided when building their render queue (see Rendering), along with the date and time of rendering (which is added automatically, so users don't need to manually input this information).

Desired variants can be added to the render queue along with their associated render settings:

- Each variant added to the render queue will be presented as a card in a sidebar that will open from the right-hand-side of the page. The card contains the thumbnail of the variant's first segment, along with the variant title, list of segments contained within it, its duration and chosen render settings (audio settings including fade out, Demand Gen assets choice and desired formats).

- Variants where the user had manually modified the preselected segments will be displayed with the score greyed out and with the suffix `(modified)` appended to the variant's title.

- Users cannot add the same variant with the exact same segment selection and rendering settings more than once to the render queue.

- Users can always remove variants from the render queue which they no longer desire via the dedicated button per card.

- Clicking on a variant in the render queue will load its settings into the video preview and segments list views, allowing users to preview the variant once more.
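The rule that the same variant cannot be queued twice with identical segments and settings can be sketched with a value-comparable record. `RenderItem`, `RenderQueue` and their fields are hypothetical names chosen for this example; the actual UI is an Angular app and may track queue entries differently.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple


@dataclass(frozen=True)
class RenderItem:
    """One render queue entry; equality covers segments and all settings."""
    variant_id: int
    segments: Tuple[int, ...]   # selected segment ids in playback order
    audio: str                  # chosen audio setting
    fade_out: bool
    demand_gen_assets: bool
    formats: FrozenSet[str]     # e.g. {"horizontal", "vertical"}


class RenderQueue:
    def __init__(self) -> None:
        self._items: List[RenderItem] = []

    def add(self, item: RenderItem) -> bool:
        """Adds the item unless an identical one is already queued."""
        if item in self._items:
            return False
        self._items.append(item)
        return True

    def remove(self, item: RenderItem) -> None:
        self._items.remove(item)

    def __len__(self) -> int:
        return len(self._items)
```

Because the dataclass is frozen and compared by value, changing any single setting (for example adding the vertical format) produces a distinct entry that can be queued alongside the original.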

Clicking on the Render button inside the render queue will render the variants in their desired formats and settings via the Combiner service Cloud Function. This writes a render.json file to GCS, which serves as the input to the service, and the service outputs a combos.json file. Both files, along with the rendered variants, are stored in a <timestamp>-combos subfolder below the root video folder. Users may also optionally specify a name for the render queue, which will be displayed in the "Load rendered videos" dropdown (see Loading Previously Rendered Videos).

The UI continuously queries GCS for updates. Once a combos.json is available, the final videos - in their different formats and along with all associated assets - will be displayed. Users can preview the final videos and select the ones they would like to upload into Google Ads / YouTube. Users may also share a page of the Web App containing the rendered videos and associated image & text assets via the dedicated "share" icon in the top-right corner of the "Rendered videos" panel. Finally, users may also regenerate Demand Gen text assets, either in bulk or individually, using the auto-detected language of the video or by specifying a desired target language.

Note: Due to an ongoing Apps Script issue, users viewing the application via "share" links must be granted the `Editor` role on the underlying Apps Script project. This can be done by navigating to the Apps Script home page, locating the `ViGenAiR` script and using the more vertical menu to `Share`.

Users are priced according to their usage of Google (Cloud and Workspace) services as detailed below. In summary, processing 1 min of video and generating 5 variants would cost around $2 to $6 based on your Cloud Functions configuration. You may modify the multimodal and language models used by Vigenair by modifying the CONFIG_VISION_MODEL and CONFIG_TEXT_MODEL environment variables respectively for the Cloud Function in deploy.sh, as well as the CONFIG.vertexAi.model property in config.ts for the frontend web app. The most cost-effective setup is using Gemini 1.5 Flash (default), for both multimodal and text-only use cases, also considering quota limits per model.

For more information, refer to this detailed Cloud pricing calculator example using Gemini 1.5 Flash. The charges break down as follows:

- Apps Script (where the UI is hosted): Free of charge. Apps Script services have daily quotas and the one for URL Fetch calls is relevant for Vigenair. Assuming a rule of thumb of 100 URL Fetch calls per video, you would be able to process 200 videos per day as a standard user, and 1000 videos per day as a Workspace user.

- Cloud Storage: The storage of input and generated videos, along with intermediate media files (voice-over, background music, segment thumbnails, different JSON files for the UI, etc.). Pricing varies depending on region and duration, and you can assume a rule of thumb of 100MB per 1 min of video, which would fall within the Cloud free tier. Refer to the full pricing guide for more information.

- Cloud Functions (which includes Cloud Build, Eventarc and Artifact Registry): The processing, or up, time only; idle-time won't be billed, unless minimum instances are configured. Weigh the impact of cold starts vs. potential cost and decide whether to set `min-instances=1` in deploy.sh accordingly. Vigenair's cloud function is triggered for any file upload into the GCS bucket, and so you can assume a threshold of max 100 invocations and 5 min of processing time per 1 min of video, which would cost around $1.3 with `8 GiB (2 vCPU)`, $2.6 with `16 GiB (4 vCPU)`, and $5.3 with `32 GiB (8 vCPU)`. Refer to the full pricing guide for more information.

- Vertex AI generative models:

- Text: The language model is queried with the entire generation prompt, which includes a script of the video covering all its segments and potentially a user-defined section. The output is a list containing one or more variants with all their information. This process is repeated in case of errors, and users can generate variants as often as they want. Assuming an average of 15 requests per video - continuing with the assumption that the video is 1 min long - you would be charged around $0.5.

- Multimodal: The multimodal model is used to annotate every segment extracted from the video, and so the pricing varies significantly depending on the number and length of the extracted segments, which in turn vary heavily according to the content of the video; a 1 min video may produce 10 segments while another may produce 20. Assuming a threshold of max 25 segments, avg. 2.5s each, per 1 min of video, you would be charged around $0.2.
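The per-minute figures above add up to the "$2 to $6" summary, as the short calculation below shows. The dollar amounts and the rule of thumb of 100 URL Fetch calls per video are taken from the breakdown; the function names, the dictionary keys and the assumption that cost scales linearly with video length are illustrative only.

```python
# Approximate cost in USD per 1 min of video, from the breakdown above.
CLOUD_FUNCTION_COST = {"8GiB": 1.3, "16GiB": 2.6, "32GiB": 5.3}
TEXT_MODEL_COST = 0.5        # ~15 text requests per video
MULTIMODAL_MODEL_COST = 0.2  # max 25 segments of ~2.5s each


def estimate_cost(memory: str, video_minutes: float = 1.0) -> float:
    """Rough estimate; assumes cost grows linearly with video length."""
    per_minute = (CLOUD_FUNCTION_COST[memory]
                  + TEXT_MODEL_COST
                  + MULTIMODAL_MODEL_COST)
    return round(per_minute * video_minutes, 2)


def videos_per_day(url_fetch_quota: int, calls_per_video: int = 100) -> int:
    """Number of videos that fit into a daily Apps Script URL Fetch quota."""
    return url_fetch_quota // calls_per_video


if __name__ == "__main__":
    for memory in CLOUD_FUNCTION_COST:
        print(memory, estimate_cost(memory))
```

With these inputs the estimate comes to about $2.0, $3.3 and $6.0 for the three Cloud Functions configurations. Cloud Storage and Apps Script are left out because the breakdown treats them as free at this scale.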

Beyond the information outlined in our Contributing Guide, you would need to follow these additional steps to build Vigenair locally and modify the source code:

- Make sure your system has an up-to-date installation of the gcloud CLI.
- Run `gcloud auth login` and complete the authentication flow.
- Navigate to the directory where the source code lives and run `cd service`
- Run `bash deploy.sh`.

- Make sure your system has an up-to-date installation of Node.js and npm.

- Install clasp by running `npm install @google/clasp -g`, then login via `clasp login`.
- Navigate to the Apps Script Settings page and enable the Apps Script API.
- Navigate to the directory where the source code lives and run `cd ui`
- Run `npm install` to install dependencies.
- Run `npm run deploy` to build, test and deploy (via clasp) all UI and Apps Script code to the target Apps Script project.
- Navigate to the directory where the Angular UI lives: `cd src/ui`
- Run `ng serve` to launch the Angular UI locally with Hot Module Replacement (HMR) during development.


MARS5-TTS

MARS5 is a novel English speech model (TTS) developed by CAMB.AI, featuring a two-stage AR-NAR pipeline with a unique NAR component. The model can generate speech for various scenarios like sports commentary and anime with just 5 seconds of audio and a text snippet. It allows steering prosody using punctuation and capitalization in the transcript. Speaker identity is specified using an audio reference file, enabling 'deep clone' for improved quality. The model can be used via torch.hub or HuggingFace, supporting both shallow and deep cloning for inference. Checkpoints are provided for AR and NAR models, with hardware requirements of 750M+450M params on GPU. Contributions to improve model stability, performance, and reference audio selection are welcome.

nx_open

The `nx_open` repository contains open-source components for the Network Optix Meta Platform, used to build products like Nx Witness Video Management System. It includes source code, specifications, and a Desktop Client. The repository is licensed under Mozilla Public License 2.0. Users can build the Desktop Client and customize it using a zip file. The build environment supports Windows, Linux, and macOS platforms with specific prerequisites. The repository provides scripts for building, signing executable files, and running the Desktop Client. Compatibility with VMS Server versions is crucial, and automatic VMS updates are disabled for the open-source Desktop Client.

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and enhancing collaboration between teams. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, setting project and model configuration, launching and monitoring training jobs, and media upload and prediction. The SDK also includes tutorial-style Jupyter notebooks demonstrating its usage.

amazon-transcribe-live-call-analytics

The Amazon Transcribe Live Call Analytics (LCA) with Agent Assist Sample Solution is designed to help contact centers assess and optimize caller experiences in real time. It leverages Amazon machine learning services like Amazon Transcribe, Amazon Comprehend, and Amazon SageMaker to transcribe and extract insights from contact center audio. The solution provides real-time supervisor and agent assist features, integrates with existing contact centers, and offers a scalable, cost-effective approach to improve customer interactions. The end-to-end architecture includes features like live call transcription, call summarization, AI-powered agent assistance, and real-time analytics. The solution is event-driven, ensuring low latency and seamless processing flow from ingested speech to live webpage updates.

ai-goat

AI Goat is a tool designed to help users learn about AI security through a series of vulnerable LLM CTF challenges. It allows users to run everything locally on their system without the need for sign-ups or cloud fees. The tool focuses on exploring security risks associated with large language models (LLMs) like ChatGPT, providing practical experience for security researchers to understand vulnerabilities and exploitation techniques. AI Goat uses the Vicuna LLM, derived from Meta's LLaMA and ChatGPT's response data, to create challenges that involve prompt injections, insecure output handling, and other LLM security threats. The tool also includes a prebuilt Docker image, ai-base, containing all necessary libraries to run the LLM and challenges, along with an optional CTFd container for challenge management and flag submission.

cluster-toolkit

Cluster Toolkit is an open-source software by Google Cloud for deploying AI/ML and HPC environments on Google Cloud. It allows easy deployment following best practices, with high customization and extensibility. The toolkit includes tutorials, examples, and documentation for various modules designed for AI/ML and HPC use cases.

agentok

Agentok Studio is a visual tool built for AutoGen, a cutting-edge agent framework from Microsoft and various contributors. It offers intuitive visual tools to simplify the construction and management of complex agent-based workflows. Users can create workflows visually as graphs, chat with agents, and share flow templates. The tool is designed to streamline the development process for creators and developers working on next-generation Multi-Agent Applications.

Mapperatorinator

Mapperatorinator is a multi-model framework that uses spectrogram inputs to generate fully featured osu! beatmaps for all gamemodes and assist modding beatmaps. The project aims to automatically generate rankable quality osu! beatmaps from any song with a high degree of customizability. The tool is built upon osuT5 and osu-diffusion, utilizing GPU compute and instances on vast.ai for development. Users can responsibly use AI in their beatmaps with this tool, ensuring disclosure of AI usage. Installation instructions include cloning the repository, creating a virtual environment, and installing dependencies. The tool offers a Web GUI for user-friendly experience and a Command-Line Inference option for advanced configurations. Additionally, an Interactive CLI script is available for terminal-based workflow with guided setup. The tool provides generation tips and features MaiMod, an AI-driven modding tool for osu! beatmaps. Mapperatorinator tokenizes beatmaps, utilizes a model architecture based on HF Transformers Whisper model, and offers multitask training format for conditional generation. The tool ensures seamless long generation, refines coordinates with diffusion, and performs post-processing for improved beatmap quality. Super timing generator enhances timing accuracy, and LoRA fine-tuning allows adaptation to specific styles or gamemodes. The project acknowledges credits and related works in the osu! community.

chronon

Chronon is a platform that simplifies and improves ML workflows by providing a central place to define features, ensuring point-in-time correctness for backfills, simplifying orchestration for batch and streaming pipelines, offering easy endpoints for feature fetching, and guaranteeing and measuring consistency. It offers benefits over other approaches by enabling the use of a broad set of data for training, handling large aggregations and other computationally intensive transformations, and abstracting away the infrastructure complexity of data plumbing.

For similar tasks

VideoLLaMA2

VideoLLaMA 2 is a project focused on advancing spatial-temporal modeling and audio understanding in video-LLMs. It provides tools for multi-choice video QA, open-ended video QA, and video captioning. The project offers a model zoo with different configurations for the visual encoder and language decoder. It includes training and evaluation guides, as well as inference capabilities for video and image processing. The project also features a demo setup for running a video-based Large Language Model web demonstration.

Grounded-Video-LLM

Grounded-VideoLLM is a Video Large Language Model specialized in fine-grained temporal grounding. It excels in tasks such as temporal sentence grounding, dense video captioning, and grounded VideoQA. The model incorporates an additional temporal stream, discrete temporal tokens with specific time knowledge, and a multi-stage training scheme. It shows potential as a versatile video assistant for general video understanding. The repository provides pretrained weights, inference scripts, and datasets for training. Users can run inference queries to get temporal information from videos and train the model from scratch.

For similar jobs

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

Twitter-Insight-LLM

This project enables you to fetch liked tweets from Twitter (using Selenium), save them to JSON and Excel files, and perform initial data analysis and image captioning. This is part of the initial steps for a larger personal project involving Large Language Models (LLMs).

AISuperDomain

Aila Desktop Application is a powerful tool that integrates multiple leading AI models into a single desktop application. It allows users to interact with various AI models simultaneously, providing diverse responses and insights to their inquiries. With its user-friendly interface and customizable features, Aila empowers users to engage with AI seamlessly and efficiently. Whether you're a researcher, student, or professional, Aila can enhance your AI interactions and streamline your workflow.

ChatGPT-On-CS

This project is an intelligent customer service dialogue tool based on a large model. It supports access to platforms such as WeChat, Qianniu, Bilibili, Douyin Enterprise, Douyin, Doudian, Weibo chat, Xiaohongshu professional account operation, Xiaohongshu, and Zhihu. You can choose GPT3.5, GPT4.0, or Lazy Treasure Box (more platforms will be supported in the future). It can process text, voice, and pictures, access external resources such as operating systems and the Internet through plug-ins, and support enterprise AI applications customized on a company's own knowledge base.