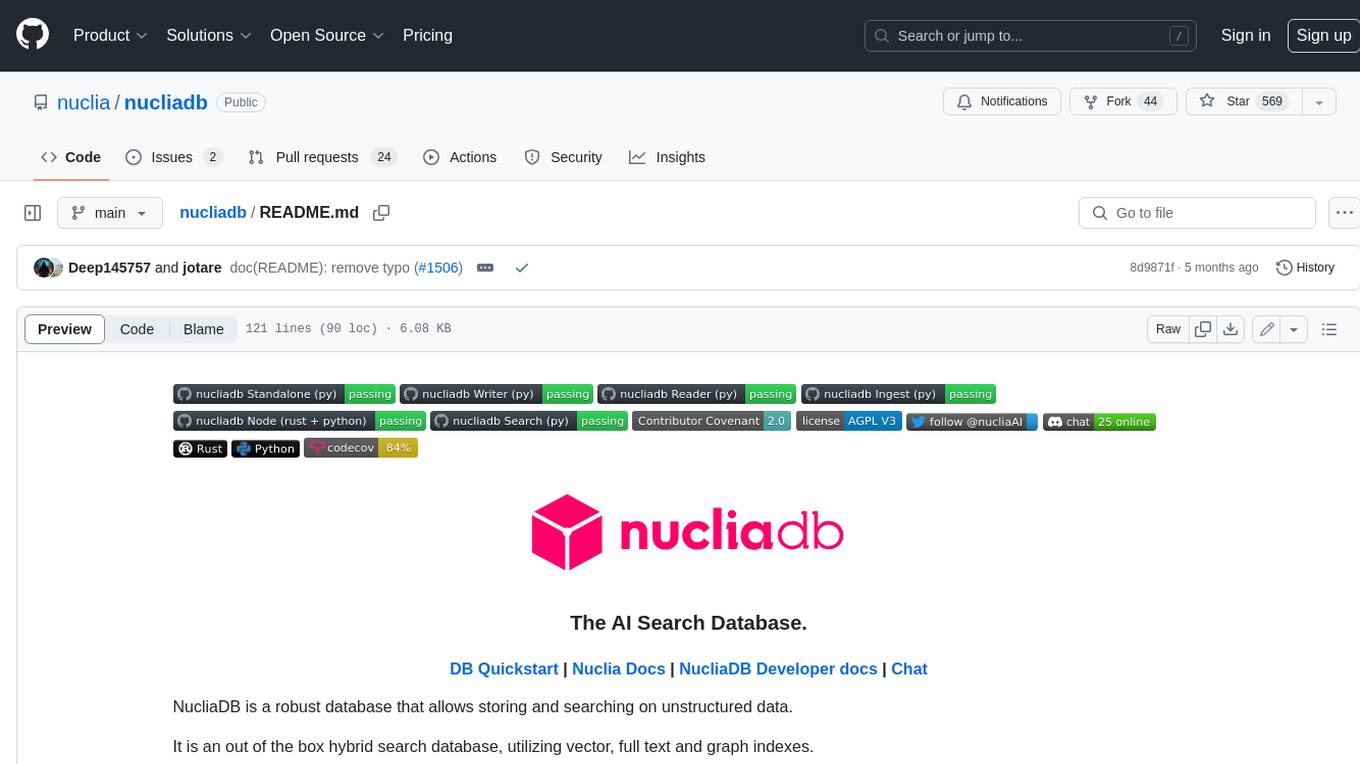

stagehand

The AI Browser Automation Framework

Stars: 17162

Stagehand is an AI web browsing framework that simplifies and extends web automation using three simple APIs: act, extract, and observe. It aims to provide a lightweight, configurable framework without complex abstractions, allowing users to automate web tasks reliably. The tool generates Playwright code based on atomic instructions provided by the user, enabling natural language-driven web automation. Stagehand is open source, maintained by the Browserbase team, and supports different models and model providers for flexibility in automation tasks.

README:

The AI Browser Automation Framework

Read the Docs

If you're looking for the Python implementation, you can find it here

Most existing browser automation tools either require you to write low-level code in a framework like Selenium, Playwright, or Puppeteer, or use high-level agents that can be unpredictable in production. By letting developers choose what to write in code vs. natural language, Stagehand is the natural choice for browser automations in production.

-

Choose when to write code vs. natural language: use AI when you want to navigate unfamiliar pages, and use code (Playwright) when you know exactly what you want to do.

-

Preview and cache actions: Stagehand lets you preview AI actions before running them, and also helps you easily cache repeatable actions to save time and tokens.

-

Computer use models with one line of code: Stagehand lets you integrate SOTA computer use models from OpenAI and Anthropic into the browser with one line of code.

Here's how to build a sample browser automation with Stagehand:

// Use Playwright functions on the page object

const page = stagehand.page;

await page.goto("https://github.com/browserbase");

// Use act() to execute individual actions

await page.act("click on the stagehand repo");

// Use Computer Use agents for larger actions

const agent = stagehand.agent({

provider: "openai",

model: "computer-use-preview",

});

await agent.execute("Get to the latest PR");

// Use extract() to read data from the page

const { author, title } = await page.extract({

instruction: "extract the author and title of the PR",

schema: z.object({

author: z.string().describe("The username of the PR author"),

title: z.string().describe("The title of the PR"),

}),

});Visit docs.stagehand.dev to view the full documentation.

Start with Stagehand with one line of code, or check out our Quickstart Guide for more information:

npx create-browser-appgit clone https://github.com/browserbase/stagehand.git

cd stagehand

pnpm install

pnpm playwright install

pnpm run build

pnpm run example # run the blank script at ./examples/example.ts

pnpm run example 2048 # run the 2048 example at ./examples/2048.ts

pnpm run evals -man # see evaluation suite optionsStagehand is best when you have an API key for an LLM provider and Browserbase credentials. To add these to your project, run:

cp .env.example .env

nano .env # Edit the .env file to add API keys[!NOTE]

We highly value contributions to Stagehand! For questions or support, please join our Slack community.

At a high level, we're focused on improving reliability, speed, and cost in that order of priority. If you're interested in contributing, we strongly recommend reaching out to Miguel Gonzalez or Paul Klein in our Slack community before starting to ensure that your contribution aligns with our goals.

For more information, please see our Contributing Guide.

This project heavily relies on Playwright as a resilient backbone to automate the web. It also would not be possible without the awesome techniques and discoveries made by tarsier, gemini-zod, and fuji-web.

We'd like to thank the following people for their major contributions to Stagehand:

- Paul Klein

- Anirudh Kamath

- Sean McGuire

- Miguel Gonzalez

- Sameel Arif

- Filip Michalsky

- Jeremy Press

- Navid Pour

Licensed under the MIT License.

Copyright 2025 Browserbase, Inc.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for stagehand

Similar Open Source Tools

stagehand

Stagehand is an AI web browsing framework that simplifies and extends web automation using three simple APIs: act, extract, and observe. It aims to provide a lightweight, configurable framework without complex abstractions, allowing users to automate web tasks reliably. The tool generates Playwright code based on atomic instructions provided by the user, enabling natural language-driven web automation. Stagehand is open source, maintained by the Browserbase team, and supports different models and model providers for flexibility in automation tasks.

codebase-context-spec

The Codebase Context Specification (CCS) project aims to standardize embedding contextual information within codebases to enhance understanding for both AI and human developers. It introduces a convention similar to `.env` and `.editorconfig` files but focused on documenting code for both AI and humans. By providing structured contextual metadata, collaborative documentation guidelines, and standardized context files, developers can improve code comprehension, collaboration, and development efficiency. The project includes a linter for validating context files and provides guidelines for using the specification with AI assistants. Tooling recommendations suggest creating memory systems, IDE plugins, AI model integrations, and agents for context creation and utilization. Future directions include integration with existing documentation systems, dynamic context generation, and support for explicit context overriding.

pathway

Pathway is a Python data processing framework for analytics and AI pipelines over data streams. It's the ideal solution for real-time processing use cases like streaming ETL or RAG pipelines for unstructured data. Pathway comes with an **easy-to-use Python API** , allowing you to seamlessly integrate your favorite Python ML libraries. Pathway code is versatile and robust: **you can use it in both development and production environments, handling both batch and streaming data effectively**. The same code can be used for local development, CI/CD tests, running batch jobs, handling stream replays, and processing data streams. Pathway is powered by a **scalable Rust engine** based on Differential Dataflow and performs incremental computation. Your Pathway code, despite being written in Python, is run by the Rust engine, enabling multithreading, multiprocessing, and distributed computations. All the pipeline is kept in memory and can be easily deployed with **Docker and Kubernetes**. You can install Pathway with pip: `pip install -U pathway` For any questions, you will find the community and team behind the project on Discord.

embedchain

Embedchain is an Open Source Framework for personalizing LLM responses. It simplifies the creation and deployment of personalized AI applications by efficiently managing unstructured data, generating relevant embeddings, and storing them in a vector database. With diverse APIs, users can extract contextual information, find precise answers, and engage in interactive chat conversations tailored to their data. The framework follows the design principle of being 'Conventional but Configurable' to cater to both software engineers and machine learning engineers.

edenai-apis

Eden AI aims to simplify the use and deployment of AI technologies by providing a unique API that connects to all the best AI engines. With the rise of **AI as a Service** , a lot of companies provide off-the-shelf trained models that you can access directly through an API. These companies are either the tech giants (Google, Microsoft , Amazon) or other smaller, more specialized companies, and there are hundreds of them. Some of the most known are : DeepL (translation), OpenAI (text and image analysis), AssemblyAI (speech analysis). There are **hundreds of companies** doing that. We're regrouping the best ones **in one place** !

arch

Arch is an intelligent Layer 7 gateway designed to protect, observe, and personalize LLM applications with APIs. It handles tasks like detecting and rejecting jailbreak attempts, calling backend APIs, disaster recovery, and observability. Built on Envoy Proxy, it offers features like function calling, prompt guardrails, traffic management, and standards-based observability. Arch aims to improve the speed, security, and personalization of generative AI applications.

SalesGPT

SalesGPT is an open-source AI agent designed for sales, utilizing context-awareness and LLMs to work across various communication channels like voice, email, and texting. It aims to enhance sales conversations by understanding the stage of the conversation and providing tools like product knowledge base to reduce errors. The agent can autonomously generate payment links, handle objections, and close sales. It also offers features like automated email communication, meeting scheduling, and integration with various LLMs for customization. SalesGPT is optimized for low latency in voice channels and ensures human supervision where necessary. The tool provides enterprise-grade security and supports LangSmith tracing for monitoring and evaluation of intelligent agents built on LLM frameworks.

agent-lightning

Agent Lightning is a lightweight and efficient tool for automating repetitive tasks in the field of data analysis and machine learning. It provides a user-friendly interface to create and manage automated workflows, allowing users to easily schedule and execute data processing, model training, and evaluation tasks. With its intuitive design and powerful features, Agent Lightning streamlines the process of building and deploying machine learning models, making it ideal for data scientists, machine learning engineers, and AI enthusiasts looking to boost their productivity and efficiency in their projects.

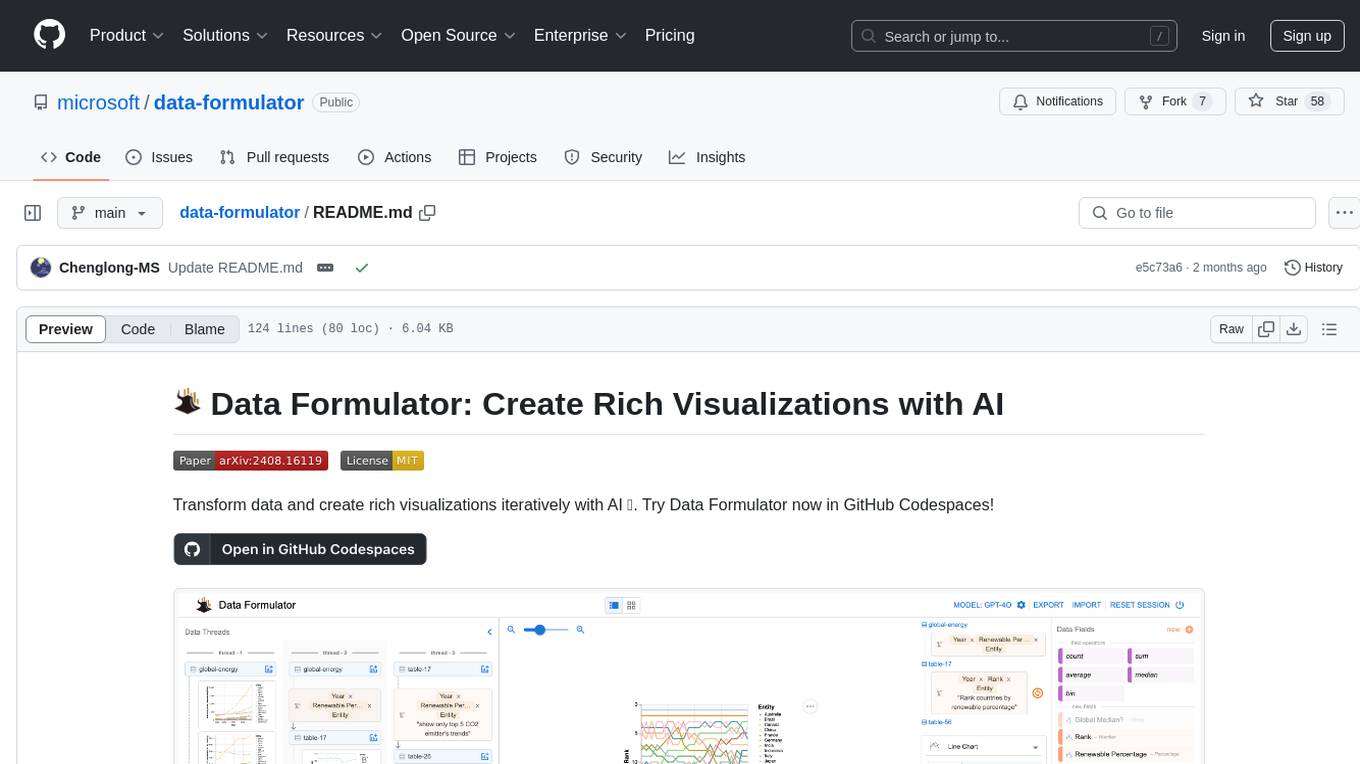

data-formulator

Data Formulator is an AI-powered tool developed by Microsoft Research to help data analysts create rich visualizations iteratively. It combines user interface interactions with natural language inputs to simplify the process of describing chart designs while delegating data transformation to AI. Users can utilize features like blended UI and NL inputs, data threads for history navigation, and code inspection to create impressive visualizations. The tool supports local installation for customization and Codespaces for quick setup. Developers can build new data analysis tools on top of Data Formulator, and research papers are available for further reading.

azure-search-openai-demo

This sample demonstrates a few approaches for creating ChatGPT-like experiences over your own data using the Retrieval Augmented Generation pattern. It uses Azure OpenAI Service to access a GPT model (gpt-35-turbo), and Azure AI Search for data indexing and retrieval. The repo includes sample data so it's ready to try end to end. In this sample application we use a fictitious company called Contoso Electronics, and the experience allows its employees to ask questions about the benefits, internal policies, as well as job descriptions and roles.

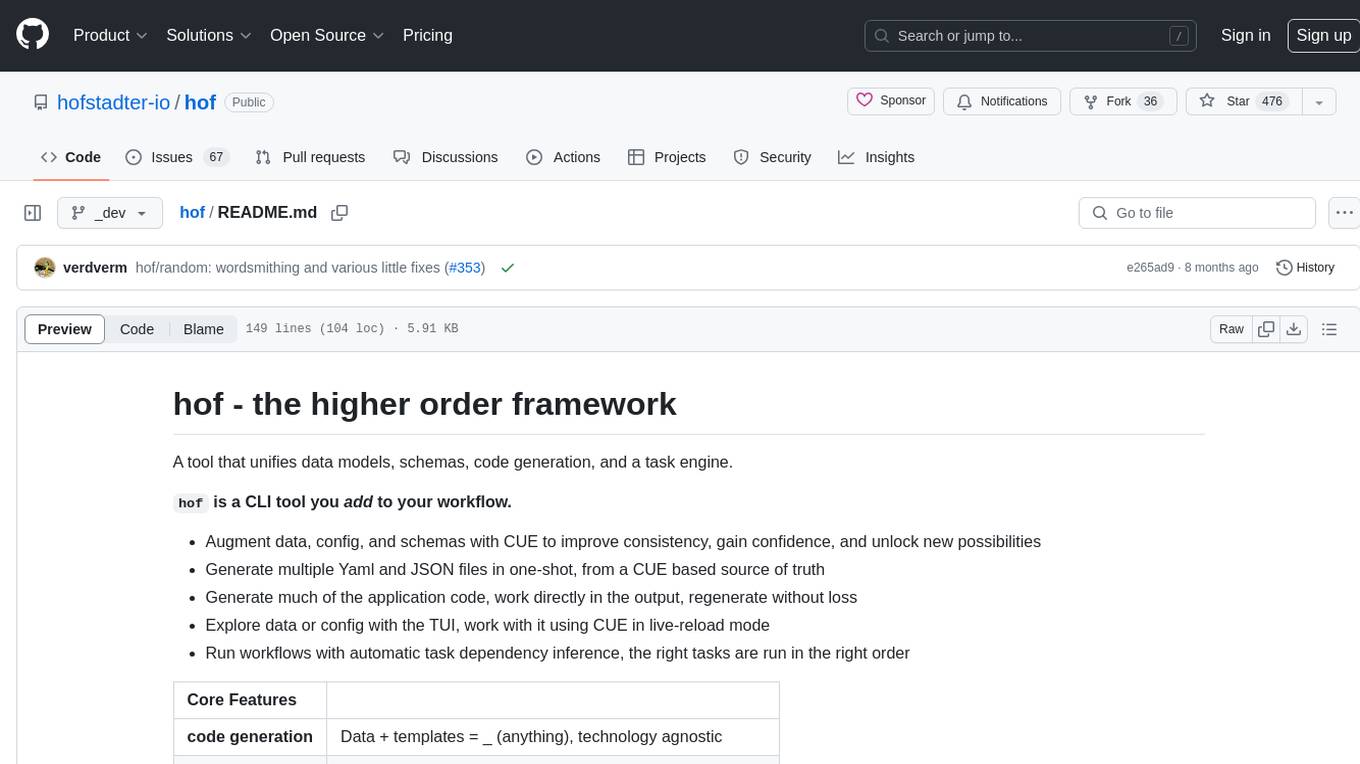

hof

Hof is a CLI tool that unifies data models, schemas, code generation, and a task engine. It allows users to augment data, config, and schemas with CUE to improve consistency, generate multiple Yaml and JSON files, explore data or config with a TUI, and run workflows with automatic task dependency inference. The tool uses CUE to power the DX and implementation, providing a language for specifying schemas, configuration, and writing declarative code. Hof offers core features like code generation, data model management, task engine, CUE cmds, creators, modules, TUI, and chat for better, scalable results.

AntSK

AntSK is an AI knowledge base/agent built with .Net8+Blazor+SemanticKernel. It features a semantic kernel for accurate natural language processing, a memory kernel for continuous learning and knowledge storage, a knowledge base for importing and querying knowledge from various document formats, a text-to-image generator integrated with StableDiffusion, GPTs generation for creating personalized GPT models, API interfaces for integrating AntSK into other applications, an open API plugin system for extending functionality, a .Net plugin system for integrating business functions, real-time information retrieval from the internet, model management for adapting and managing different models from different vendors, support for domestic models and databases for operation in a trusted environment, and planned model fine-tuning based on llamafactory.

Sentient

Sentient is a personal, private, and interactive AI companion developed by Existence. The project aims to build a completely private AI companion that is deeply personalized and context-aware of the user. It utilizes automation and privacy to create a true companion for humans. The tool is designed to remember information about the user and use it to respond to queries and perform various actions. Sentient features a local and private environment, MBTI personality test, integrations with LinkedIn, Reddit, and more, self-managed graph memory, web search capabilities, multi-chat functionality, and auto-updates for the app. The project is built using technologies like ElectronJS, Next.js, TailwindCSS, FastAPI, Neo4j, and various APIs.

LlamaIndexTS

LlamaIndex.TS is a data framework for your LLM application. Use your own data with large language models (LLMs, OpenAI ChatGPT and others) in Typescript and Javascript.

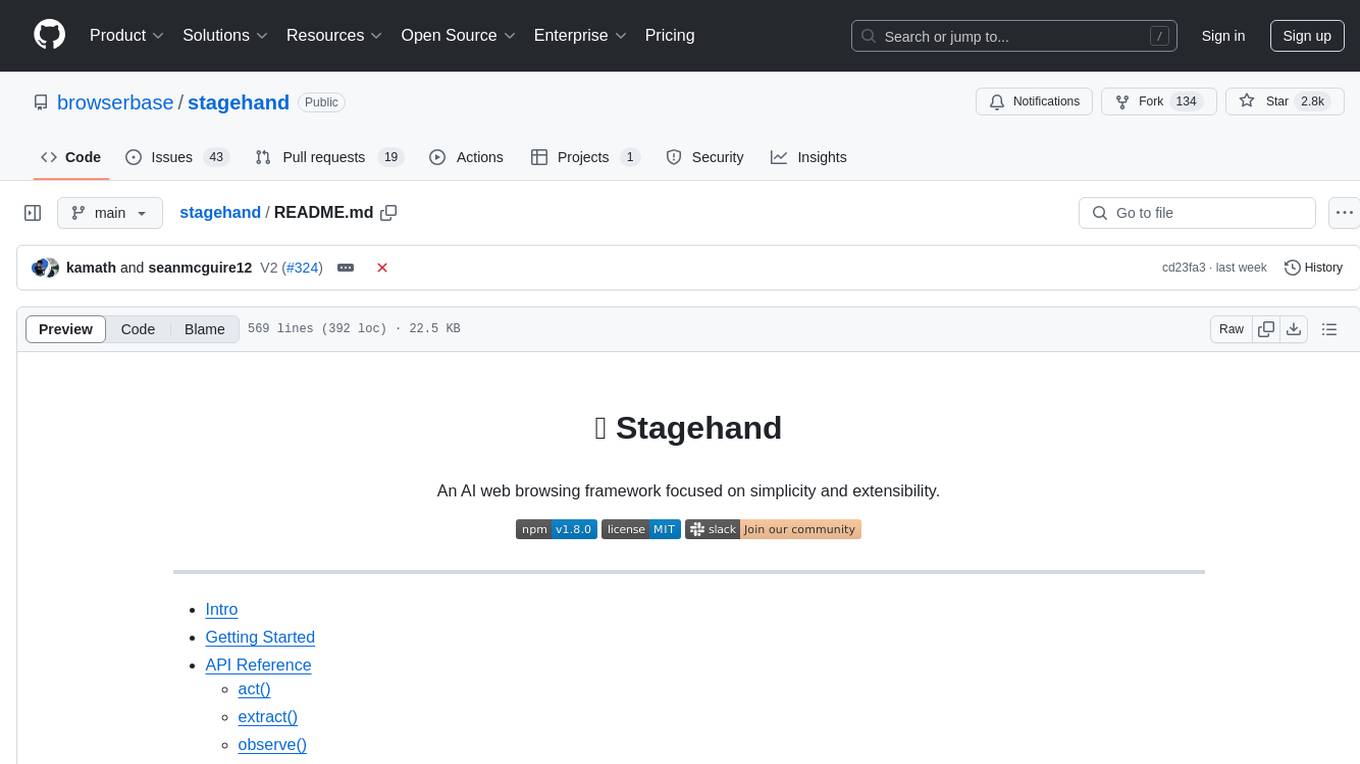

nucliadb

NucliaDB is a robust database that allows storing and searching on unstructured data. It is an out of the box hybrid search database, utilizing vector, full text and graph indexes. NucliaDB is written in Rust and Python. We designed it to index large datasets and provide multi-teanant support. When utilizing NucliaDB with Nuclia cloud, you are able to the power of an NLP database without the hassle of data extraction, enrichment and inference. We do all the hard work for you.

supervisely

Supervisely is a computer vision platform that provides a range of tools and services for developing and deploying computer vision solutions. It includes a data labeling platform, a model training platform, and a marketplace for computer vision apps. Supervisely is used by a variety of organizations, including Fortune 500 companies, research institutions, and government agencies.

For similar tasks

stagehand

Stagehand is an AI web browsing framework that simplifies and extends web automation using three simple APIs: act, extract, and observe. It aims to provide a lightweight, configurable framework without complex abstractions, allowing users to automate web tasks reliably. The tool generates Playwright code based on atomic instructions provided by the user, enabling natural language-driven web automation. Stagehand is open source, maintained by the Browserbase team, and supports different models and model providers for flexibility in automation tasks.

extractor

Extractor is an AI-powered data extraction library for Laravel that leverages OpenAI's capabilities to effortlessly extract structured data from various sources, including images, PDFs, and emails. It features a convenient wrapper around OpenAI Chat and Completion endpoints, supports multiple input formats, includes a flexible Field Extractor for arbitrary data extraction, and integrates with Textract for OCR functionality. Extractor utilizes JSON Mode from the latest GPT-3.5 and GPT-4 models, providing accurate and efficient data extraction.

NeMo-Guardrails

NeMo Guardrails is an open-source toolkit for easily adding _programmable guardrails_ to LLM-based conversational applications. Guardrails (or "rails" for short) are specific ways of controlling the output of a large language model, such as not talking about politics, responding in a particular way to specific user requests, following a predefined dialog path, using a particular language style, extracting structured data, and more.

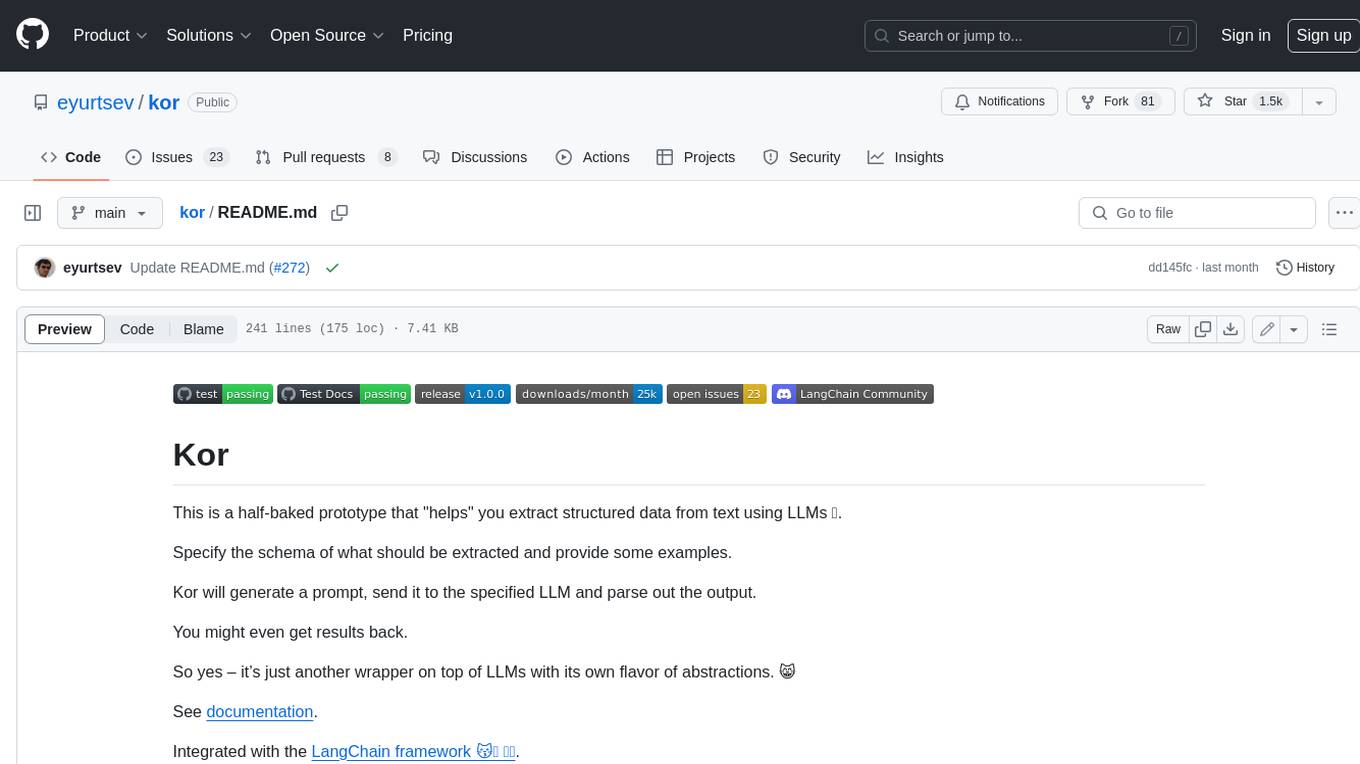

kor

Kor is a prototype tool designed to help users extract structured data from text using Language Models (LLMs). It generates prompts, sends them to specified LLMs, and parses the output. The tool works with the parsing approach and is integrated with the LangChain framework. Kor is compatible with pydantic v2 and v1, and schema is typed checked using pydantic. It is primarily used for extracting information from text based on provided reference examples and schema documentation. Kor is designed to work with all good-enough LLMs regardless of their support for function/tool calling or JSON modes.

awesome-llm-json

This repository is an awesome list dedicated to resources for using Large Language Models (LLMs) to generate JSON or other structured outputs. It includes terminology explanations, hosted and local models, Python libraries, blog articles, videos, Jupyter notebooks, and leaderboards related to LLMs and JSON generation. The repository covers various aspects such as function calling, JSON mode, guided generation, and tool usage with different providers and models.

tensorzero

TensorZero is an open-source platform that helps LLM applications graduate from API wrappers into defensible AI products. It enables a data & learning flywheel for LLMs by unifying inference, observability, optimization, and experimentation. The platform includes a high-performance model gateway, structured schema-based inference, observability, experimentation, and data warehouse for analytics. TensorZero Recipes optimize prompts and models, and the platform supports experimentation features and GitOps orchestration for deployment.

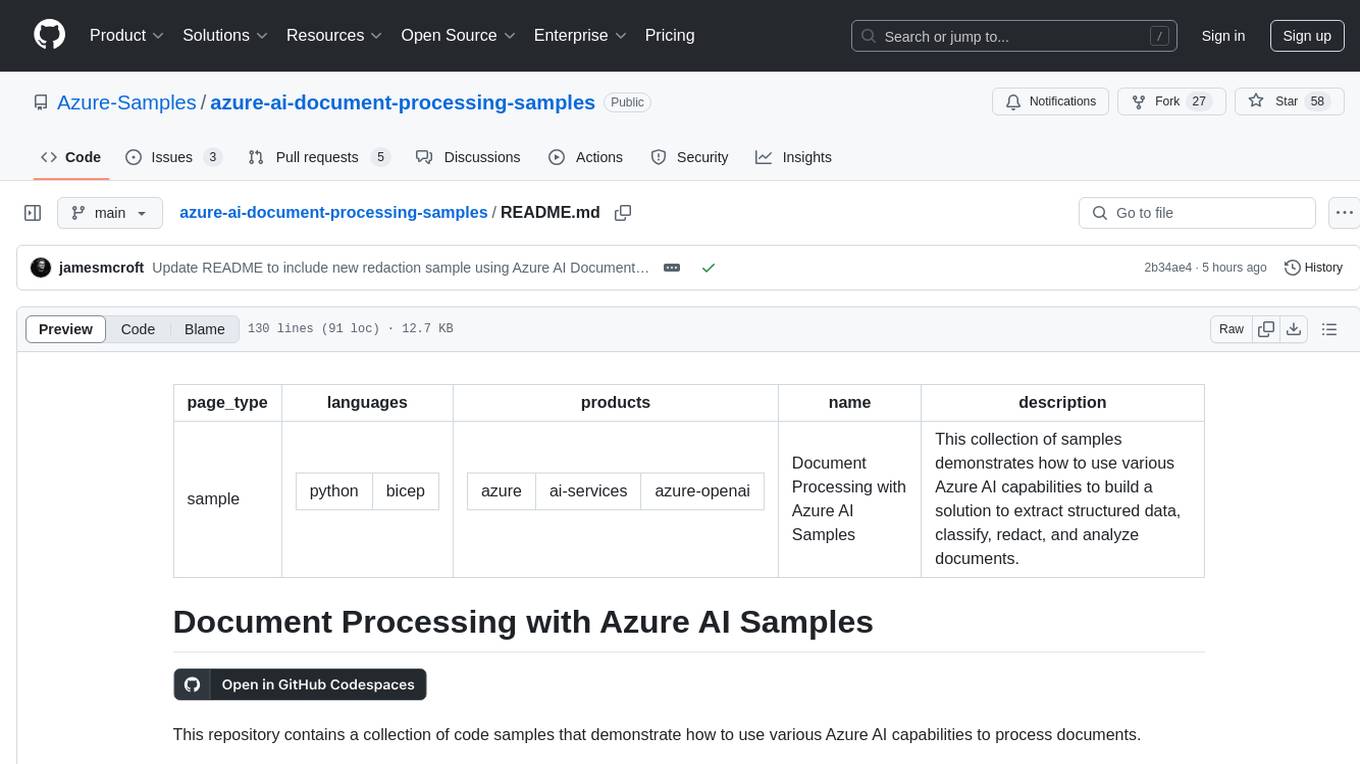

azure-ai-document-processing-samples

This repository contains a collection of code samples that demonstrate how to use various Azure AI capabilities to process documents. The samples help engineering teams establish techniques with Azure AI Foundry, Azure OpenAI, Azure AI Document Intelligence, and Azure AI Language services to build solutions for extracting structured data, classifying, and analyzing documents. The techniques simplify custom model training, improve reliability in document processing, and simplify document processing workflows by providing reusable code and patterns that can be easily modified and evaluated for most use cases.

firecrawl-mcp-server

Firecrawl MCP Server is a Model Context Protocol (MCP) server implementation that integrates with Firecrawl for web scraping capabilities. It supports features like scrape, crawl, search, extract, and batch scrape. It provides web scraping with JS rendering, URL discovery, web search with content extraction, automatic retries with exponential backoff, credit usage monitoring, comprehensive logging system, support for cloud and self-hosted FireCrawl instances, mobile/desktop viewport support, and smart content filtering with tag inclusion/exclusion. The server includes configurable parameters for retry behavior and credit usage monitoring, rate limiting and batch processing capabilities, and tools for scraping, batch scraping, checking batch status, searching, crawling, and extracting structured information from web pages.

For similar jobs

aiscript

AiScript is a lightweight scripting language that runs on JavaScript. It supports arrays, objects, and functions as first-class citizens, and is easy to write without the need for semicolons or commas. AiScript runs in a secure sandbox environment, preventing infinite loops from freezing the host. It also allows for easy provision of variables and functions from the host.

askui

AskUI is a reliable, automated end-to-end automation tool that only depends on what is shown on your screen instead of the technology or platform you are running on.

bots

The 'bots' repository is a collection of guides, tools, and example bots for programming bots to play video games. It provides resources on running bots live, installing the BotLab client, debugging bots, testing bots in simulated environments, and more. The repository also includes example bots for games like EVE Online, Tribal Wars 2, and Elvenar. Users can learn about developing bots for specific games, syntax of the Elm programming language, and tools for memory reading development. Additionally, there are guides on bot programming, contributing to BotLab, and exploring Elm syntax and core library.

ain

Ain is a terminal HTTP API client designed for scripting input and processing output via pipes. It allows flexible organization of APIs using files and folders, supports shell-scripts and executables for common tasks, handles url-encoding, and enables sharing the resulting curl, wget, or httpie command-line. Users can put things that change in environment variables or .env-files, and pipe the API output for further processing. Ain targets users who work with many APIs using a simple file format and uses curl, wget, or httpie to make the actual calls.

LaVague

LaVague is an open-source Large Action Model framework that uses advanced AI techniques to compile natural language instructions into browser automation code. It leverages Selenium or Playwright for browser actions. Users can interact with LaVague through an interactive Gradio interface to automate web interactions. The tool requires an OpenAI API key for default examples and offers a Playwright integration guide. Contributors can help by working on outlined tasks, submitting PRs, and engaging with the community on Discord. The project roadmap is available to track progress, but users should exercise caution when executing LLM-generated code using 'exec'.

robocorp

Robocorp is a platform that allows users to create, deploy, and operate Python automations and AI actions. It provides an easy way to extend the capabilities of AI agents, assistants, and copilots with custom actions written in Python. Users can create and deploy tools, skills, loaders, and plugins that securely connect any AI Assistant platform to their data and applications. The Robocorp Action Server makes Python scripts compatible with ChatGPT and LangChain by automatically creating and exposing an API based on function declaration, type hints, and docstrings. It simplifies the process of developing and deploying AI actions, enabling users to interact with AI frameworks effortlessly.

Open-Interface

Open Interface is a self-driving software that automates computer tasks by sending user requests to a language model backend (e.g., GPT-4V) and simulating keyboard and mouse inputs to execute the steps. It course-corrects by sending current screenshots to the language models. The tool supports MacOS, Linux, and Windows, and requires setting up the OpenAI API key for access to GPT-4V. It can automate tasks like creating meal plans, setting up custom language model backends, and more. Open Interface is currently not efficient in accurate spatial reasoning, tracking itself in tabular contexts, and navigating complex GUI-rich applications. Future improvements aim to enhance the tool's capabilities with better models trained on video walkthroughs. The tool is cost-effective, with user requests priced between $0.05 - $0.20, and offers features like interrupting the app and primary display visibility in multi-monitor setups.

AI-Case-Sorter-CS7.1

AI-Case-Sorter-CS7.1 is a project focused on building a case sorter using machine vision and machine learning AI to sort cases by headstamp. The repository includes Arduino code and 3D models necessary for the project.