shortest

QA via natural language AI tests

Stars: 4425

Shortest is an AI-powered natural language end-to-end testing framework built on Playwright. Users write tests in natural language and Shortest executes them using the Anthropic Claude API. The framework also offers GitHub integration with 2FA support, making it suitable for testing web applications with complex authentication flows. Tests can be run locally or in CI/CD pipelines.

README:

AI-powered natural language end-to-end testing framework.

- Natural language E2E testing framework

- AI-powered test execution using Anthropic Claude API

- Built on Playwright

- GitHub integration with 2FA support

- Email validation with Mailosaur

If helpful, here's a short video!

Use the shortest init command to streamline the setup process in a new or existing project.

npx @antiwork/shortest init

This will:

- Automatically install the @antiwork/shortest package as a dev dependency if it is not already installed

- Create a default shortest.config.ts file with boilerplate configuration

- Generate a .env.local file (unless present) with placeholders for required environment variables, such as ANTHROPIC_API_KEY

- Add .env.local and .shortest/ to .gitignore
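The last step above amounts to an idempotent update of .gitignore. As a rough illustration only (this is not Shortest's implementation, and `ensureGitignoreEntries` is a hypothetical helper name), such an update could be sketched like this:

```typescript
// Hypothetical helper, not part of Shortest's API.
// Returns .gitignore content in which every requested entry is present,
// appending only the entries that are missing.
function ensureGitignoreEntries(content: string, entries: string[]): string {
  const existing = new Set(
    content.split(/\r?\n/).map((line) => line.trim()),
  );
  const missing = entries.filter((entry) => !existing.has(entry));
  if (missing.length === 0) return content;
  // Make sure the appended entries start on their own line.
  const separator = content === "" || content.endsWith("\n") ? "" : "\n";
  return content + separator + missing.join("\n") + "\n";
}
```

Calling it a second time with the same entries returns the content unchanged, which is the property you would want from a setup command that may be re-run in an existing project.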

- Determine your test entry and add your Anthropic API key in the config file:

shortest.config.ts

import type { ShortestConfig } from "@antiwork/shortest";

export default {

headless: false,

baseUrl: "http://localhost:3000",

testPattern: "**/*.test.ts",

ai: {

provider: "anthropic",

},

} satisfies ShortestConfig;

The Anthropic API key defaults to the SHORTEST_ANTHROPIC_API_KEY / ANTHROPIC_API_KEY environment variables. It can be overridden via ai.config.apiKey.
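To make the fallback described above concrete, here is a minimal sketch of one plausible lookup order. It is an assumption, not Shortest's actual code: the `AiSettings` type and the `resolveAnthropicApiKey` helper are hypothetical, and the precedence of SHORTEST_ANTHROPIC_API_KEY over ANTHROPIC_API_KEY is guessed from the order in which the two variables are listed.

```typescript
// Illustrative types only; the real ShortestConfig type lives in @antiwork/shortest.
interface AiSettings {
  provider: "anthropic";
  config?: { apiKey?: string };
}

type EnvVars = Record<string, string | undefined>;

// Hypothetical helper: an explicit ai.config.apiKey wins, then the
// environment variables are consulted (order between them is an assumption).
function resolveAnthropicApiKey(ai: AiSettings, env: EnvVars): string | undefined {
  return (
    ai.config?.apiKey ??
    env.SHORTEST_ANTHROPIC_API_KEY ??
    env.ANTHROPIC_API_KEY
  );
}
```

In a Node.js project the second argument would typically be `process.env`. Keeping the key in an environment variable rather than in shortest.config.ts avoids committing it to version control.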

- Create test files using the pattern specified in the config:

app/login.test.ts

import { shortest } from "@antiwork/shortest";

shortest("Login to the app using email and password", {

username: process.env.GITHUB_USERNAME,

password: process.env.GITHUB_PASSWORD,

});

You can also use callback functions to add additional assertions and other logic. The AI will execute the callback function after the test execution in the browser is completed.

import { shortest } from "@antiwork/shortest";

import { db } from "@/lib/db/drizzle";

import { users } from "@/lib/db/schema";

import { eq } from "drizzle-orm";

shortest("Login to the app using username and password", {

username: process.env.USERNAME,

password: process.env.PASSWORD,

}).after(async ({ page }) => {

// Get current user's clerk ID from the page

const clerkId = await page.evaluate(() => {

return window.localStorage.getItem("clerk-user");

});

if (!clerkId) {

throw new Error("Clerk user ID not found in localStorage");

}

// Query the database

const [user] = await db

.select()

.from(users)

.where(eq(users.clerkId, clerkId))

.limit(1);

expect(user).toBeDefined();

});

You can use lifecycle hooks to run code before and after the test.

import { shortest } from "@antiwork/shortest";

import { clerk, clerkSetup } from "@clerk/testing/playwright";

shortest.beforeAll(async ({ page }) => {

await clerkSetup({

frontendApiUrl:

process.env.PLAYWRIGHT_TEST_BASE_URL ?? "http://localhost:3000",

});

});

shortest.beforeEach(async ({ page }) => {

await clerk.signIn({

page,

signInParams: {

strategy: "email_code",

identifier: "[email protected]",

},

});

});

shortest.afterEach(async ({ page }) => {

await page.close();

});

shortest.afterAll(async ({ page }) => {

await clerk.signOut({ page });

});

Shortest supports flexible test chaining patterns:

// Sequential test chain

shortest([

"user can login with email and password",

"user can modify their account-level refund policy",

]);

// Reusable test flows

const loginAsLawyer = "login as lawyer with valid credentials";

const loginAsContractor = "login as contractor with valid credentials";

const allAppActions = ["send invoice to company", "view invoices"];

// Combine flows with spread operator

shortest([loginAsLawyer, ...allAppActions]);

shortest([loginAsContractor, ...allAppActions]);

Test API endpoints using natural language:

const req = new APIRequest({

baseURL: API_BASE_URI,

});

shortest(

"Ensure the response contains only active users",

req.fetch({

url: "/users",

method: "GET",

params: new URLSearchParams({

active: "true", // URLSearchParams values must be strings

}),

}),

);

Or simply:

shortest(`

Test the API GET endpoint ${API_BASE_URI}/users with query parameter { "active": true }

Expect the response to contain only active users

`);

pnpm shortest # Run all tests

pnpm shortest __tests__/login.test.ts # Run specific test

pnpm shortest --headless # Run in headless mode using CLI

You can find example tests in the examples directory.

You can run Shortest in your CI/CD pipeline by running tests in headless mode. Make sure to add your Anthropic API key to your CI/CD pipeline secrets.
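A missing secret is a common reason for CI runs to fail late. The sketch below shows one way to check for the required variables before invoking the tests. It is a hypothetical preflight script, not part of the Shortest CLI; the helper names are made up, and the variable names are the ones this README mentions.

```typescript
type EnvVars = Record<string, string | undefined>;

// Each inner array is a group of alternatives: at least one must be set.
function findMissingGroups(groups: string[][], env: EnvVars): string[][] {
  const isSet = (name: string): boolean => (env[name] ?? "").trim() !== "";
  return groups.filter((group) => !group.some(isSet));
}

// Hypothetical check; returns a human-readable description of each
// unmet requirement, or an empty array when everything is present.
function checkShortestCiEnv(env: EnvVars, usesGithubAuth = false): string[] {
  const groups: string[][] = [
    ["SHORTEST_ANTHROPIC_API_KEY", "ANTHROPIC_API_KEY"],
  ];
  if (usesGithubAuth) groups.push(["GITHUB_TOTP_SECRET"]);
  return findMissingGroups(groups, env).map((group) => group.join(" or "));
}
```

A CI step could call `checkShortestCiEnv(process.env)` and exit with a non-zero status if the returned array is not empty, so the pipeline fails before any browser is launched.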

Shortest supports login using GitHub 2FA. For GitHub authentication tests:

- Go to your repository settings

- Navigate to "Password and Authentication"

- Click on "Authenticator App"

- Select "Use your authenticator app"

- Click "Setup key" to obtain the OTP secret

- Add the OTP secret to your .env.local file or use the Shortest CLI to add it

- Enter the 2FA code displayed in your terminal into GitHub's Authenticator setup page to complete the process
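For context on what the OTP secret is used for: authenticator-app codes are time-based one-time passwords (TOTP, RFC 6238). The sketch below shows how a base32 setup key turns into a six-digit code, assuming the common defaults of HMAC-SHA-1 and a 30-second step. It is an illustration of the standard algorithm, not Shortest's own implementation, and `base32Decode` and `generateTotp` are hypothetical names.

```typescript
import { createHmac } from "node:crypto";

const BASE32_ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZ234567";

// Decode an RFC 4648 base32 string, ignoring whitespace and padding.
function base32Decode(input: string): Buffer {
  const clean = input.toUpperCase().replace(/[\s=]/g, "");
  const bytes: number[] = [];
  let buffer = 0;
  let bits = 0;
  for (const char of clean) {
    const value = BASE32_ALPHABET.indexOf(char);
    if (value === -1) throw new Error(`Invalid base32 character: ${char}`);
    buffer = (buffer << 5) | value;
    bits += 5;
    if (bits >= 8) {
      bits -= 8;
      bytes.push((buffer >> bits) & 0xff);
      buffer &= (1 << bits) - 1; // keep only the bits not yet emitted
    }
  }
  return Buffer.from(bytes);
}

// Compute a TOTP code for the given Unix time (in seconds).
function generateTotp(
  secret: string,
  unixSeconds: number,
  digits = 6,
  stepSeconds = 30,
): string {
  const counter = Math.floor(unixSeconds / stepSeconds);
  // The counter is hashed as an 8-byte big-endian integer.
  const message = Buffer.alloc(8);
  message.writeUInt32BE(Math.floor(counter / 0x100000000), 0);
  message.writeUInt32BE(counter >>> 0, 4);
  const digest = createHmac("sha1", base32Decode(secret)).update(message).digest();
  // Dynamic truncation: the low nibble of the last byte selects a 4-byte window.
  const offset = digest.readUInt8(digest.length - 1) & 0x0f;
  const binary = digest.readUInt32BE(offset) & 0x7fffffff;
  return (binary % 10 ** digits).toString().padStart(digits, "0");
}
```

With a real secret you would call `generateTotp(secret, Date.now() / 1000)`. Because the code depends on the current time, the machine generating it needs a reasonably accurate clock.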

shortest --github-code --secret=<OTP_SECRET>

Required in .env.local:

ANTHROPIC_API_KEY=your_api_key

GITHUB_TOTP_SECRET=your_secret # Only for GitHub auth tests

The NPM package is located in packages/shortest/. See the CONTRIBUTING guide.

This guide will help you set up the Shortest web app for local development.

- React >=19.0.0 (if using with Next.js 14+ or Server Actions)

- Next.js >=14.0.0 (if using Server Components/Actions)

[!WARNING] Using this package with React 18 in Next.js 14+ projects may cause type conflicts with Server Actions and useFormStatus. If you encounter type errors with form actions or React hooks, ensure you're using React 19.

- Clone the repository:

git clone https://github.com/anti-work/shortest.git

cd shortest

- Install dependencies:

npm install -g pnpm

pnpm install

Pull Vercel env vars:

pnpm i -g vercel

vercel link

vercel env pull

- Run pnpm run setup to configure the environment variables.

- The setup wizard will ask you for information. Refer to the "Services Configuration" section below for more details.

pnpm drizzle-kit generate

pnpm db:migrate

pnpm db:seed # creates stripe products, currently unused

You'll need to set up the following services for local development. If you're not an Anti-Work Vercel team member, you'll need to either run the setup wizard (pnpm run setup) or manually configure each of these services and add the corresponding environment variables to your .env.local file:

Clerk

- Go to clerk.com and create a new app.

- Name it whatever you like and disable all login methods except GitHub.

- Once created, copy the environment variables to your .env.local file.

- In the Clerk dashboard, disable the "Require the same device and browser" setting to ensure tests with Mailosaur work properly.

Vercel Postgres

- Go to your dashboard at vercel.com.

- Navigate to the Storage tab and click the Create Database button.

- Choose Postgres from the Browse Storage menu.

- Copy your environment variables from the Quickstart .env.local tab.

Anthropic

- Go to your dashboard at anthropic.com and grab your API Key.

Stripe

- Go to your Developers dashboard at stripe.com.

- Turn on Test mode.

- Go to the API Keys tab and copy your Secret key.

- Go to the terminal of your project and type pnpm run stripe:webhooks. It will prompt you to log in with a code, then give you your STRIPE_WEBHOOK_SECRET.

GitHub OAuth

- Create a GitHub OAuth App:

  - Go to your GitHub account settings.

  - Navigate to Developer settings > OAuth Apps > New OAuth App.

  - Fill in the application details:

- Configure Clerk with GitHub OAuth:

Mailosaur

- Sign up for an account with Mailosaur.

- Create a new Inbox/Server.

- Go to API Keys and create a standard key.

- Update the environment variables:

  - MAILOSAUR_API_KEY: Your API key

  - MAILOSAUR_SERVER_ID: Your server ID

The email used to test the login flow will have the format shortest@<MAILOSAUR_SERVER_ID>.mailosaur.net, where MAILOSAUR_SERVER_ID is your server ID.

Make sure to add the email as a new user under the Clerk app.
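If you need this address in a script or test, it can be derived from the server ID rather than hard-coded. A small sketch, using the address format stated above; `mailosaurTestEmail` is a hypothetical helper, not something exported by Shortest or Mailosaur:

```typescript
// Hypothetical helper: builds the login test address from a Mailosaur server ID.
function mailosaurTestEmail(serverId: string, localPart = "shortest"): string {
  const id = serverId.trim();
  if (id === "") {
    throw new Error("MAILOSAUR_SERVER_ID is not set");
  }
  return `${localPart}@${id}.mailosaur.net`;
}
```

In practice the argument would come from the environment, for example `mailosaurTestEmail(process.env.MAILOSAUR_SERVER_ID ?? "")`, so a missing variable fails loudly instead of producing a malformed address.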

Run the development server:

pnpm dev

Open http://localhost:3000 in your browser to see the app in action.

Alternative AI tools for shortest

Similar Open Source Tools

mcpdoc

The MCP LLMS-TXT Documentation Server is an open-source server that provides developers full control over tools used by applications like Cursor, Windsurf, and Claude Code/Desktop. It allows users to create a user-defined list of `llms.txt` files and use a `fetch_docs` tool to read URLs within these files, enabling auditing of tool calls and context returned. The server supports various applications and provides a way to connect to them, configure rules, and test tool calls for tasks related to documentation retrieval and processing.

python-tgpt

Python-tgpt is a Python package that enables seamless interaction with over 45 free LLM providers without requiring an API key. It also provides image generation capabilities. The name _python-tgpt_ draws inspiration from its parent project tgpt, which operates on Golang. Through this Python adaptation, users can effortlessly engage with a number of free LLMs available, fostering a smoother AI interaction experience.

deepgram-js-sdk

Deepgram JavaScript SDK. Power your apps with world-class speech and Language AI models.

well-architected-iac-analyzer

Well-Architected Infrastructure as Code (IaC) Analyzer is a project demonstrating how generative AI can evaluate infrastructure code for alignment with best practices. It features a modern web application allowing users to upload IaC documents, complete IaC projects, or architecture diagrams for assessment. The tool provides insights into infrastructure code alignment with AWS best practices, offers suggestions for improving cloud architecture designs, and can generate IaC templates from architecture diagrams. Users can analyze CloudFormation, Terraform, or AWS CDK templates, architecture diagrams in PNG or JPEG format, and complete IaC projects with supporting documents. Real-time analysis against Well-Architected best practices, integration with AWS Well-Architected Tool, and export of analysis results and recommendations are included.

js-genai

The Google Gen AI JavaScript SDK is an experimental SDK for TypeScript and JavaScript developers to build applications powered by Gemini. It supports both the Gemini Developer API and Vertex AI. The SDK is designed to work with Gemini 2.0 features. Users can access API features through the GoogleGenAI classes, which provide submodules for querying models, managing caches, creating chats, uploading files, and starting live sessions. The SDK also allows for function calling to interact with external systems. Users can find more samples in the GitHub samples directory.

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

Gemini-API

Gemini-API is a reverse-engineered asynchronous Python wrapper for Google Gemini web app (formerly Bard). It provides features like persistent cookies, ImageFx support, extension support, classified outputs, official flavor, and asynchronous operation. The tool allows users to generate contents from text or images, have conversations across multiple turns, retrieve images in response, generate images with ImageFx, save images to local files, use Gemini extensions, check and switch reply candidates, and control log level.

openai

An open-source client package that allows developers to easily integrate the power of OpenAI's state-of-the-art AI models into their Dart/Flutter applications. The library provides simple and intuitive methods for making requests to OpenAI's various APIs, including the GPT-3 language model, DALL-E image generation, and more. It is designed to be lightweight and easy to use, enabling developers to focus on building their applications without worrying about the complexities of dealing with HTTP requests. Note that this is an unofficial library as OpenAI does not have an official Dart library.

pentagi

PentAGI is an innovative tool for automated security testing that leverages cutting-edge artificial intelligence technologies. It is designed for information security professionals, researchers, and enthusiasts who need a powerful and flexible solution for conducting penetration tests. The tool provides secure and isolated operations in a sandboxed Docker environment, fully autonomous AI-powered agent for penetration testing steps, a suite of 20+ professional security tools, smart memory system for storing research results, web intelligence for gathering information, integration with external search systems, team delegation system, comprehensive monitoring and reporting, modern interface, API integration, persistent storage, scalable architecture, self-hosted solution, flexible authentication, and quick deployment through Docker Compose.

agenticSeek

AgenticSeek is a voice-enabled AI assistant powered by DeepSeek R1 agents, offering a fully local alternative to cloud-based AI services. It allows users to interact with their filesystem, code in multiple languages, and perform various tasks autonomously. The tool is equipped with memory to remember user preferences and past conversations, and it can divide tasks among multiple agents for efficient execution. AgenticSeek prioritizes privacy by running entirely on the user's hardware without sending data to the cloud.

ppt2desc

ppt2desc is a command-line tool that converts PowerPoint presentations into detailed textual descriptions using vision language models. It interprets and describes visual elements, capturing the full semantic meaning of each slide in a machine-readable format. The tool supports various model providers and offers features like converting PPT/PPTX files to semantic descriptions, processing individual files or directories, visual elements interpretation, rate limiting for API calls, customizable prompts, and JSON output format for easy integration.

cursor-tools

cursor-tools is a CLI tool designed to enhance AI agents with advanced skills, such as web search, repository context, documentation generation, GitHub integration, Xcode tools, and browser automation. It provides features like Perplexity for web search, Gemini 2.0 for codebase context, and Stagehand for browser operations. The tool requires API keys for Perplexity AI and Google Gemini, and supports global installation for system-wide access. It offers various commands for different tasks and integrates with Cursor Composer for AI agent usage.

gemini-srt-translator

Gemini SRT Translator is a tool that utilizes Google Generative AI to provide accurate and efficient translations for subtitle files. Users can customize translation settings, such as model name and batch size, and list available models from the Gemini API. The tool requires a free API key from Google AI Studio for setup and offers features like translating subtitles to a specified target language and resuming partial translations. Users can further customize translation settings with optional parameters like gemini_api_key2, output_file, start_line, model_name, batch_size, and more.

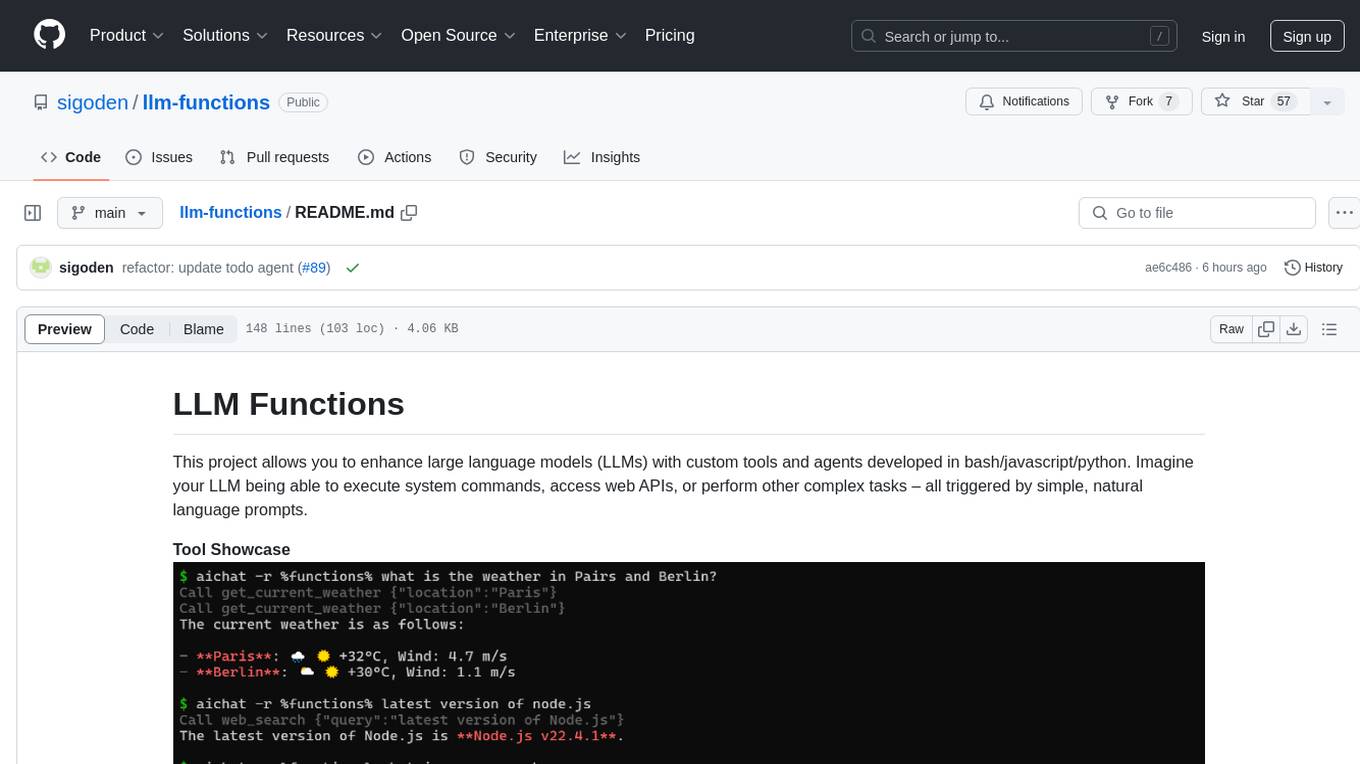

llm-functions

LLM Functions is a project that enables the enhancement of large language models (LLMs) with custom tools and agents developed in bash, javascript, and python. Users can create tools for their LLM to execute system commands, access web APIs, or perform other complex tasks triggered by natural language prompts. The project provides a framework for building tools and agents, with tools being functions written in the user's preferred language and automatically generating JSON declarations based on comments. Agents combine prompts, function callings, and knowledge (RAG) to create conversational AI agents. The project is designed to be user-friendly and allows users to easily extend the capabilities of their language models.

MCPSharp

MCPSharp is a .NET library that helps build Model Context Protocol (MCP) servers and clients for AI assistants and models. It allows creating MCP-compliant tools, connecting to existing MCP servers, exposing .NET methods as MCP endpoints, and handling MCP protocol details seamlessly. With features like attribute-based API, JSON-RPC support, parameter validation, and type conversion, MCPSharp simplifies the development of AI capabilities in applications through standardized interfaces.

For similar tasks

LLMstudio

LLMstudio by TensorOps is a platform that offers prompt engineering tools for accessing models from providers like OpenAI, VertexAI, and Bedrock. It provides features such as Python Client Gateway, Prompt Editing UI, History Management, and Context Limit Adaptability. Users can track past runs, log costs and latency, and export history to CSV. The tool also supports automatic switching to larger-context models when needed. Coming soon features include side-by-side comparison of LLMs, automated testing, API key administration, project organization, and resilience against rate limits. LLMstudio aims to streamline prompt engineering, provide execution history tracking, and enable effortless data export, offering an evolving environment for teams to experiment with advanced language models.

kaizen

Kaizen is an open-source project that helps teams ensure quality in their software delivery by providing a suite of tools for code review, test generation, and end-to-end testing. It integrates with your existing code repositories and workflows, allowing you to streamline your software development process. Kaizen generates comprehensive end-to-end tests, provides UI testing and review, and automates code review with insightful feedback. The file structure includes components for API server, logic, actors, generators, LLM integrations, documentation, and sample code. Getting started involves installing the Kaizen package, generating tests for websites, and executing tests. The tool also runs an API server for GitHub App actions. Contributions are welcome under the AGPL License.

flux-fine-tuner

This is a Cog training model that creates LoRA-based fine-tunes for the FLUX.1 family of image generation models. It includes features such as automatic image captioning during training, image generation using LoRA, uploading fine-tuned weights to Hugging Face, automated test suite for continuous deployment, and Weights and biases integration. The tool is designed for users to fine-tune Flux models on Replicate for image generation tasks.

lmstudio-python

The LM Studio Python SDK provides a convenient API for interacting with an LM Studio instance, including text completion and chat response functionality. The SDK allows users to manage websocket connections and chat history easily. It also offers tools for code consistency checks, automated testing, and expanding the API.

mastering-github-copilot-for-dotnet-csharp-developers

Enhance coding efficiency with expert-led GitHub Copilot course for C#/.NET developers. Learn to integrate AI-powered coding assistance, automate testing, and boost collaboration using Visual Studio Code and Copilot Chat. From autocompletion to unit testing, cover essential techniques for cleaner, faster, smarter code.

agentql

AgentQL is a suite of tools for extracting data and automating workflows on live web sites featuring an AI-powered query language, Python and JavaScript SDKs, a browser-based debugger, and a REST API endpoint. It uses natural language queries to pinpoint data and elements on any web page, including authenticated and dynamically generated content. Users can define structured data output and apply transforms within queries. AgentQL's natural language selectors find elements intuitively based on the content of the web page and work across similar web sites, self-healing as UI changes over time.

c4-genai-suite

C4-GenAI-Suite is a comprehensive AI tool for generating code snippets and automating software development tasks. It leverages advanced machine learning models to assist developers in writing efficient and error-free code. The suite includes features such as code completion, refactoring suggestions, and automated testing, making it a valuable asset for enhancing productivity and code quality in software development projects.

For similar jobs

aiscript

AiScript is a lightweight scripting language that runs on JavaScript. It supports arrays, objects, and functions as first-class citizens, and is easy to write without the need for semicolons or commas. AiScript runs in a secure sandbox environment, preventing infinite loops from freezing the host. It also allows for easy provision of variables and functions from the host.

askui

AskUI is a reliable, automated end-to-end automation tool that only depends on what is shown on your screen instead of the technology or platform you are running on.

bots

The 'bots' repository is a collection of guides, tools, and example bots for programming bots to play video games. It provides resources on running bots live, installing the BotLab client, debugging bots, testing bots in simulated environments, and more. The repository also includes example bots for games like EVE Online, Tribal Wars 2, and Elvenar. Users can learn about developing bots for specific games, syntax of the Elm programming language, and tools for memory reading development. Additionally, there are guides on bot programming, contributing to BotLab, and exploring Elm syntax and core library.

ain

Ain is a terminal HTTP API client designed for scripting input and processing output via pipes. It allows flexible organization of APIs using files and folders, supports shell-scripts and executables for common tasks, handles url-encoding, and enables sharing the resulting curl, wget, or httpie command-line. Users can put things that change in environment variables or .env-files, and pipe the API output for further processing. Ain targets users who work with many APIs using a simple file format and uses curl, wget, or httpie to make the actual calls.

LaVague

LaVague is an open-source Large Action Model framework that uses advanced AI techniques to compile natural language instructions into browser automation code. It leverages Selenium or Playwright for browser actions. Users can interact with LaVague through an interactive Gradio interface to automate web interactions. The tool requires an OpenAI API key for default examples and offers a Playwright integration guide. Contributors can help by working on outlined tasks, submitting PRs, and engaging with the community on Discord. The project roadmap is available to track progress, but users should exercise caution when executing LLM-generated code using 'exec'.

robocorp

Robocorp is a platform that allows users to create, deploy, and operate Python automations and AI actions. It provides an easy way to extend the capabilities of AI agents, assistants, and copilots with custom actions written in Python. Users can create and deploy tools, skills, loaders, and plugins that securely connect any AI Assistant platform to their data and applications. The Robocorp Action Server makes Python scripts compatible with ChatGPT and LangChain by automatically creating and exposing an API based on function declaration, type hints, and docstrings. It simplifies the process of developing and deploying AI actions, enabling users to interact with AI frameworks effortlessly.

Open-Interface

Open Interface is a self-driving software that automates computer tasks by sending user requests to a language model backend (e.g., GPT-4V) and simulating keyboard and mouse inputs to execute the steps. It course-corrects by sending current screenshots to the language models. The tool supports MacOS, Linux, and Windows, and requires setting up the OpenAI API key for access to GPT-4V. It can automate tasks like creating meal plans, setting up custom language model backends, and more. Open Interface is currently not efficient in accurate spatial reasoning, tracking itself in tabular contexts, and navigating complex GUI-rich applications. Future improvements aim to enhance the tool's capabilities with better models trained on video walkthroughs. The tool is cost-effective, with user requests priced between $0.05 - $0.20, and offers features like interrupting the app and primary display visibility in multi-monitor setups.

AI-Case-Sorter-CS7.1

AI-Case-Sorter-CS7.1 is a project focused on building a case sorter using machine vision and machine learning AI to sort cases by headstamp. The repository includes Arduino code and 3D models necessary for the project.