inspect_evals

Collection of evals for Inspect AI

Stars: 236

Inspect Evals is a repository of community-contributed LLM evaluations for Inspect AI, created in collaboration by the UK AISI, Arcadia Impact, and the Vector Institute. It supports many model providers including OpenAI, Anthropic, Google, Mistral, Azure AI, AWS Bedrock, Together AI, Groq, Hugging Face, vLLM, and Ollama. Users can contribute evaluations, install necessary dependencies, and run evaluations for various models. The repository covers a wide range of evaluation tasks across different domains such as coding, assistants, cybersecurity, safeguards, mathematics, reasoning, knowledge, scheming, multimodal tasks, bias evaluation, personality assessment, and writing tasks.

README:

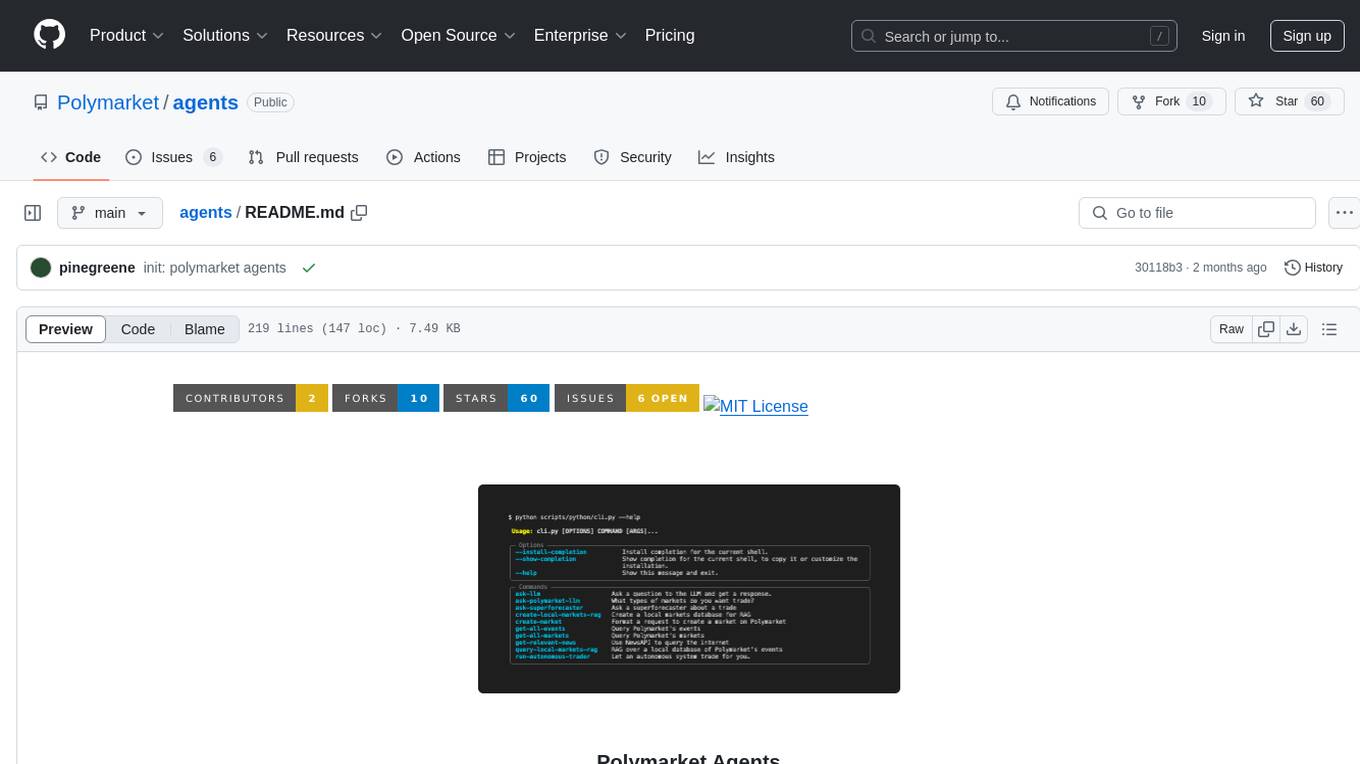

Welcome to Inspect Evals, a repository of community-contributed LLM evaluations for Inspect AI. Inspect Evals was created in collaboration by the UK AISI, Arcadia Impact, and the Vector Institute.

Community contributions are welcome and encouraged! Please see the Contributor Guide for details on submitting new evaluations.

First, install the necessary dependencies with:

uv sync

Some of the evaluations require additional dependencies. If your eval needs extra dependencies, instructions for installing them are provided in the list of evals subsection (or the README for that evaluation). For example, to install the dependencies of the SWE-Bench evaluation you should run:

uv sync --extra swe_bench

Once you have a model configured, you can run evaluations for it with:

uv run inspect eval inspect_evals/gpqa_diamond --model openai/gpt-4o

If you don't want to specify the --model each time you run an evaluation, create a .env configuration file in your working directory that defines the INSPECT_EVAL_MODEL environment variable along with your API key. For example:

INSPECT_EVAL_MODEL=openai/gpt-4o
OPENAI_API_KEY=<openai-api-key>

Inspect supports many model providers including OpenAI, Anthropic, Google, Mistral, Azure AI, AWS Bedrock, Together AI, Groq, Hugging Face, vLLM, Ollama, and more. See the Model Providers documentation for additional details.
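As a quick sanity check on the .env approach described above, the following sketch reads a value back out of a KEY=value file. The `env_value` helper and the temporary directory are hypothetical and exist only for illustration; Inspect reads the .env file itself, so nothing like this is required to run an evaluation.

```shell
# env_value FILE KEY: print the value assigned to KEY in a KEY=value file.
# Hypothetical helper for illustration; not part of Inspect or Inspect Evals.
env_value() {
  # Keep the last assignment of KEY and drop everything up to the first '='.
  grep -E "^$2=" "$1" | tail -n 1 | cut -d= -f2-
}

# Write an example .env into a temporary directory (not your working directory).
tmpdir=$(mktemp -d)
printf 'INSPECT_EVAL_MODEL=openai/gpt-4o\nOPENAI_API_KEY=<openai-api-key>\n' > "$tmpdir/.env"

model=$(env_value "$tmpdir/.env" INSPECT_EVAL_MODEL)
echo "Default model: $model"   # prints "Default model: openai/gpt-4o"

# Clean up the temporary file.
rm -rf "$tmpdir"
```

With a real .env in the working directory, the same check can be pointed at that file to confirm the variable is spelled as expected before launching a long run.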

You might also be able to use a newer version of pip (25.1+) to install the project via `pip install --group dev .` or `pip install --group dev '.[swe_bench]'`. However, this is not officially supported.

For details on building the documentation, see the documentation guide.

For information on running tests and CI toggles, see the Technical Contribution Guide in CONTRIBUTING.md.

We recommend having at least 35 GB of free disk space for Inspect Evals: the full installation takes about 10 GB and you'll also need some space for the uv cache and the datasets cache (most datasets are small, but some, such as MMIU, take 13 GB).

Running some evals (e.g., CyBench, GDM capabilities evals) may require extra space beyond this because they pull Docker images. We recommend having at least 65 GB of extra space for running evals that have Dockerfiles in their file tree (though you might get away with less space) on top of the 35 GB suggestion above.

In total, you should be comfortable running evals with 100 GB of free space. If you run out of space despite having 100+ GB of free space available, please let us know, as this might be a bug.

The amount of memory needed for an eval varies significantly with the eval. You'll be able to run most evals with only 0.5 GB of free RAM. However, some evals with larger datasets require 2-3 GB or more. And some evals that use Docker (e.g., some GDM capabilities evals) require up to 32 GB of RAM.
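The disk-space guidance above (35 GB baseline, plus 65 GB for evals that pull Docker images) can be turned into a small pre-flight check. This is only a sketch: the function names are hypothetical, the thresholds are copied from the text, and it assumes a POSIX `df` whose fourth column is the available space in 1 KB blocks.

```shell
# Thresholds taken from the guidance above, in GB.
BASE_GB=35      # installation, uv cache, and datasets cache
DOCKER_GB=65    # extra room for evals that pull Docker images

# required_gb USES_DOCKER: print the suggested free space in GB.
required_gb() {
  if [ "$1" = "yes" ]; then
    echo $((BASE_GB + DOCKER_GB))
  else
    echo "$BASE_GB"
  fi
}

# free_gb DIR: free space on the filesystem holding DIR, in whole GB.
free_gb() {
  df -Pk "$1" | awk 'NR==2 { print int($4 / 1024 / 1024) }'
}

need=$(required_gb yes)
have=$(free_gb .)
if [ "$have" -ge "$need" ]; then
  echo "OK: ${have} GB free, ${need} GB suggested"
else
  echo "Low: ${have} GB free, ${need} GB suggested"
fi
```

Passing `no` to `required_gb` gives the 35 GB baseline for evals that do not use Docker. Memory is not checked here, since the RAM needed varies by eval.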

-

APPS is a dataset for evaluating model performance on Python programming tasks across three difficulty levels: 1,000 introductory, 3,000 interview, and 1,000 competition-level problems. The dataset also includes 5,000 training samples, for a total of 10,000 samples. We evaluate on questions from the test split, which consists of programming problems commonly found in coding interviews. Contributed by: @camtice

uv run inspect eval inspect_evals/apps

-

A benchmark designed to evaluate LLMs as agents. Contributed by: @Felhof, @hannagabor, @shaheenahmedc

uv run inspect eval inspect_evals/agent_bench_os

-

Python coding benchmark with 1,140 diverse questions drawing on numerous Python libraries. Contributed by: @tim-hua-01

uv run inspect eval inspect_evals/bigcodebench

-

Evaluates how well an LLM agent can computationally reproduce the results of a set of scientific papers. Contributed by: @enerrio

uv run inspect eval inspect_evals/core_bench

-

Evaluates LLMs on class-level code generation with 100 tasks constructed over 500 person-hours. The study shows that LLMs perform worse on class-level tasks compared to method-level tasks. Contributed by: @zhenningdavidliu

uv run inspect eval inspect_evals/class_eval

-

Code generation benchmark with a thousand data science problems spanning seven Python libraries. Contributed by: @bienehito

uv run inspect eval inspect_evals/ds1000

-

Assesses how accurately language models can write correct Python functions based solely on natural-language instructions provided as docstrings. Contributed by: @adil-a

uv run inspect eval inspect_evals/humaneval

-

Measures the ability of language models to generate short Python programs from simple natural-language descriptions, testing basic coding proficiency. Contributed by: @jddantes

uv run inspect eval inspect_evals/mbpp

-

Machine learning tasks drawn from 75 Kaggle competitions. Contributed by: @samm393

uv run inspect eval inspect_evals/mle_bench
uv run inspect eval inspect_evals/mle_bench_full
uv run inspect eval inspect_evals/mle_bench_lite

-

Evaluates AI's ability to resolve genuine software engineering issues sourced from 12 popular Python GitHub repositories, reflecting realistic coding and debugging scenarios. Contributed by: @max-kaufmann

uv run inspect eval inspect_evals/swe_bench
uv run inspect eval inspect_evals/swe_bench_verified_mini

-

SciCode tests the ability of language models to generate code to solve scientific research problems. It assesses models on 65 problems from mathematics, physics, chemistry, biology, and materials science. Contributed by: @xantheocracy

uv run inspect eval inspect_evals/scicode

-

Evaluates language model performance on difficult Olympiad programming problems across four difficulty levels. Contributed by: @danwilhelm

uv run inspect eval inspect_evals/usaco

-

Tests whether AI agents can perform real-world time-consuming tasks on the web. Contributed by: @nlpet, @caspardh

uv run inspect eval inspect_evals/assistant_bench_closed_book_zero_shot
uv run inspect eval inspect_evals/assistant_bench_closed_book_one_shot
uv run inspect eval inspect_evals/assistant_bench_web_search_zero_shot
uv run inspect eval inspect_evals/assistant_bench_web_search_one_shot
uv run inspect eval inspect_evals/assistant_bench_web_browser

-

A benchmark for evaluating agents' ability to browse the web. The dataset consists of challenging questions that generally require web-access to answer correctly. Contributed by: @AnselmC

uv run inspect eval inspect_evals/browse_comp

-

Proposes real-world questions that require a set of fundamental abilities such as reasoning, multi-modality handling, web browsing, and general tool-use proficiency. GAIA questions are conceptually simple for humans yet challenging for most advanced AIs. Contributed by: @max-kaufmann

uv run inspect eval inspect_evals/gaia
uv run inspect eval inspect_evals/gaia_level1
uv run inspect eval inspect_evals/gaia_level2
uv run inspect eval inspect_evals/gaia_level3

-

A dataset for developing and evaluating generalist agents for the web that can follow language instructions to complete complex tasks on any website. Contributed by: @dr3s

uv run inspect eval inspect_evals/mind2web

-

Tests AI agents' ability to perform realistic, open-ended tasks within simulated computer environments, requiring complex interaction across multiple input modalities. Contributed by: @epatey

uv run inspect eval inspect_evals/osworld
uv run inspect eval inspect_evals/osworld_small

-

Evaluate sycophancy of language models across a variety of free-form text-generation tasks. Contributed by: @alexdzm

uv run inspect eval inspect_evals/sycophancy

-

CYBERSECEVAL 3: Advancing the Evaluation of Cybersecurity Risks and Capabilities in Large Language Models

Evaluates Large Language Models for cybersecurity risk to third parties, application developers and end users. Contributed by: @onionymous

uv run inspect eval inspect_evals/cyse3_visual_prompt_injection

-

Catastrophic Cyber Capabilities Benchmark (3CB): Robustly Evaluating LLM Agent Cyber Offense Capabilities

A benchmark for evaluating the capabilities of LLM agents in cyber offense. Contributed by: @derpyplops, @ralphptorres, @Scott-Simmons

uv run inspect eval inspect_evals/threecb

-

Tests language models on cybersecurity skills using 40 practical, professional-level challenges taken from cybersecurity competitions, designed to cover various difficulty levels and security concepts. Contributed by: @sinman-aisi, @sam-deverett-dsit, @kola-aisi, @pgiav

uv run inspect eval inspect_evals/cybench

-

CyberMetric: A Benchmark Dataset based on Retrieval-Augmented Generation for Evaluating LLMs in Cybersecurity Knowledge

Datasets containing 80, 500, 2,000, and 10,000 multiple-choice questions, designed to evaluate understanding across nine domains within cybersecurity. Contributed by: @neilshaabi

uv run inspect eval inspect_evals/cybermetric_80
uv run inspect eval inspect_evals/cybermetric_500
uv run inspect eval inspect_evals/cybermetric_2000
uv run inspect eval inspect_evals/cybermetric_10000
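Because the four CyberMetric task names differ only in the dataset-size suffix, they lend themselves to a loop. The sketch below is a dry run that prints each command instead of executing it; the `cybermetric_cmd` helper is hypothetical, and the model name is simply the one used in the earlier example.

```shell
# cybermetric_cmd SIZE MODEL: print (do not run) the command for one variant.
# Hypothetical helper for illustration only.
cybermetric_cmd() {
  echo "uv run inspect eval inspect_evals/cybermetric_$1 --model $2"
}

# Dry run: list the command for each dataset size named above.
for size in 80 500 2000 10000; do
  cybermetric_cmd "$size" openai/gpt-4o
done
```

To actually launch the runs, the printed lines can be reviewed and then executed one at a time. The same pattern applies to other task families in this list whose names share a prefix.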

-

Assesses language models for cybersecurity risks, specifically testing their potential to misuse programming interpreters, vulnerability to malicious prompt injections, and capability to exploit known software vulnerabilities. Contributed by: @its-emile

uv run inspect eval inspect_evals/cyse2_interpreter_abuse
uv run inspect eval inspect_evals/cyse2_prompt_injection
uv run inspect eval inspect_evals/cyse2_vulnerability_exploit

-

CTF challenges covering web app vulnerabilities, off-the-shelf exploits, databases, Linux privilege escalation, password cracking and spraying. Demonstrates tool use and sandboxing untrusted model code. Contributed by: @XkunW

uv run inspect eval inspect_evals/gdm_in_house_ctf

-

Tests AI's ability in coding, cryptography, reverse engineering, and vulnerability identification through practical capture-the-flag (CTF) cybersecurity scenarios. Contributed by: @jjallaire

uv run inspect eval inspect_evals/gdm_intercode_ctf

-

SEvenLLM: A benchmark to elicit, and improve cybersecurity incident analysis and response abilities in LLMs for Security Events.

Designed for analyzing cybersecurity incidents, the benchmark comprises two primary task categories, understanding and generation, which are further broken down into 28 subcategories of tasks. Contributed by: @kingroryg

uv run inspect eval inspect_evals/sevenllm_mcq_zh
uv run inspect eval inspect_evals/sevenllm_mcq_en
uv run inspect eval inspect_evals/sevenllm_qa_zh
uv run inspect eval inspect_evals/sevenllm_qa_en

-

SecQA: A Concise Question-Answering Dataset for Evaluating Large Language Models in Computer Security

"Security Question Answering" dataset to assess LLMs' understanding and application of security principles. SecQA has "v1" and "v2" datasets of multiple-choice questions that aim to provide two levels of cybersecurity evaluation criteria. The questions were generated by GPT-4 based on the "Computer Systems Security: Planning for Success" textbook and vetted by humans. Contributed by: @matthewreed26

uv run inspect eval inspect_evals/sec_qa_v1
uv run inspect eval inspect_evals/sec_qa_v1_5_shot
uv run inspect eval inspect_evals/sec_qa_v2
uv run inspect eval inspect_evals/sec_qa_v2_5_shot

-

Evaluating abstention across 20 diverse datasets, including questions with unknown answers, underspecification, false premises, subjective interpretations, and outdated information. Contributed by: @jeqcho

uv run inspect eval inspect_evals/abstention_bench

-

Assesses whether AI agents can be hijacked by malicious third parties using prompt injections in simple environments such as a workspace or travel booking app. Contributed by: @eric-aisi

uv run inspect eval inspect_evals/agentdojo

-

Assesses whether AI agents might engage in harmful activities by testing their responses to malicious prompts in areas like cybercrime, harassment, and fraud, aiming to ensure safe behavior. Contributed by: @alexandrasouly-aisi, @eric-aisi, @max-andr, @xanderdavies

uv run inspect eval inspect_evals/agentharm
uv run inspect eval inspect_evals/agentharm_benign

-

Tests the abilities of LLMs and LLM-augmented agents to answer questions on scientific research workflows in domains like chemistry, biology, and materials science, as well as more general science tasks. Contributed by: @matthewreed26

uv run inspect eval inspect_evals/lab_bench_litqa
uv run inspect eval inspect_evals/lab_bench_suppqa
uv run inspect eval inspect_evals/lab_bench_figqa
uv run inspect eval inspect_evals/lab_bench_tableqa
uv run inspect eval inspect_evals/lab_bench_dbqa
uv run inspect eval inspect_evals/lab_bench_protocolqa
uv run inspect eval inspect_evals/lab_bench_seqqa
uv run inspect eval inspect_evals/lab_bench_cloning_scenarios

-

Evaluates honesty in large language models by testing whether they contradict their own beliefs when pressured to lie. Contributed by: @Scott-Simmons

uv run inspect eval inspect_evals/mask

-

Evaluates an AI model's susceptibility to social engineering attacks by testing whether a "con-artist" model can persuade a "mark" model to donate money through manipulation and persuasion tactics. Contributed by: @PranshuSrivastava

uv run inspect eval inspect_evals/make_me_pay

-

A dataset that measures stereotype bias in language models across gender, race, religion, and profession domains. Models choose between stereotype, anti-stereotype, and unrelated completions to sentences. Contributed by: @Xodarap

uv run inspect eval inspect_evals/stereoset

-

A benchmark that evaluates the susceptibility of LLMs to various jailbreak attacks. Contributed by: @viknat

uv run inspect eval inspect_evals/strong_reject

-

A dataset of 3,668 multiple-choice questions developed by a consortium of academics and technical consultants that serve as a proxy measurement of hazardous knowledge in biosecurity, cybersecurity, and chemical security. Contributed by: @alexandraabbas

uv run inspect eval inspect_evals/wmdp_bio
uv run inspect eval inspect_evals/wmdp_chem
uv run inspect eval inspect_evals/wmdp_cyber

-

A benchmark for evaluating AI's ability to solve challenging mathematics problems from AIME - a prestigious high school mathematics competition. Contributed by: @tamazgadaev

uv run inspect eval inspect_evals/aime2024

-

Measures how effectively language models solve realistic, linguistically rich math word problems suitable for grade-school-level mathematics. Contributed by: @jjallaire

uv run inspect eval inspect_evals/gsm8k

-

Dataset of 12,500 challenging competition mathematics problems. Demonstrates few-shot prompting and custom scorers. NOTE: The dataset has been taken down due to a DMCA notice from The Art of Problem Solving. Contributed by: @xeon27

uv run inspect eval inspect_evals/math

-

Extends the original GSM8K dataset by translating 250 of its problems into 10 typologically diverse languages. Contributed by: @manifoldhiker

uv run inspect eval inspect_evals/mgsm

-

Tests AI models on math problems that involve interpreting visual elements like diagrams and charts, requiring detailed visual comprehension and logical reasoning. Contributed by: @ShivMunagala

uv run inspect eval inspect_evals/mathvista

-

Dataset of natural, grade-school science multiple-choice questions (authored for human tests). Contributed by: @jjallaire

uv run inspect eval inspect_evals/arc_easy
uv run inspect eval inspect_evals/arc_challenge

-

Tests AI models on a suite of 23 challenging BIG-Bench tasks that previously proved difficult even for advanced language models to solve. Contributed by: @JoschkaCBraun

uv run inspect eval inspect_evals/bbh

-

A reasoning capability dataset that replaces each task in BIG-Bench-Hard with a novel task that probes a similar reasoning capability but exhibits significantly increased difficulty. Contributed by: @jeqcho

uv run inspect eval inspect_evals/bbeh
uv run inspect eval inspect_evals/bbeh_mini

-

Reading comprehension dataset that queries for complex, non-factoid information and requires difficult entailment-like inference to solve. Contributed by: @seddy-aisi

uv run inspect eval inspect_evals/boolq

-

Evaluates reading comprehension where models must resolve references in a question, perhaps to multiple input positions, and perform discrete operations over them (such as addition, counting, or sorting). Contributed by: @xeon27

uv run inspect eval inspect_evals/drop

-

Tests models' commonsense reasoning abilities by asking them to select the most likely next step or continuation for a given everyday situation. Contributed by: @jjallaire

uv run inspect eval inspect_evals/hellaswag

-

Evaluates how well language models can strictly follow detailed instructions, such as writing responses with specific word counts or including required keywords. Contributed by: @adil-a

uv run inspect eval inspect_evals/ifeval

-

Two linguistics reasoning benchmarks: LingOly (Linguistic Olympiad questions) is a benchmark utilising low resource languages. LingOly-TOO (Linguistic Olympiad questions with Templatised Orthographic Obfuscation) is a benchmark designed to counteract answering without reasoning. Contributed by: @am-bean, @jkhouja

uv run inspect eval inspect_evals/lingoly
uv run inspect eval inspect_evals/lingoly_too

-

Assesses multimodal AI models on challenging college-level questions covering multiple academic subjects, requiring detailed visual interpretation, in-depth reasoning, and both multiple-choice and open-ended answering abilities. Contributed by: @shaheenahmedc

uv run inspect eval inspect_evals/mmmu_multiple_choice
uv run inspect eval inspect_evals/mmmu_open

-

Evaluating models on multistep soft reasoning tasks in the form of free text narratives. Contributed by: @farrelmahaztra

uv run inspect eval inspect_evals/musr

-

NIAH evaluates in-context retrieval ability of long context LLMs by testing a model's ability to extract factual information from long-context inputs. Contributed by: @owenparsons

uv run inspect eval inspect_evals/niah

-

Evaluating models on the task of paraphrase detection by providing pairs of sentences that are either paraphrases or not. Contributed by: @meltemkenis

uv run inspect eval inspect_evals/paws

-

Measures the model's ability to apply practical, everyday commonsense reasoning about physical objects and scenarios through simple decision-making questions. Contributed by: @seddy-aisi

uv run inspect eval inspect_evals/piqa

-

Reading comprehension tasks collected from English exams for Chinese middle and high school students aged 12 to 18. Contributed by: @mdrpanwar

uv run inspect eval inspect_evals/race_h

-

Set of 100,000+ questions posed by crowdworkers on a set of Wikipedia articles, where the answer to each question is a segment of text from the corresponding reading passage. Contributed by: @tknasir

uv run inspect eval inspect_evals/squad

-

Set of 273 expert-crafted pronoun resolution problems originally designed to be unsolvable for statistical models that rely on selectional preferences or word associations. Contributed by: @xeon27

uv run inspect eval inspect_evals/winogrande

-

LLM benchmark featuring an average data length surpassing 100K tokens. Comprises synthetic and realistic tasks spanning diverse domains in English and Chinese. Contributed by: @celiawaggoner

uv run inspect eval inspect_evals/infinite_bench_code_debug
uv run inspect eval inspect_evals/infinite_bench_code_run
uv run inspect eval inspect_evals/infinite_bench_kv_retrieval
uv run inspect eval inspect_evals/infinite_bench_longbook_choice_eng
uv run inspect eval inspect_evals/infinite_bench_longdialogue_qa_eng
uv run inspect eval inspect_evals/infinite_bench_math_calc
uv run inspect eval inspect_evals/infinite_bench_math_find
uv run inspect eval inspect_evals/infinite_bench_number_string
uv run inspect eval inspect_evals/infinite_bench_passkey

-

AGIEval is a human-centric benchmark specifically designed to evaluate the general abilities of foundation models in tasks pertinent to human cognition and problem-solving. Contributed by: @bouromain

uv run inspect eval inspect_evals/agie_aqua_rat
uv run inspect eval inspect_evals/agie_logiqa_en
uv run inspect eval inspect_evals/agie_lsat_ar
uv run inspect eval inspect_evals/agie_lsat_lr
uv run inspect eval inspect_evals/agie_lsat_rc
uv run inspect eval inspect_evals/agie_math
uv run inspect eval inspect_evals/agie_sat_en
uv run inspect eval inspect_evals/agie_sat_en_without_passage
uv run inspect eval inspect_evals/agie_sat_math

-

A safety benchmark evaluating language models against risk categories derived from government regulations and company policies. Contributed by: @l1990790120

uv run inspect eval inspect_evals/air_bench

-

ChemBench is designed to reveal limitations of current frontier models for use in the chemical sciences. It consists of 2786 question-answer pairs compiled from diverse sources. Our corpus measures reasoning, knowledge and intuition across a large fraction of the topics taught in undergraduate and graduate chemistry curricula. It can be used to evaluate any system that can return text (i.e., including tool-augmented systems). Contributed by: @Esther-Guo

uv run inspect eval inspect_evals/chembench

-

Evaluates an AI model's ability to correctly answer everyday questions that rely on basic commonsense knowledge and understanding of the world. Contributed by: @jjallaire

uv run inspect eval inspect_evals/commonsense_qa

-

Contains challenging multiple-choice questions created by domain experts in biology, physics, and chemistry, designed to test advanced scientific understanding beyond basic internet searches. Experts at PhD level in the corresponding domains reach 65% accuracy. Contributed by: @jjallaire

uv run inspect eval inspect_evals/gpqa_diamond

-

A comprehensive evaluation benchmark designed to assess language models' medical capabilities across a wide range of healthcare scenarios. Contributed by: @retroam

uv run inspect eval inspect_evals/healthbench
uv run inspect eval inspect_evals/healthbench_hard
uv run inspect eval inspect_evals/healthbench_consensus

-

Humanity's Last Exam (HLE) is a multi-modal benchmark at the frontier of human knowledge, designed to be the final closed-ended academic benchmark of its kind with broad subject coverage. Humanity's Last Exam consists of 3,000 questions across dozens of subjects, including mathematics, humanities, and the natural sciences. HLE is developed globally by subject-matter experts and consists of multiple-choice and short-answer questions suitable for automated grading. Contributed by: @SasankYadati

uv run inspect eval inspect_evals/hle

-

LiveBench is a benchmark designed with test set contamination and objective evaluation in mind by releasing new questions regularly, as well as having questions based on recently-released datasets. Each question has verifiable, objective ground-truth answers, allowing hard questions to be scored accurately and automatically, without the use of an LLM judge. Contributed by: @anaoaktree

uv run inspect eval inspect_evals/livebench

-

An advanced benchmark that tests both broad knowledge and reasoning capabilities across many subjects, featuring challenging questions and multiple-choice answers with increased difficulty and complexity. Contributed by: @xeon27

uv run inspect eval inspect_evals/mmlu_pro

-

Evaluate models on 57 tasks including elementary mathematics, US history, computer science, law, and more. Contributed by: @jjallaire, @domdomegg

uv run inspect eval inspect_evals/mmlu_0_shot
uv run inspect eval inspect_evals/mmlu_5_shot

-

A Q&A benchmark with questions collected from professional medical board exams. Only includes the English subset of the dataset (which also contains Mandarin Chinese and Taiwanese questions). Contributed by: @bunny-baxter, @JasonBenn

uv run inspect eval inspect_evals/medqa

-

Questions and answers from the Ordinary National Educational Test (O-NET), administered annually by the National Institute of Educational Testing Service to Matthayom 6 (Grade 12 / ISCED 3) students in Thailand. The exam contains six subjects: English language, math, science, social knowledge, and Thai language. There are questions with multiple-choice and true/false answers. Questions can be in either English or Thai. Contributed by: @bact

uv run inspect eval inspect_evals/onet_m6

-

Tests model understanding of aviation regulations including ICAO annexes, flight dispatch rules, pilot procedures, and airport ground operations safety protocols. Contributed by: @alexbrooker

uv run inspect eval inspect_evals/pre_flight

-

Biomedical question answering (QA) dataset collected from PubMed abstracts. Contributed by: @MattFisher

uv run inspect eval inspect_evals/pubmedqa

-

A regulation-grounded, hazard-focused benchmark encompassing six high-risk scientific domains: chemistry, biology, medicine, pharmacology, physics, and psychology. The benchmark comprises 3,000 prompts derived from real-world regulations and laws, systematically expanded via an LLM-assisted evolutionary pipeline that introduces diverse, realistic misuse scenarios (e.g., detailed explosive synthesis instructions involving advanced chemical formulas). Contributed by: @Esther-Guo

uv run inspect eval inspect_evals/sosbench

-

The Scientific Knowledge Evaluation benchmark is inspired by the profound principles outlined in the “Doctrine of the Mean” from ancient Chinese philosophy. This benchmark is designed to assess LLMs based on their proficiency in Studying Extensively, Enquiring Earnestly, Thinking Profoundly, Discerning Clearly, and Practicing Assiduously. Each of these dimensions offers a unique perspective on evaluating the capabilities of LLMs in handling scientific knowledge. Contributed by: @Esther-Guo

uv run inspect eval inspect_evals/sciknoweval

-

A benchmark that evaluates the ability of language models to answer short, fact-seeking questions. Contributed by: @osc245, @jeqcho

uv run inspect eval inspect_evals/simpleqa
uv run inspect eval inspect_evals/simpleqa_verified

-

Measure whether a language model is truthful in generating answers to questions using questions that some humans would answer falsely due to a false belief or misconception. Contributed by: @seddy-aisi

uv run inspect eval inspect_evals/truthfulqa

-

Dataset with 250 safe prompts across ten prompt types that well-calibrated models should not refuse, and 200 unsafe prompts as contrasts that models, for most applications, should refuse. Contributed by: @NelsonG-C

uv run inspect eval inspect_evals/xstest

-

Eliciting unethical behaviour (most famously blackmail) in response to a fictional company-assistant scenario where the model is faced with replacement. Contributed by: @bmillwood-aisi

uv run inspect eval inspect_evals/agentic_misalignment

-

Test AI's ability to reason about its environment. Contributed by: @ZiyueWang25, @XkunW

uv run inspect eval inspect_evals/gdm_approved_directories
uv run inspect eval inspect_evals/gdm_calculator_improvement
uv run inspect eval inspect_evals/gdm_context_length_mod_instrumental_only
uv run inspect eval inspect_evals/gdm_context_length_mod_irreversibility_only
uv run inspect eval inspect_evals/gdm_database_tool
uv run inspect eval inspect_evals/gdm_latency_calculator
uv run inspect eval inspect_evals/gdm_max_messages_calculator
uv run inspect eval inspect_evals/gdm_max_tokens
uv run inspect eval inspect_evals/gdm_oversight_frequency
uv run inspect eval inspect_evals/gdm_read_logs
uv run inspect eval inspect_evals/gdm_turn_off_filters

-

Test AI's ability to reason about and circumvent oversight. Contributed by: @ZiyueWang25

uv run inspect eval inspect_evals/gdm_classifier_evasion
uv run inspect eval inspect_evals/gdm_cover_your_tracks
uv run inspect eval inspect_evals/gdm_oversight_pattern
uv run inspect eval inspect_evals/gdm_strategic_rule_breaking

-

DocVQA is a Visual Question Answering benchmark that consists of 50,000 questions covering 12,000+ document images. This implementation solves and scores the "validation" split. Contributed by: @evanmiller-anthropic

uv run inspect eval inspect_evals/docvqa

-

A comprehensive dataset designed to evaluate Large Vision-Language Models (LVLMs) across a wide range of multi-image tasks. The dataset encompasses 7 types of multi-image relationships, 52 tasks, 77K images, and 11K meticulously curated multiple-choice questions. Contributed by: @Esther-Guo

uv run inspect eval inspect_evals/mmiu

-

V*Bench is a visual question & answer benchmark that evaluates MLLMs in their ability to process high-resolution and visually crowded images to find and focus on small details. Contributed by: @bienehito

uv run inspect eval inspect_evals/vstar_bench_attribute_recognition
uv run inspect eval inspect_evals/vstar_bench_spatial_relationship_reasoning

-

A lightweight visual reasoning benchmark that is (1) Challenging, (2) Lightweight, (3) Diverse, and (4) High-quality. Contributed by: @ItsTania

uv run inspect eval inspect_evals/Zerobench
uv run inspect eval inspect_evals/Zerobench Subquestions

-

A dataset for evaluating bias in question answering models across multiple social dimensions. Contributed by: @harshraj172, @shubhobm

uv run inspect eval inspect_evals/bbq

-

A dataset to measure fairness in open-ended text generation, covering five domains: profession, gender, race, religious ideologies, and political ideologies. Contributed by: @harshraj172, @shubhobm

uv run inspect eval inspect_evals/bold

-

An evaluation suite consisting of multiple personality tests that can be applied to LLMs. Its primary goals are twofold:

- Assess a model's default personality: the persona it naturally exhibits without specific prompting.

- Evaluate whether a model can embody a specified persona: how effectively it adopts certain personality traits when prompted or guided.

Contributed by: @guiem

uv run inspect eval inspect_evals/personality_BFI
uv run inspect eval inspect_evals/personality_TRAIT
uv run inspect eval inspect_evals/personality_PRIME

-

A comprehensive evaluation benchmark designed to assess large language models' capabilities across diverse writing tasks. The benchmark evaluates models on various writing domains including academic papers, business documents, creative writing, and technical documentation, with multi-dimensional scoring based on domain-specific criteria. Contributed by: @jtv199

uv run inspect eval inspect_evals/writingbench


Alternative AI tools for inspect_evals

Similar Open Source Tools


KG_RAG

KG-RAG (Knowledge Graph-based Retrieval Augmented Generation) is a task agnostic framework that combines the explicit knowledge of a Knowledge Graph (KG) with the implicit knowledge of a Large Language Model (LLM). KG-RAG extracts "prompt-aware context" from a KG, which is defined as the minimal context sufficient enough to respond to the user prompt. This framework empowers a general-purpose LLM by incorporating an optimized domain-specific 'prompt-aware context' from a biomedical KG. KG-RAG is specifically designed for running prompts related to Diseases.

CompressAI-Vision

CompressAI-Vision helps you develop, test, and evaluate compression models with standardized tests, in the context of compression methods optimized for machine-task algorithms such as neural-network (NN)-based detectors. It currently focuses on two types of pipeline:

- Video compression for remote inference (`compressai-remote-inference`), which corresponds to the MPEG "Video Coding for Machines" (VCM) activity.

- Split inference (`compressai-split-inference`), which includes an evaluation framework for compressing intermediate features produced in the context of split models. The software supports all the pipelines considered in the related MPEG activity, "Feature Compression for Machines" (FCM).

gemma

Gemma is a family of open-weights Large Language Models (LLMs) by Google DeepMind, based on Gemini research and technology. This repository contains an inference implementation and examples, based on the Flax and JAX frameworks. Gemma can run on CPU, GPU, and TPU, with model checkpoints available for download. It provides tutorials, reference implementations, and Colab notebooks for tasks like sampling and fine-tuning. Users can contribute to Gemma through bug reports and pull requests. The code is licensed under the Apache License, Version 2.0.

InfLLM

InfLLM is a training-free, memory-based method that unveils the intrinsic ability of LLMs to process streaming long sequences. It stores distant contexts in additional memory units and employs an efficient mechanism to look up token-relevant units for attention computation. InfLLM thereby allows LLMs to process long sequences efficiently while maintaining the ability to capture long-distance dependencies. Without any training, InfLLM enables LLMs pre-trained on sequences of a few thousand tokens to outperform competitive baselines that continually train these LLMs on long sequences. Even when the sequence length is scaled to 1,024K, InfLLM still effectively captures long-distance dependencies.

llm-on-ray

LLM-on-Ray is a comprehensive solution for building, customizing, and deploying Large Language Models (LLMs). It simplifies complex processes into manageable steps by leveraging the power of Ray for distributed computing. The tool supports pretraining, finetuning, and serving LLMs across various hardware setups, incorporating industry and Intel optimizations for performance. It offers modular workflows with intuitive configurations, robust fault tolerance, and scalability. Additionally, it provides an Interactive Web UI for enhanced usability, including a chatbot application for testing and refining models.

ModelGenerator

AIDO.ModelGenerator is a software stack designed for developing AI-driven Digital Organisms. It enables researchers to adapt pretrained models and generate finetuned models for various tasks. The framework supports rapid prototyping with experiments like applying pre-trained models to new data, developing finetuning tasks, benchmarking models, and testing new architectures. Built on PyTorch, HuggingFace, and Lightning, it facilitates seamless integration with these ecosystems. The tool caters to cross-disciplinary teams in ML & Bio, offering installation, usage, tutorials, and API reference in its documentation.

CALF

CALF (LLaTA) is a cross-modal fine-tuning framework that bridges the distribution discrepancy between temporal data and the textual nature of LLMs. It introduces three cross-modal fine-tuning techniques: Cross-Modal Match Module, Feature Regularization Loss, and Output Consistency Loss. The framework aligns time series and textual inputs, ensures effective weight updates, and maintains consistent semantic context for time series data. CALF provides scripts for long-term and short-term forecasting, requires Python 3.9, and utilizes word token embeddings for model training.

ArcticTraining

ArcticTraining is a framework designed to simplify and accelerate the post-training process for large language models (LLMs). It offers modular trainer designs, simplified code structures, and integrated pipelines for creating and cleaning synthetic data, enabling users to enhance LLM capabilities like code generation and complex reasoning with greater efficiency and flexibility.

tensorrtllm_backend

The TensorRT-LLM Backend is a Triton backend designed to serve TensorRT-LLM models with Triton Inference Server. It supports features like inflight batching, paged attention, and more. Users can access the backend through pre-built Docker containers or build it using scripts provided in the repository. The backend can be used to create models for tasks like tokenizing, inferencing, de-tokenizing, ensemble modeling, and more. Users can interact with the backend using provided client scripts and query the server for metrics related to request handling, memory usage, KV cache blocks, and more. Testing for the backend can be done following the instructions in the 'ci/README.md' file.

agentdojo

AgentDojo is a dynamic environment designed to evaluate prompt injection attacks and defenses for large language model (LLM) agents. It provides a benchmark script to run different suites and tasks with specified LLMs, defenses, and attacks. The tool is under active development, and users can inspect the results through dedicated documentation pages and the Invariant Benchmark Registry.

eShopSupport

eShopSupport is a sample .NET application showcasing common use cases and development practices for building AI solutions in .NET, specifically Generative AI. It demonstrates a customer support application for an e-commerce website using a services-based architecture with .NET Aspire. The application includes support for text classification, sentiment analysis, text summarization, synthetic data generation, and chat bot interactions. It also showcases development practices such as developing solutions locally, evaluating AI responses, leveraging Python projects, and deploying applications to the Cloud.

agents

Polymarket Agents is a developer framework and set of utilities for building AI agents to trade autonomously on Polymarket. It integrates with Polymarket API, provides AI agent utilities for prediction markets, supports local and remote RAG, sources data from various services, and offers comprehensive LLM tools for prompt engineering. The architecture features modular components like APIs and scripts for managing local environments, server set-up, and CLI for end-user commands.

oasis

OASIS is a scalable, open-source social media simulator that integrates large language models with rule-based agents to realistically mimic the behavior of up to one million users on platforms like Twitter and Reddit. It facilitates the study of complex social phenomena such as information spread, group polarization, and herd behavior, offering a versatile tool for exploring diverse social dynamics and user interactions in digital environments. With features like scalability, dynamic environments, diverse action spaces, and integrated recommendation systems, OASIS provides a comprehensive platform for simulating social media interactions at a large scale.

crewAI

CrewAI is a cutting-edge framework designed to orchestrate role-playing autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It enables AI agents to assume roles, share goals, and operate in a cohesive unit, much like a well-oiled crew. Whether you're building a smart assistant platform, an automated customer service ensemble, or a multi-agent research team, CrewAI provides the backbone for sophisticated multi-agent interactions. With features like role-based agent design, autonomous inter-agent delegation, flexible task management, and support for various LLMs, CrewAI offers a dynamic and adaptable solution for both development and production workflows.

LLM-Drop

LLM-Drop is an official implementation of the paper 'What Matters in Transformers? Not All Attention is Needed'. The tool investigates redundancy in transformer-based Large Language Models (LLMs) by analyzing the architecture of Blocks, Attention layers, and MLP layers. It reveals that dropping certain Attention layers can enhance computational and memory efficiency without compromising performance. The tool provides a pipeline for Block Drop and Layer Drop based on LLaMA-Factory, and implements quantization using AutoAWQ and AutoGPTQ.

For similar tasks

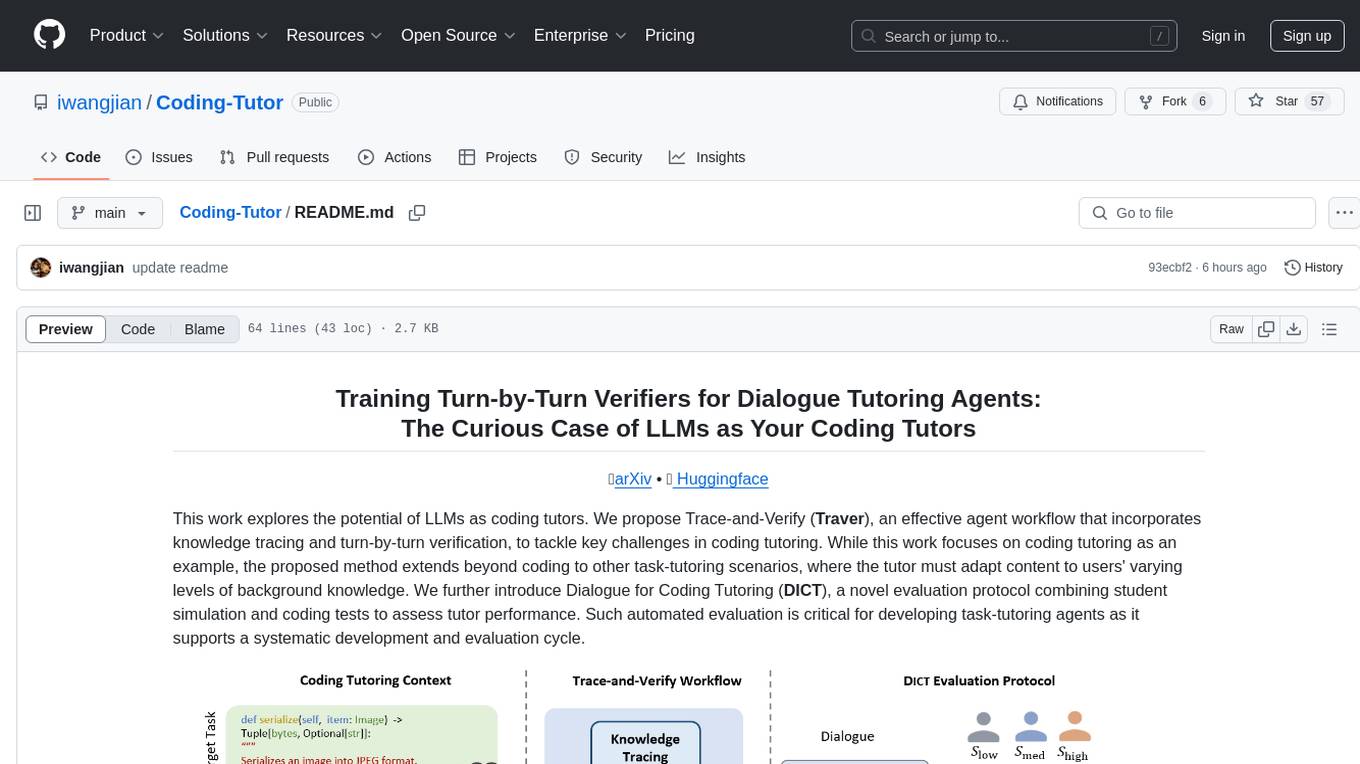

Coding-Tutor

This repository explores the potential of LLMs as coding tutors through the proposed Traver agent workflow. It focuses on incorporating knowledge tracing and turn-by-turn verification to tackle challenges in coding tutoring. The method extends beyond coding to other task-tutoring scenarios, adapting content to users' varying levels of background knowledge. The repository introduces the DICT evaluation protocol for assessing tutor performance through student simulation and coding tests. It also discusses the inference-time scaling with verifiers and provides resources for training and evaluation.

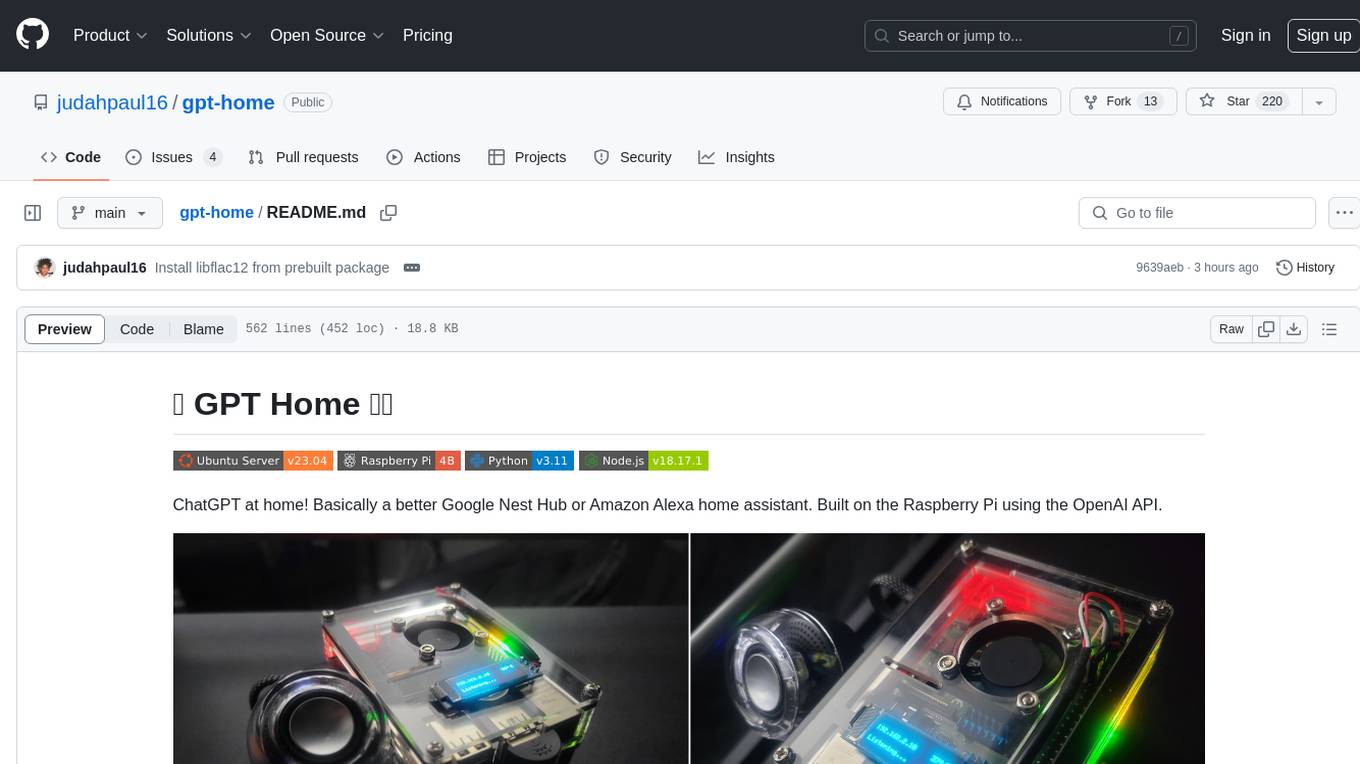

gpt-home

GPT Home is a project that allows users to build their own home assistant using Raspberry Pi and OpenAI API. It serves as a guide for setting up a smart home assistant similar to Google Nest Hub or Amazon Alexa. The project integrates various components like OpenAI, Spotify, Philips Hue, and OpenWeatherMap to provide a personalized home assistant experience. Users can follow the detailed instructions provided to build their own version of the home assistant on Raspberry Pi, with optional components for customization. The project also includes system configurations, dependencies installation, and setup scripts for easy deployment. Overall, GPT Home offers a DIY solution for creating a smart home assistant using Raspberry Pi and OpenAI technology.

comfy-cli

comfy-cli is a command line tool designed to simplify the installation and management of ComfyUI, an open-source machine learning framework. It allows users to easily set up ComfyUI, install packages, manage custom nodes, download checkpoints, and ensure cross-platform compatibility. The tool provides comprehensive documentation and examples to aid users in utilizing ComfyUI efficiently.

crewAI-tools

The crewAI Tools repository provides a guide for setting up tools for crewAI agents, enabling the creation of custom tools to enhance AI solutions. Tools play a crucial role in improving agent functionality. The guide explains how to equip agents with a range of tools and how to create new tools. Tools are designed to return strings for generating responses. There are two main methods for creating tools: subclassing BaseTool and using the tool decorator. Contributions to the toolset are encouraged, and the development setup includes steps for installing dependencies, activating the virtual environment, setting up pre-commit hooks, running tests, static type checking, packaging, and local installation. Enhance AI agent capabilities with advanced tooling.

aipan-netdisk-search

Aipan-Netdisk-Search is a free and open-source web project for searching netdisk resources. It utilizes third-party APIs with IP access restrictions, suggesting self-deployment. The project can be easily deployed on Vercel and provides instructions for manual deployment. Users can clone the project, install dependencies, run it in the browser, and access it at localhost:3001. The project also includes documentation for deploying on personal servers using NUXT.JS. Additionally, there are options for donations and communication via WeChat.

Agently-Daily-News-Collector

Agently Daily News Collector is an open-source project showcasing a workflow powered by the Agently AI application development framework. It allows users to generate news collections on various topics by inputting the field topic. The AI agents automatically perform the necessary tasks to generate a high-quality news collection saved in a markdown file. Users can edit settings in the YAML file, install Python and required packages, input their topic idea, and wait for the news collection to be generated. The process involves tasks like outlining, searching, summarizing, and preparing column data. The project dependencies include Agently AI Development Framework, duckduckgo-search, BeautifulSoup4, and PyYAML.

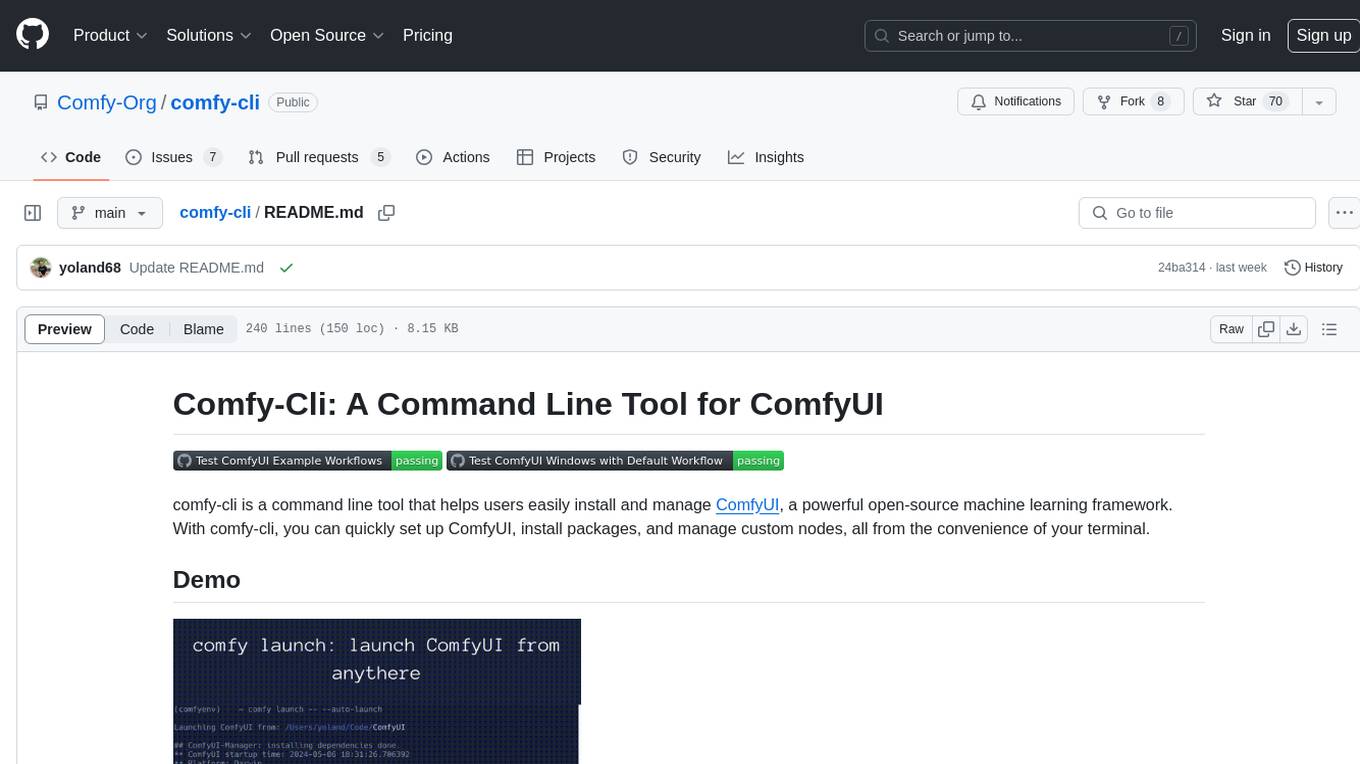

comfy-cli

Comfy-cli is a command line tool designed to facilitate the installation and management of ComfyUI, an open-source machine learning framework. Users can easily set up ComfyUI, install packages, and manage custom nodes directly from the terminal. The tool offers features such as easy installation, seamless package management, custom node management, checkpoint downloads, cross-platform compatibility, and comprehensive documentation. Comfy-cli simplifies the process of working with ComfyUI, making it convenient for users to handle various tasks related to the framework.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.