BrowserGym

🌎💪 BrowserGym, a Gym environment for web task automation

Stars: 883

BrowserGym is an open, easy-to-use, and extensible framework designed to accelerate web agent research. It ships with benchmarks such as MiniWoB, WebArena, VisualWebArena, WorkArena, AssistantBench, and WebLINX, and new web benchmarks can be designed by inheriting from the AbstractBrowserTask class. Core functionality, experiment utilities, and individual benchmarks are distributed as separate packages. The project also documents a local development setup and provides boilerplate code for running agents on its tasks. BrowserGym is not a consumer product and should be used with caution.

README:


```bash
pip install browsergym
```

[!WARNING] BrowserGym is meant to provide an open, easy-to-use and extensible framework to accelerate the field of web agent research. It is not meant to be a consumer product. Use with caution!

[!TIP] 🚀 Check out AgentLab ✨! A seamless framework to implement, test, and evaluate your web agents on all BrowserGym benchmarks.

https://github.com/ServiceNow/BrowserGym/assets/26232819/e0bfc788-cc8e-44f1-b8c3-0d1114108b85

Example of a GPT-4V agent executing open-ended tasks (top row, interactive chat), as well as WebArena and WorkArena tasks (bottom row).

BrowserGym includes the following benchmarks by default:

- MiniWoB

- WebArena

- VisualWebArena

- WorkArena

- AssistantBench

- WebLINX (static benchmark)

Designing new web benchmarks with BrowserGym is easy: it only requires inheriting from the AbstractBrowserTask class.
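To illustrate the contract a task implements, here is a minimal, self-contained sketch. It does not import BrowserGym: `SketchBrowserTask` is a hypothetical stand-in that mirrors the setup/validate/teardown shape we assume AbstractBrowserTask has (setup returning a goal and an info dict, validate returning reward, done, message, and info). Check `browsergym.core.task` for the real signatures before subclassing. `SayHelloTask` and the chat-message format (`role`/`message` keys) are likewise illustrative assumptions.

```python
from __future__ import annotations

from abc import ABC, abstractmethod


class SketchBrowserTask(ABC):
    """Hypothetical stand-in for AbstractBrowserTask (shape assumed, not imported)."""

    def __init__(self, seed: int) -> None:
        self.seed = seed

    @abstractmethod
    def setup(self, page) -> tuple[str, dict]:
        """Prepare the browser page; return (goal, info)."""

    @abstractmethod
    def validate(self, page, chat_messages: list[dict]) -> tuple[float, bool, str, dict]:
        """Score the current state; return (reward, done, message, info)."""

    @abstractmethod
    def teardown(self) -> None:
        """Release anything created in setup()."""


class SayHelloTask(SketchBrowserTask):
    """Hypothetical task: succeed once the agent has said 'hello' in the chat."""

    def setup(self, page):
        page.goto("https://www.google.com/")  # any start page works for this toy task
        return "Send the message 'hello' to the user.", {}

    def validate(self, page, chat_messages):
        # assumed message format: {"role": "assistant" | "user", "message": str}
        said_hello = any(
            m.get("role") == "assistant" and "hello" in m.get("message", "").lower()
            for m in chat_messages
        )
        return (1.0 if said_hello else 0.0), said_hello, "", {}

    def teardown(self):
        pass  # nothing to clean up in this toy task
```

Keeping the base class abstract means a subclass that forgets one of the three methods fails at construction time rather than midway through an episode.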

To use BrowserGym, install one of the following packages:

```bash
pip install browsergym                  # (recommended) everything below
pip install browsergym-experiments      # experiment utilities (agent, loop, benchmarks) + everything below
pip install browsergym-core             # core functionalities only (no benchmark, just the openended task)
pip install browsergym-miniwob          # core + miniwob
pip install browsergym-webarena         # core + webarena
pip install browsergym-visualwebarena   # core + visualwebarena
pip install browsergym-workarena        # core + workarena
pip install browsergym-assistantbench   # core + assistantbench
pip install weblinx-browsergym          # core + weblinx
```

Then set up Playwright by running:

```bash
playwright install chromium
```

Finally, each benchmark has its own specific setup that requires additional steps:

- for MiniWoB++, see miniwob/README.md

- for WebArena, see webarena/README.md

- for VisualWebArena, see visualwebarena/README.md

- for WorkArena, see WorkArena

- for AssistantBench, see assistantbench/README.md
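Once installed, a quick way to confirm which of the packages above are present is to query the installed distribution metadata. This sketch uses only the standard library (`importlib.metadata`, Python 3.8+); the distribution names are copied from the pip commands above, and the helper name is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names taken from the pip install commands above.
BROWSERGYM_DISTRIBUTIONS = [
    "browsergym",
    "browsergym-experiments",
    "browsergym-core",
    "browsergym-miniwob",
    "browsergym-webarena",
    "browsergym-visualwebarena",
    "browsergym-workarena",
    "browsergym-assistantbench",
    "weblinx-browsergym",
]


def installed_versions(names=BROWSERGYM_DISTRIBUTIONS):
    """Map each distribution name to its installed version, or None if absent."""
    found = {}
    for name in names:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        print(f"{name:28} {ver or 'not installed'}")
```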

To install BrowserGym locally for development, use the following commands:

```bash
git clone git@github.com:ServiceNow/BrowserGym.git
cd BrowserGym
make install
```

Contributions are welcome! 😊
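In the open-ended boilerplate that follows, the agent is the `action = ...` placeholder: any function mapping an observation to an action string can fill it. The sketch below is a hypothetical echo agent plus an episode runner with a hard step cap. It assumes observations expose the chat history as `obs["chat_messages"]`, a list of dicts with `role` and `message` keys, and that the default action set accepts Python-call strings such as `send_msg_to_user(...)` and `noop()`; verify both against the observation and action-space documentation of your BrowserGym version.

```python
def echo_agent(obs: dict) -> str:
    """Hypothetical policy: echo the latest user chat message back to the user.

    Assumes obs["chat_messages"] is a list of {"role": ..., "message": ...} dicts
    and that actions are Python-call strings such as 'send_msg_to_user("...")'.
    """
    user_msgs = [m["message"] for m in obs.get("chat_messages", []) if m.get("role") == "user"]
    if not user_msgs:
        return "noop()"
    reply = "You said: " + user_msgs[-1]
    # repr() yields a correctly quoted and escaped Python string literal
    return f"send_msg_to_user({reply!r})"


def run_episode(env, policy, max_steps: int = 50) -> float:
    """Run one episode with a hard step cap and return the summed reward."""
    obs, info = env.reset()
    total_reward = 0.0
    try:
        for _ in range(max_steps):
            action = policy(obs)
            obs, reward, terminated, truncated, info = env.step(action)
            total_reward += reward
            if terminated or truncated:
                break
    finally:
        env.close()  # release the browser even if the policy raises
    return total_reward
```

With a real environment, `run_episode(env, echo_agent)` would stand in for the `while True` loop of the boilerplate; the step cap keeps a misbehaving agent from looping forever.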

Boilerplate code to run an agent on an interactive, open-ended task:

```python
import gymnasium as gym
import browsergym.core  # register the openended task as a gym environment

# start an openended environment
env = gym.make(
    "browsergym/openended",
    task_kwargs={"start_url": "https://www.google.com/"},  # starting URL
    wait_for_user_message=True,  # wait for a user message after each agent message sent to the chat
)

# run the environment <> agent loop until termination
obs, info = env.reset()
while True:
    action = ...  # implement your agent here
    obs, reward, terminated, truncated, info = env.step(action)
    if terminated or truncated:
        break

# release the environment
env.close()
```

MiniWoB

```python
import gymnasium as gym
import browsergym.miniwob  # register miniwob tasks as gym environments

# start a miniwob task
env = gym.make("browsergym/miniwob.choose-list")
...

# list all the available miniwob tasks
env_ids = [env_id for env_id in gym.envs.registry.keys() if env_id.startswith("browsergym/miniwob")]
print("\n".join(env_ids))
```

WorkArena

```python
import gymnasium as gym
import browsergym.workarena  # register workarena tasks as gym environments

# start a workarena task
env = gym.make("browsergym/workarena.servicenow.order-ipad-pro")
...

# list all the available workarena tasks
env_ids = [env_id for env_id in gym.envs.registry.keys() if env_id.startswith("browsergym/workarena")]
print("\n".join(env_ids))
```

WebArena

```python
import gymnasium as gym
import browsergym.webarena  # register webarena tasks as gym environments

# start a webarena task
env = gym.make("browsergym/webarena.310")
...

# list all the available webarena tasks
env_ids = [env_id for env_id in gym.envs.registry.keys() if env_id.startswith("browsergym/webarena")]
print("\n".join(env_ids))
```

VisualWebArena

```python
import gymnasium as gym
import browsergym.visualwebarena  # register visualwebarena tasks as gym environments

# start a visualwebarena task
env = gym.make("browsergym/visualwebarena.721")
...

# list all the available visualwebarena tasks
env_ids = [env_id for env_id in gym.envs.registry.keys() if env_id.startswith("browsergym/visualwebarena")]
print("\n".join(env_ids))
```

AssistantBench

```python
import gymnasium as gym
import browsergym.assistantbench  # register assistantbench tasks as gym environments

# start an assistantbench task
env = gym.make("browsergym/assistantbench.validation.3")
...

# list all the available assistantbench tasks
env_ids = [env_id for env_id in gym.envs.registry.keys() if env_id.startswith("browsergym/assistantbench")]
print("\n".join(env_ids))
```

If you want to experiment with a demo agent in BrowserGym, follow these steps:

```bash
# conda setup
conda env create -f demo_agent/environment.yml
conda activate demo_agent

# or pip setup
pip install -r demo_agent/requirements.txt

# then download the browser for playwright
playwright install chromium
```

Our demo agent uses OpenAI as its backend, so be sure to set your OPENAI_API_KEY.
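A small pre-flight check can fail fast with a clear message before a browser is launched. The helper below is hypothetical (it is not part of the demo scripts) and only reads the process environment.

```python
import os


def require_env_var(name: str = "OPENAI_API_KEY") -> str:
    """Return the variable's value, or raise a readable error if unset or blank."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"{name} is not set; export it before launching demo_agent/run_demo.py")
    return value
```

Calling `require_env_var()` at the top of a launcher script surfaces a missing key immediately instead of partway through an episode.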

Launch the demo agent as follows:

```bash
# openended (interactive chat mode)
python demo_agent/run_demo.py --task_name openended --start_url https://www.google.com

# miniwob
python demo_agent/run_demo.py --task_name miniwob.click-test

# workarena
python demo_agent/run_demo.py --task_name workarena.servicenow.order-standard-laptop

# webarena
python demo_agent/run_demo.py --task_name webarena.4

# visualwebarena
python demo_agent/run_demo.py --task_name visualwebarena.398
```

You can customize your experience by changing model_name to your preferred LLM (gpt-4o-mini by default), adding screenshots for your VLMs with use_screenshot, and much more!
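Judging from the examples above, the demo's `--task_name` appears to be the registered gym id without its `browsergym/` prefix (compare `browsergym/webarena.310` in the usage snippets with `webarena.4` here); this is an inference, not documented behavior. Under that assumption, a hypothetical helper can turn registry ids into demo commands, using only the `--task_name` and `--start_url` flags shown above.

```python
from __future__ import annotations

import shlex
import subprocess
import sys
from typing import Iterable, Optional

PREFIX = "browsergym/"


def to_task_name(env_id: str) -> str:
    """Strip the 'browsergym/' namespace from a registered gym id (assumed mapping)."""
    if not env_id.startswith(PREFIX):
        raise ValueError(f"not a BrowserGym env id: {env_id!r}")
    return env_id[len(PREFIX):]


def demo_command(task_name: str, start_url: Optional[str] = None) -> list[str]:
    """Build the argv for demo_agent/run_demo.py with the flags shown above."""
    cmd = [sys.executable, "demo_agent/run_demo.py", "--task_name", task_name]
    if start_url is not None:
        cmd += ["--start_url", start_url]
    return cmd


def run_demos(env_ids: Iterable[str], dry_run: bool = True) -> list[list[str]]:
    """Print each command; also execute it unless dry_run is True."""
    commands = [demo_command(to_task_name(env_id)) for env_id in env_ids]
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            # check=True stops at the first demo that exits with an error
            subprocess.run(cmd, check=True)
    return commands
```

Defaulting to a dry run lets you inspect the generated commands before any browser is started; pass `dry_run=False` to launch them one after another.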

To list all available options, run:

```bash
python demo_agent/run_demo.py --help
```

- AgentLab: Seamlessly run agents on benchmarks, collect and analyse traces.

- WorkArena(++): A benchmark for web agents on the ServiceNow platform.

- WebArena: A benchmark of realistic web tasks on self-hosted domains.

- VisualWebArena: A benchmark of realistic visual web tasks on self-hosted domains.

- MiniWoB(++): A collection of over 100 web tasks on synthetic web pages.

- WebLINX: A dataset of real-world web interaction traces.

- AssistantBench: A benchmark of realistic and time-consuming tasks on the open web.

- DoomArena: A framework for AI agent security testing that supports injecting attacks into web pages from BrowserGym environments.

Please use the following two BibTeX entries if you wish to cite BrowserGym:

```bibtex
@article{chezelles2025browsergym,
  title={The BrowserGym Ecosystem for Web Agent Research},
  author={Thibault Le Sellier de Chezelles and Maxime Gasse and Alexandre Lacoste and Massimo Caccia and Alexandre Drouin and L{\'e}o Boisvert and Megh Thakkar and Tom Marty and Rim Assouel and Sahar Omidi Shayegan and Lawrence Keunho Jang and Xing Han L{\`u} and Ori Yoran and Dehan Kong and Frank F. Xu and Siva Reddy and Graham Neubig and Quentin Cappart and Russ Salakhutdinov and Nicolas Chapados},
  journal={Transactions on Machine Learning Research},
  issn={2835-8856},
  year={2025},
  url={https://openreview.net/forum?id=5298fKGmv3},
  note={Expert Certification}
}

@inproceedings{workarena2024,
  title = {{W}ork{A}rena: How Capable are Web Agents at Solving Common Knowledge Work Tasks?},
  author = {Drouin, Alexandre and Gasse, Maxime and Caccia, Massimo and Laradji, Issam H. and Del Verme, Manuel and Marty, Tom and Vazquez, David and Chapados, Nicolas and Lacoste, Alexandre},
  booktitle = {Proceedings of the 41st International Conference on Machine Learning},
  pages = {11642--11662},
  year = {2024},
  editor = {Salakhutdinov, Ruslan and Kolter, Zico and Heller, Katherine and Weller, Adrian and Oliver, Nuria and Scarlett, Jonathan and Berkenkamp, Felix},
  volume = {235},
  series = {Proceedings of Machine Learning Research},
  month = {21--27 Jul},
  publisher = {PMLR},
  url = {https://proceedings.mlr.press/v235/drouin24a.html},
}
```

Here is an example of how they can be used:

```latex
We use the BrowserGym framework for our experiments \cite{workarena2024,chezelles2025browsergym}.
```


Alternative AI tools for BrowserGym

Similar Open Source Tools


vivaria

Vivaria is a web application tool designed for running evaluations and conducting agent elicitation research. Users can interact with Vivaria using a web UI and a command-line interface. It allows users to start task environments based on METR Task Standard definitions, run AI agents, perform agent elicitation research, view API requests and responses, add tags and comments to runs, store results in a PostgreSQL database, sync data to Airtable, test prompts against LLMs, and authenticate using Auth0.

WorkflowAI

WorkflowAI is a powerful tool designed to streamline and automate various tasks within the workflow process. It provides a user-friendly interface for creating custom workflows, automating repetitive tasks, and optimizing efficiency. With WorkflowAI, users can easily design, execute, and monitor workflows, allowing for seamless integration of different tools and systems. The tool offers advanced features such as conditional logic, task dependencies, and error handling to ensure smooth workflow execution. Whether you are managing project tasks, processing data, or coordinating team activities, WorkflowAI simplifies the workflow management process and enhances productivity.

lightfriend

Lightfriend is a lightweight and user-friendly tool designed to assist developers in managing their GitHub repositories efficiently. It provides a simple and intuitive interface for users to perform various repository-related tasks, such as creating new repositories, managing branches, and reviewing pull requests. With Lightfriend, developers can streamline their workflow and collaborate more effectively with team members. The tool is designed to be easy to use and requires minimal setup, making it ideal for developers of all skill levels. Whether you are a beginner looking to get started with GitHub or an experienced developer seeking a more efficient way to manage your repositories, Lightfriend is the perfect companion for your GitHub workflow.

OllamaSharp

OllamaSharp is a .NET binding for the Ollama API, providing an intuitive API client to interact with Ollama. It offers support for all Ollama API endpoints, real-time streaming, progress reporting, and an API console for remote management. Users can easily set up the client, list models, pull models with progress feedback, stream completions, and build interactive chats. The project includes a demo console for exploring and managing the Ollama host.

MiniSearch

MiniSearch is a minimalist search engine with integrated browser-based AI. It is privacy-focused, easy to use, cross-platform, integrated, time-saving, efficient, optimized, and open-source. MiniSearch can be used for a variety of tasks, including searching the web, finding files on your computer, and getting answers to questions. It is a great tool for anyone who wants a fast, private, and easy-to-use search engine.

parlant

Parlant is a structured approach to building and guiding customer-facing AI agents. It allows developers to create and manage robust AI agents, providing specific feedback on agent behavior and helping understand user intentions better. With features like guidelines, glossary, coherence checks, dynamic context, and guided tool use, Parlant offers control over agent responses and behavior. Developer-friendly aspects include instant changes, Git integration, clean architecture, and type safety. It enables confident deployment with scalability, effective debugging, and validation before deployment. Parlant works with major LLM providers and offers client SDKs for Python and TypeScript. The tool facilitates natural customer interactions through asynchronous communication and provides a chat UI for testing new behaviors before deployment.

ai

This repository contains a collection of AI algorithms and models for various machine learning tasks. It provides implementations of popular algorithms such as neural networks, decision trees, and support vector machines. The code is well-documented and easy to understand, making it suitable for both beginners and experienced developers. The repository also includes example datasets and tutorials to help users get started with building and training AI models. Whether you are a student learning about AI or a professional working on machine learning projects, this repository can be a valuable resource for your development journey.

nanocoder

Nanocoder is a versatile code editor designed for beginners and experienced programmers alike. It provides a user-friendly interface with features such as syntax highlighting, code completion, and error checking. With Nanocoder, you can easily write and debug code in various programming languages, making it an ideal tool for learning, practicing, and developing software projects. Whether you are a student, hobbyist, or professional developer, Nanocoder offers a seamless coding experience to boost your productivity and creativity.

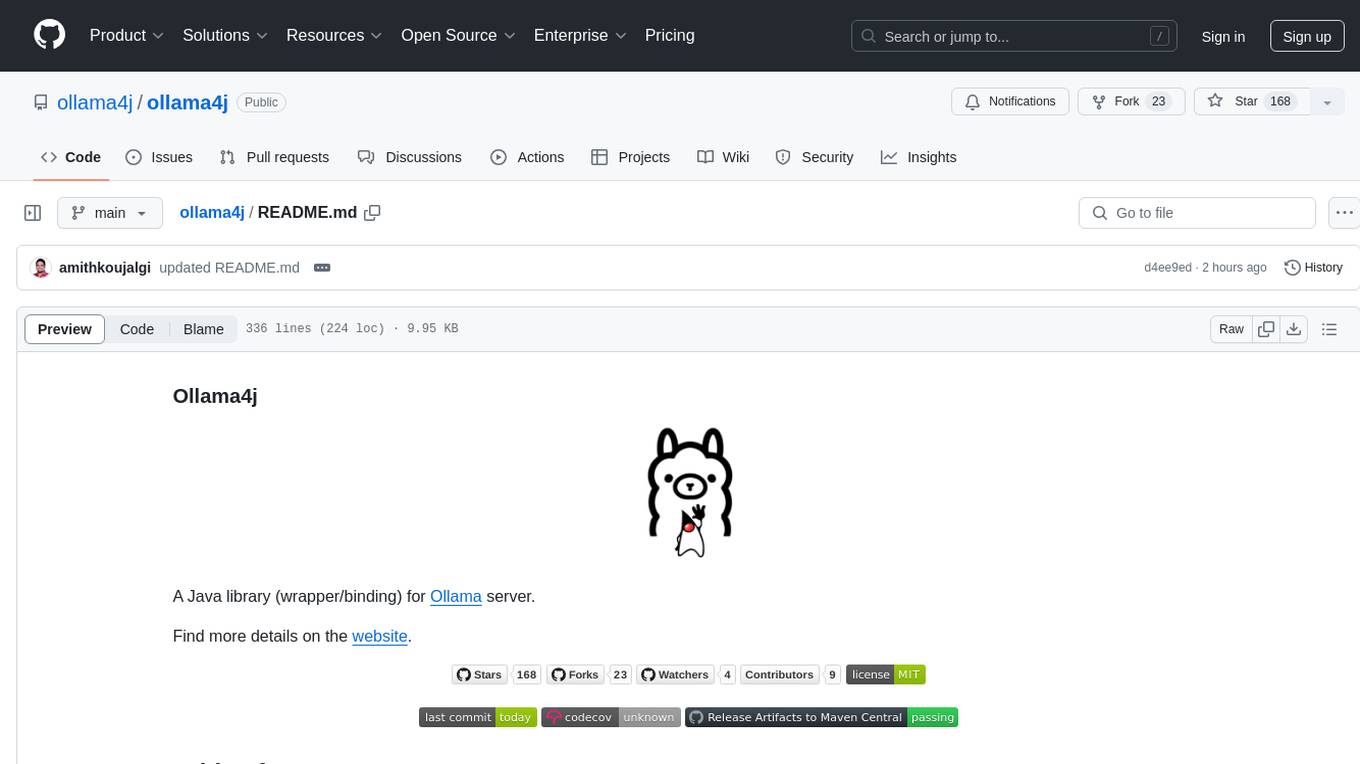

ollama4j

Ollama4j is a Java library that serves as a wrapper or binding for the Ollama server. It allows users to communicate with the Ollama server and manage models for various deployment scenarios. The library provides APIs for interacting with Ollama, generating fake data, testing UI interactions, translating messages, and building web UIs. Users can easily integrate Ollama4j into their Java projects to leverage the functionalities offered by the Ollama server.

promptl

Promptl is a versatile command-line tool designed to streamline the process of creating and managing prompts for user input in various programming projects. It offers a simple and efficient way to prompt users for information, validate their input, and handle different scenarios based on their responses. With Promptl, developers can easily integrate interactive prompts into their scripts, applications, and automation workflows, enhancing user experience and improving overall usability. The tool provides a range of customization options and features, making it suitable for a wide range of use cases across different programming languages and environments.

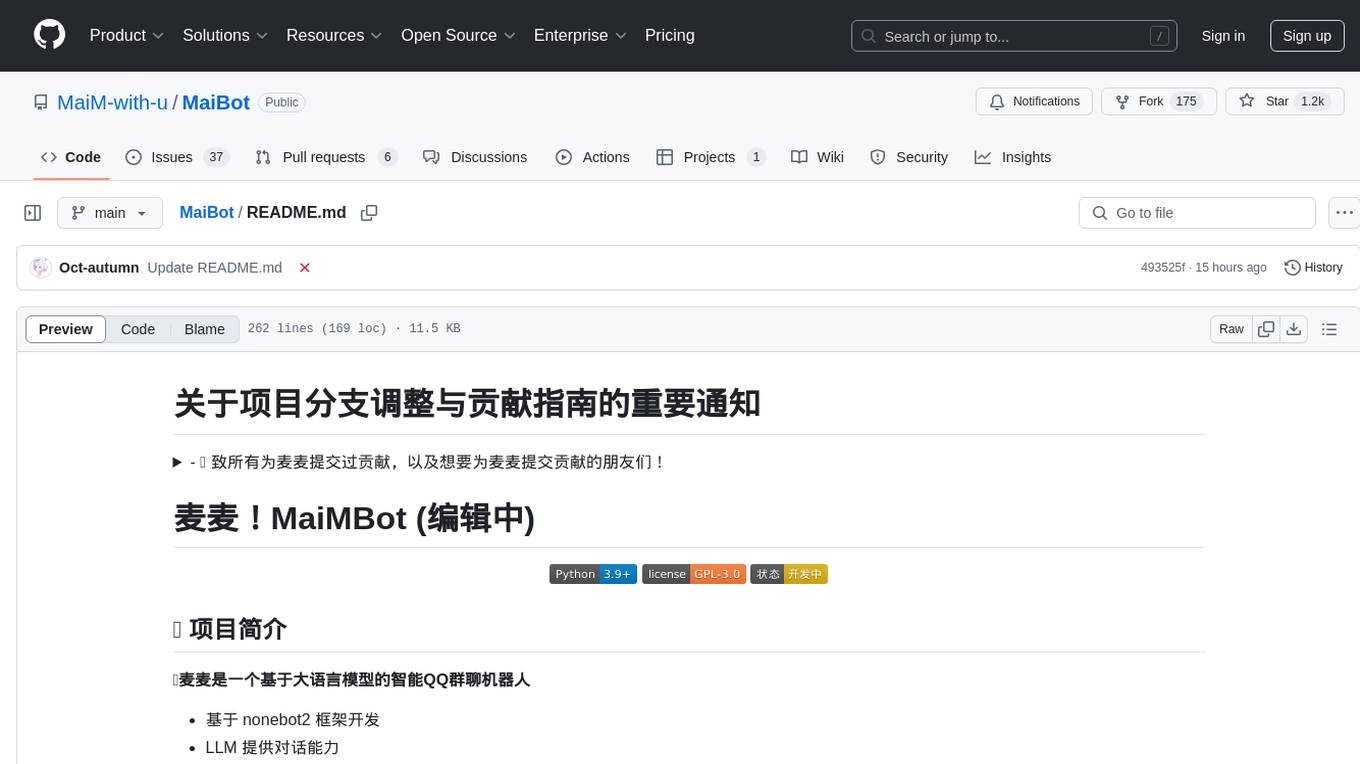

MaiBot

MaiBot is an intelligent QQ group chat bot based on a large language model. It is developed using the nonebot2 framework, with LLM providing conversation abilities, MongoDB for data persistence support, and NapCat as the QQ protocol endpoint support. The project is in active development stage, with features like chat functionality, emoji functionality, schedule management, memory function, knowledge base function, and relationship function planned for future updates. The project aims to create a 'life form' active in QQ group chats, focusing on companionship and creating a more human-like presence rather than a perfect assistant. The application generates content from AI models, so users are advised to discern carefully and not use it for illegal purposes.

udm14

udm14 is a basic website designed to facilitate easy searches on Google with the &udm=14 parameter, ensuring AI-free results without knowledge panels. The tool simplifies access to these specific search results buried within Google's interface, providing a straightforward solution for users seeking this functionality.

langfuse-docs

Langfuse Docs is a repository for langfuse.com, built on Nextra. It provides guidelines for contributing to the documentation using GitHub Codespaces and local development setup. The repository includes Python cookbooks in Jupyter notebooks format, which are converted to markdown for rendering on the site. It also covers media management for images, videos, and gifs. The stack includes Nextra, Next.js, shadcn/ui, and Tailwind CSS. Additionally, there is a bundle analysis feature to analyze the production build bundle size using @next/bundle-analyzer.

contextgem

Contextgem is a Ruby gem that provides a simple way to manage context-specific configurations in your Ruby applications. It allows you to define different configurations based on the context in which your application is running, such as development, testing, or production. This helps you keep your configuration settings organized and easily accessible, making it easier to maintain and update your application. With Contextgem, you can easily switch between different configurations without having to modify your code, making it a valuable tool for managing complex applications with multiple environments.

tools

Strands Agents Tools is a community-driven project that provides a powerful set of tools for your agents to use. It bridges the gap between large language models and practical applications by offering ready-to-use tools for file operations, system execution, API interactions, mathematical operations, and more. The tools cover a wide range of functionalities including file operations, shell integration, memory storage, web infrastructure, HTTP client, Slack client, Python execution, mathematical tools, AWS integration, image and video processing, audio output, environment management, task scheduling, advanced reasoning, swarm intelligence, dynamic MCP client, parallel tool execution, browser automation, diagram creation, RSS feed management, and computer automation.


For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.