aws-mcp

Talk with your AWS using Claude. Model Context Protocol (MCP) server for AWS. Better Amazon Q alternative.

Stars: 118

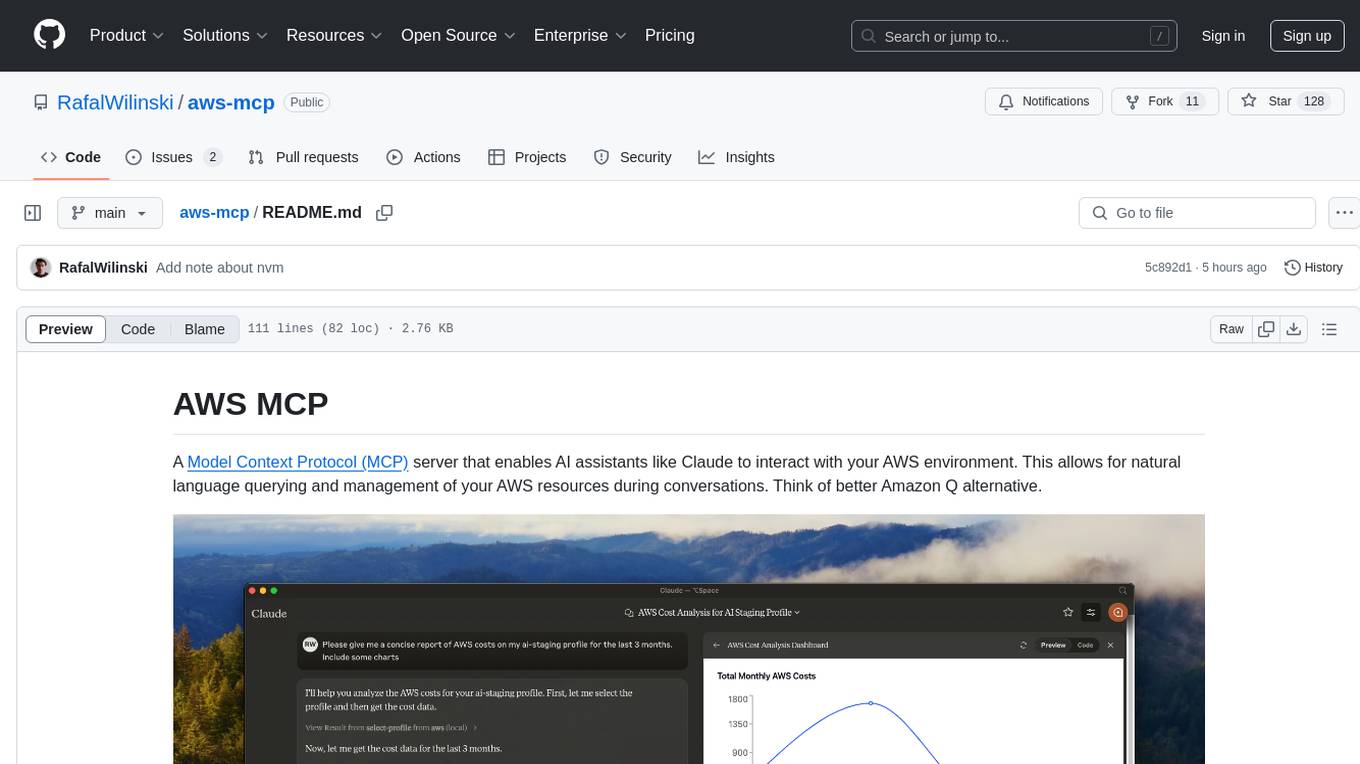

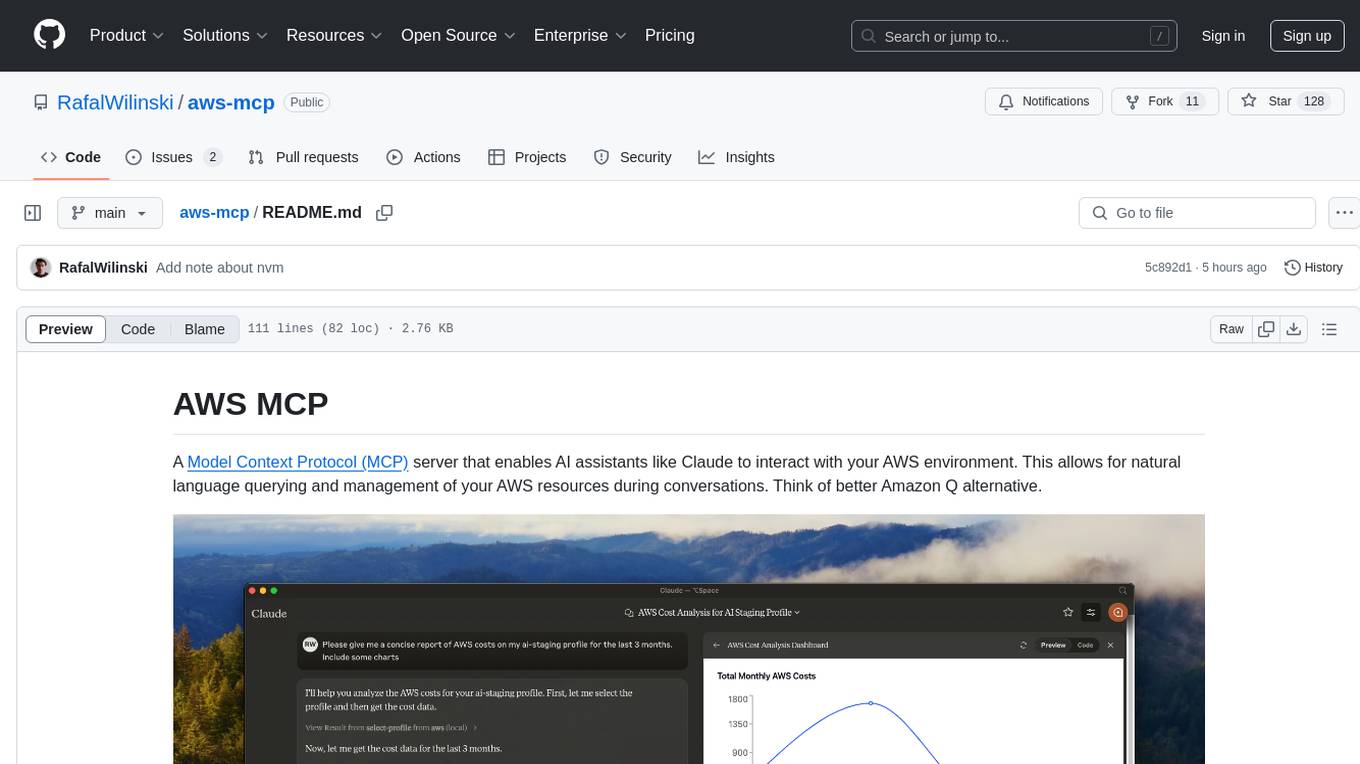

AWS MCP is a Model Context Protocol (MCP) server that facilitates interactions between AI assistants and AWS environments. It allows for natural language querying and management of AWS resources during conversations. The server supports multiple AWS profiles, SSO authentication, multi-region operations, and secure credential handling. Users can locally execute commands with their AWS credentials, enhancing the conversational experience with AWS resources.

README:

A Model Context Protocol (MCP) server that enables AI assistants like Claude to interact with your AWS environment. This allows for natural language querying and management of your AWS resources during conversations. Think of better Amazon Q alternative.

- 🔍 Query and modify AWS resources using natural language

- ☁️ Support for multiple AWS profiles and SSO authentication

- 🌐 Multi-region support

- 🔐 Secure credential handling (no credentials are exposed to external services, your local credentials are used)

- 🏃♂️ Local execution with your AWS credentials

- Node.js

- Claude Desktop

- AWS credentials configured locally (

~/.aws/directory)

- Clone the repository:

git clone https://github.com/RafalWilinski/aws-mcp

cd aws-mcp- Install dependencies:

pnpm install

# or

npm install- Open Claude desktop app and go to Settings -> Developer -> Edit Config

- Add the following entry to your

claude_desktop_config.json:

{

"mcpServers": {

"aws": {

"command": "npm", // OR pnpm

"args": [

"--silent",

"--prefix",

"/Users/<YOUR USERNAME>/aws-mcp",

"start"

]

}

}

}Important: Replace /Users/<YOUR USERNAME>/aws-mcp with the actual path to your project directory.

- Restart Claude desktop app. You should see this:

- Start by selecting an AWS profile or jump to action by asking:

- "List available AWS profiles"

- "List all EC2 instances in my account"

- "Show me S3 buckets with their sizes"

- "What Lambda functions are deployed in us-east-1?"

- "List all ECS clusters and their services"

Build from source first and add following config:

{

"mcpServers": {

"aws": {

"command": "/Users/<USERNAME>/.nvm/versions/node/v20.10.0/bin/node",

"args": [

"<WORKSPACE_PATH>/aws-mcp/node_modules/tsx/dist/cli.mjs",

"<WORKSPACE_PATH>/aws-mcp/index.ts",

"--prefix",

"<WORKSPACE_PATH>/aws-mcp",

"start"

]

}

}

}To see logs:

tail -n 50 -f ~/Library/Logs/Claude/mcp-server-aws.log

# or

tail -n 50 -f ~/Library/Logs/Claude/mcp.log- [ ] MFA support

- [ ] Cache SSO credentials to prevent from refreshing them too eagerly

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aws-mcp

Similar Open Source Tools

aws-mcp

AWS MCP is a Model Context Protocol (MCP) server that facilitates interactions between AI assistants and AWS environments. It allows for natural language querying and management of AWS resources during conversations. The server supports multiple AWS profiles, SSO authentication, multi-region operations, and secure credential handling. Users can locally execute commands with their AWS credentials, enhancing the conversational experience with AWS resources.

ai-gateway

LangDB AI Gateway is an open-source enterprise AI gateway built in Rust. It provides a unified interface to all LLMs using the OpenAI API format, focusing on high performance, enterprise readiness, and data control. The gateway offers features like comprehensive usage analytics, cost tracking, rate limiting, data ownership, and detailed logging. It supports various LLM providers and provides OpenAI-compatible endpoints for chat completions, model listing, embeddings generation, and image generation. Users can configure advanced settings, such as rate limiting, cost control, dynamic model routing, and observability with OpenTelemetry tracing. The gateway can be run with Docker Compose and integrated with MCP tools for server communication.

mcp-redis

The Redis MCP Server is a natural language interface designed for agentic applications to efficiently manage and search data in Redis. It integrates seamlessly with MCP (Model Content Protocol) clients, enabling AI-driven workflows to interact with structured and unstructured data in Redis. The server supports natural language queries, seamless MCP integration, full Redis support for various data types, search and filtering capabilities, scalability, and lightweight design. It provides tools for managing data stored in Redis, such as string, hash, list, set, sorted set, pub/sub, streams, JSON, query engine, and server management. Installation can be done from PyPI or GitHub, with options for testing, development, and Docker deployment. Configuration can be via command line arguments or environment variables. Integrations include OpenAI Agents SDK, Augment, Claude Desktop, and VS Code with GitHub Copilot. Use cases include AI assistants, chatbots, data search & analytics, and event processing. Contributions are welcome under the MIT License.

mcp

Semgrep MCP Server is a beta server under active development for using Semgrep to scan code for security vulnerabilities. It provides a Model Context Protocol (MCP) for various coding tools to get specialized help in tasks. Users can connect to Semgrep AppSec Platform, scan code for vulnerabilities, customize Semgrep rules, analyze and filter scan results, and compare results. The tool is published on PyPI as semgrep-mcp and can be installed using pip, pipx, uv, poetry, or other methods. It supports CLI and Docker environments for running the server. Integration with VS Code is also available for quick installation. The project welcomes contributions and is inspired by core technologies like Semgrep and MCP, as well as related community projects and tools.

snak

The starknet-agent-kit is a toolkit designed for creating AI agents that can interact with the Starknet blockchain. It provides support for multiple AI providers such as Anthropic, OpenAI, Google Gemini, and Ollama. The kit includes an NPM package and a NestJS server with a web interface. Users can run the server in different modes like Chat Mode for conversations, checking balances, executing transfers, and managing accounts, as well as Autonomous Mode for automated monitoring. Additionally, the kit offers a library mode for more advanced usage, allowing users to interact with the StarknetAgent class for executing specific actions. The kit aims to simplify the process of integrating AI capabilities with blockchain interactions.

open-edison

OpenEdison is a secure MCP control panel that connects AI to data/software with additional security controls to reduce data exfiltration risks. It helps address the lethal trifecta problem by providing visibility, monitoring potential threats, and alerting on data interactions. The tool offers features like data leak monitoring, controlled execution, easy configuration, visibility into agent interactions, a simple API, and Docker support. It integrates with LangGraph, LangChain, and plain Python agents for observability and policy enforcement. OpenEdison helps gain observability, control, and policy enforcement for AI interactions with systems of records, existing company software, and data to reduce risks of AI-caused data leakage.

agentpress

AgentPress is a collection of simple but powerful utilities that serve as building blocks for creating AI agents. It includes core components for managing threads, registering tools, processing responses, state management, and utilizing LLMs. The tool provides a modular architecture for handling messages, LLM API calls, response processing, tool execution, and results management. Users can easily set up the environment, create custom tools with OpenAPI or XML schema, and manage conversation threads with real-time interaction. AgentPress aims to be agnostic, simple, and flexible, allowing users to customize and extend functionalities as needed.

json-repair

JSON Repair is a toolkit designed to address JSON anomalies that can arise from Large Language Models (LLMs). It offers a comprehensive solution for repairing JSON strings, ensuring accuracy and reliability in your data processing. With its user-friendly interface and extensive capabilities, JSON Repair empowers developers to seamlessly integrate JSON repair into their workflows.

Gmail-MCP-Server

Gmail AutoAuth MCP Server is a Model Context Protocol (MCP) server designed for Gmail integration in Claude Desktop. It supports auto authentication and enables AI assistants to manage Gmail through natural language interactions. The server provides comprehensive features for sending emails, reading messages, managing labels, searching emails, and batch operations. It offers full support for international characters, email attachments, and Gmail API integration. Users can install and authenticate the server via Smithery or manually with Google Cloud Project credentials. The server supports both Desktop and Web application credentials, with global credential storage for convenience. It also includes Docker support and instructions for cloud server authentication.

langcorn

LangCorn is an API server that enables you to serve LangChain models and pipelines with ease, leveraging the power of FastAPI for a robust and efficient experience. It offers features such as easy deployment of LangChain models and pipelines, ready-to-use authentication functionality, high-performance FastAPI framework for serving requests, scalability and robustness for language processing applications, support for custom pipelines and processing, well-documented RESTful API endpoints, and asynchronous processing for faster response times.

UnrealOpenAIPlugin

UnrealOpenAIPlugin is a comprehensive Unreal Engine wrapper for the OpenAI API, supporting various endpoints such as Models, Completions, Chat, Images, Vision, Embeddings, Speech, Audio, Files, Moderations, Fine-tuning, and Functions. It provides support for both C++ and Blueprints, allowing users to interact with OpenAI services seamlessly within Unreal Engine projects. The plugin also includes tutorials, updates, installation instructions, authentication steps, examples of usage, blueprint nodes overview, C++ examples, plugin structure details, documentation references, tests, packaging guidelines, and limitations. Users can leverage this plugin to integrate powerful AI capabilities into their Unreal Engine projects effortlessly.

scylla

Scylla is an intelligent proxy pool tool designed for humanities, enabling users to extract content from the internet and build their own Large Language Models in the AI era. It features automatic proxy IP crawling and validation, an easy-to-use JSON API, a simple web-based user interface, HTTP forward proxy server, Scrapy and requests integration, and headless browser crawling. Users can start using Scylla with just one command, making it a versatile tool for various web scraping and content extraction tasks.

client-python

The Mistral Python Client is a tool inspired by cohere-python that allows users to interact with the Mistral AI API. It provides functionalities to access and utilize the AI capabilities offered by Mistral. Users can easily install the client using pip and manage dependencies using poetry. The client includes examples demonstrating how to use the API for various tasks, such as chat interactions. To get started, users need to obtain a Mistral API Key and set it as an environment variable. Overall, the Mistral Python Client simplifies the integration of Mistral AI services into Python applications.

swarmzero

SwarmZero SDK is a library that simplifies the creation and execution of AI Agents and Swarms of Agents. It supports various LLM Providers such as OpenAI, Azure OpenAI, Anthropic, MistralAI, Gemini, Nebius, and Ollama. Users can easily install the library using pip or poetry, set up the environment and configuration, create and run Agents, collaborate with Swarms, add tools for complex tasks, and utilize retriever tools for semantic information retrieval. Sample prompts are provided to help users explore the capabilities of the agents and swarms. The SDK also includes detailed examples and documentation for reference.

aiavatarkit

AIAvatarKit is a tool for building AI-based conversational avatars quickly. It supports various platforms like VRChat and cluster, along with real-world devices. The tool is extensible, allowing unlimited capabilities based on user needs. It requires VOICEVOX API, Google or Azure Speech Services API keys, and Python 3.10. Users can start conversations out of the box and enjoy seamless interactions with the avatars.

shell-ai

Shell-AI (`shai`) is a CLI utility that enables users to input commands in natural language and receive single-line command suggestions. It leverages natural language understanding and interactive CLI tools to enhance command line interactions. Users can describe tasks in plain English and receive corresponding command suggestions, making it easier to execute commands efficiently. Shell-AI supports cross-platform usage and is compatible with Azure OpenAI deployments, offering a user-friendly and efficient way to interact with the command line.

For similar tasks

aws-mcp

AWS MCP is a Model Context Protocol (MCP) server that facilitates interactions between AI assistants and AWS environments. It allows for natural language querying and management of AWS resources during conversations. The server supports multiple AWS profiles, SSO authentication, multi-region operations, and secure credential handling. Users can locally execute commands with their AWS credentials, enhancing the conversational experience with AWS resources.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.