pdr_ai_v2

AI Integrated Professional Document Reader

Stars: 599

pdr_ai_v2 is a TypeScript/Next.js web application for AI-assisted reading and analysis of professional documents.

README:

A Next.js application that uses advanced AI technology to analyze, interpret, and extract insights from professional documents. Features employee/employer authentication, document upload and management, AI-powered chat, and comprehensive predictive document analysis that identifies missing documents, provides recommendations, and suggests related content.

- Missing Document Detection: AI automatically identifies critical documents that should be present but are missing

- Priority Assessment: Categorizes missing documents by priority (high, medium, low) for efficient workflow management

- Smart Recommendations: Provides actionable recommendations for document organization and compliance

- Related Document Suggestions: Suggests relevant external resources and related documents

- Page-Level Analysis: Pinpoints specific pages where missing documents are referenced

- Real-time Analysis: Instant analysis with caching for improved performance

- Comprehensive Reporting: Detailed breakdown of analysis results with actionable insights

- Document Analysis: AI algorithms analyze documents and extract key information

- AI-Powered Chat: Interactive chat interface for document-specific questions and insights

- Role-Based Authentication: Separate interfaces for employees and employers using Clerk

- Document Management: Upload, organize, and manage documents with category support

- Employee Management: Employer dashboard for managing employee access and approvals

- Real-time Chat History: Persistent chat sessions for each document

- Responsive Design: Modern UI with Tailwind CSS
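The "Real-time Analysis" feature mentions caching, but the README does not say how the cache works. A minimal sketch, assuming results are cached per document ID with a time-to-live, could look like the following. `AnalysisCache`, `getOrAnalyze`, and the injectable clock are hypothetical names, not part of the project.

```typescript
// Hypothetical in-memory cache for analysis results, keyed by document ID.
// Entries expire after `ttlMs` milliseconds; the clock is injectable for testing.
class AnalysisCache<T> {
  private readonly entries = new Map<number, { value: T; expiresAt: number }>();
  private readonly ttlMs: number;
  private readonly now: () => number;

  constructor(ttlMs: number, now: () => number = Date.now) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  get(documentId: number): T | undefined {
    const entry = this.entries.get(documentId);
    if (!entry) return undefined;
    if (this.now() >= entry.expiresAt) {
      // Stale entry: drop it so the caller re-runs the analysis.
      this.entries.delete(documentId);
      return undefined;
    }
    return entry.value;
  }

  set(documentId: number, value: T): void {
    this.entries.set(documentId, { value, expiresAt: this.now() + this.ttlMs });
  }
}

// Returns a cached result while it is fresh; otherwise runs `analyze` and caches the result.
async function getOrAnalyze<T>(
  cache: AnalysisCache<T>,
  documentId: number,
  analyze: (id: number) => Promise<T>,
): Promise<T> {
  const cached = cache.get(documentId);
  if (cached !== undefined) return cached;
  const result = await analyze(documentId);
  cache.set(documentId, result);
  return result;
}
```

An in-memory map only helps within a single server process; a deployed app would more likely persist results (for example in the PostgreSQL database), which this sketch does not attempt.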

The Predictive Document Analysis feature is the cornerstone of PDR AI, providing intelligent document management and compliance assistance:

- Document Upload: Upload your professional documents (PDFs, contracts, manuals, etc.)

- AI Analysis: Our advanced AI scans through the document content and structure

- Missing Document Detection: Identifies references to documents that should be present but aren't

- Priority Classification: Automatically categorizes findings by importance and urgency

- Smart Recommendations: Provides specific, actionable recommendations for document management

- Related Content: Suggests relevant external resources and related documents

- Compliance Assurance: Never miss critical documents required for compliance

- Workflow Optimization: Streamline document management with AI-powered insights

- Risk Mitigation: Identify potential gaps in documentation before they become issues

- Time Savings: Automated analysis saves hours of manual document review

- Proactive Management: Stay ahead of document requirements and deadlines
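Priority classification sorts each finding into high, medium, or low. Assuming findings carry a `priority` field as in the sample JSON response in this README, a review queue that surfaces high-priority gaps first might be built like this. `groupByPriority` and `reviewQueue` are hypothetical helpers, not the project's code.

```typescript
type Priority = "high" | "medium" | "low";

interface MissingDocument {
  documentName: string;
  priority: Priority;
  page?: number;
}

// Buckets findings into one list per priority level.
function groupByPriority(docs: MissingDocument[]): Record<Priority, MissingDocument[]> {
  const groups: Record<Priority, MissingDocument[]> = { high: [], medium: [], low: [] };
  for (const doc of docs) {
    groups[doc.priority].push(doc);
  }
  return groups;
}

// Flattens the buckets into a review queue: high, then medium, then low.
// Within a bucket, findings keep their original order.
function reviewQueue(docs: MissingDocument[]): MissingDocument[] {
  const groups = groupByPriority(docs);
  return [...groups.high, ...groups.medium, ...groups.low];
}
```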

The system provides comprehensive analysis including:

- Missing Documents Count: Total number of missing documents identified

- High Priority Items: Critical documents requiring immediate attention

- Recommendations: Specific actions to improve document organization

- Suggested Related Documents: External resources and related content

- Page References: Exact page numbers where missing documents are mentioned
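The summary figures are presumably derived from the detailed findings. The README does not say how the server computes them, so the sketch below simply assumes each count is the length of the corresponding list; field names follow the sample JSON response in this README, while `summarize` itself is hypothetical.

```typescript
interface Finding {
  documentName: string;
  priority: "high" | "medium" | "low";
}

interface AnalysisSummary {
  totalMissingDocuments: number;
  highPriorityItems: number;
  totalRecommendations: number;
  totalSuggestedRelated: number;
  analysisTimestamp: string;
}

// Derives the summary block from the detailed findings
// (assumption: every count is just the length of its list).
function summarize(
  missingDocuments: Finding[],
  recommendations: string[],
  suggestedRelated: unknown[],
  now: Date = new Date(),
): AnalysisSummary {
  return {
    totalMissingDocuments: missingDocuments.length,
    highPriorityItems: missingDocuments.filter((d) => d.priority === "high").length,
    totalRecommendations: recommendations.length,
    totalSuggestedRelated: suggestedRelated.length,
    // ISO 8601 UTC timestamp (toISOString includes milliseconds).
    analysisTimestamp: now.toISOString(),
  };
}
```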

The predictive analysis feature automatically scans uploaded documents and provides comprehensive insights:

```json
{
  "success": true,
  "documentId": 123,
  "analysisType": "predictive",
  "summary": {
    "totalMissingDocuments": 5,
    "highPriorityItems": 2,
    "totalRecommendations": 3,
    "totalSuggestedRelated": 4,
    "analysisTimestamp": "2024-01-15T10:30:00Z"
  },
  "analysis": {
    "missingDocuments": [
      {
        "documentName": "Employee Handbook",
        "documentType": "Policy Document",
        "reason": "Referenced in section 2.1 but not found in uploaded documents",
        "page": 15,
        "priority": "high",
        "suggestedLinks": [
          {
            "title": "Sample Employee Handbook Template",
            "link": "https://example.com/handbook-template",
            "snippet": "Comprehensive employee handbook template..."
          }
        ]
      }
    ],
    "recommendations": [
      "Consider implementing a document version control system",
      "Review document retention policies for compliance",
      "Establish regular document audit procedures"
    ],
    "suggestedRelatedDocuments": [
      {
        "title": "Document Management Best Practices",
        "link": "https://example.com/best-practices",
        "snippet": "Industry standards for document organization..."
      }
    ]
  }
}
```

- Upload Documents: Use the employer dashboard to upload your documents

- Run Analysis: Click the "Predictive Analysis" tab in the document viewer

- Review Results: Examine missing documents, recommendations, and suggestions

- Take Action: Follow the provided recommendations and suggested links

- Track Progress: Re-run analysis to verify improvements
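Re-running the analysis to track progress amounts to comparing two result sets. A sketch, assuming a missing document can be identified by its `documentName` (the README does not define an identity key); `compareRuns` is a hypothetical helper.

```typescript
interface MissingDocumentRef {
  documentName: string;
  priority: "high" | "medium" | "low";
}

interface ProgressReport {
  resolved: string[]; // reported before, gone in the latest run
  stillMissing: string[]; // reported in both runs
  newlyMissing: string[]; // reported only in the latest run
}

// Compares two analysis runs by document name.
function compareRuns(
  previous: MissingDocumentRef[],
  latest: MissingDocumentRef[],
): ProgressReport {
  const before = new Set(previous.map((d) => d.documentName));
  const after = new Set(latest.map((d) => d.documentName));
  return {
    resolved: Array.from(before).filter((name) => !after.has(name)),
    stillMissing: Array.from(before).filter((name) => after.has(name)),
    newlyMissing: Array.from(after).filter((name) => !before.has(name)),
  };
}
```

Matching on names is fragile if the model words the same gap differently between runs; a stable key would be more reliable if the API provided one.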

Ask questions about your documents and get AI-powered responses:

```typescript
// Example API call for document Q&A
const response = await fetch('/api/LangChain', {
  method: 'POST',
  headers: { 'Content-Type': 'application/json' },
  body: JSON.stringify({
    question: "What are the key compliance requirements mentioned?",
    documentId: 123,
    style: "professional" // or "casual", "technical", "summary"
  })
});
```

- Contract Management: Identify missing clauses, attachments, and referenced documents

- Regulatory Compliance: Ensure all required documentation is present and up-to-date

- Due Diligence: Comprehensive document review for mergers and acquisitions

- Risk Assessment: Identify potential legal risks from missing documentation

- Employee Documentation: Ensure all required employee documents are collected

- Policy Compliance: Verify policy documents are complete and current

- Onboarding Process: Streamline new employee documentation requirements

- Audit Preparation: Prepare for HR audits with confidence

- Financial Reporting: Ensure all supporting documents are included

- Audit Trail: Maintain complete documentation for financial audits

- Compliance Reporting: Meet regulatory requirements for document retention

- Process Documentation: Streamline financial process documentation

- Patient Records: Ensure complete patient documentation

- Regulatory Compliance: Meet healthcare documentation requirements

- Quality Assurance: Maintain high standards for medical documentation

- Risk Management: Identify potential documentation gaps

- Automated Analysis: Reduce manual document review time by 80%

- Instant Insights: Get immediate feedback on document completeness

- Proactive Management: Address issues before they become problems

- Compliance Assurance: Never miss critical required documents

- Error Prevention: Catch documentation gaps before they cause issues

- Audit Readiness: Always be prepared for regulatory audits

- Standardized Workflows: Establish consistent document management processes

- Quality Control: Maintain high standards for document organization

- Continuous Improvement: Use AI insights to optimize processes

- Document Review Time: 80% reduction in manual review time

- Compliance Risk: 95% reduction in missing document incidents

- Audit Preparation: 90% faster audit preparation time

- Process Efficiency: 70% improvement in document management workflows

- Framework: Next.js 15 with TypeScript

- Authentication: Clerk

- Database: PostgreSQL with Drizzle ORM

- AI Integration: OpenAI + LangChain

- File Upload: UploadThing

- Styling: Tailwind CSS

- Package Manager: pnpm

Before you begin, ensure you have the following installed:

- Node.js (version 18.0 or higher)

- pnpm (recommended) or npm

- Docker (for local database)

- Git

```bash
git clone <repository-url>
cd pdr_ai_v2-2
pnpm install
```

Create a `.env` file in the root directory with the following variables:

```env
# Database
DATABASE_URL="postgresql://postgres:password@localhost:5432/pdr_ai_v2"

# Clerk Authentication (get from https://clerk.com/)
NEXT_PUBLIC_CLERK_PUBLISHABLE_KEY=your_clerk_publishable_key
CLERK_SECRET_KEY=your_clerk_secret_key

# OpenAI API (get from https://platform.openai.com/)
OPENAI_API_KEY=your_openai_api_key

# UploadThing (get from https://uploadthing.com/)
UPLOADTHING_SECRET=your_uploadthing_secret
UPLOADTHING_APP_ID=your_uploadthing_app_id

# Environment
NODE_ENV=development
```

```bash
# Make the script executable
chmod +x start-database.sh

# Start the database container
./start-database.sh
```

This will:

- Create a Docker container with PostgreSQL

- Set up the database with proper credentials

- Generate a secure password if using default settings
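`DATABASE_URL` packs user, password, host, port, and database name into one connection string. If the default `password` is replaced by a generated one, it has to be URL-encoded before it goes into the URL. The helpers below are a hypothetical illustration using Node's built-in `crypto` module; they are not the contents of `start-database.sh`.

```typescript
import { randomBytes } from "node:crypto";

// Generates a random password; base64url output avoids "/", "+" and "=".
function generatePassword(bytes = 18): string {
  return randomBytes(bytes).toString("base64url");
}

// Builds a PostgreSQL connection string. User and password are percent-encoded
// so characters such as "@" or ":" cannot break the URL structure.
function buildDatabaseUrl(opts: {
  user: string;
  password: string;
  host: string;
  port: number;
  database: string;
}): string {
  const user = encodeURIComponent(opts.user);
  const password = encodeURIComponent(opts.password);
  return `postgresql://${user}:${password}@${opts.host}:${opts.port}/${opts.database}`;
}
```

With `user: "postgres"`, `password: "password"`, `host: "localhost"`, `port: 5432`, and `database: "pdr_ai_v2"`, this reproduces the example `DATABASE_URL` from the `.env` template.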

```bash
# Generate migration files
pnpm db:generate

# Apply migrations to database
pnpm db:migrate

# Alternative: Push schema directly (for development)
pnpm db:push
```

- Create account at Clerk

- Create a new application

- Copy the publishable and secret keys to your `.env` file

- Configure sign-in/sign-up methods as needed

- Create account at OpenAI

- Generate an API key

- Add the key to your `.env` file

- Create account at UploadThing

- Create a new app

- Copy the secret and app ID to your `.env` file

```bash
pnpm dev
```

The application will be available at http://localhost:3000

```bash
# Build the application
pnpm build

# Start production server
pnpm start
```

```bash
# Database management
pnpm db:studio # Open Drizzle Studio (database GUI)
pnpm db:generate # Generate new migrations
pnpm db:migrate # Apply migrations
pnpm db:push # Push schema changes directly

# Code quality
pnpm lint # Run ESLint
pnpm lint:fix # Fix ESLint issues
pnpm typecheck # Run TypeScript type checking
pnpm format:write # Format code with Prettier
pnpm format:check # Check code formatting

# Development
pnpm check # Run linting and type checking
pnpm preview # Build and start production preview
```

```
src/
├── app/                                  # Next.js App Router
│   ├── api/                              # API routes
│   │   ├── predictive-document-analysis/ # Predictive analysis endpoints
│   │   │   ├── route.ts                  # Main analysis API
│   │   │   └── agent.ts                  # AI analysis agent
│   │   ├── LangChain/                    # AI chat functionality
│   │   └── ...                           # Other API endpoints
│   ├── employee/                         # Employee dashboard pages
│   ├── employer/                         # Employer dashboard pages
│   │   └── documents/                    # Document viewer with predictive analysis
│   ├── signup/                           # Authentication pages
│   └── _components/                      # Shared components
├── server/
│   └── db/                               # Database configuration and schema
├── styles/                               # CSS modules and global styles
└── env.js                                # Environment validation
```

Key directories:

- `/employee` - Employee interface for document viewing and chat

- `/employer` - Employer interface for management and uploads

- `/api/predictive-document-analysis` - Core predictive analysis functionality

- `/api` - Backend API endpoints for all functionality

- `/server/db` - Database schema and configuration

- `POST /api/predictive-document-analysis` - Analyze documents for missing content and recommendations

- `GET /api/fetchDocument` - Retrieve document content for analysis

- `POST /api/uploadDocument` - Upload documents for processing

- `POST /api/LangChain` - AI-powered document Q&A

- `GET /api/Questions/fetch` - Retrieve Q&A history

- `POST /api/Questions/add` - Add new questions

- `GET /api/fetchCompany` - Get company documents

- `POST /api/deleteDocument` - Remove documents

- `GET /api/Categories/GetCategories` - Get document categories
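The `POST /api/LangChain` endpoint can be wrapped in a small typed helper. The request fields (`question`, `documentId`, `style`) and the style values come from the Q&A example earlier in this README; the response body is not documented, so the sketch returns it as `unknown`. `askDocumentQuestion` and the `FetchLike` type are hypothetical; in the browser you would pass the global `fetch`.

```typescript
// Answer styles listed in the README's Q&A example.
type AnswerStyle = "professional" | "casual" | "technical" | "summary";

interface QuestionRequest {
  question: string;
  documentId: number;
  style?: AnswerStyle;
}

// Minimal subset of the fetch API used here, so the helper can be tested offline.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Hypothetical wrapper around POST /api/LangChain.
async function askDocumentQuestion(
  fetchFn: FetchLike,
  request: QuestionRequest,
): Promise<unknown> {
  if (request.question.trim() === "") {
    throw new Error("question must not be empty");
  }
  const response = await fetchFn("/api/LangChain", {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(request),
  });
  if (!response.ok) {
    throw new Error(`LangChain request failed with status ${response.status}`);
  }
  // The response shape is undocumented; callers should validate it.
  return response.json();
}
```

Injecting the fetch function keeps the helper testable without a running server and makes the error path (non-2xx status) explicit rather than silently parsing an error page.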

- View assigned documents

- Chat with AI about documents

- Access document analysis and insights

- Pending approval flow for new employees

- Upload and manage documents

- Manage employee access and approvals

- View analytics and statistics

- Configure document categories

- Employee management dashboard
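The two role lists above imply a simple access rule: employers manage, employees view, and new employees wait for approval. A sketch of that rule, assuming a user record with a `role` and an `approved` flag (hypothetical field names; the app delegates sign-in to Clerk and the README does not describe how roles are stored) and the `/employee` and `/employer` route prefixes from the directory layout. This sketch keeps the two dashboards strictly separate, which is itself an assumption.

```typescript
// Roles from the README: separate interfaces for employees and employers.
type Role = "employee" | "employer";

interface AppUser {
  role: Role;
  approved: boolean; // hypothetical flag backing the pending-approval flow
}

type AccessDecision = "allow" | "pending-approval" | "deny";

// True if `pathname` is `prefix` itself or a route nested under it.
function isUnder(pathname: string, prefix: string): boolean {
  return pathname === prefix || pathname.startsWith(`${prefix}/`);
}

// Hypothetical access rule for the two dashboards.
function checkAccess(user: AppUser, pathname: string): AccessDecision {
  if (isUnder(pathname, "/employer")) {
    return user.role === "employer" ? "allow" : "deny";
  }
  if (isUnder(pathname, "/employee")) {
    if (user.role !== "employee") return "deny";
    // New employees wait until an employer approves their access.
    return user.approved ? "allow" : "pending-approval";
  }
  // Routes outside the two dashboards are out of scope for this sketch.
  return "allow";
}
```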

| Variable | Description | Required |
|---|---|---|
| DATABASE_URL | PostgreSQL connection string | ✅ |
| NEXT_PUBLIC_CLERK_PUBLISHABLE_KEY | Clerk publishable key | ✅ |
| CLERK_SECRET_KEY | Clerk secret key | ✅ |
| OPENAI_API_KEY | OpenAI API key for AI features | ✅ |
| UPLOADTHING_SECRET | UploadThing secret for file uploads | ✅ |
| UPLOADTHING_APP_ID | UploadThing application ID | ✅ |
| NODE_ENV | Environment mode | ✅ |
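The project validates its environment in `src/env.js`, whose contents are not shown here. As a dependency-free illustration of the same idea, the hypothetical function below reports which variables from the table are unset or blank.

```typescript
// Variables marked as required in the table above.
const REQUIRED_ENV_VARS = [
  "DATABASE_URL",
  "NEXT_PUBLIC_CLERK_PUBLISHABLE_KEY",
  "CLERK_SECRET_KEY",
  "OPENAI_API_KEY",
  "UPLOADTHING_SECRET",
  "UPLOADTHING_APP_ID",
  "NODE_ENV",
] as const;

// Returns the names of required variables that are unset or contain only whitespace.
function findMissingEnvVars(env: Record<string, string | undefined>): string[] {
  return REQUIRED_ENV_VARS.filter((name) => {
    const value = env[name];
    return value === undefined || value.trim() === "";
  });
}
```

Calling it with `process.env` at startup and failing fast on a non-empty result surfaces configuration mistakes before the first API request.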

- Ensure Docker is running before starting the database

- Check if the database container is running: `docker ps`

- Restart the database: `docker restart pdr_ai_v2-postgres`

- Verify all required environment variables are set

- Check `.env` file formatting (no spaces around `=`)

- Ensure API keys are valid and have proper permissions

- Clear Next.js cache: `rm -rf .next`

- Reinstall dependencies: `rm -rf node_modules && pnpm install`

- Check TypeScript errors: `pnpm typecheck`
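The `.env` formatting check above (no spaces around `=`) is easy to automate. A hypothetical linter sketch that flags offending lines; it skips blank lines and `#` comments and does not attempt full dotenv parsing (quoting, multiline values).

```typescript
interface EnvLintIssue {
  line: number; // 1-based line number in the file
  message: string;
}

// Flags lines that break the README's formatting rule for .env files.
function lintEnvFile(contents: string): EnvLintIssue[] {
  const issues: EnvLintIssue[] = [];
  contents.split(/\r?\n/).forEach((raw, index) => {
    const line = raw.trim();
    if (line === "" || line.startsWith("#")) return; // skip blanks and comments
    const eq = line.indexOf("=");
    if (eq === -1) {
      issues.push({ line: index + 1, message: "missing '='" });
      return;
    }
    // Only the first '=' separates key from value; later ones belong to the value.
    const before = line[eq - 1];
    const after = line[eq + 1];
    if (before === " " || before === "\t" || after === " " || after === "\t") {
      issues.push({ line: index + 1, message: "whitespace around '='" });
    }
  });
  return issues;
}
```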

- Fork the repository

- Create a feature branch: `git checkout -b feature-name`

- Make your changes

- Run tests and linting: `pnpm check`

- Commit your changes: `git commit -m 'Add feature'`

- Push to the branch: `git push origin feature-name`

- Submit a pull request

This project is private and proprietary.

For support or questions, contact the development team or create an issue in the repository.


Alternative AI tools for pdr_ai_v2

Similar Open Source Tools


AI_Spectrum

AI_Spectrum is a versatile machine learning library that provides a wide range of tools and algorithms for building and deploying AI models. It offers a user-friendly interface for data preprocessing, model training, and evaluation. With AI_Spectrum, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is designed to be flexible and scalable, making it suitable for both beginners and experienced data scientists.

ml-retreat

ML-Retreat is a comprehensive machine learning library designed to simplify and streamline the process of building and deploying machine learning models. It provides a wide range of tools and utilities for data preprocessing, model training, evaluation, and deployment. With ML-Retreat, users can easily experiment with different algorithms, hyperparameters, and feature engineering techniques to optimize their models. The library is built with a focus on scalability, performance, and ease of use, making it suitable for both beginners and experienced machine learning practitioners.

atomic-agents

The Atomic Agents framework is a modular and extensible tool designed for creating powerful applications. It leverages Pydantic for data validation and serialization. The framework follows the principles of Atomic Design, providing small and single-purpose components that can be combined. It integrates with Instructor for AI agent architecture and supports various APIs like Cohere, Anthropic, and Gemini. The tool includes documentation, examples, and testing features to ensure smooth development and usage.

deeppowers

Deeppowers is a powerful Python library for deep learning applications. It provides a wide range of tools and utilities to simplify the process of building and training deep neural networks. With Deeppowers, users can easily create complex neural network architectures, perform efficient training and optimization, and deploy models for various tasks. The library is designed to be user-friendly and flexible, making it suitable for both beginners and experienced deep learning practitioners.

mcp-context-forge

MCP Context Forge is a powerful tool for generating context-aware data for machine learning models. It provides functionalities to create diverse datasets with contextual information, enhancing the performance of AI algorithms. The tool supports various data formats and allows users to customize the context generation process easily. With MCP Context Forge, users can efficiently prepare training data for tasks requiring contextual understanding, such as sentiment analysis, recommendation systems, and natural language processing.

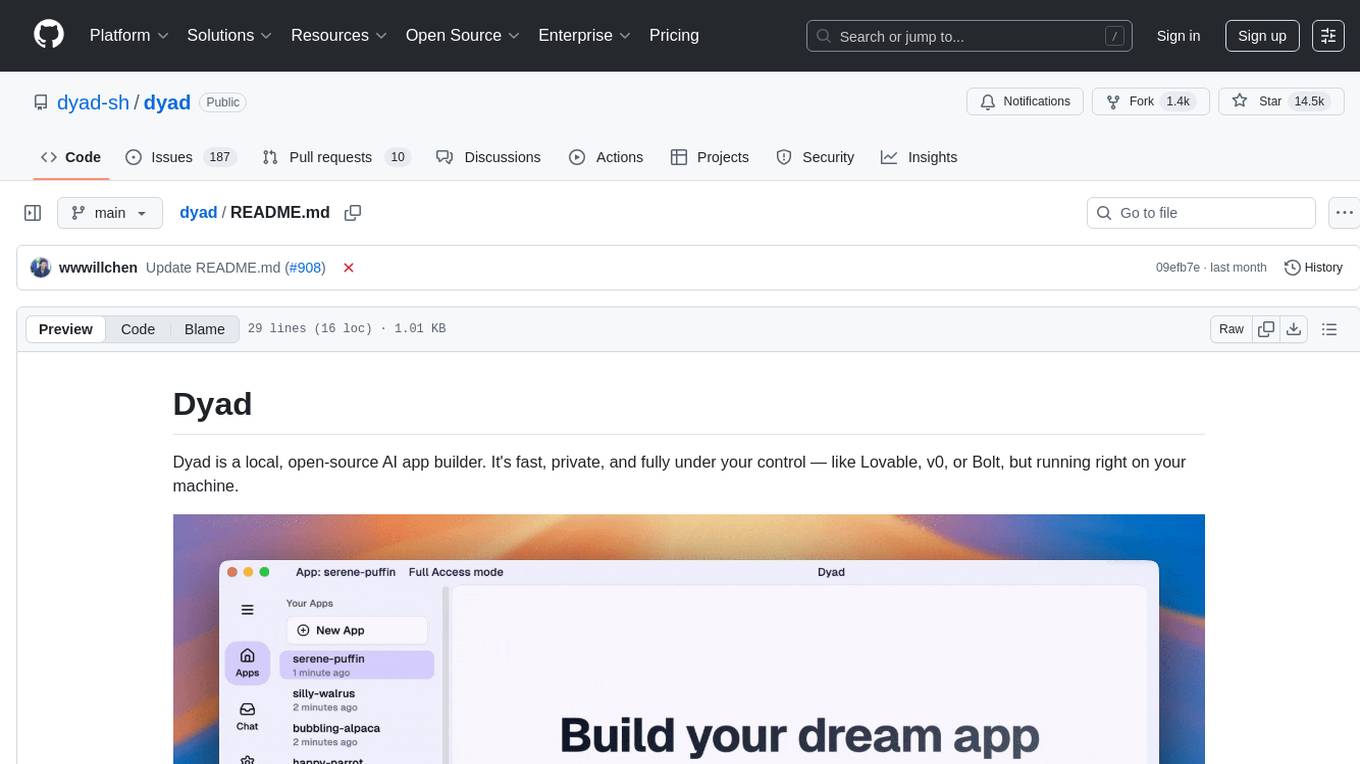

dyad

Dyad is a lightweight Python library for analyzing dyadic data, which involves pairs of individuals and their interactions. It provides functions for computing various network metrics, visualizing network structures, and conducting statistical analyses on dyadic data. Dyad is designed to be user-friendly and efficient, making it suitable for researchers and practitioners working with relational data in fields such as social network analysis, communication studies, and psychology.

LightLLM

LightLLM is a lightweight library for linear and logistic regression models. It provides a simple and efficient way to train and deploy machine learning models for regression tasks. The library is designed to be easy to use and integrate into existing projects, making it suitable for both beginners and experienced data scientists. With LightLLM, users can quickly build and evaluate regression models using a variety of algorithms and hyperparameters. The library also supports feature engineering and model interpretation, allowing users to gain insights from their data and make informed decisions based on the model predictions.

GEN-AI

GEN-AI is a versatile Python library for implementing various artificial intelligence algorithms and models. It provides a wide range of tools and functionalities to support machine learning, deep learning, natural language processing, computer vision, and reinforcement learning tasks. With GEN-AI, users can easily build, train, and deploy AI models for diverse applications such as image recognition, text classification, sentiment analysis, object detection, and game playing. The library is designed to be user-friendly, efficient, and scalable, making it suitable for both beginners and experienced AI practitioners.

cellm

Cellm is an Excel extension that allows users to leverage Large Language Models (LLMs) like ChatGPT within cell formulas. It enables users to extract AI responses to text ranges, making it useful for automating repetitive tasks that involve data processing and analysis. Cellm supports various models from Anthropic, Mistral, OpenAI, and Google, as well as locally hosted models via Llamafiles, Ollama, or vLLM. The tool is designed to simplify the integration of AI capabilities into Excel for tasks such as text classification, data cleaning, content summarization, entity extraction, and more.

CrossIntelligence

CrossIntelligence is a powerful tool for data analysis and visualization. It allows users to easily connect and analyze data from multiple sources, providing valuable insights and trends. With a user-friendly interface and customizable features, CrossIntelligence is suitable for both beginners and advanced users in various industries such as marketing, finance, and research.

wingman

The LLM Platform, also known as Inference Hub, is an open-source tool designed to simplify the development and deployment of large language model applications at scale. It provides a unified framework for integrating and managing multiple LLM vendors, models, and related services through a flexible approach. The platform supports various LLM providers, document processing, RAG, advanced AI workflows, infrastructure operations, and flexible configuration using YAML files. Its modular and extensible architecture allows developers to plug in different providers and services as needed. Key components include completers, embedders, renderers, synthesizers, transcribers, document processors, segmenters, retrievers, summarizers, translators, AI workflows, tools, and infrastructure components. Use cases range from enterprise AI applications to scalable LLM deployment and custom AI pipelines. Integrations with LLM providers like OpenAI, Azure OpenAI, Anthropic, Google Gemini, AWS Bedrock, Groq, Mistral AI, xAI, Hugging Face, and more are supported.

ai

This repository contains a collection of AI algorithms and models for various machine learning tasks. It provides implementations of popular algorithms such as neural networks, decision trees, and support vector machines. The code is well-documented and easy to understand, making it suitable for both beginners and experienced developers. The repository also includes example datasets and tutorials to help users get started with building and training AI models. Whether you are a student learning about AI or a professional working on machine learning projects, this repository can be a valuable resource for your development journey.

tools

This repository contains a collection of various tools and utilities that can be used for different purposes. It includes scripts, programs, and resources to assist with tasks related to software development, data analysis, automation, and more. The tools are designed to be versatile and easy to use, providing solutions for common challenges faced by developers and users alike.

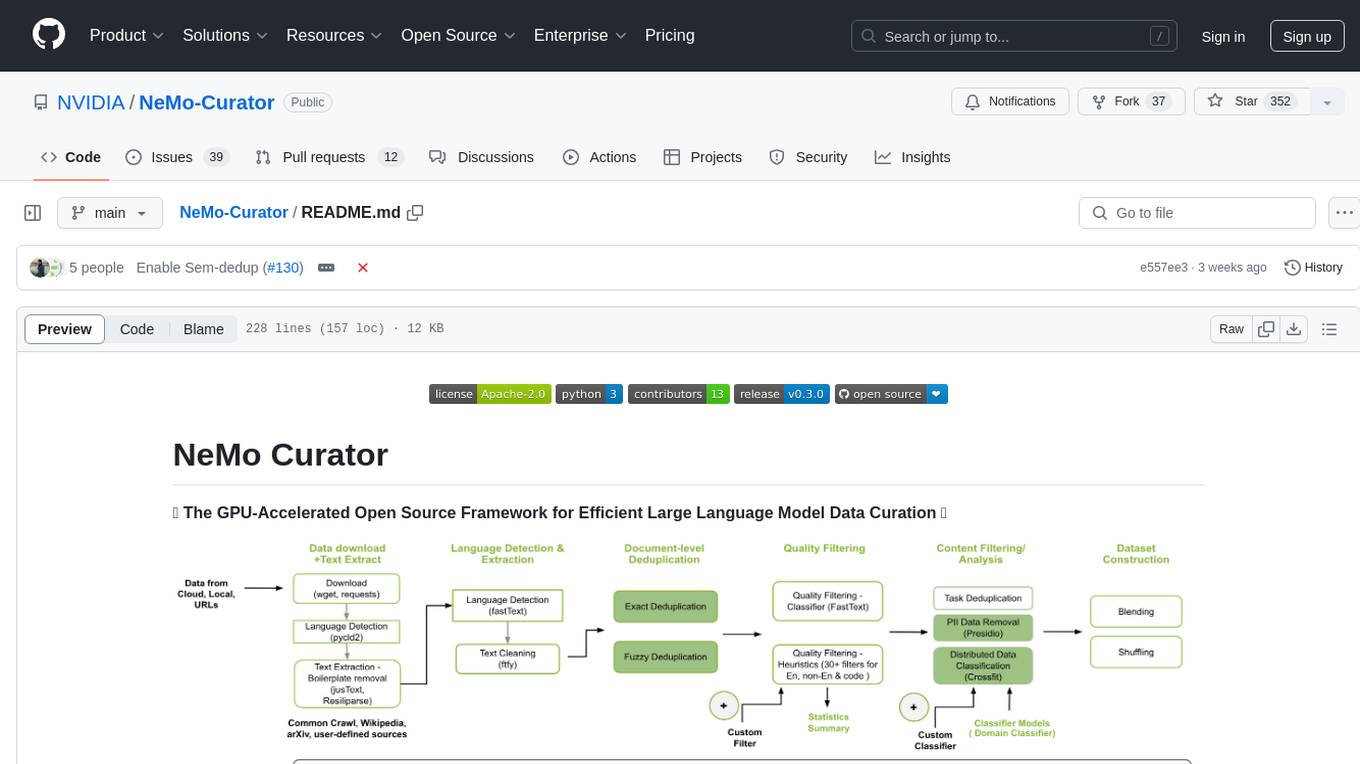

Curator

NeMo Curator is a Python library designed for fast and scalable data processing and curation for generative AI use cases. It accelerates data processing by leveraging GPUs with Dask and RAPIDS, providing customizable pipelines for text and image curation. The library offers pre-built pipelines for synthetic data generation, enabling users to train and customize generative AI models such as LLMs, VLMs, and WFMs.

agent-lightning

Agent Lightning is a lightweight and efficient tool for automating repetitive tasks in the field of data analysis and machine learning. It provides a user-friendly interface to create and manage automated workflows, allowing users to easily schedule and execute data processing, model training, and evaluation tasks. With its intuitive design and powerful features, Agent Lightning streamlines the process of building and deploying machine learning models, making it ideal for data scientists, machine learning engineers, and AI enthusiasts looking to boost their productivity and efficiency in their projects.

For similar tasks

nlp-llms-resources

The 'nlp-llms-resources' repository is a comprehensive resource list for Natural Language Processing (NLP) and Large Language Models (LLMs). It covers a wide range of topics including traditional NLP datasets, data acquisition, libraries for NLP, neural networks, sentiment analysis, optical character recognition, information extraction, semantics, topic modeling, multilingual NLP, domain-specific LLMs, vector databases, ethics, costing, books, courses, surveys, aggregators, newsletters, papers, conferences, and societies. The repository provides valuable information and resources for individuals interested in NLP and LLMs.

adata

AData is a free and open-source A-share database that focuses on transaction-related data. It provides comprehensive data on stocks, including basic information, market data, and sentiment analysis. AData is designed to be easy to use and integrate with other applications, making it a valuable tool for quantitative trading and AI training.

PIXIU

PIXIU is a project designed to support the development, fine-tuning, and evaluation of Large Language Models (LLMs) in the financial domain. It includes components like FinBen, a Financial Language Understanding and Prediction Evaluation Benchmark, FIT, a Financial Instruction Dataset, and FinMA, a Financial Large Language Model. The project provides open resources, multi-task and multi-modal financial data, and diverse financial tasks for training and evaluation. It aims to encourage open research and transparency in the financial NLP field.

hezar

Hezar is an all-in-one AI library designed specifically for the Persian community. It brings together various AI models and tools, making it easy to use AI with just a few lines of code. The library seamlessly integrates with Hugging Face Hub, offering a developer-friendly interface and task-based model interface. In addition to models, Hezar provides tools like word embeddings, tokenizers, feature extractors, and more. It also includes supplementary ML tools for deployment, benchmarking, and optimization.

text-embeddings-inference

Text Embeddings Inference (TEI) is a toolkit for deploying and serving open source text embeddings and sequence classification models. TEI enables high-performance extraction for popular models like FlagEmbedding, Ember, GTE, and E5. It implements features such as no model graph compilation step, Metal support for local execution on Macs, small docker images with fast boot times, token-based dynamic batching, optimized transformers code for inference using Flash Attention, Candle, and cuBLASLt, Safetensors weight loading, and production-ready features like distributed tracing with Open Telemetry and Prometheus metrics.

CodeProject.AI-Server

CodeProject.AI Server is a standalone, self-hosted, fast, free, and open-source Artificial Intelligence microserver designed for any platform and language. It can be installed locally without the need for off-device or out-of-network data transfer, providing an easy-to-use solution for developers interested in AI programming. The server includes an HTTP REST API server, backend analysis services, and the source code, enabling users to perform various AI tasks locally without relying on external services or cloud computing. Current capabilities include object detection, face detection, scene recognition, sentiment analysis, and more, with ongoing feature expansions planned. The project aims to promote AI development, simplify AI implementation, focus on core use-cases, and leverage the expertise of the developer community.

spark-nlp

Spark NLP is a state-of-the-art Natural Language Processing library built on top of Apache Spark. It provides simple, performant, and accurate NLP annotations for machine learning pipelines that scale easily in a distributed environment. Spark NLP comes with 36000+ pretrained pipelines and models in 200+ languages. It offers tasks such as Tokenization, Word Segmentation, Part-of-Speech Tagging, Named Entity Recognition, Dependency Parsing, Spell Checking, Text Classification, Sentiment Analysis, Token Classification, Machine Translation, Summarization, Question Answering, Table Question Answering, Text Generation, Image Classification, Image to Text (captioning), Automatic Speech Recognition, Zero-Shot Learning, and many more NLP tasks. Spark NLP is the only open-source NLP library in production that offers state-of-the-art transformers such as BERT, CamemBERT, ALBERT, ELECTRA, XLNet, DistilBERT, RoBERTa, DeBERTa, XLM-RoBERTa, Longformer, ELMO, Universal Sentence Encoder, Llama-2, M2M100, BART, Instructor, E5, Google T5, MarianMT, OpenAI GPT2, Vision Transformers (ViT), OpenAI Whisper, and many more not only to Python and R, but also to JVM ecosystem (Java, Scala, and Kotlin) at scale by extending Apache Spark natively.

scikit-llm

Scikit-LLM is a tool that seamlessly integrates powerful language models like ChatGPT into scikit-learn for enhanced text analysis tasks. It allows users to leverage large language models for various text analysis applications within the familiar scikit-learn framework. The tool simplifies the process of incorporating advanced language processing capabilities into machine learning pipelines, enabling users to benefit from the latest advancements in natural language processing.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from image to sound models.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. Its key features: it is self-contained, with no need for a DBMS or cloud service; it exposes an OpenAPI interface that is easy to integrate with existing infrastructure (e.g., a Cloud IDE); and it supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.