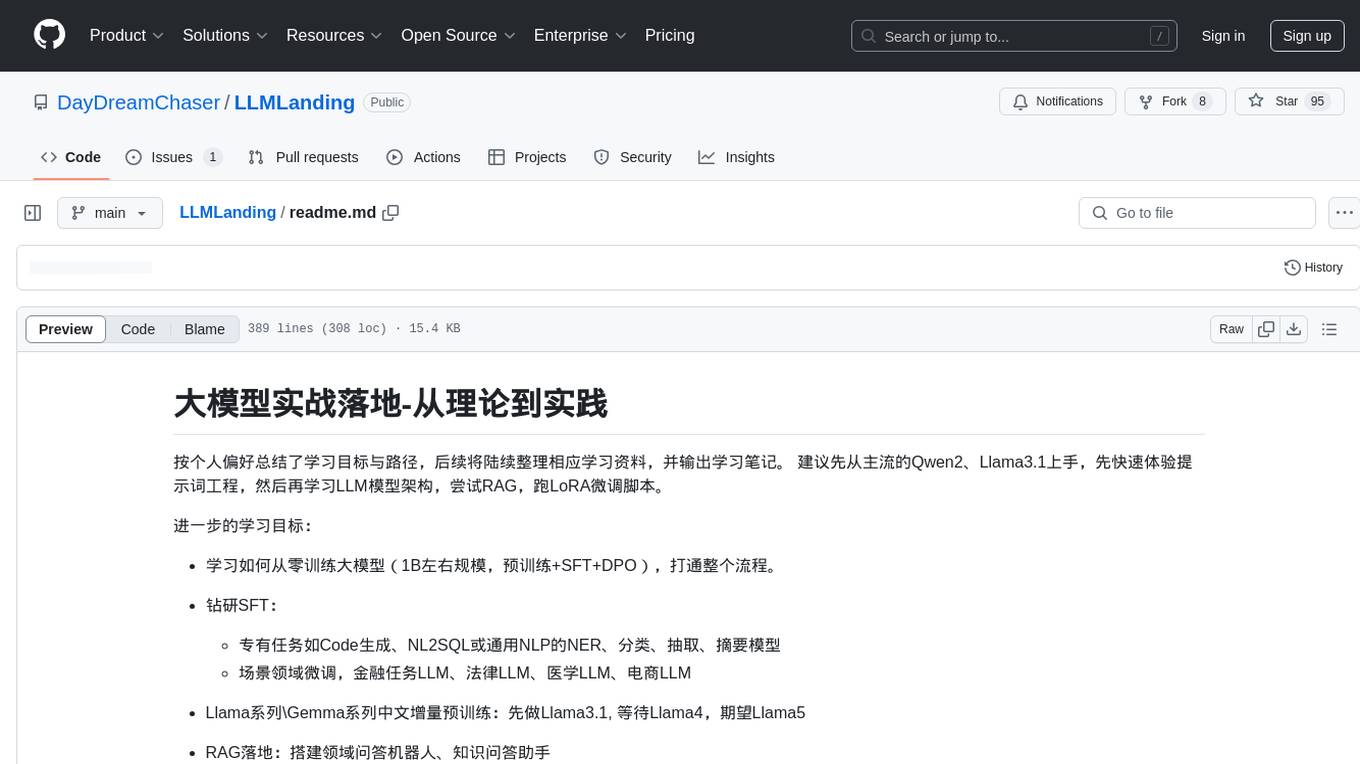

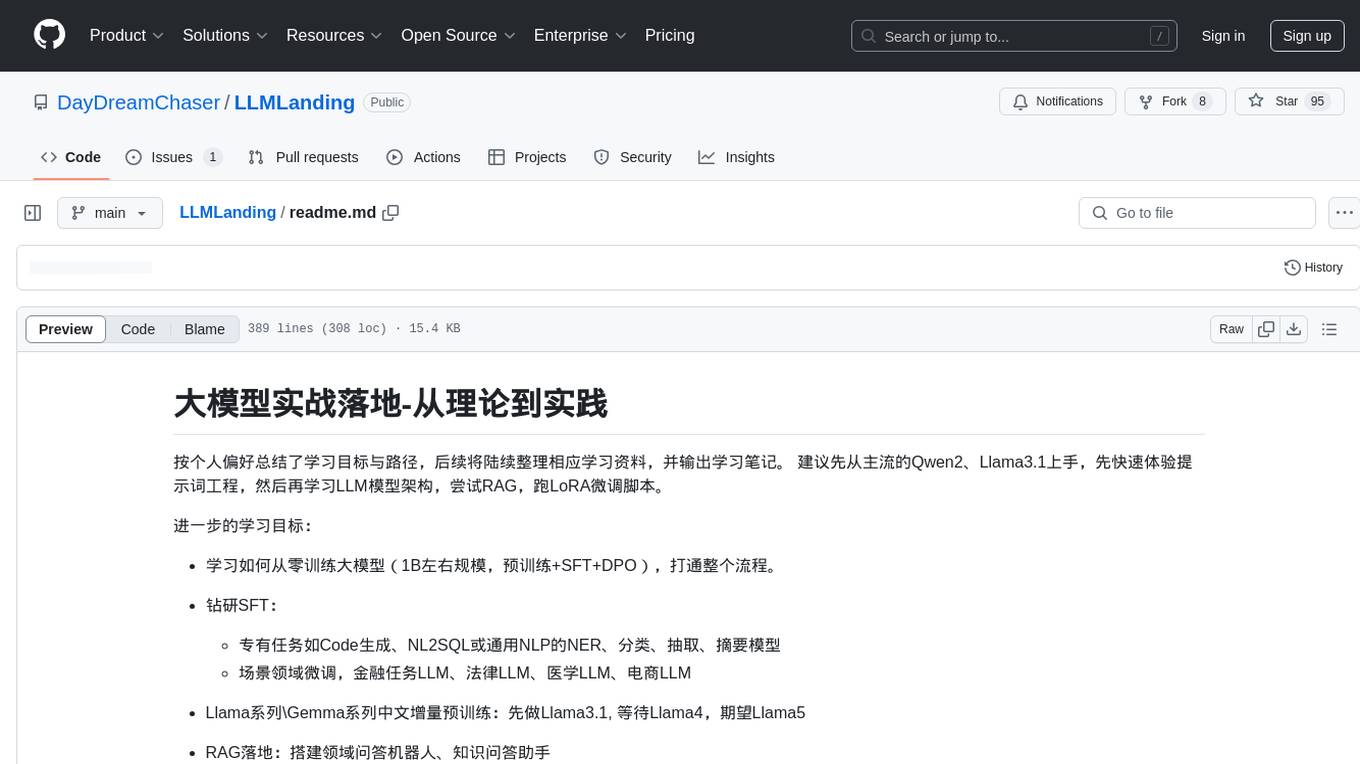

LLMLanding

Learning LLM Implementation and Theory for Practical Landing

Stars: 95

LLMLanding is a repository focused on practical implementation of large models, covering topics from theory to practice. It provides a structured learning path for training large models, including specific tasks like training 1B-scale models, exploring SFT, and working on specialized tasks such as code generation, NLP tasks, and domain-specific fine-tuning. The repository emphasizes a dual learning approach: quickly applying existing tools for immediate output benefits and delving into foundational concepts for long-term understanding. It offers detailed resources and pathways for in-depth learning based on individual preferences and goals, combining theory with practical application to avoid overwhelm and ensure sustained learning progress.

README:

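This repository summarizes learning goals and paths according to my personal preferences. I will gradually compile the corresponding learning materials and publish study notes. I suggest starting with mainstream models such as Qwen2 and Llama 3.1. First get a quick feel for prompt engineering, then study the LLM architecture, try RAG, and run LoRA fine-tuning scripts.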

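Further learning goals:

- Learn how to train an LLM from scratch (around 1B parameters; pretraining + SFT + DPO) and get the whole pipeline working end to end.

- Dig into SFT:

  - Dedicated tasks such as code generation, NL2SQL, or general NLP models for NER, classification, extraction, and summarization

  - Domain fine-tuning: finance LLMs, legal LLMs, medical LLMs, e-commerce LLMs

- Chinese continued pretraining of the Llama / Gemma series: start with Llama 3.1, wait for Llama 4, hope for Llama 5

- Putting RAG into production: build domain Q&A bots and knowledge Q&A assistants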

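There are two ways to approach learning LLMs:

- Learn what pays off fastest, with the best return on investment -> once you are up and running, it produces results right away (e.g., fine-tuning with off-the-shelf packages)

- Learn the underlying fundamentals, the closer to first principles the better -> the fundamentals change slowly; there is no short-term payoff, but the long-term prospects are good (e.g., optimizers)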

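With this much material, you cannot learn everything. You have to set priorities and a goal, and combine practice with theory. Otherwise you end up fighting on every front and quickly lose momentum. If you want to study in depth, follow the steps below (detailed learning materials and paths are provided) and learn what you need: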

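Learning approach: quickly apply existing tools such as the Transformers library to use, fine-tune, and align LLMs. At the same time, study in depth how pretrained NLP models work and how inference deployment works, because the lower-level material changes little.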

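- Familiar with the architecture and details of mainstream LLMs (Llama, Qwen); familiar with the LLM training pipeline; hands-on project experience applying RAG, PEFT, and SFT;

- Strong NLP foundation; familiar with implementations of pretrained language models such as GPT, BERT, and the Transformer; R&D experience with dialogue systems and search;

- Proficient with the DeepSpeed distributed training framework, with experience pretraining or running SFT on 10B-scale models; familiar with the vLLM inference acceleration framework and with inference acceleration techniques such as model quantization and FlashAttention;

- Familiar with PyTorch, with a solid foundation in deep learning and machine learning; Python and Git development experience on Linux; understanding of computer system principles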

Note that learning goals and requirements differ from role to role.

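- LLM startups, or in-house LLM teams at large companies. These typically have pretraining, post-training (fine-tuning, RL alignment), evaluation, data, and infra optimization groups, and the roles are relatively hard to get.

- LLM application algorithms

  - Search, advertising, and recommendation scenarios

  - Cost reduction and efficiency gains

  - Assistants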

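- Familiar with the architecture and technical details of mainstream LLMs (Llama, ChatGLM, Qwen); hands-on project experience applying RAG, PEFT, and SFT

- Strong NLP foundation; familiar with implementations of pretrained language models such as BERT, GPT, and the Transformer; R&D experience with dialogue systems

- Proficient with mainstream inference acceleration frameworks such as TensorRT-LLM and vLLM; familiar with inference acceleration techniques such as model quantization and FlashAttention; hands-on experience with the DeepSpeed distributed training framework

- Familiar with PyTorch, with a solid foundation in deep learning and machine learning; basic command of C/C++, CUDA, and computer system principles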

Build a Large Language Model (From Scratch)

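- LlamaFactory: a one-stop framework for LoRA fine-tuning, full-parameter SFT, and continued pretraining. It is easy to use, integrates many fine-tuning algorithms, and supports mainstream open-source models (though it is very heavily encapsulated). https://zhuanlan.zhihu.com/p/697773502

- Karpathy's GitHub: from the basic makemore and minbpe to nanoGPT and llm.c, these are all excellent projects for learning about LLMs.

  github.com/karpathy/ His LLM101n course is still in progress, but its main content comes from his earlier GitHub projects.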

- torchkeras https://github.com/lyhue1991/torchkeras/

- llm-action https://github.com/liguodongiot/llm-action

- Large Language Models: From Theory to Practice https://intro-llm.github.io/intro-llm

- ChatGPT: Principles and Practice https://github.com/liucongg/ChatGPTBook

- LLM Introductory Course for Developers (Andrew Ng's course, Chinese edition) https://github.com/datawhalechina/prompt-engineering-for-developers/blob/main/README.md

- Princeton COS 597G (Fall 2022): Understanding Large Language Models https://www.cs.princeton.edu/courses/archive/fall22/cos597G/

- Stanford CS324 - Large Language Models https://stanford-cs324.github.io/winter2022/

- Hugging Face Transformers official course https://huggingface.co/learn/nlp-course/

- Transformers Quick Start (using BERT-family models quickly via off-the-shelf packages) https://transformers.run/

- Introduction to Natural Language Processing (NLP) with transformers: https://github.com/datawhalechina/learn-nlp-with-transformers/tree/main

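- Aim for quick application (use packages first, then study in depth)

- Learn hands-on through practice, striving to understand every key point

- [Theory] + [Code practice] + [Written summaries]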

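- Video courses:

- Books:

  - Introduction to Deep Learning: Theory and Practice in Python (《深度学习入门:基于Python的理论与实践》): training MLPs and convolutional networks implemented in numpy

  - Advanced Deep Learning: Natural Language Processing (《深度学习进阶:自然语言处理》): training Transformers, word2vec, and RNNs implemented in numpy

  - Dive into Deep Learning (动手学深度学习) https://d2l.ai/

  - Neural Networks and Deep Learning (《神经网络与深度学习》) https://nndl.github.io/

  - Machine Learning Methods (《机器学习方法》) by Li Hang: NLP-related machine learning + deep learning material (study selectively as needed)

- Reinforcement learning:

  - EasyRL, the "Mushroom Book" reinforcement learning tutorial (based on Hung-yi Lee's RL course + an RL outline course): https://datawhalechina.github.io/easy-rl/

  - Hands-on Reinforcement Learning https://github.com/boyu-ai/Hands-on-RL/blob/main/README.md

- Math foundations: I recommend this very practical open-source math book, Alice's Adventures in a Differentiable Wonderland: www.sscardapane.it/alice-book/?s=09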

Applications:

Zero-shot / few-shot: quick, out-of-the-box use

- Prompt optimization:

  - In-Context Learning (ICL)

  - Chain of Thought (CoT)

  - COSTAR prompt template: 云端听茗, "A Comprehensive Guide to Prompt Engineering: Unveiling the Power of the COSTAR Framework"

  - Kimi's prompt app: generates prompts automatically, essentially following the COSTAR template

- RAG (Retrieval-Augmented Generation)

  - Built on document chunking, vector indexing, and LLM generation, e.g., document Q&A with LangChain

  - https://www.kaggle.com/code/leonyiyi/chinesechatbot-rag-with-qwen2-7b
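The RAG flow above (chunk, index, retrieve, generate) can be sketched in plain Python. This is a minimal sketch under loud assumptions. A toy bag-of-words vector stands in for a real embedding model, the "index" is a Python list rather than a vector database, and the final LLM call is left out. All function names are hypothetical and are not LangChain APIs.

```python
import math
import re
from collections import Counter

def chunk_words(text, size=8, overlap=2):
    """Split text into windows of `size` words that overlap by `overlap` words."""
    assert 0 <= overlap < size
    words = text.split()
    chunks = []
    for start in range(0, len(words), size - overlap):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):  # this window already reaches the end
            break
    return chunks

def embed(text):
    """Toy bag-of-words vector; a real pipeline would call an embedding model here."""
    return Counter(re.findall(r"\w+", text.lower()))

def cosine(a, b):
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def build_index(documents):
    """Chunk every document and keep (chunk, vector) pairs as an in-memory 'vector index'."""
    return [(chunk, embed(chunk)) for doc in documents for chunk in chunk_words(doc)]

def retrieve(query, index, k=2):
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]

def build_prompt(query, contexts):
    """Stuff the retrieved chunks into the prompt that would be sent to the LLM."""
    context_block = "\n".join(f"- {c}" for c in contexts)
    return (f"Answer using only the context below.\n\nContext:\n{context_block}\n\n"
            f"Question: {query}\nAnswer:")

docs = [
    "LoRA freezes the base weights and trains low-rank adapters.",
    "vLLM uses PagedAttention to manage the KV cache efficiently.",
    "DPO optimizes a policy directly from preference pairs.",
]
index = build_index(docs)
question = "What does vLLM use to manage the KV cache?"
print(build_prompt(question, retrieve(question, index, k=1)))
```

The overlap between chunks keeps a sentence that straddles a chunk boundary retrievable from at least one window. In a real system you would swap in a proper embedding model and an approximate-nearest-neighbor index.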

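Instruction fine-tuning LLMs on domain data

- PEFT (Parameter-Efficient Fine-Tuning):

  - LoRA (Low-Rank Adaptation of LLMs)

  - QLoRA: parameter-efficient fine-tuning, suited to correcting the model's output format. PEFT has a limited ceiling, and it can inject only limited knowledge into the LLM. In practice you usually try LoRA and otherwise fall back to full-parameter SFT. LoRA variants are rarely tried (diminishing returns).

- SFT (Supervised Fine-Tuning):

  - Full-parameter supervised fine-tuning: fine-tune all of the LLM's weights on prompt-instruction samples (this can inject new domain knowledge)

  - The sample mix must be controlled (domain data + general data)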
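To make the LoRA item concrete, here is a minimal pure-Python sketch of a LoRA layer's forward pass, y = W x + (alpha / r) · B (A x). It assumes the standard formulation: W is frozen, A gets a small random init, and B starts at zero. The class name and the 0.01 init scale are illustrative choices of mine, not the PEFT library's API.

```python
import random

def matvec(M, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

class LoRALinear:
    """y = W x + (alpha / r) * B (A x).

    W (d_out x d_in) is the frozen pretrained weight; only A (r x d_in) and
    B (d_out x r) would receive gradients during fine-tuning.
    """

    def __init__(self, W, r=2, alpha=4, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(W), len(W[0])
        self.W = W
        # A gets a small random init (the 0.01 std is an illustrative choice)
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        # B starts at zero, so the adapted layer initially equals the base layer
        self.B = [[0.0] * r for _ in range(d_out)]
        self.scale = alpha / r

    def forward(self, x):
        base = matvec(self.W, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self):
        # r * (d_in + d_out) instead of d_out * d_in for full fine-tuning
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])

W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]
layer = LoRALinear(W, r=1, alpha=2)
print(layer.forward([1.0, 2.0, 3.0]))  # same as W x while B is still zero
```

After training, (alpha / r) · B A can be added into W, so a merged LoRA model has no extra inference latency. QLoRA keeps this adapter structure but stores the frozen W in 4-bit.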

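Alignment

- Aligning with human preferences (RLHF):

  - Reward model (trained on ranking annotations; judges how good an answer is)

  - RL (PPO, DPO; updates the SFT model), focusing on RL-based alignment of LLMs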
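For the DPO part, the loss for one preference pair is −log σ(β[(log π_θ(y_w|x) − log π_ref(y_w|x)) − (log π_θ(y_l|x) − log π_ref(y_l|x))]). The sketch below assumes the summed response log-probabilities from the policy and the frozen reference (SFT) model are already computed; that forward pass is not shown. beta=0.1 is an illustrative default. Unlike PPO-based RLHF, DPO needs no separate reward model and no sampling loop.

```python
import math

def softplus(z):
    """log(1 + exp(z)) without overflow for large |z|."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

def dpo_loss(pi_chosen, pi_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one preference pair.

    Each argument is the summed log-probability of a full response under the
    policy being trained (pi_*) or the frozen reference/SFT model (ref_*).
    """
    chosen_logratio = pi_chosen - ref_chosen
    rejected_logratio = pi_rejected - ref_rejected
    margin = beta * (chosen_logratio - rejected_logratio)
    return softplus(-margin)  # equals -log(sigmoid(margin))

def dpo_batch_loss(pairs, beta=0.1):
    """Average the per-pair loss over a batch of (pi_c, pi_r, ref_c, ref_r) tuples."""
    return sum(dpo_loss(*p, beta=beta) for p in pairs) / len(pairs)

# The policy prefers the chosen answer more than the reference does, so the loss is below log(2)
print(dpo_loss(-4.0, -8.0, -5.0, -7.0))
```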

Pretraining

- Small-model pretraining (nanoGPT, TinyLlama); training language models with large parameter counts is out of scope

Training and inference optimization:

- Model quantization

- Inference acceleration

- Distillation

- Inference frameworks (vLLM, TensorRT-LLM, llama.cpp)
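Model quantization in its simplest form is symmetric per-tensor absmax int8 quantization: scale = max|w| / 127, q = round(w / scale), w ≈ q · scale. This is a minimal sketch of that round trip only. Real toolkits (bitsandbytes, GPTQ, AWQ, llama.cpp's formats) use per-channel or per-block scales and other refinements, which are not shown here.

```python
def quantize_int8(weights):
    """Symmetric per-tensor absmax quantization: w ~ q * scale with q in [-127, 127]."""
    absmax = max(abs(w) for w in weights)
    scale = absmax / 127 if absmax > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    return [v * scale for v in q]

weights = [0.5, -1.27, 0.01, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q, scale, restored)  # each restored value is within scale / 2 of the original
```

One large outlier inflates the scale for the whole tensor and wipes out precision for the small weights. That is why practical schemes shrink the group that shares a scale.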

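Technical reports of open-source LLMs

- Llama technical report: The Llama 3 Herd of Models (arXiv)

- Mixtral 8x7B MoE: https://mistral.ai/news/mixtral-of-experts

- Qwen technical report: Qwen Technical Report (the Qwen1 report has more detail than the Qwen2 report)

- Training details of a 70B model: https://imbue.com/research/70b-intro/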

On-device LLM technical reports:

- ModelBest's (面壁智能) detailed technical report: MiniCPM: Unveiling the Potential of Small Language Models with Scalable Training Strategies

- Apple AFM: Introducing Apple's On-Device and Server Foundation Models

- Meta's on-device effort: MobileLLM: Optimizing Sub-billion Parameter Language Models for On-Device Use Cases

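- Pretraining and prompt learning stage

- Result evaluation and reward modeling stage

- Reinforcement learning stage

- Annotated data

- Modeling approach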

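Paper: "Attention Is All You Need"

- Explainer: The Illustrated Transformer: http://jalammar.github.io/illustrated-transformer/

- Practice: Transformer code walkthrough and training: LeonYi, "A Long-Form Explanation of Transformer Pretraining Implemented in PyTorch"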
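The core operation of the paper, softmax(QKᵀ / √d_k) V, fits in a few lines. This sketch adds the causal mask used by GPT-style decoders. It is a single head with no learned Q/K/V projections and no multi-head split, so treat it as an illustration of the formula, not a Transformer layer.

```python
import math

def softmax(xs):
    m = max(xs)                        # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(Q, K, V):
    """Single-head softmax(Q K^T / sqrt(d_k)) V with a causal mask.

    Q, K, V are lists of per-position vectors; position i only attends to 0..i.
    """
    d_k = len(Q[0])
    out = []
    for i, q in enumerate(Q):
        scores = [sum(a * b for a, b in zip(q, K[j])) / math.sqrt(d_k) for j in range(i + 1)]
        weights = softmax(scores)
        out.append([sum(w * V[j][c] for j, w in enumerate(weights)) for c in range(len(V[0]))])
    return out

X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(causal_self_attention(X, X, X))  # Q = K = V = X: no learned projections in this sketch
```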

GPT papers

- GPT-1: Improving Language Understanding by Generative Pre-Training

- GPT-2: Language Models are Unsupervised Multitask Learners

- GPT-3: Language Models are Few-Shot Learners

- GPT-4: GPT-4 Technical Report (http://openai.com)

Explainers

- The Illustrated GPT-2: http://jalammar.github.io/illustrated-gpt2/

- The Illustrated GPT-2 (Chinese version): https://www.cnblogs.com/zhongzhaoxie/p/13064404.html

- GPT-3 analysis: How GPT3 Works - Visualizations and Animations

- How GPT works: https://www.cnblogs.com/justLittleStar/p/17322259.html

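Inference

- Reading the GPT-2 source code, part 1: GPT2LMHeadModel

- GPT inference in 60 lines of code (PicoGPT): LeonYi, "LLM Principles Explained with Diagrams: GPT in 60 Lines of numpy"

- Implementing GPT in C++ by hand: TODO; reference: a C++ implementation of the Transformer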

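Training

- Training a GPT-2 language model: pretraining GPT-2 on Colab with the Transformers library

- MiniGPT project explained (learning to add two numbers): https://blog.csdn.net/wxc971231/article/details/132000182

- nanoGPT project explained

  - Code analysis: https://zhuanlan.zhihu.com/p/601044938

  - Training in practice: LeonYi, "[LLM Training Series] nanoGPT Source Code Explained, with a Chinese GPT Training Practice"

- Fine-tuning: text summarization in practice

  - LeonYi, "LLMs in Practice: QLoRA Fine-Tuning of Microsoft Phi-2 for Dialogue Summarization"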

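Principles

- BERT visualized: A Visual Guide to Using BERT for the First Time

- How BERT works: https://www.cnblogs.com/justLittleStar/p/17322240.html

Practice

- Implementing BERT's architecture and pretraining code: TODO

- BERT pretraining in practice: LeonYi, "Pretraining Your Own BERT with the Transformers Library"

- BERT fine-tuning:

  - Text classification

  - Extraction: BERT-CRF NER / BERT + pointer network (UIE) information extraction

  - Similarity retrieval: SimCSE-BERT / BGE / ColBERT

- Derivatives: RoBERTa / DeBERTa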
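For the BERT pretraining item, the masking recipe from the BERT paper is as follows. About 15% of positions are selected. Of those, 80% become [MASK], 10% become a random token, and 10% stay unchanged, and the loss is computed only at selected positions. This is a minimal sketch that works on string tokens for readability and selects each position independently with probability 0.15. The function name is hypothetical.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """BERT-style MLM corruption on a list of string tokens.

    Returns (inputs, labels). labels[i] is the original token where the model must
    predict it, and None where no loss is computed.
    """
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:           # select ~15% of positions
            labels.append(tok)
            r = rng.random()
            if r < 0.8:
                inputs.append(MASK)            # 80% of selected: [MASK]
            elif r < 0.9:
                inputs.append(rng.choice(vocab))  # 10%: a random token
            else:
                inputs.append(tok)             # 10%: left unchanged
        else:
            labels.append(None)
            inputs.append(tok)
    return inputs, labels

tokens = ["the", "cat", "sat", "on", "the", "mat"] * 50
inputs, labels = mask_tokens(tokens, vocab=["dog", "ran", "blue"], seed=1)
print(inputs[:12], labels[:12])
```

With token ids in PyTorch, the "no loss" positions are usually labeled -100, which is the default `ignore_index` of `nn.CrossEntropyLoss`.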

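Introduction to prompt learning; designing the answer-space mapping (in the LLM era, this amounts to a label mapping); introduction to in-context learning

- Building instructions manually and automatically: extract them with GPT-4 or another top LLM, then correct them by hand

- Open-source instruction datasets: alphaz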

Llama 1

- In-depth Llama 1 source code analysis: https://zhuanlan.zhihu.com/p/648365207

Llama 2

- Llama 2 explained: https://zhuanlan.zhihu.com/p/649756898

Llama 3: a notebook that implements it layer by layer

- github.com/naklecha/llama3-from-scratch

Qwen2: https://qwenlm.github.io/zh/blog/qwen2/

Implement it with TRL's SFTTrainer or Llama-Factory. What matters most is data preparation and the data mix.
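Since the data mix matters more than the trainer, here is a minimal sketch of building an SFT set with a target share of domain samples. `mix_sft_data` is a hypothetical helper, and the 0.3 ratio is an illustrative assumption, not a recommendation from this guide. Tune the ratio per task and check general-ability regressions on held-out general data.

```python
import random

def mix_sft_data(domain, general, domain_ratio=0.3, total=None, seed=0):
    """Build a shuffled SFT set in which domain samples make up `domain_ratio` of the total.

    total=None keeps every domain sample once and adds enough general samples to
    hit the ratio; with an explicit total, a pool that is too small is up-sampled.
    """
    assert 0 < domain_ratio < 1
    rng = random.Random(seed)
    if total is None:
        n_domain = len(domain)
        n_general = round(n_domain * (1 - domain_ratio) / domain_ratio)
    else:
        n_domain = round(total * domain_ratio)
        n_general = total - n_domain

    def draw(pool, n):
        if n <= len(pool):
            return rng.sample(pool, n)                                   # down-sample
        return pool + [rng.choice(pool) for _ in range(n - len(pool))]  # keep all, repeat some

    mixed = draw(domain, n_domain) + draw(general, n_general)
    rng.shuffle(mixed)
    return mixed

domain = [{"source": "finance", "id": i} for i in range(30)]
general = [{"source": "general", "id": i} for i in range(500)]
train_set = mix_sft_data(domain, general, domain_ratio=0.3)
print(len(train_set), sum(s["source"] == "finance" for s in train_set))
```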

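LoRA (Low-Rank Adaptation)

LoRA principles: "Efficient LLM Fine-Tuning: LoRA Principles Explained and the Training Process Analyzed in Depth" https://zhuanlan.zhihu.com/p/702629428

"A Detailed Survey of LLM PEFT: From Adapter and Prefix Tuning to LoRA" https://zhuanlan.zhihu.com/p/696057719

A step-by-step ChatGLM2 fine-tuning tutorial: https://zhuanlan.zhihu.com/p/643856076

QLoRA: "Making LLMs Even More Accessible with bitsandbytes, 4-bit Quantization and QLoRA": https://www.cnblogs.com/huggingface/p/17816374.html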

- BPE explained

- WordPiece explained

- SentencePiece explained

minbpe in practice, with analysis: https://github.com/karpathy/minbpe
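The core loop of byte-level BPE training is short. Count adjacent pairs, merge the most frequent pair into a new id, and repeat. This sketch is roughly what minbpe's basic tokenizer does, without the regex pre-splitting that GPT-style tokenizers add. The helper names are illustrative and not minbpe's exact API.

```python
from collections import Counter

def pair_counts(ids):
    """Count adjacent token pairs."""
    return Counter(zip(ids, ids[1:]))

def merge(ids, pair, new_id):
    """Replace every non-overlapping occurrence of `pair` (left to right) with `new_id`."""
    out, i = [], 0
    while i < len(ids):
        if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out

def train_bpe(text, num_merges):
    """Learn up to num_merges merges on UTF-8 bytes; new token ids start at 256."""
    ids = list(text.encode("utf-8"))
    merges = {}
    for k in range(num_merges):
        counts = pair_counts(ids)
        if not counts:
            break
        pair = max(counts, key=counts.get)   # most frequent pair (first one wins ties)
        ids = merge(ids, pair, 256 + k)
        merges[pair] = 256 + k
    return merges, ids

def encode(text, merges):
    ids = list(text.encode("utf-8"))
    for pair, new_id in merges.items():     # dicts keep insertion order = merge order
        ids = merge(ids, pair, new_id)
    return ids

def decode(ids, merges):
    vocab = {i: bytes([i]) for i in range(256)}
    for (a, b), new_id in merges.items():
        vocab[new_id] = vocab[a] + vocab[b]
    return b"".join(vocab[i] for i in ids).decode("utf-8", errors="replace")

merges, ids = train_bpe("aaabdaaabac", num_merges=3)
print(merges, ids)
```

Starting from bytes means any string, including Chinese text, can be encoded even when no learned merge applies to it.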

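- Overview of distributed training

- Parallelism strategies for distributed training

- Cluster architectures for distributed training

- Distributed deep learning frameworks: Megatron-LM explained

- DeepSpeed explained

Practice

- Qwen pretraining with DeepSpeed

- Qwen LoRA and SFT training with DeepSpeed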
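Working through the memory arithmetic behind DeepSpeed's ZeRO stages explains why they matter. The accounting below follows the ZeRO paper for mixed-precision Adam: 2 bytes of fp16 weights, 2 bytes of fp16 gradients, and 12 bytes of fp32 optimizer states per parameter. It deliberately ignores activations, temporary buffers, and fragmentation, so real usage is higher. With a 7.5B model on 64 GPUs, it should reproduce the paper's example figures (120 GB, 31.4 GB, 16.6 GB, 1.9 GB).

```python
def zero_model_state_gb(num_params, num_gpus, stage):
    """Per-GPU memory (GB, 1e9 bytes) for model states with mixed-precision Adam.

    Accounting from the ZeRO paper: 2 bytes fp16 weights + 2 bytes fp16 gradients
    + 12 bytes fp32 optimizer states (master weights, momentum, variance) per parameter.
    Stage 1 shards optimizer states, stage 2 also gradients, stage 3 also weights.
    Activations, temporary buffers, and fragmentation are NOT included.
    """
    weights, grads, optim = 2.0 * num_params, 2.0 * num_params, 12.0 * num_params
    if stage >= 1:
        optim /= num_gpus
    if stage >= 2:
        grads /= num_gpus
    if stage >= 3:
        weights /= num_gpus
    return (weights + grads + optim) / 1e9

for stage in range(4):
    print(f"ZeRO stage {stage}: {zero_model_state_gb(7.5e9, 64, stage):.1f} GB per GPU")
```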

Chain-of-Thought prompting: 6 chain-of-thought templates: zero-shot / few-shot / Step-Back ..
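Three of these templates can be sketched as plain prompt assembly. Zero-shot CoT appends the well-known trigger "Let's think step by step." Few-shot CoT prepends worked examples whose answers include the reasoning. The step-back wording below is my own illustrative paraphrase, not a canonical template, and `cot_prompt` is a hypothetical helper.

```python
def cot_prompt(question, examples=None, step_back=False):
    """Assemble a chain-of-thought style prompt as plain text.

    examples: optional (question, reasoning, answer) triples for few-shot CoT.
    step_back: ask for the underlying principle before answering (wording is illustrative).
    """
    parts = [f"Q: {q}\nA: {reasoning} The answer is {answer}." for q, reasoning, answer in examples or []]
    if step_back:
        parts.append(f"Q: {question}\nBefore answering, state the general principle or concept "
                     "this question depends on, then use it to reason step by step.\nA:")
    elif examples:
        parts.append(f"Q: {question}\nA:")                            # few-shot CoT
    else:
        parts.append(f"Q: {question}\nA: Let's think step by step.")  # zero-shot CoT
    return "\n\n".join(parts)

print(cot_prompt("What is 17 * 3?", examples=[("What is 12 * 2?", "12 * 2 = 24.", "24")]))
```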

- Core modules of the LangChain framework: "9 Examples to Get You Started with LangChain": https://zhuanlan.zhihu.com/p/654052645

- LlamaIndex https://qwen.readthedocs.io/en/latest/framework/LlamaIndex.html

Introduction to intelligent agents: LLM Powered Autonomous Agents: https://lilianweng.github.io/posts/2023-06-23-agent/

FlashAttention series; PagedAttention; "Understanding BigBird's Block Sparse Attention": https://www.cnblogs.com/huggingface/p/17852870.html / https://hf.co/blog/big-bird
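The trick FlashAttention builds on is the "online softmax". It processes keys block by block while keeping a running max, a running denominator, and a running weighted sum, and rescales earlier partial results whenever the max grows. That way the full row of attention weights never has to be held at once. This is a minimal single-query sketch checked against the direct computation. The real kernels fuse this over tiles in GPU SRAM, which this sketch does not attempt. PagedAttention solves a different problem (KV-cache memory management in vLLM) and is not shown.

```python
import math

def attention_direct(scores, values):
    """Reference: softmax over all scores at once, then a weighted sum of values."""
    m = max(scores)
    w = [math.exp(s - m) for s in scores]
    total = sum(w)
    return [sum(wi * v[c] for wi, v in zip(w, values)) / total for c in range(len(values[0]))]

def attention_online(scores, values, block_size=2):
    """Same result, computed block by block with a running max and running sum."""
    m = -math.inf                   # running max of the scores seen so far
    l = 0.0                         # running softmax denominator (relative to m)
    acc = [0.0] * len(values[0])    # running unnormalized weighted sum of values
    for start in range(0, len(scores), block_size):
        s_blk = scores[start:start + block_size]
        v_blk = values[start:start + block_size]
        m_new = max(m, max(s_blk))
        correction = math.exp(m - m_new)   # rescale earlier partial sums to the new max
        l *= correction
        acc = [a * correction for a in acc]
        for s, v in zip(s_blk, v_blk):
            p = math.exp(s - m_new)
            l += p
            acc = [a + p * vc for a, vc in zip(acc, v)]
        m = m_new
    return [a / l for a in acc]

scores = [0.3, 2.0, -1.0, 5.0, 0.7]        # q.k_j / sqrt(d_k) for one query
values = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0], [-1.0, 3.0], [0.5, 0.5]]
print(attention_direct(scores, values), attention_online(scores, values, block_size=2))
```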

vLLM inference framework in practice

unsloth

- Q-learning algorithm

- DQN algorithm

- Policy Gradient algorithm

- Actor-Critic algorithm
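Q-learning's update rule is Q(s, a) ← Q(s, a) + α[r + γ max_a' Q(s', a') − Q(s, a)]. It is easiest to see in a tabular sketch. The 5-state corridor environment (reward 1 for reaching the right end) is made up purely for illustration, as are the hyperparameters. DQN replaces the table with a neural network but keeps the same TD target.

```python
import random

N_STATES, GOAL = 5, 4            # states 0..4 on a line; reaching state 4 ends the episode
ACTIONS = (0, 1)                 # 0 = move left, 1 = move right

def step(state, action):
    nxt = max(0, state - 1) if action == 0 else state + 1
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            # epsilon-greedy action selection, breaking ties randomly
            if rng.random() < epsilon or Q[s][0] == Q[s][1]:
                a = rng.choice(ACTIONS)
            else:
                a = 0 if Q[s][0] > Q[s][1] else 1
            s2, r, done = step(s, a)
            target = r if done else r + gamma * max(Q[s2])   # no bootstrap from the terminal state
            Q[s][a] += alpha * (target - Q[s][a])             # TD update
            s = s2
    return Q

Q = q_learning()
print([round(max(row), 3) for row in Q[:GOAL]])  # should approach 0.9**3, 0.9**2, 0.9, 1.0
```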

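PPO: Proximal Policy Optimization Algorithms (paper); introduction to PPO

- Generalized Advantage Estimation (GAE)

- How the PPO algorithm works

- Comparison and evaluation of PPO

- The N Implementation Details of RLHF with PPO: https://www.cnblogs.com/huggingface/p/17836295.html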
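The two formulas at the heart of PPO fit in one short sketch. GAE computes A_t = δ_t + γλ A_{t+1} with δ_t = r_t + γ V(s_{t+1}) − V(s_t). The clipped surrogate is min(ρ A, clip(ρ, 1 − ε, 1 + ε) A) with ρ = π_new / π_old. This assumes log-probs, rewards, and value estimates are already available, and gamma/lam/clip_eps are common illustrative defaults. In RLHF the per-step reward typically combines the reward-model score with a KL penalty against the SFT model, which is not shown here.

```python
import math

def gae(rewards, values, last_value, gamma=0.99, lam=0.95):
    """Generalized Advantage Estimation for one trajectory with no episode end inside it.

    values[t] is V(s_t); last_value is V(s_T), used to bootstrap after the last step
    (pass 0.0 if the trajectory ended in a terminal state).
    """
    advantages = [0.0] * len(rewards)
    next_value, running = last_value, 0.0
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * next_value - values[t]   # TD residual
        running = delta + gamma * lam * running               # discounted sum of residuals
        advantages[t] = running
        next_value = values[t]
    returns = [a + v for a, v in zip(advantages, values)]     # regression targets for the critic
    return advantages, returns

def ppo_clip_loss(new_logps, old_logps, advantages, clip_eps=0.2):
    """Negative clipped surrogate objective (to minimize), averaged over samples."""
    total = 0.0
    for new, old, adv in zip(new_logps, old_logps, advantages):
        ratio = math.exp(new - old)                           # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1 - clip_eps), 1 + clip_eps)
        total += min(ratio * adv, clipped * adv)              # pessimistic of the two
    return -total / len(advantages)

adv, ret = gae([1.0, 0.0, 2.0], [0.5, 0.4, 0.3], last_value=0.0)
print(adv, ppo_clip_loss([-1.0, -2.0, -0.5], [-1.1, -1.9, -0.6], adv))
```

The clipping removes the incentive to push the ratio beyond 1 ± ε in the direction the advantage favors. This keeps each update close to the policy that collected the data.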

PPO in practice

InstructGPT model analysis

- InstructGPT: Training language models to follow instructions with human feedback

- RLHF paper: Augmenting Reinforcement Learning with Human Feedback
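The reward model in InstructGPT-style RLHF is trained on rankings with a pairwise loss, −log σ(r(x, y_w) − r(x, y_l)), averaged over every pair drawn from the K ranked responses to one prompt. This minimal sketch takes the scalar rewards as given. The model that produces them (typically the SFT model with a scalar head) is assumed, and the function names are hypothetical.

```python
import math
from itertools import combinations

def log_sigmoid(z):
    """log(sigmoid(z)) computed without overflow."""
    return -(max(-z, 0.0) + math.log1p(math.exp(-abs(z))))

def ranking_loss(scores_best_first):
    """Pairwise reward-model loss for one prompt with K >= 2 ranked responses.

    scores_best_first[i] is the scalar reward r(x, y_i); index 0 is the labeler's top choice,
    so in every pair (i, j) with i < j, response i is preferred over response j.
    """
    pairs = list(combinations(range(len(scores_best_first)), 2))   # all C(K, 2) comparisons
    total = sum(log_sigmoid(scores_best_first[i] - scores_best_first[j]) for i, j in pairs)
    return -total / len(pairs)

print(ranking_loss([2.0, 1.0, 0.0]), ranking_loss([0.0, 1.0, 2.0]))  # correct order gives the lower loss
```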

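Inside RLHF

- The LLM RLHF process explained (with a code walkthrough): https://zhuanlan.zhihu.com/p/624589622

- Analysis of RLHF's value

- Analysis of RLHF's problems

- Data collection and model training

- Model training / generation / evaluation: https://zhuanlan.zhihu.com/p/635569455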

RLHF in practice

- OpenRLHF

[LLM Training Series] Training an LLM from Scratch: A Walkthrough of the Phi2-mini-Chinese Project https://zhuanlan.zhihu.com/p/718307193

Survey of multimodal LLMs; Qwen2-VL-7B in practice; Qwen2-VL-7B fine-tuning

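In companies, fine-tuning at the 7B or 13B scale is mostly about wrangling data and samples, and the technical barrier is low. Pretraining has a high barrier, because building up experience requires burning a lot of money.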

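In this fast-changing era, keeping up with the industry mainstream while staying irreplaceable is a hard problem:

- Scarcity (irreplaceability)

- Stability (the business and surface-level technology change every day, but the underlying theory changes little)

- Sustained demand (ideally a necessity, like food, clothing, housing, and transport; otherwise, when the technology becomes obsolete, the hype fades, or results fall short of expectations, the bubble bursts)

- Not becoming more valuable with age (true of most industries: experience does not accumulate into value created over the long term)

- Barriers (a monopoly in technology, business, or capital)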

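Lean toward the low-level and engineering side as much as possible, learn technology that stays relatively constant (theory rarely changes), and move into stable or promising industries:

- Computer systems knowledge (training, inference, development, and engineering for model inference and deployment)

- Mathematics (study it in depth and put it into practice)

- Economics / psychology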

