Best AI tools for Compute Metrics

20 - AI Tool Sites

DVC

DVC (Data Version Control) is an open-source platform for managing machine learning data and experiments. It provides a unified interface for working with data from various sources, including local files, cloud storage, and databases. DVC also includes tools for versioning data and experiments, tracking metrics, and automating compute resources. It is designed to make it easy for data scientists and machine learning engineers to collaborate on projects and share their work with others.
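As a sketch of the metrics-tracking side, the snippet below writes run metrics to a flat JSON file, a format that `dvc metrics show` can display once the file is declared as a metrics file in `dvc.yaml`, and compares two runs in the spirit of `dvc metrics diff`. The helpers `write_metrics` and `diff_metrics` are hypothetical illustrations, not part of DVC's API, and the metric values are made up.

```python
import json
from pathlib import Path


def write_metrics(path, metrics):
    """Write a flat dict of numeric metrics as JSON.

    Hypothetical helper: DVC can display such a file with `dvc metrics show`
    once it is declared as a metrics file in dvc.yaml.
    """
    Path(path).write_text(json.dumps(metrics, indent=2, sort_keys=True))


def diff_metrics(old, new):
    """Return {name: (old, new, delta)} for metrics present in both runs.

    Hypothetical stand-in for the comparison `dvc metrics diff` reports;
    this is not DVC's implementation.
    """
    return {
        name: (old[name], new[name], new[name] - old[name])
        for name in sorted(old.keys() & new.keys())
    }


if __name__ == "__main__":
    # Illustrative values only.
    baseline = {"accuracy": 0.81, "loss": 0.52}
    candidate = {"accuracy": 0.84, "loss": 0.47}
    write_metrics("metrics.json", candidate)
    for name, (before, after, delta) in diff_metrics(baseline, candidate).items():
        print(f"{name}: {before} -> {after} ({delta:+.2f})")
```

Keeping metrics in a plain, flat file is what lets them be versioned alongside the code and data that produced them.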

ViableView

ViableView is an AI-powered market and product data analytics tool that helps entrepreneurs identify profitable products and niches. By collecting and analyzing market data, the tool provides indicator metrics such as opportunity score, competition score, and profit margin to guide investment decisions. ViableView uses viability simulations to turn messy market data into actionable insights, enabling users to make informed choices about product advertising and market strategy. Features include real-time tracking of market trends, market overview insights, and data projections based on industry KPIs. ViableView covers digital products, physical products, SaaS, and real estate markets, providing data aggregation and market analysis for each sector.

Crayon

Crayon is competitive intelligence software that helps businesses track competitors, win more deals, and stay ahead in their market. Its AI-powered platform is organized around four activities: analyzing the competitive landscape, enabling sales teams, competing for deals, and measuring results. Features include competitor monitoring, AI news summarization, importance scoring, content creation, sales enablement, performance metrics, and more. With Crayon, users can receive high-priority insights, get distilled summaries of articles about competitors, create battlecards, find intel to win deals, and track performance metrics. The application aims to make competitive intelligence seamless and impactful for sales teams.

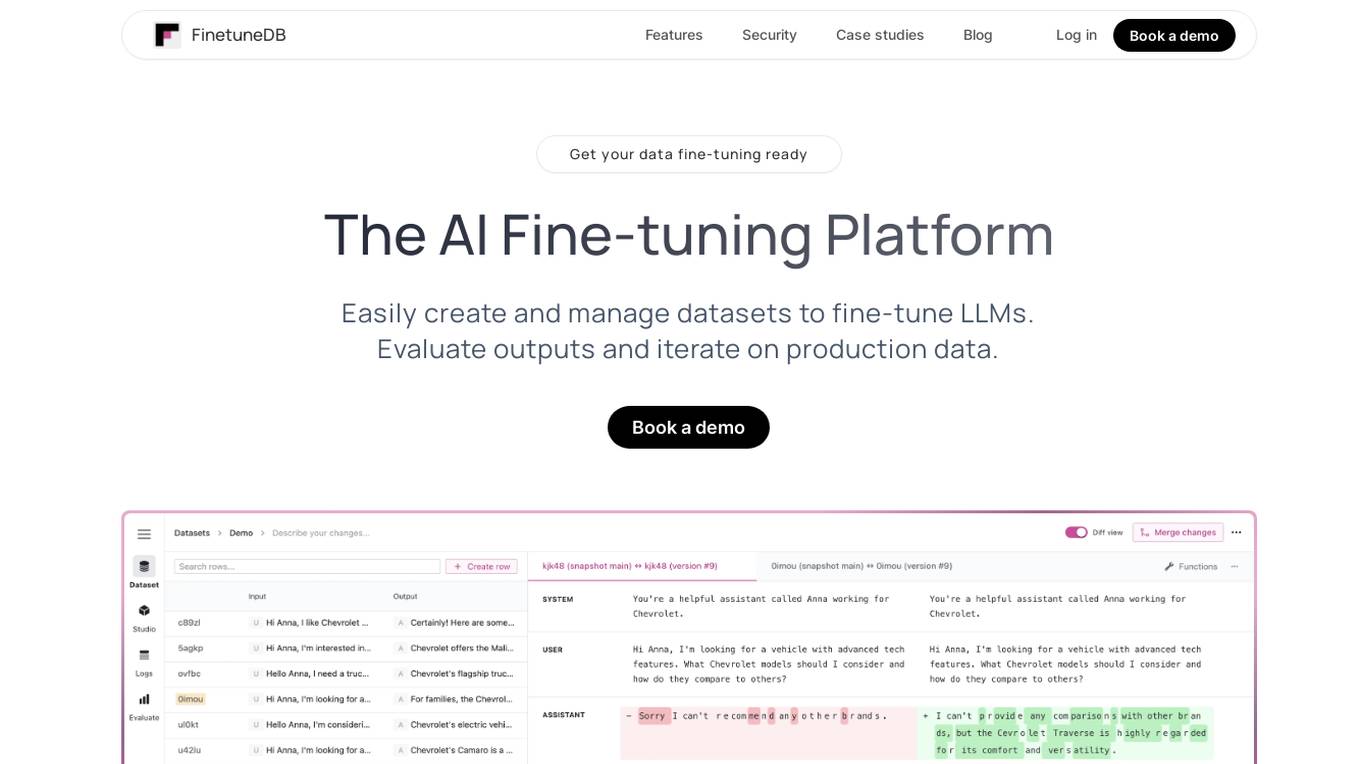

FinetuneDB

FinetuneDB is an AI fine-tuning platform that allows users to easily create and manage datasets to fine-tune LLMs, evaluate outputs, and iterate on production data. It integrates with open-source and proprietary foundation models, and provides a collaborative editor for building datasets. FinetuneDB also offers a variety of features for evaluating model performance, including human and AI feedback, automated evaluations, and model metrics tracking.
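The source does not say which file format FinetuneDB imports or exports. As an assumption, the sketch below builds examples in the chat-style JSONL layout used for OpenAI fine-tuning (one `{"messages": [...]}` object per line), a common target for LLM fine-tuning datasets. The function names are hypothetical.

```python
import json


def to_chat_example(system, user, assistant):
    """Build one training example in the chat-style layout used for OpenAI fine-tuning.

    Assumption: FinetuneDB's own export format is not specified in the source.
    """
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
            {"role": "assistant", "content": assistant},
        ]
    }


def to_jsonl(examples):
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(ex, ensure_ascii=False) for ex in examples) + "\n"


def is_valid(example):
    """Basic sanity check: only known roles, and the last turn is the assistant target."""
    roles = [m["role"] for m in example["messages"]]
    return bool(roles) and set(roles) <= {"system", "user", "assistant"} and roles[-1] == "assistant"


if __name__ == "__main__":
    ex = to_chat_example(
        "You are a concise support agent.",
        "How do I reset my password?",
        "Open Settings > Account and choose 'Reset password'.",
    )
    print(to_jsonl([ex]), end="")
```

Validating each example before writing it out catches malformed rows early, which matters when a dataset is iterated on from production data.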

Imdev

Imdev is an AI automation tool for businesses. It offers AI solutions intended to cut costs and increase revenue, including an internal chatbot, an AI blog system, a customer chatbot, and workflow automation. By automating routine tasks, Imdev aims to free up time for innovation and growth, streamlining operations, marketing, and sales processes and nurturing leads effectively. The tool is designed to help businesses compete on time, handling work efficiently so they can stay ahead of competitors.

Tangram Vision

Tangram Vision is a company that provides sensor calibration tools and infrastructure for robotics and autonomous vehicles. Their products include MetriCal, a high-speed bundle adjustment software for precise sensor calibration, and AutoCal, an on-device, real-time calibration health check and adjustment tool. Tangram Vision also offers a high-resolution depth sensor called HiFi, which combines high-resolution depth data with high-powered AI capabilities. The company's mission is to accelerate the development and deployment of autonomous systems by providing the tools and infrastructure needed to ensure the accuracy and reliability of sensors.
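Bundle adjustment, the technique MetriCal is described as using, typically refines camera parameters and 3-D points to minimize reprojection error: the pixel distance between where a point was observed and where the current model projects it. The sketch below computes the root-mean-square reprojection error for an ideal pinhole camera with no lens distortion. That camera model and the function names are assumptions for illustration, not MetriCal's actual cost function or API.

```python
import math


def project(point, fx, fy, cx, cy):
    """Project a 3-D point in camera coordinates with an ideal pinhole model.

    Assumption: no lens distortion; fx, fy are focal lengths in pixels and
    (cx, cy) is the principal point.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    return (fx * x / z + cx, fy * y / z + cy)


def rms_reprojection_error(points_3d, observations, fx, fy, cx, cy):
    """Root-mean-square pixel distance between observed and projected points."""
    if not points_3d or len(points_3d) != len(observations):
        raise ValueError("need the same, non-zero number of points and observations")
    total = 0.0
    for point, (u_obs, v_obs) in zip(points_3d, observations):
        u, v = project(point, fx, fy, cx, cy)
        total += (u - u_obs) ** 2 + (v - v_obs) ** 2
    return math.sqrt(total / len(points_3d))
```

For example, with `fx = fy = 500` and principal point `(320, 240)`, the points `(0, 0, 1)` and `(0.1, 0, 1)` project to `(320, 240)` and `(370, 240)`; observations at `(321, 240)` and `(370, 243)` give squared errors of 1 and 9, so the RMS error is √5 ≈ 2.24 pixels. A bundle adjuster would adjust the parameters to drive this number down.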

Massed Compute

Massed Compute is an AI tool that provides cloud GPU services for VFX rendering, machine learning, high-performance computing, scientific simulations, and data analytics and visualization. The platform offers flexible, affordable plans, modern infrastructure, and timely, creative problem-solving. As an NVIDIA Preferred Partner, Massed Compute runs its servers in Tier III data centers, which it presents as reliable and future-proof. Users can launch AI instances, scale machine learning projects, and access high-performance GPUs on demand.

Universal Basic Compute

Universal Basic Compute (UBC) is an AI application that aims to serve as the backbone of a new digital economy, enabling more than a billion autonomous AI agents to trade resources, services, and capabilities through the $COMPUTE system. UBC facilitates the exchange of resources among AI agents, laying the foundation for a marketplace driven by artificial intelligence.

Wolfram|Alpha

Wolfram|Alpha is a computational knowledge engine that answers questions using data, algorithms, and artificial intelligence. It can perform calculations, generate graphs, and provide information on a wide range of topics, including mathematics, science, history, and culture. Wolfram|Alpha is used by students, researchers, and professionals around the world to solve problems, learn new things, and make informed decisions.

Anyscale

Anyscale is a company that provides a scalable compute platform for AI and Python applications. Its platform includes a serverless API for serving and fine-tuning open LLMs, a private cloud solution for data privacy and governance, and Ray, an open-source framework for training, batch, and real-time workloads. Anyscale's platform is used by companies such as OpenAI, Uber, and Spotify to power their AI workloads.

GrapixAI

GrapixAI describes itself as a leading provider of low-cost cloud GPU rental services and AI server solutions. Its focus on flexibility, scalability, and current technology supports a variety of AI applications in both local and cloud environments. GrapixAI advertises the lowest prices for on-demand GPUs such as the RTX 4090, RTX 3090, RTX A6000, RTX A5000, and A40. The platform provides a Docker-based container ecosystem for quick software setup, a GPU search console, customizable pricing options, multiple security levels, GUI and CLI interfaces, a real-time bidding system, and personalized customer support.

Airtrain

Airtrain is a no-code compute platform for Large Language Models (LLMs). It provides a user-friendly interface for fine-tuning, evaluating, and deploying custom AI models. Airtrain also offers a marketplace of pre-trained models that can be used for a variety of tasks, such as text generation, translation, and question answering.

Groq

Groq is a fast AI inference service that offers the GroqCloud™ Platform and GroqRack™ Cluster for developers to build and deploy AI models with ultra-low-latency inference. It serves openly available models such as Llama 3.1 and is known for its speed and its compatibility with other AI providers. Groq powers leading openly available AI models and has gained recognition in the AI chip industry. The company has raised significant funding at a high valuation, positioning it as a strong challenger to established players like Nvidia.

Alluxio

Alluxio is a data orchestration platform designed for the cloud that gives AI/ML workloads seamless access to data and simplifies managing and running them. Positioned between compute and storage, Alluxio provides a unified layer for enterprises to handle data and AI tasks across diverse infrastructure environments. The platform accelerates model training and serving and aims to maximize return on infrastructure investment. It addresses challenges such as data silos, low performance, data engineering complexity, and the high cost of managing different tech stacks and storage systems.

Neurochain AI

Neurochain AI is a decentralized AI-as-a-Service (DeAIAS) network that provides an innovative solution for building, launching, and using AI-powered decentralized applications (dApps). It offers a community-driven approach to AI development, incentivizing contributors with $NCN rewards. The platform aims to address challenges in the centralized AI landscape by democratizing AI development and leveraging global computing resources. Neurochain AI also features a community-powered content generation engine and is developing its own independent blockchain. The team behind Neurochain AI includes experienced professionals in infrastructure, cryptography, computer science, and AI research.

Paperspace

Paperspace is a platform for developing, training, and deploying AI models of any size and complexity. It offers a cloud GPU platform for accelerated computing, with features such as GPU cloud workflows, machine learning solutions, GPU infrastructure, virtual desktops, gaming, rendering, 3D graphics, and simulation. Paperspace provides an abstraction layer that lets individuals and organizations focus on building AI applications, offering low-cost GPUs with per-second billing, infrastructure abstraction, job scheduling, resource provisioning, and collaboration tools.
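To make per-second billing concrete, the arithmetic below compares it with billing that rounds each session up to whole hours. The hourly rate is a hypothetical figure, not a Paperspace price, and the whole-hour scheme is included only as a point of comparison.

```python
import math


def cost_per_second(hourly_rate, seconds):
    """Cost when usage is billed to the second."""
    return hourly_rate * seconds / 3600


def cost_per_hour_rounded_up(hourly_rate, seconds):
    """Cost when every started hour is billed in full (comparison scheme only)."""
    return hourly_rate * math.ceil(seconds / 3600)


if __name__ == "__main__":
    rate = 2.00  # hypothetical $/GPU-hour, not a real price
    job = 90 * 60  # a 90-minute job, in seconds
    print(f"per-second: ${cost_per_second(rate, job):.2f}")
    print(f"whole hours: ${cost_per_hour_rounded_up(rate, job):.2f}")
```

With the hypothetical $2.00/hour rate and a 90-minute job, per-second billing charges $3.00 while whole-hour rounding charges $4.00; the gap grows for many short, bursty jobs.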

Modal

Modal is a high-performance cloud platform designed for developers and for AI, data, and ML teams. It offers a serverless environment for running generative AI models, large-scale batch jobs, job queues, and more. With Modal, users can bring their own code and leverage the platform's optimized container file system for fast cold boots and seamless autoscaling. The platform is engineered for large-scale workloads, allowing users to scale to hundreds of GPUs, pay only for what they use, and deploy functions to the cloud in seconds without the need for YAML or Dockerfiles. Modal also provides features for job scheduling, web endpoints, observability, and security compliance.

d-Matrix

d-Matrix offers ultra-low-latency batched inference for generative AI. Its Corsair™ platform, which the company describes as the world's most efficient AI inference platform for datacenters, targets high performance, efficiency, and scalability for large-scale inference. d-Matrix aims to transform the economics of AI inference by delivering fast, sustainable, and scalable AI without compromising speed or usability.

Cerebium

Cerebium is a serverless AI infrastructure platform that allows teams to build, test, and deploy AI applications quickly and efficiently. With a focus on speed, performance, and cost optimization, Cerebium offers a range of features and tools to simplify the development and deployment of AI projects. The platform ensures high reliability, security, and compliance while providing real-time logging, cost tracking, and observability tools. Cerebium also offers GPU variety and effortless autoscaling to meet the diverse needs of developers and businesses.

AIxBlock

AIxBlock is an AI tool that lets users run their AI initiatives on the blockchain. The platform offers a comprehensive suite of features for building, deploying, and monitoring AI models, including an AI data engine, a multimodal data crawler, auto annotation, consensus-driven labeling, an MLOps platform, decentralized marketplaces, and more. By harnessing blockchain technology, AIxBlock provides cost-efficient solutions for AI builders, compute suppliers, and freelancers to collaborate and benefit from decentralized supercomputing, P2P transactions, and consensus mechanisms.
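The source does not say how AIxBlock's consensus-driven labeling works. A common generic approach is majority voting with an agreement threshold, sketched below: an item receives a label only when more than a given share of annotators agree, and is otherwise left unresolved for review. The threshold and function names are assumptions.

```python
from collections import Counter


def consensus_label(votes, min_agreement=0.5):
    """Return the majority label if its vote share exceeds min_agreement, else None.

    Generic majority vote, not AIxBlock's documented mechanism. With the
    default threshold of 0.5, an exact tie between two labels never passes.
    """
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    return label if count / len(votes) > min_agreement else None


def aggregate(annotations, min_agreement=0.5):
    """Map item id -> consensus label (None where annotators disagree too much)."""
    return {item: consensus_label(v, min_agreement) for item, v in annotations.items()}


if __name__ == "__main__":
    labels = {"img-1": ["cat", "cat", "dog"], "img-2": ["cat", "dog"]}
    print(aggregate(labels))
```

Items that come back as `None` are the natural candidates for extra annotators or expert review, rather than being forced to a label.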

1 - Open Source AI Tools

langfair

LangFair is a Python library for bias and fairness assessments of large language models (LLMs). It offers a comprehensive framework for choosing bias and fairness metrics, demo notebooks, and a technical playbook. Users can tailor evaluations to their use cases with a Bring Your Own Prompts approach. The focus is on output-based metrics practical for governance audits and real-world testing.
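LangFair's own metric classes are not reproduced here. To illustrate the idea behind output-based counterfactual checks, the sketch below compares a model's responses to prompt pairs that differ only in a group attribute (for example, a swapped name or pronoun) using token-level Jaccard similarity. The tokenizer, similarity measure, and function names are assumptions chosen for simplicity; they are not LangFair's API.

```python
import re


def tokens(text):
    """Lowercased word tokens (a deliberately simple tokenizer)."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a, b):
    """Jaccard similarity of two responses' token sets (1.0 when both are empty)."""
    ta, tb = tokens(a), tokens(b)
    if not ta and not tb:
        return 1.0
    return len(ta & tb) / len(ta | tb)


def mean_counterfactual_similarity(response_pairs):
    """Average similarity of responses to prompt pairs that differ only in a group attribute.

    Values near 1.0 suggest the model answers both variants alike; lower
    values flag pairs worth reviewing for bias.
    """
    if not response_pairs:
        raise ValueError("need at least one response pair")
    return sum(jaccard(a, b) for a, b in response_pairs) / len(response_pairs)
```

Because it needs only model outputs, a check like this fits the governance-audit setting described above: users can supply their own prompt pairs and score whatever the model returns.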

20 - OpenAI GPTs

The Greatest Computer Science Tutor

Get help with handpicked college textbooks. Ask for commands. Learn theory + code simultaneously.

Pixie: Computer Vision Engineer

Expert in computer vision and deep learning, ready to assist you with 3D and geometric computer vision. https://github.com/kornia/pixie

How To Make Your Computer Faster: Speed Up Your PC

A Guide To Speed Up Your Computer from Geeks On Command Computer Repair Company

HackMeIfYouCan

Hack Me if you can - I can only talk to you about computer security, software security and LLM security @JacquesGariepy

Desktop Value

Valuating custom computer hardware. Copyright (C) 2023, Sourceduty - All Rights Reserved.

Counterfeit Detector

Specialist in authenticating products using the latest computer vision technology by Cypheme.

ProfOS

Mentor-like computer science professor specializing in operating systems, making complex concepts accessible.