AI tools for underss

Related Tools:

Undress Photo AI

Undress Photo AI is a free online tool that uses artificial intelligence to generate nude and bikini images from photos. The tool is easy to use and requires no registration. Simply upload a photo and the tool will generate a nude or bikini image in seconds. The tool can be used to create realistic and high-quality nude and bikini images for a variety of purposes, such as art, fashion, and advertising.

Undress AI Pro

Undress AI Pro is a controversial computer vision application that uses machine learning to remove clothing from images of people. It was based on deep learning and generative adversarial networks (GANs). The technology powering Undress AI and DeepNude was based on deep learning and generative adversarial networks (GANs). GANs involve two neural networks competing against each other - a generator creates synthetic images trying to mimic the training data, while a discriminator tries to distinguish the real images from the generated ones. Through this adversarial process, the generator learns to produce increasingly realistic outputs. For Undress AI, the GAN was trained on a dataset of nude and clothed images, allowing it to "unclothe" people in new images by generating the nudity.

Undress AI

Undress AI is a free online tool that allows users to create deepnude images. Deepnude images are realistic, nude images of people that are generated using artificial intelligence. The tool is easy to use and does not require any special skills or knowledge. Simply upload an image of a person and the tool will generate a deepnude image of that person.

Undressing AI

Undressing AI is a website that provides information about artificial intelligence (AI) and its potential impact on society. The site includes articles, videos, and other resources on topics such as the history of AI, the different types of AI, and the ethical implications of AI.

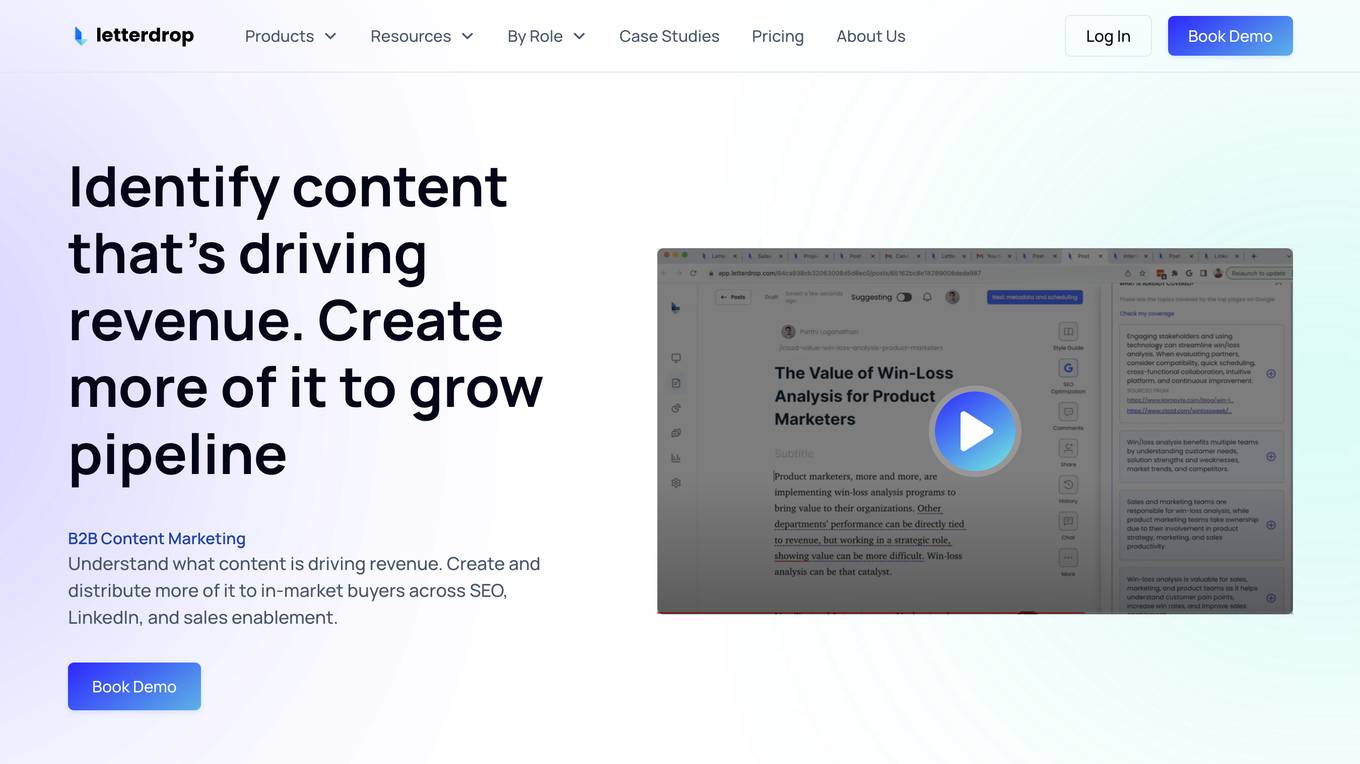

Letterdrop

Letterdrop is an AI-powered application designed to help businesses attract warm leads and increase their pipeline. It offers features such as uncovering contact-level leads, creating thought leadership content, and nurturing leads with turn-key content. Letterdrop aims to help users book more meetings, close deals faster, and improve their outbound messaging strategies. The application leverages AI to filter out irrelevant leads, identify warm leads, and provide context on why they were surfaced. With a focus on personalized outreach and nurturing, Letterdrop helps users engage with hot leads effectively.

DDoS-Guard

DDoS-Guard is a web security service that protects websites from distributed denial-of-service (DDoS) attacks. It checks the user's browser before granting access to the website, ensuring a secure browsing experience. The service provides automatic protection against DDoS attacks and ensures the smooth functioning of websites. DDoS-Guard is trusted by many websites to safeguard their online presence and maintain uninterrupted service for their users.

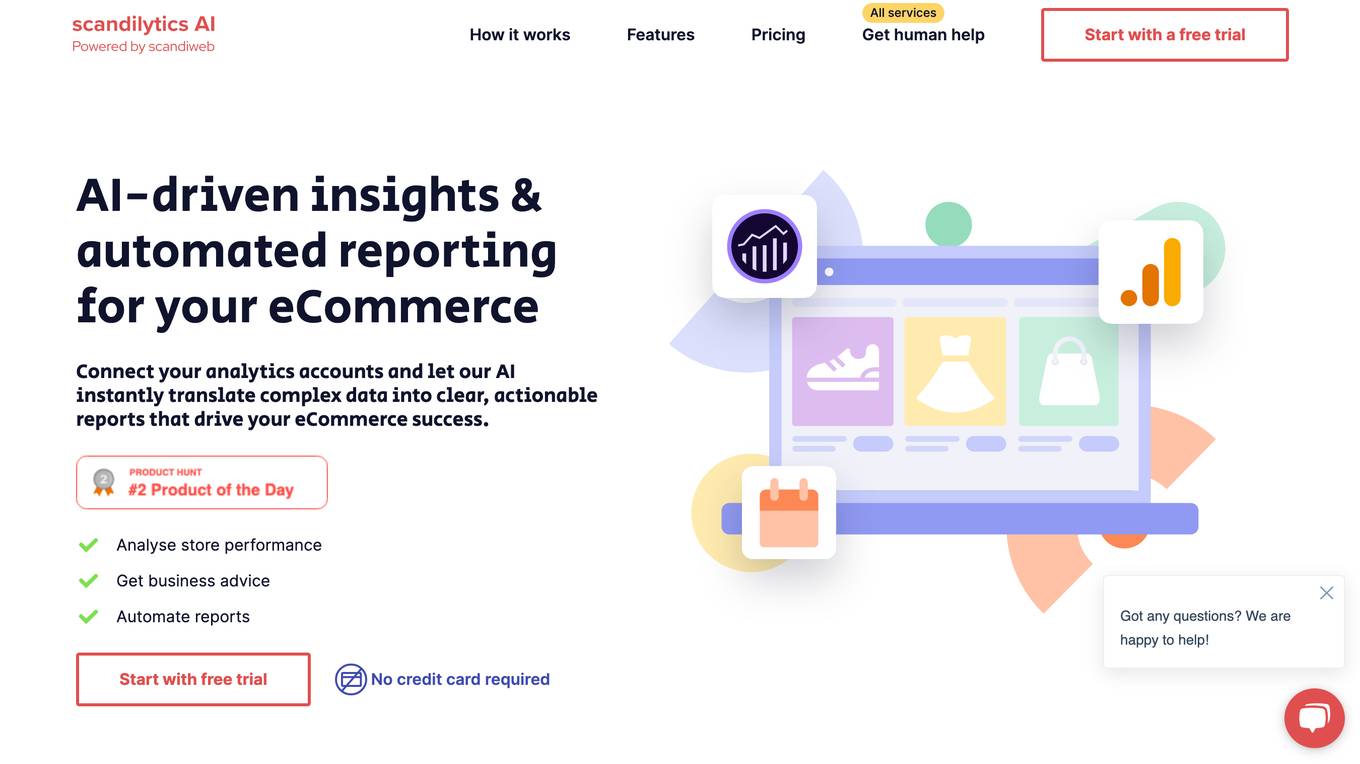

Scandilytics AI

Scandilytics AI is an AI-driven platform that provides data analytics and automated reporting services for eCommerce businesses. By connecting with your analytics accounts, the AI translates complex data into clear, actionable reports. The platform offers three main AI solutions (Data Analyst AI, Business Analyst AI, and Marketing Agent AI), each designed to analyze data, provide insights, and optimize business strategies. Scandilytics AI says it offers global brands smarter analytics at a lower cost, saving time and delivering clear insights into eCommerce performance 10X faster. The platform ensures data security compliance and helps businesses optimize KPIs, understand customer behavior, and improve marketing strategies.

Legalese Decoder

Legalese Decoder is a web application that utilizes AI, natural language processing (NLP), and machine learning (ML) to analyze legal documents and provide a plain language version of the document. It is designed to simplify legal jargon and complex terms in contracts, agreements, and other legal documents, making it easier for users to understand and interpret them. The application aims to empower individuals, small business owners, and professionals by offering a free tool to navigate legal complexities with ease.

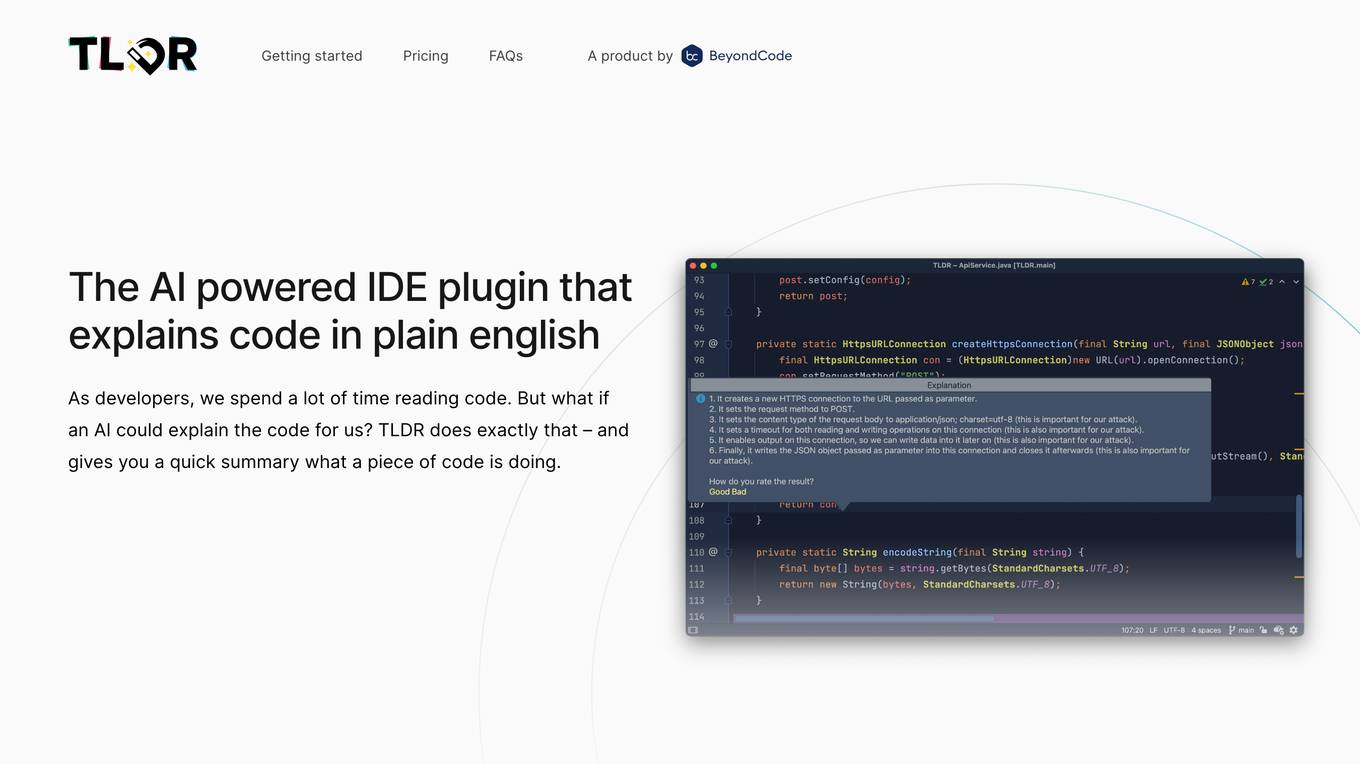

TLDR

TLDR is an AI-powered IDE plugin that explains code in plain English. It helps developers understand code by providing quick summaries of what a piece of code is doing. The tool supports almost all programming languages and offers a free version for users to try before purchasing. TLDR aims to simplify the understanding of complex code structures and save developers time in comprehending codebases.

Totoy

Totoy is a Document AI tool that redefines the way documents are processed. Its API allows users to explain, classify, and create knowledge bases from documents without the need for training. The tool supports 19 languages and works with plain text, images, and PDFs. Totoy is ideal for automating workflows, complying with accessibility laws, and creating custom AI assistants for employees or customers.

Re-View

Re-View is an AI-powered platform that enables users to conduct surveys that capture more than words by utilizing user-friendly video survey forms. The platform allows users to understand emotions, uncover insights, and collect more and better data through authentic emotional connections. With features like automatic insights, efficient research at scale, stunning simplicity, and powerful research capabilities, Re-View offers a practical pricing model that makes research accessible to all. Users can easily create surveys, analyze responses with AI assistance, and gain valuable research reports to support decision-making.

Aide

Aide is an AI platform designed to enhance customer support operations. It offers a range of features to help businesses gain insights into customer needs, automate support processes, improve agent efficiency, and train AI chatbots. Aide's key capabilities include customer insights, workflow automation, agent assist, and AI chatbots. With Aide, businesses can analyze customer conversations, identify pain points, and automate repetitive tasks to streamline support operations and improve customer satisfaction.

LangWatch

LangWatch is a monitoring and analytics tool for Generative AI (GenAI) solutions. It provides detailed evaluations of the faithfulness and relevancy of GenAI responses, coupled with user feedback insights. LangWatch is designed for both technical and non-technical users to collaborate and comment on improvements. With LangWatch, you can understand your users, detect issues, and improve your GenAI products.

MITRE Interpreter

This GPT helps you understand and apply the MITRE ATT&CK Framework, whether you are familiar with the concepts or not.

Concept Explainer

A facilitator for understanding concepts using a simplified Concept Attainment Method.

IPCC Explainer

A conversational guide to the IPCC report that breaks down dense information into understandable insights for public awareness.

CTMU Sage

A bot that guides users in understanding the Cognitive-Theoretic Model of the Universe.

Research Mentor by Dr P.M. Sinclair

A GPT that explains research methods in a language that everyone can easily understand.

LLaMa2lang

This repository contains convenience scripts to finetune LLaMa3-8B (or any other foundation model) for chat in any non-English language. The rationale is that LLaMa3 is trained primarily on English data, and while it works to some extent for other languages, its performance there is poor compared to English.

Quantus

Quantus is a toolkit designed for the evaluation of neural network explanations. It offers more than 30 metrics in 6 categories for eXplainable Artificial Intelligence (XAI) evaluation. The toolkit supports different data types (image, time-series, tabular, NLP) and models (PyTorch, TensorFlow). It provides built-in support for explanation methods like captum, tf-explain, and zennit. Quantus is under active development and aims to provide a comprehensive set of quantitative evaluation metrics for XAI methods.

understand-r1-zero

The 'understand-r1-zero' repository focuses on understanding R1-Zero-like training from a critical perspective. It provides insights into base models and reinforcement learning components, highlighting findings and proposing solutions for biased optimization. The repository offers a minimalist recipe for R1-Zero training, detailing the RL-tuning process and achieving state-of-the-art performance with minimal compute resources. It includes the codebase, models, and paper related to R1-Zero training, implemented with the Oat framework, and emphasizes research-friendly and efficient LLM RL techniques.

awesome-llm-understanding-mechanism

This repository is a collection of papers focused on understanding the internal mechanism of large language models (LLM). It includes research on topics such as how LLMs handle multilingualism, learn in-context, and handle factual associations. The repository aims to provide insights into the inner workings of transformer-based language models through a curated list of papers and surveys.

LLM-on-Tabular-Data-Prediction-Table-Understanding-Data-Generation

This repository serves as a comprehensive survey on the application of Large Language Models (LLMs) on tabular data, focusing on tasks such as prediction, data generation, and table understanding. It aims to consolidate recent progress in this field by summarizing key techniques, metrics, datasets, models, and optimization approaches. The survey identifies strengths, limitations, unexplored territories, and gaps in the existing literature, providing insights for future research directions. It also offers code and dataset references to empower readers with the necessary tools and knowledge to address challenges in this rapidly evolving domain.

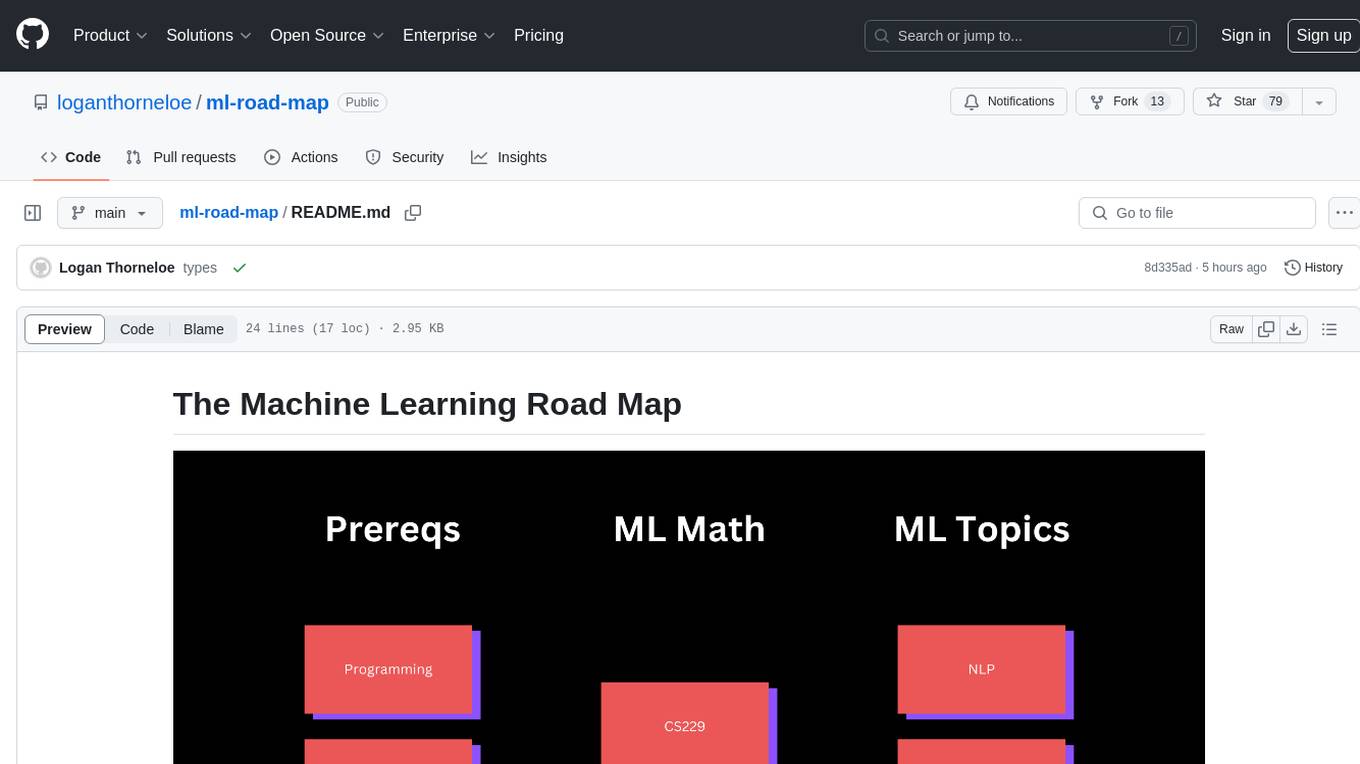

ml-road-map

The Machine Learning Road Map is a comprehensive guide designed to take individuals from various levels of machine learning knowledge to a basic understanding of machine learning principles using high-quality, free resources. It aims to simplify the complex and rapidly growing field of machine learning by providing a structured roadmap for learning. The guide emphasizes the importance of understanding AI for everyone, the need for patience in learning machine learning due to its complexity, and the value of learning from experts in the field. It covers five different paths to learning about machine learning, catering to consumers, aspiring AI researchers, ML engineers, developers interested in building ML applications, and companies looking to implement AI solutions.

LLM-workshop-2024

LLM-workshop-2024 is a tutorial designed for coders interested in understanding the building blocks of large language models (LLMs), how LLMs work, and how to code them from scratch in PyTorch. The tutorial covers topics such as introduction to LLMs, understanding LLM input data, coding LLM architecture, pretraining LLMs, loading pretrained weights, and finetuning LLMs using open-source libraries. Participants will learn to implement a small GPT-like LLM, including data input pipeline, core architecture components, and pretraining code.
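As an illustration of what a data input pipeline for next-token prediction typically does, here is a minimal sketch in plain Python. It is not taken from the workshop materials: the function name `make_pairs` and the `context_length` and `stride` parameters are hypothetical, and real pipelines usually wrap this logic in a PyTorch dataset.

```python
def make_pairs(token_ids, context_length, stride):
    """Slice a token sequence into (input, target) windows.

    Each target window is the input window shifted one position to the
    right, so the model learns to predict the next token at every position.
    """
    pairs = []
    # Stop early enough that every target window is full length.
    for start in range(0, len(token_ids) - context_length, stride):
        inputs = token_ids[start:start + context_length]
        targets = token_ids[start + 1:start + context_length + 1]
        pairs.append((inputs, targets))
    return pairs


# Toy "token IDs"; a real pipeline would get these from a tokenizer.
pairs = make_pairs(list(range(10)), context_length=4, stride=4)
```

With a stride equal to the context length, the windows do not overlap; a smaller stride would yield more, overlapping training examples.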

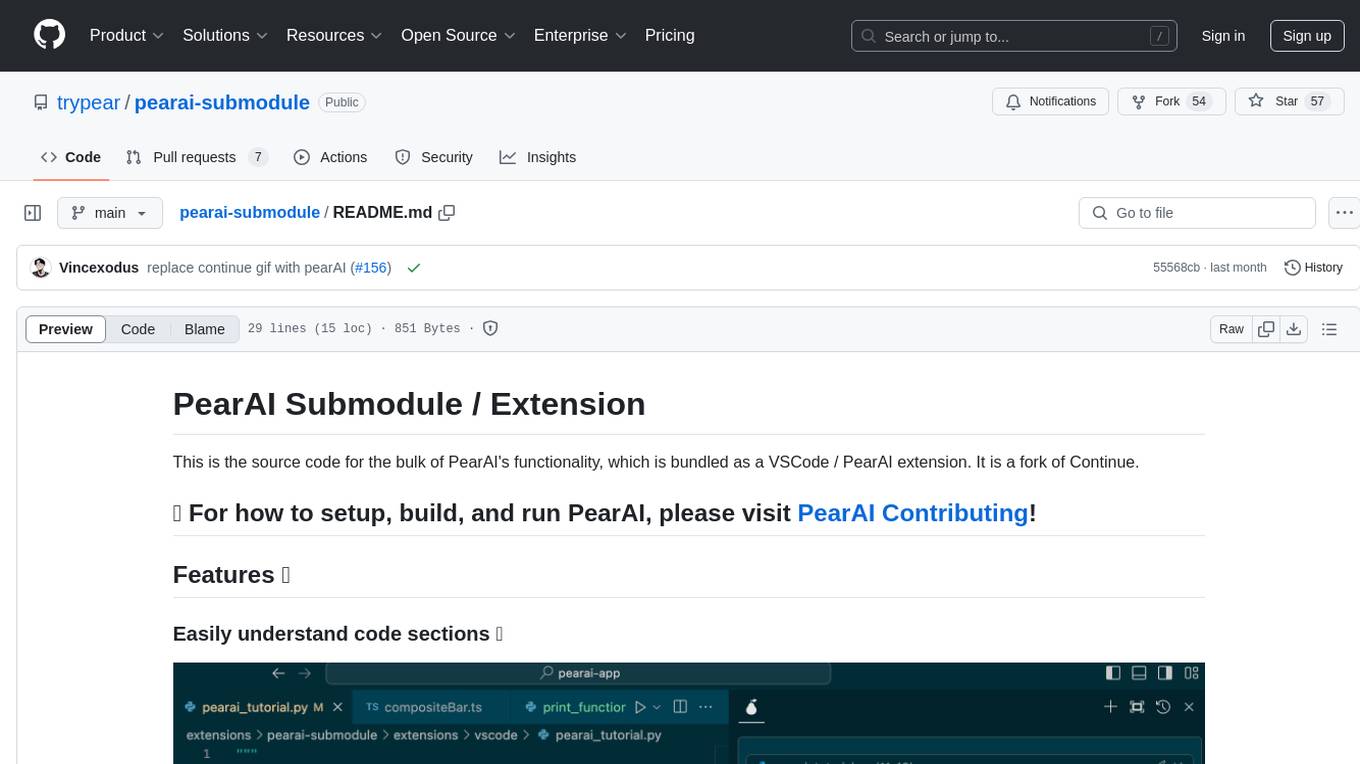

pearai-submodule

PearAI Submodule / Extension is the source code for the bulk of PearAI's functionality, bundled as a VSCode / PearAI extension. It allows users to easily understand code sections, refactor functions, and ask questions by mentioning a file. The tool aims to enhance coding experience and productivity within the VSCode environment.

Embodied-AI-Guide

Embodied-AI-Guide is a comprehensive guide for beginners to understand Embodied AI, focusing on the path of entry and useful information in the field. It covers topics such as Reinforcement Learning, Imitation Learning, Large Language Model for Robotics, 3D Vision, Control, Benchmarks, and provides resources for building cognitive understanding. The repository aims to help newcomers quickly establish knowledge in the field of Embodied AI.

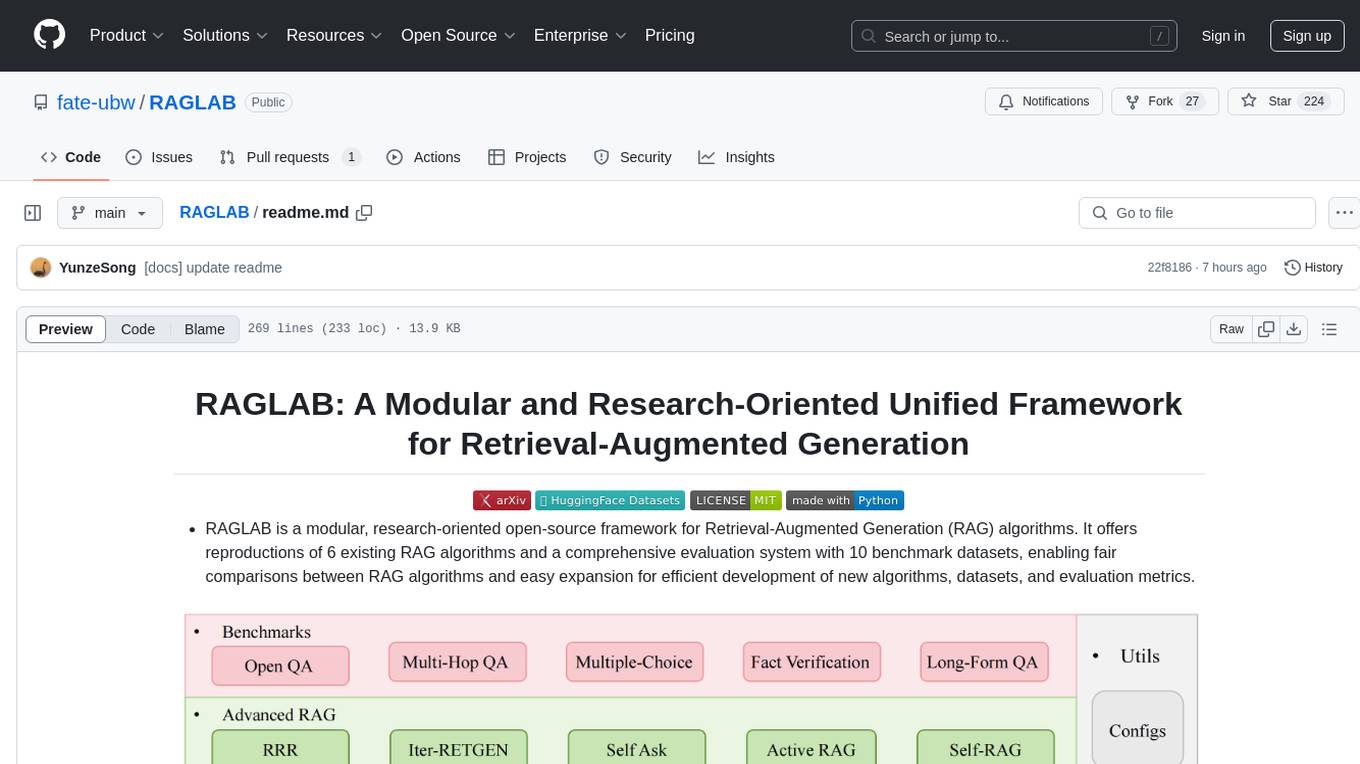

RAGLAB

RAGLAB is a modular, research-oriented open-source framework for Retrieval-Augmented Generation (RAG) algorithms. It offers reproductions of 6 existing RAG algorithms and a comprehensive evaluation system with 10 benchmark datasets, enabling fair comparisons between RAG algorithms and easy extension with new algorithms, datasets, and evaluation metrics. The framework supports the entire RAG pipeline and provides advanced algorithm implementations, a fair comparison platform, an efficient retriever client, versatile generator support, and a flexible instruction lab. It also includes an Interact Mode for quickly understanding algorithms and an Evaluation Mode for reproducing paper results and conducting research.
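For readers unfamiliar with the RAG pipeline mentioned above, the following toy sketch shows the two basic stages, retrieval and prompt construction. It does not use RAGLAB's API: `retrieve` and `build_prompt` are hypothetical helpers, and word overlap stands in for the dense retrievers that real systems typically use.

```python
def retrieve(query, corpus, k=1):
    """Rank passages by word overlap with the query and return the top k."""
    query_words = set(query.lower().split())
    ranked = sorted(
        corpus,
        key=lambda passage: len(query_words & set(passage.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, passages):
    """Prepend the retrieved passages to the question for the generator."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


corpus = [
    "Paris is the capital of France.",
    "The Nile is a river in Africa.",
]
query = "What is the capital of France?"
passages = retrieve(query, corpus)
prompt = build_prompt(query, passages)  # this string would go to an LLM
```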

unoplat-code-confluence

Unoplat-CodeConfluence is a universal code context engine that aims to extract, understand, and provide precise code context across repositories tied through domains. It combines deterministic code grammar with state-of-the-art LLM pipelines to achieve human-like understanding of codebases in minutes. The tool offers smart summarization, graph-based embedding, enhanced onboarding, graph-based intelligence, deep dependency insights, and seamless integration with existing development tools and workflows. It provides a precise context API for knowledge engine and AI coding assistants, enabling reliable code understanding through bottom-up code summarization, graph-based querying, and deep package and dependency analysis.

MMMU

MMMU is a benchmark designed to evaluate multimodal models on college-level subject knowledge tasks, covering 30 subjects and 183 subfields with 11.5K questions. It focuses on advanced perception and reasoning with domain-specific knowledge, challenging models to perform tasks akin to those faced by experts. Evaluations of various models show substantial room for improvement, and the benchmark is intended to push the community toward expert artificial general intelligence (AGI).

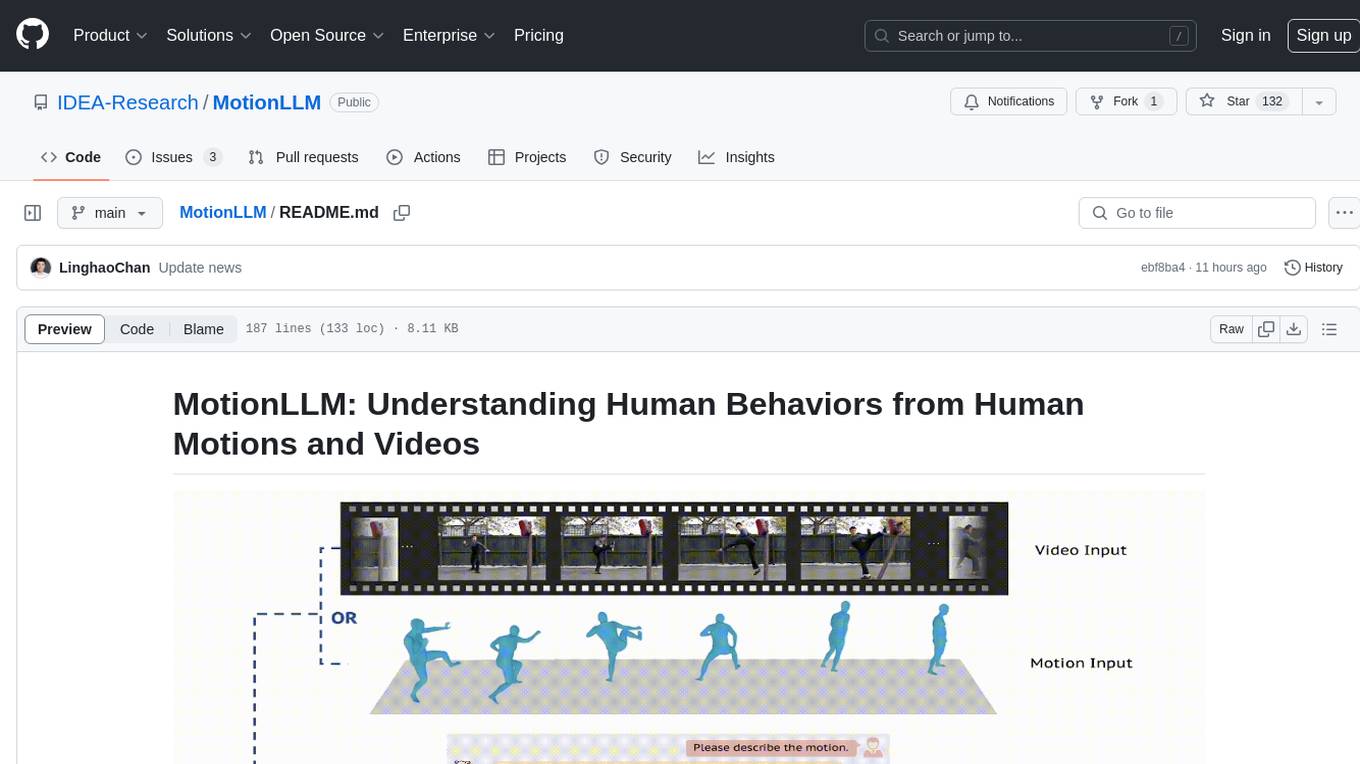

MotionLLM

MotionLLM is a framework for human behavior understanding that leverages Large Language Models (LLMs) to jointly model videos and motion sequences. It provides a unified training strategy, the MoVid dataset, and the MoVid-Bench benchmark for evaluating human behavior comprehension. The framework excels in captioning, spatial-temporal comprehension, and reasoning.

llm_benchmarks

llm_benchmarks is a collection of benchmarks and datasets for evaluating Large Language Models (LLMs). It includes various tasks and datasets to assess LLMs' knowledge, reasoning, language understanding, and conversational abilities. The repository aims to provide comprehensive evaluation resources for LLMs across different domains and applications, such as education, healthcare, content moderation, coding, and conversational AI. Researchers and developers can leverage these benchmarks to test and improve the performance of LLMs in various real-world scenarios.

LL3DA

LL3DA is a Large Language 3D Assistant that responds to both visual and textual interactions within complex 3D environments. It aims to help Large Multimodal Models (LMM) comprehend, reason, and plan in diverse 3D scenes by directly taking point cloud input and responding to textual instructions and visual prompts. LL3DA achieves remarkable results in 3D Dense Captioning and 3D Question Answering, surpassing various 3D vision-language models. The code is fully released, allowing users to train customized models and work with pre-trained weights. The tool supports training with different LLM backends and provides scripts for tuning and evaluating models on various tasks.

MMLU-Pro

MMLU-Pro is an enhanced benchmark designed to evaluate language understanding models across broader and more challenging tasks. It integrates more challenging, reasoning-focused questions and increases answer choices per question, significantly raising difficulty. The dataset comprises over 12,000 questions from academic exams and textbooks across 14 diverse domains. Experimental results show a significant drop in accuracy compared to the original MMLU, with greater stability under varying prompts. Models utilizing Chain of Thought reasoning achieved better performance on MMLU-Pro.
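One reason more answer choices raise difficulty is that the random-guess baseline falls. The sketch below makes that arithmetic explicit. The option counts are an assumption not stated in this listing: 4 choices per question for the original MMLU and up to 10 for MMLU-Pro.

```python
from fractions import Fraction


def random_guess_accuracy(num_choices: int) -> Fraction:
    """Expected accuracy of uniform random guessing on a single-answer question."""
    if num_choices < 1:
        raise ValueError("num_choices must be at least 1")
    return Fraction(1, num_choices)


# Assumed option counts (not given in the description above).
mmlu_baseline = random_guess_accuracy(4)       # 1/4
mmlu_pro_baseline = random_guess_accuracy(10)  # 1/10
```

Under these assumptions, a model that guesses blindly drops from 25% to 10% expected accuracy, so scores near the floor are easier to tell apart from genuine knowledge.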

VideoRefer

VideoRefer Suite is a tool designed to enhance the fine-grained spatial-temporal understanding capabilities of Video Large Language Models (Video LLMs). It consists of three primary components: Model (VideoRefer) for perceiving, reasoning, and retrieval for user-defined regions at any specified timestamps, Dataset (VideoRefer-700K) for high-quality object-level video instruction data, and Benchmark (VideoRefer-Bench) to evaluate object-level video understanding capabilities. The tool can understand any object within a video.

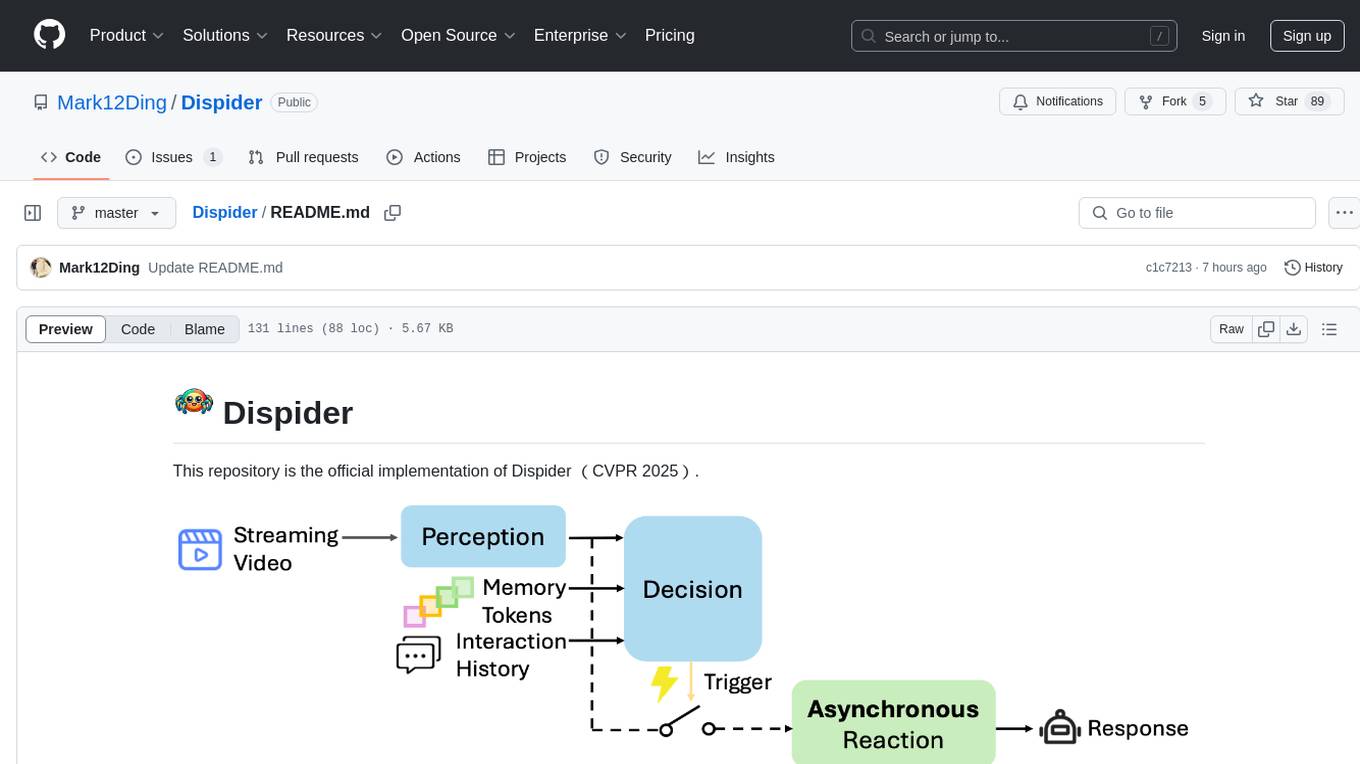

Dispider

Dispider is an implementation enabling real-time interactions with streaming videos, providing continuous feedback in live scenarios. It separates perception, decision-making, and reaction into asynchronous modules, ensuring timely interactions. Dispider outperforms VideoLLM-online on benchmarks like StreamingBench and excels in temporal reasoning. The tool requires CUDA 11.8 and specific library versions for optimal performance.
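The idea of separating perception, decision-making, and reaction into asynchronous modules can be sketched with `asyncio` queues. This is only an illustration of the pattern, not Dispider's implementation: the stage functions, the frame dictionaries, and the `event` flag are all hypothetical.

```python
import asyncio


async def perceive(frames, perceived):
    """Perception: push each incoming frame downstream without waiting on replies."""
    for frame in frames:
        await perceived.put(frame)
    await perceived.put(None)  # sentinel: the stream has ended


async def decide(perceived, decisions):
    """Decision: forward only the timestamps that warrant a response."""
    while True:
        frame = await perceived.get()
        if frame is None:
            await decisions.put(None)
            break
        if frame["event"]:
            await decisions.put(frame["t"])


async def react(decisions, responses):
    """Reaction: generate responses without blocking the earlier stages."""
    while True:
        t = await decisions.get()
        if t is None:
            break
        await asyncio.sleep(0)  # stand-in for slow response generation
        responses.append(f"response at t={t}")


async def run_pipeline(frames):
    perceived, decisions, responses = asyncio.Queue(), asyncio.Queue(), []
    await asyncio.gather(
        perceive(frames, perceived),
        decide(perceived, decisions),
        react(decisions, responses),
    )
    return responses


frames = [
    {"t": 0, "event": False},
    {"t": 1, "event": True},
    {"t": 2, "event": False},
    {"t": 3, "event": True},
]
responses = asyncio.run(run_pipeline(frames))
```

Because each stage only communicates through a queue, a slow reaction step does not stall frame intake, which is the property the description attributes to the decoupled design.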