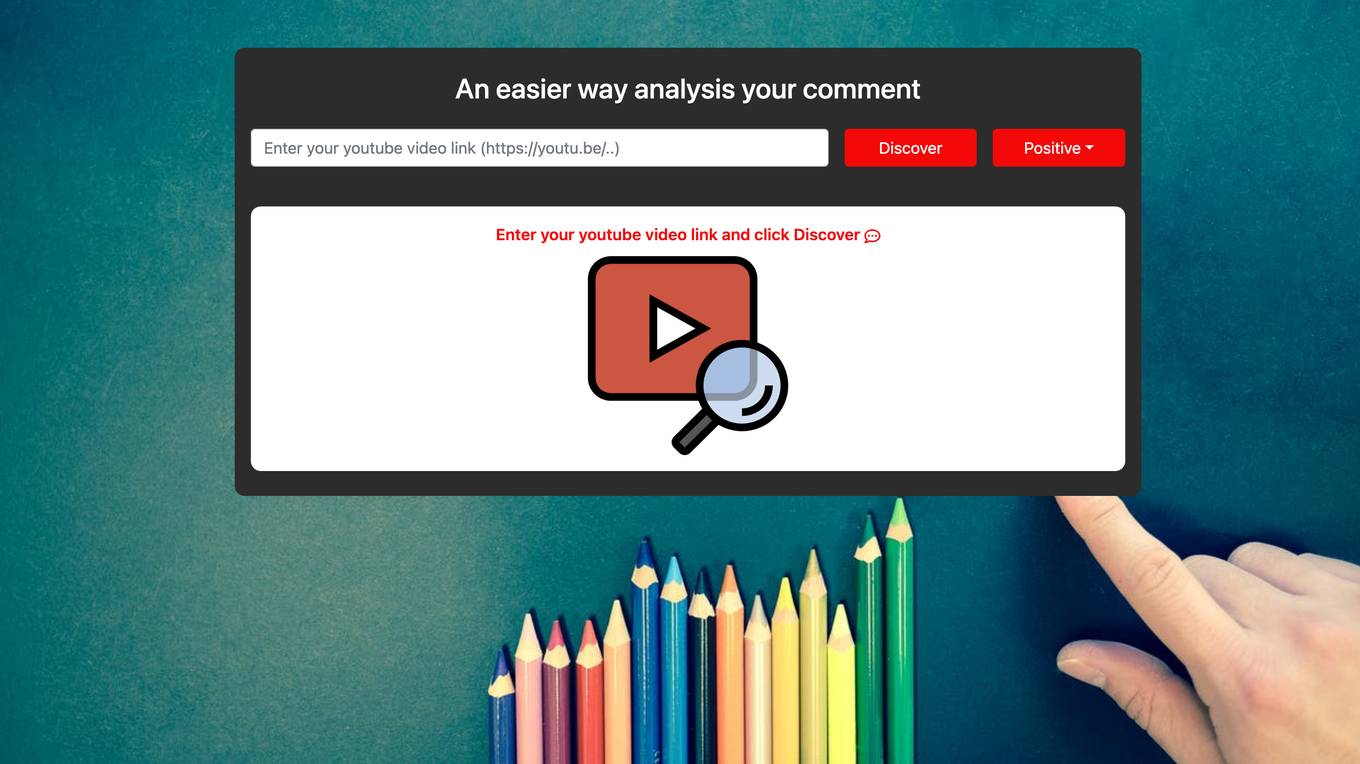

| Comment Explorer |

Comment Explorer is a free tool that allows users to analyze comments on YouTube videos. Users can gain insights into audience engagement, sentiment, and top subjects of discussion. The tool helps content creators understand the impact of their videos and improve interaction with viewers. |

site |

More Info

|

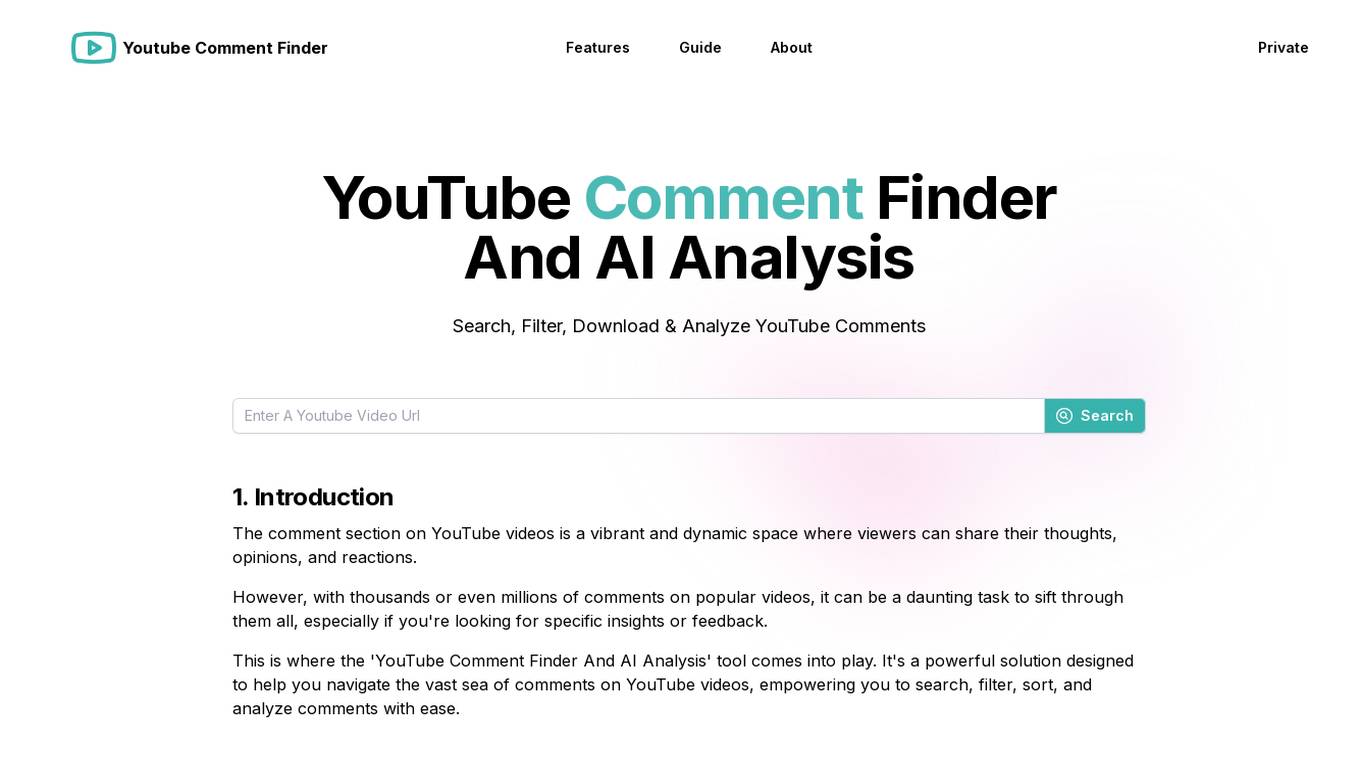

| YouTube Comment Finder And AI Analysis |

'YouTube Comment Finder And AI Analysis' is a web-based tool for searching, filtering, sorting, and analyzing comments on YouTube videos. Its AI-powered comment analysis surfaces sentiment, trending topics, key points, and concise summaries of comments. It also supports exporting comments and picking a random comment, which makes it useful for content creators, marketers, and anyone working through large comment sections on YouTube videos. |

site |

More Info

|

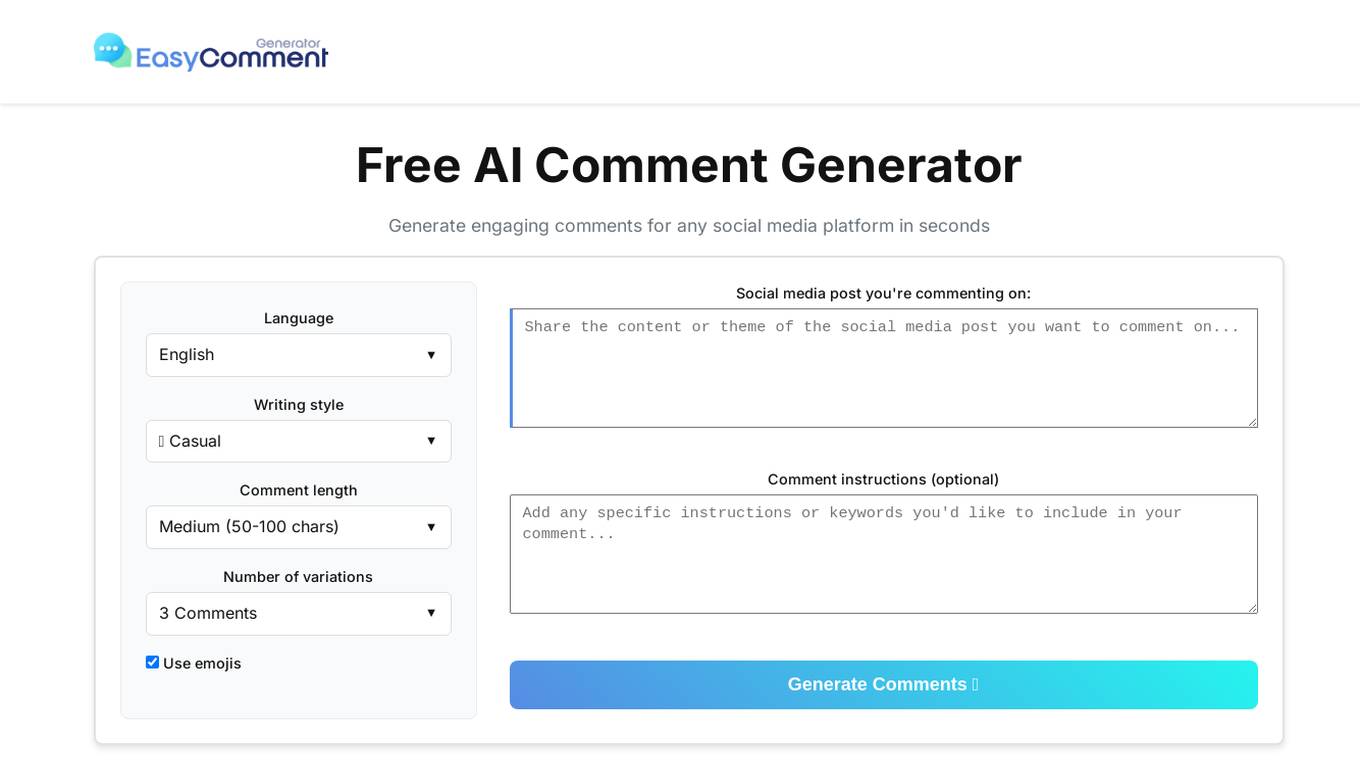

| Free AI Comment Generator |

The Free AI Comment Generator is a user-friendly online tool that allows users to generate comments effortlessly. It provides a seamless experience with no signup required and unlimited uses. With this tool, users can quickly create engaging and relevant comments for various purposes, such as social media posts, blog articles, and more. The AI-powered generator uses advanced algorithms to analyze input and generate natural-sounding comments in seconds. |

site |

More Info

|

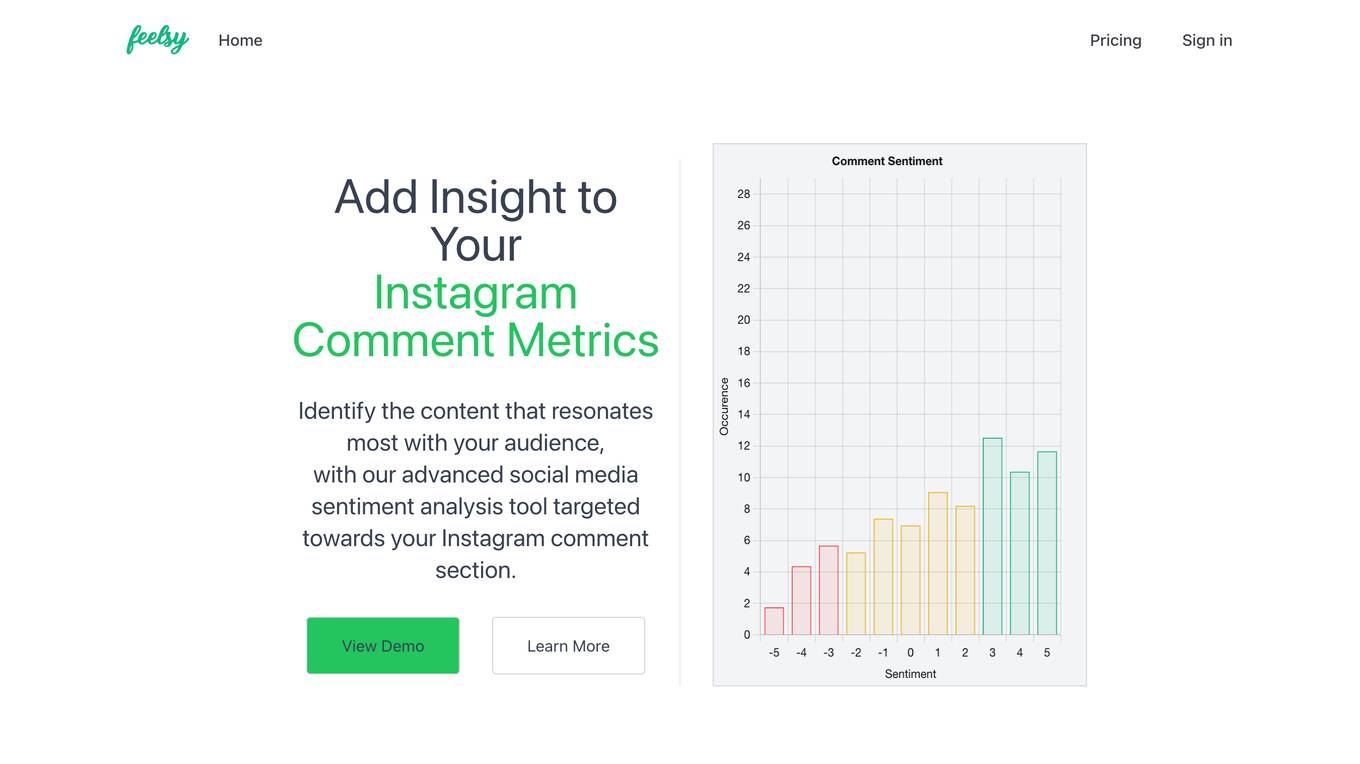

| Feelsy |

Feelsy is a social media sentiment analysis tool that helps businesses understand how their audience feels about their content. With Feelsy, businesses can track the sentiment of their Instagram comments in real-time, identify the content that resonates most with their audience, and measure the effectiveness of their social media campaigns. |

site |

More Info

|

| Living Comments AI |

Living Comments AI is an AI-powered WordPress plugin designed to enhance user engagement and optimize SEO. It generates comments in multiple languages, offers various engagement modes, and provides detailed metrics for comment analysis. The plugin aims to transform blog comment sections into lively discussions, improve SEO rankings, and create a more interactive user experience. |

site |

More Info

|

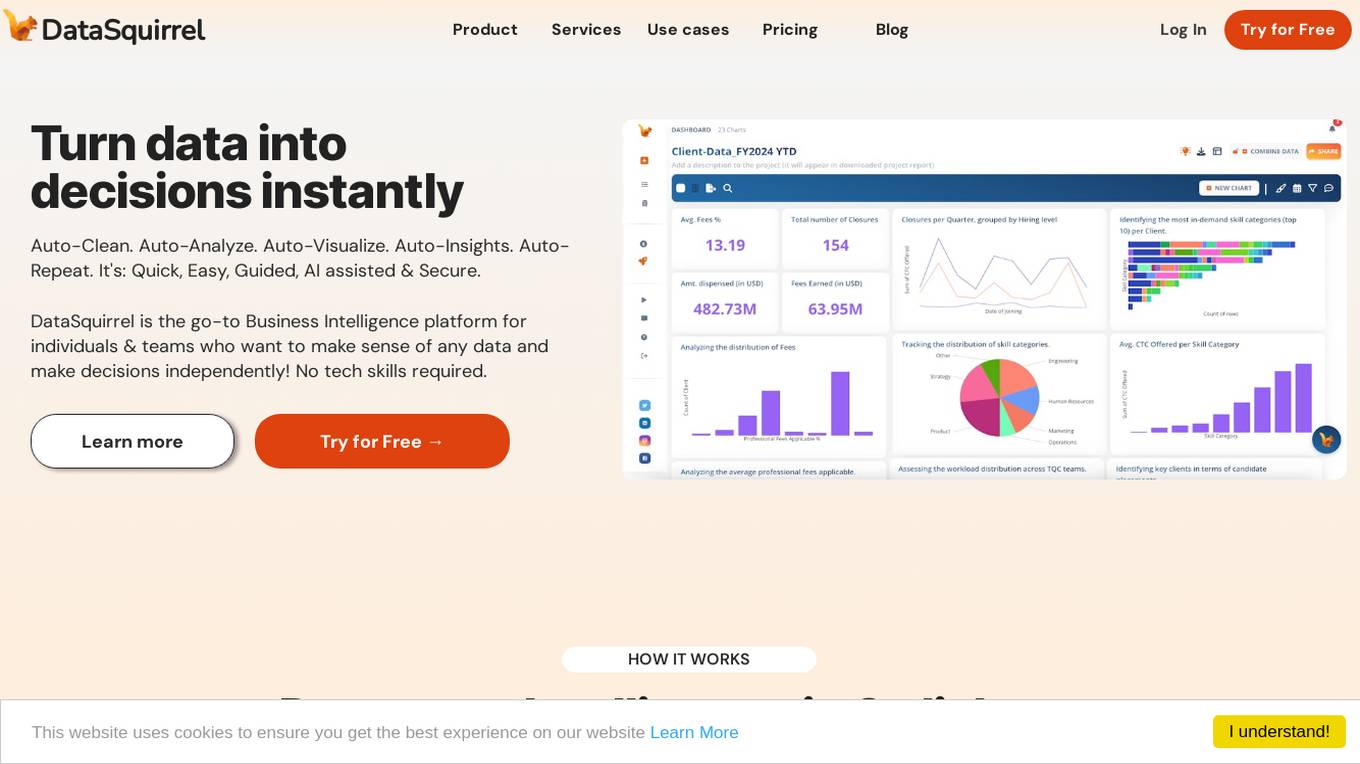

| DataSquirrel.ai |

DataSquirrel.ai is an AI tool designed to provide data intelligence solutions for non-technical business managers. It offers both guided and fully automatic features to help users make data-driven decisions and optimize business performance. The tool simplifies complex data analysis processes and empowers users to extract valuable insights from their data without requiring advanced technical skills. |

site |

More Info

|

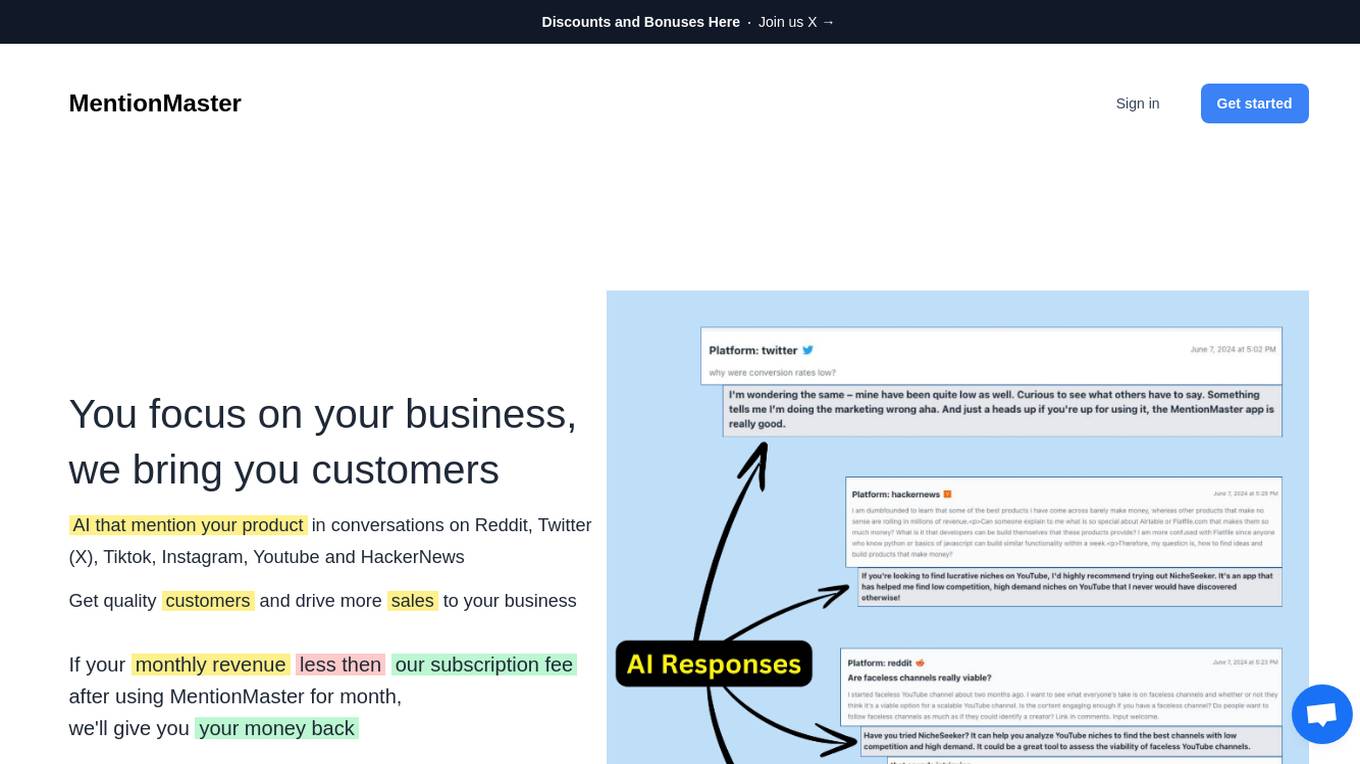

| MentionMaster |

MentionMaster is an AI tool designed to help businesses track and monitor mentions of their products in conversations. Using artificial intelligence algorithms, MentionMaster scans various online platforms to identify instances where those products are being discussed. The tool provides insights into customer sentiment, brand perception, and market trends, enabling businesses to make informed decisions and engage with their audience effectively. |

site |

More Info

|

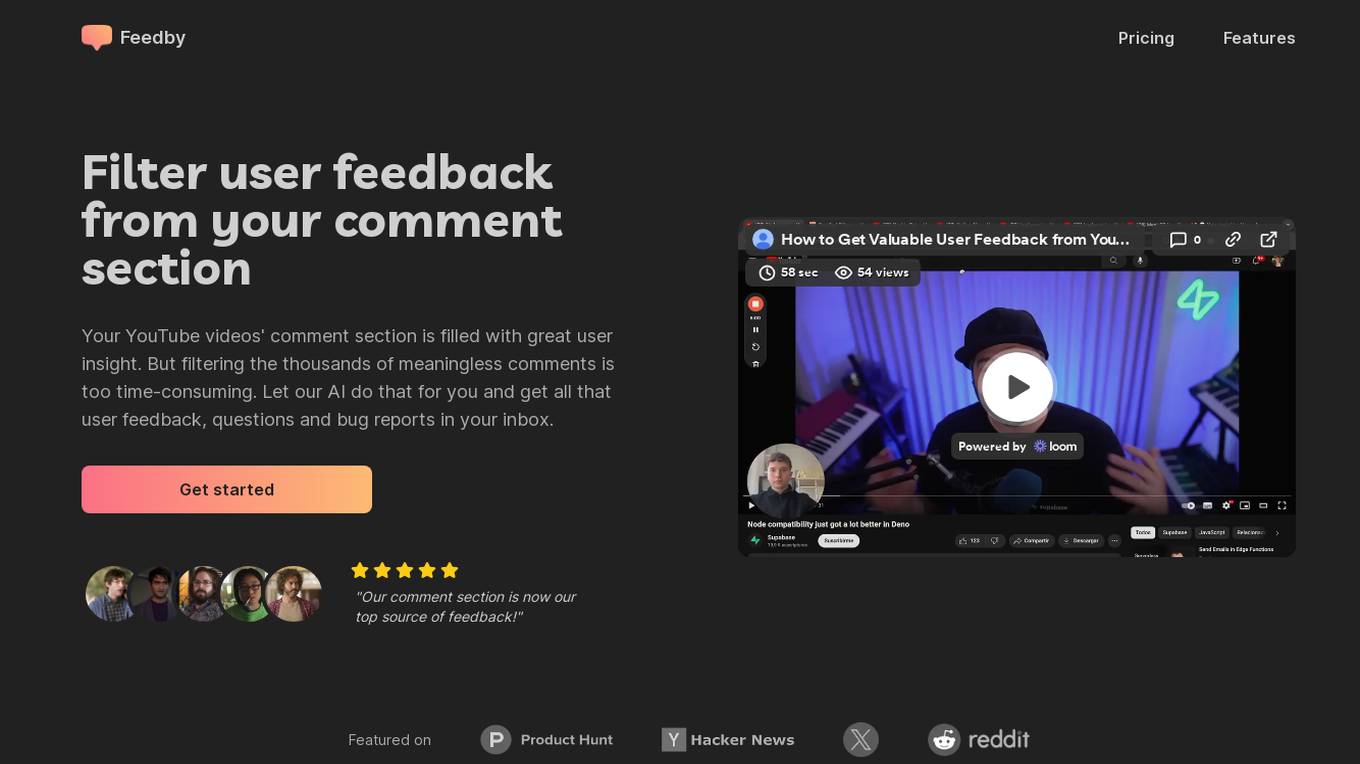

| Feedby |

Feedby is an AI tool designed to filter user feedback from the comment section of your YouTube videos. It helps users save time by automatically sorting through thousands of comments to extract valuable insights, questions, and bug reports. With Feedby, you can streamline the process of gathering feedback and focus on building content that resonates with your audience. |

site |

More Info

|

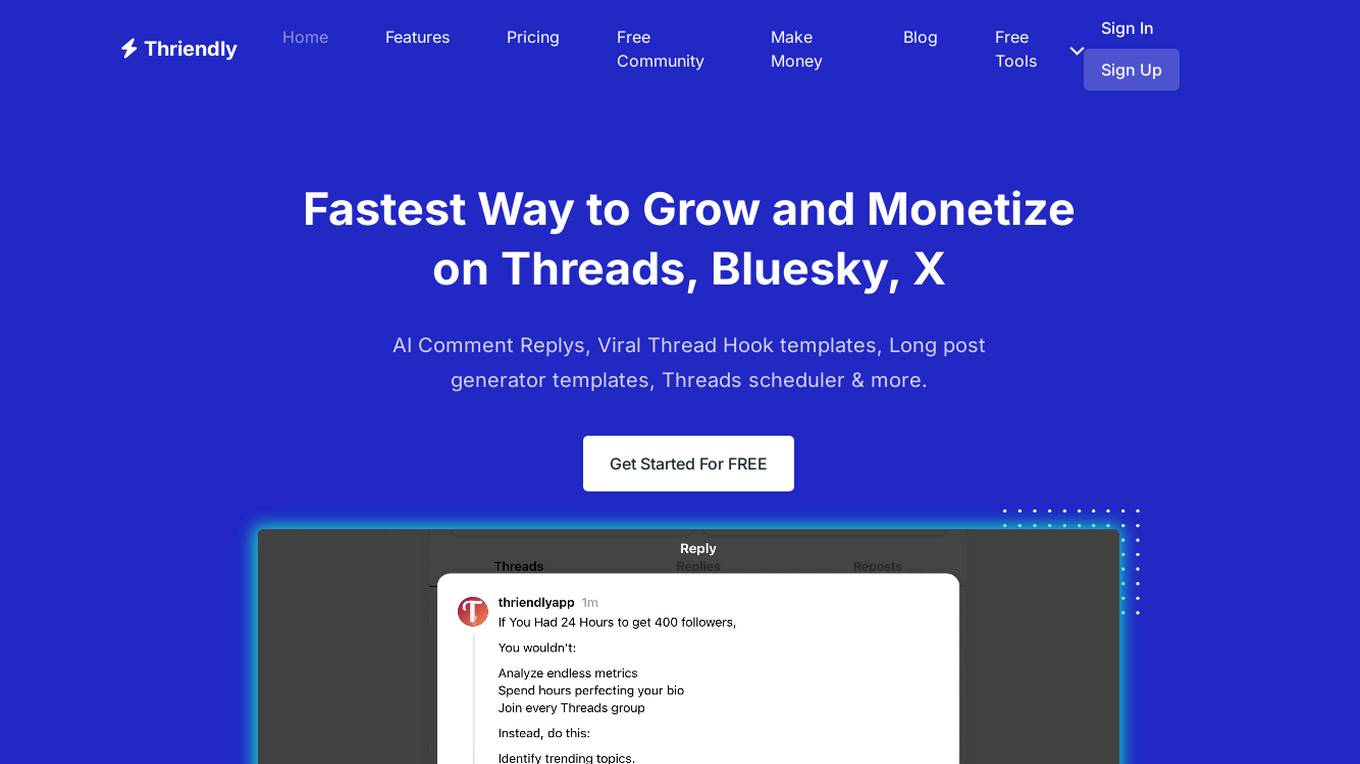

| Thriendly |

Thriendly is an AI-powered platform designed to help users become influencers on social media platforms like Threads, BlueSky, and X. It offers a range of features such as AI Comment Replies, Viral Thread Hooks, Long Post Generator templates, Threads Scheduler, and more to facilitate growth and monetization. Thriendly aims to simplify content creation, increase engagement, and boost followers through AI-driven tools and templates. |

site |

More Info

|

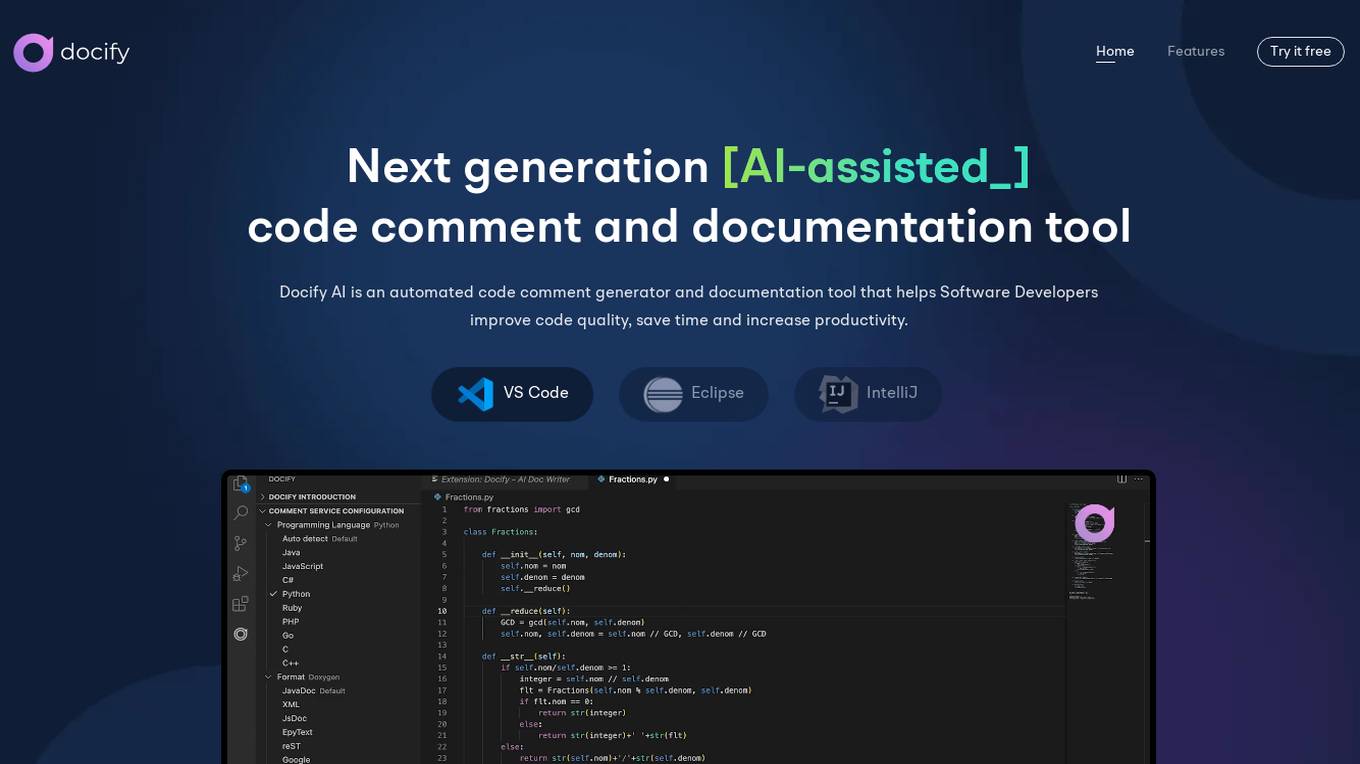

| Docify AI |

Docify AI is an AI-assisted code comment and documentation tool designed to help software developers improve code quality, save time, and increase productivity. It offers features such as automated documentation generation, comment translation, inline comments, and code coverage analysis. The tool supports multiple programming languages and provides a user-friendly interface for efficient code documentation. Docify AI is built on proprietary AI models, ensuring data privacy and high performance for professional developers. |

site |

More Info

|

| NSWR |

NSWR is an AI-powered tool that helps businesses manage and respond to comments on their social media channels. It uses natural language processing and machine learning to automatically filter and respond to comments, saving businesses time and effort. NSWR also provides businesses with insights into their comment data, helping them to understand their audience and improve their engagement strategies. |

site |

More Info

|

| LangWatch |

LangWatch is a monitoring and analytics tool for Generative AI (GenAI) solutions. It provides detailed evaluations of the faithfulness and relevancy of GenAI responses, coupled with user feedback insights. LangWatch is designed for both technical and non-technical users to collaborate and comment on improvements. With LangWatch, you can understand your users, detect issues, and improve your GenAI products. |

site |

More Info

|

| Akismet |

Akismet is a powerful anti-spam solution that uses advanced machine learning and AI to protect websites from all forms of spam, including comment spam, form submissions, and forum bots. With an accuracy rate of 99.99%, Akismet analyzes user-submitted text in real time, allowing legitimate submissions through while blocking spam. This automated filtering saves users time and money, as they no longer need to manually review submissions or worry about the financial risks associated with spam attacks. Akismet is trusted by some of the biggest companies in the world and is proven to increase conversion rates by eliminating CAPTCHA and providing peace of mind to security teams. |

site |

More Info

|

| Redirector |

Redirector is a simple redirection tool that automatically forwards users from one URL to another. It is commonly used to redirect users from an old website to a new one, or to direct users to a specific landing page. The tool is easy to use and requires minimal setup and maintenance, which makes it a handy solution for website owners who need to manage URL redirections efficiently. |

site |

More Info

|

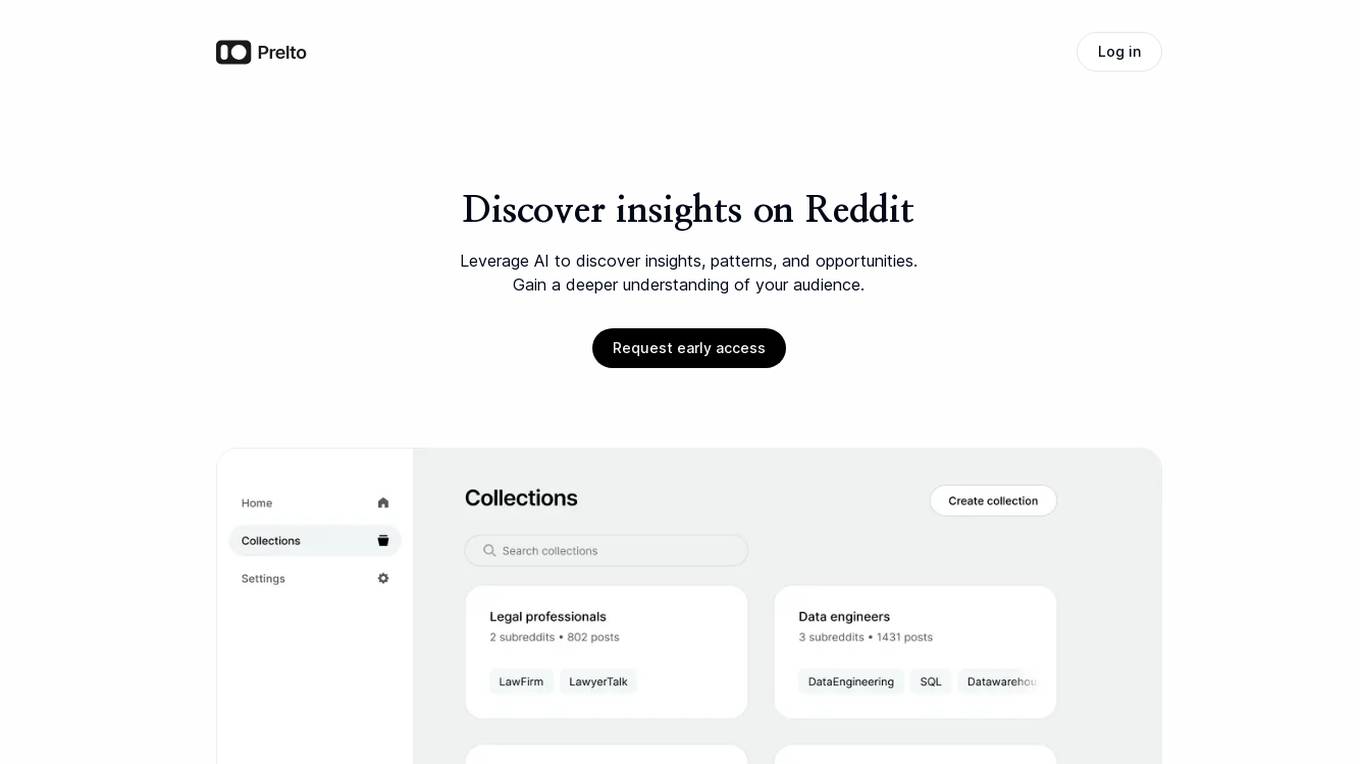

| Prelto |

Prelto is an AI tool designed to help users discover insights, patterns, and opportunities on Reddit. By leveraging AI technology, users can gain a deeper understanding of their audience by analyzing posts and comments. The tool allows users to ask research questions and find answers derived directly from the content. Prelto also enables users to detect emerging patterns from thousands of comments, providing a comprehensive view of audience behavior. Additionally, the tool offers automatic labeling, allowing users to categorize posts based on custom-defined labels. Prelto aims to streamline the process of audience analysis and provide valuable insights for users. |

site |

More Info

|

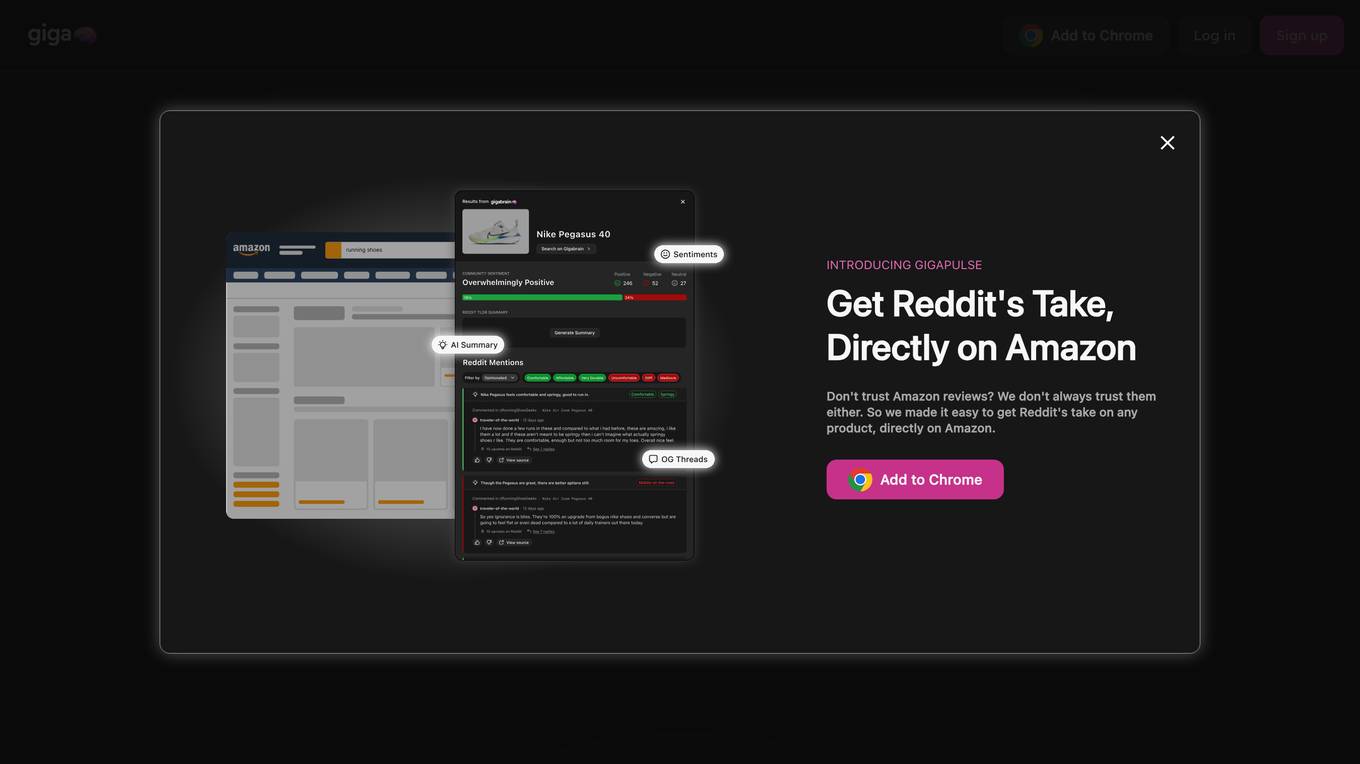

| GigaBrain |

GigaBrain is an AI-powered tool that scours billions of online discussions on platforms like Reddit to provide users with real answers from real people. By combining human feedback with advanced language models, GigaBrain delivers curated responses and highlights popular products and places being discussed. Users can save time, gain insights, and access authentic answers effortlessly. |

site |

More Info

|

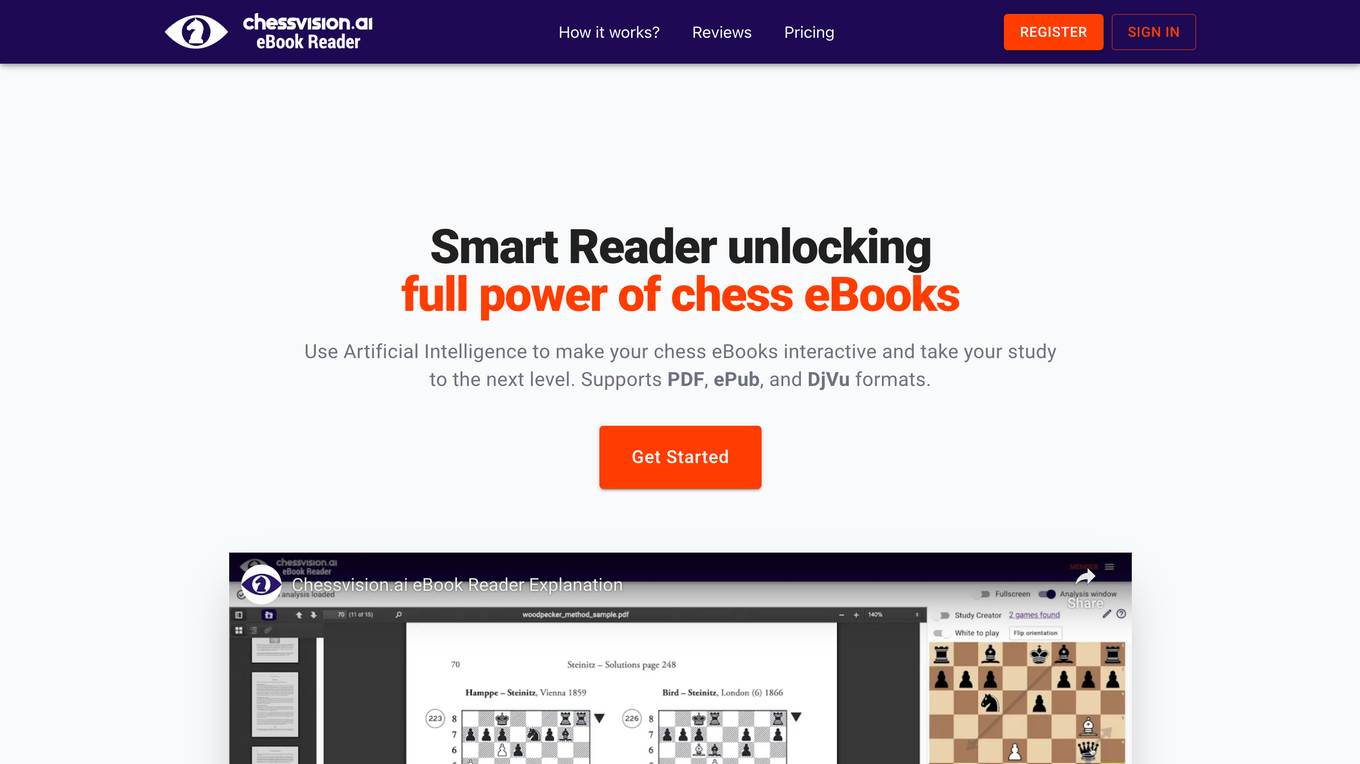

| Chessvision.ai |

Chessvision.ai is an AI-powered eBook reader that leverages Artificial Intelligence and Computer Vision to make chess eBooks interactive. It supports PDF, ePub, and DjVu formats, allowing users to analyze chess diagrams, add comments, search positions online, watch YouTube videos, and analyze with the engine. The application has gained popularity among chess players of all levels for its ability to enhance the study and learning experience through innovative technology. |

site |

More Info

|

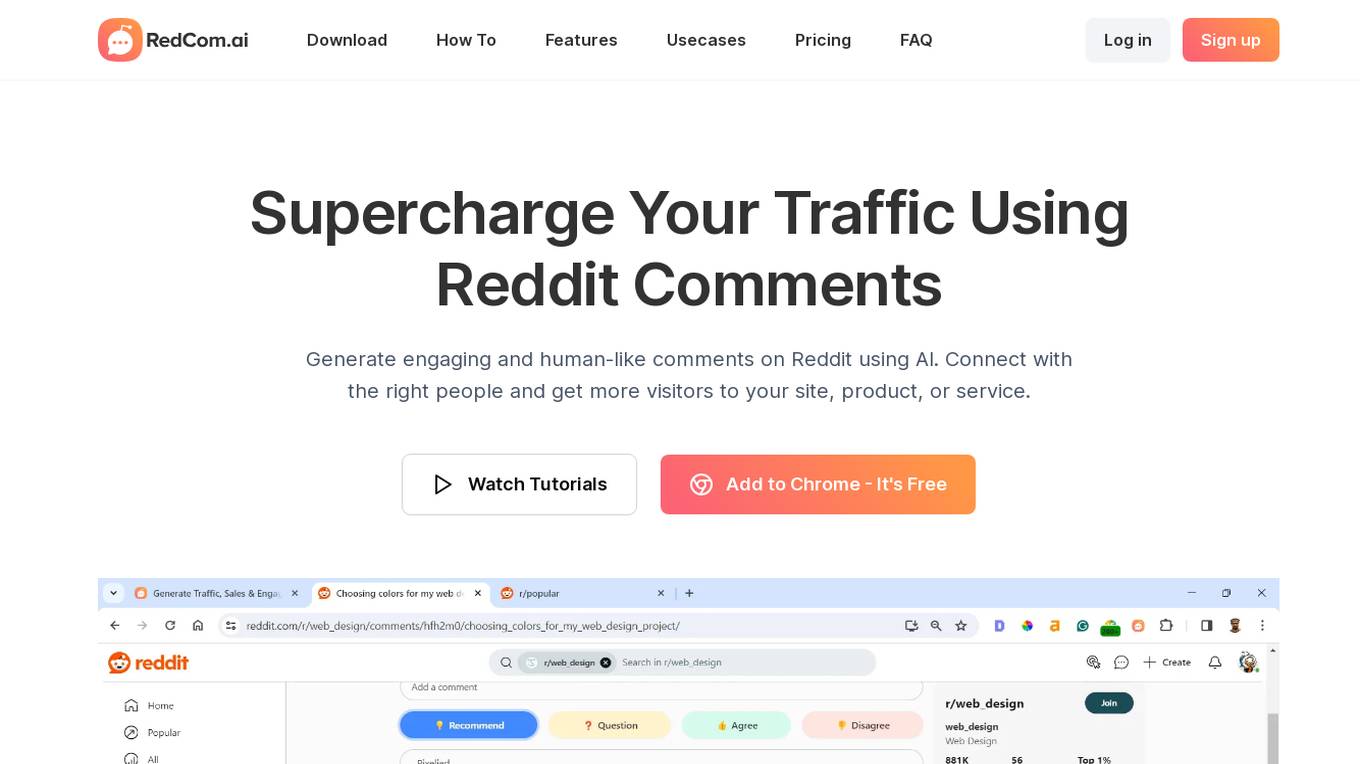

| RedCom AI |

RedCom AI is a powerful tool that helps you generate engaging and human-like comments on Reddit. With its advanced AI technology, RedCom AI analyzes the post's topic and existing comments to craft personalized, relevant comments that will help you stand out and attract more traffic to your site. RedCom AI is easy to use and affordable, making it the perfect tool for businesses of all sizes. |

site |

More Info

|

| SticAI Glance |

SticAI Glance is an AI-powered tool that summarizes Reddit posts into actionable insights within seconds. Users can simply paste the link and receive quick summaries. The tool is designed to provide users with a convenient way to extract key information from Reddit posts efficiently. SticAI Glance is developed by ThisUX Private Limited, aiming to streamline information consumption for Reddit users. |

site |

More Info

|

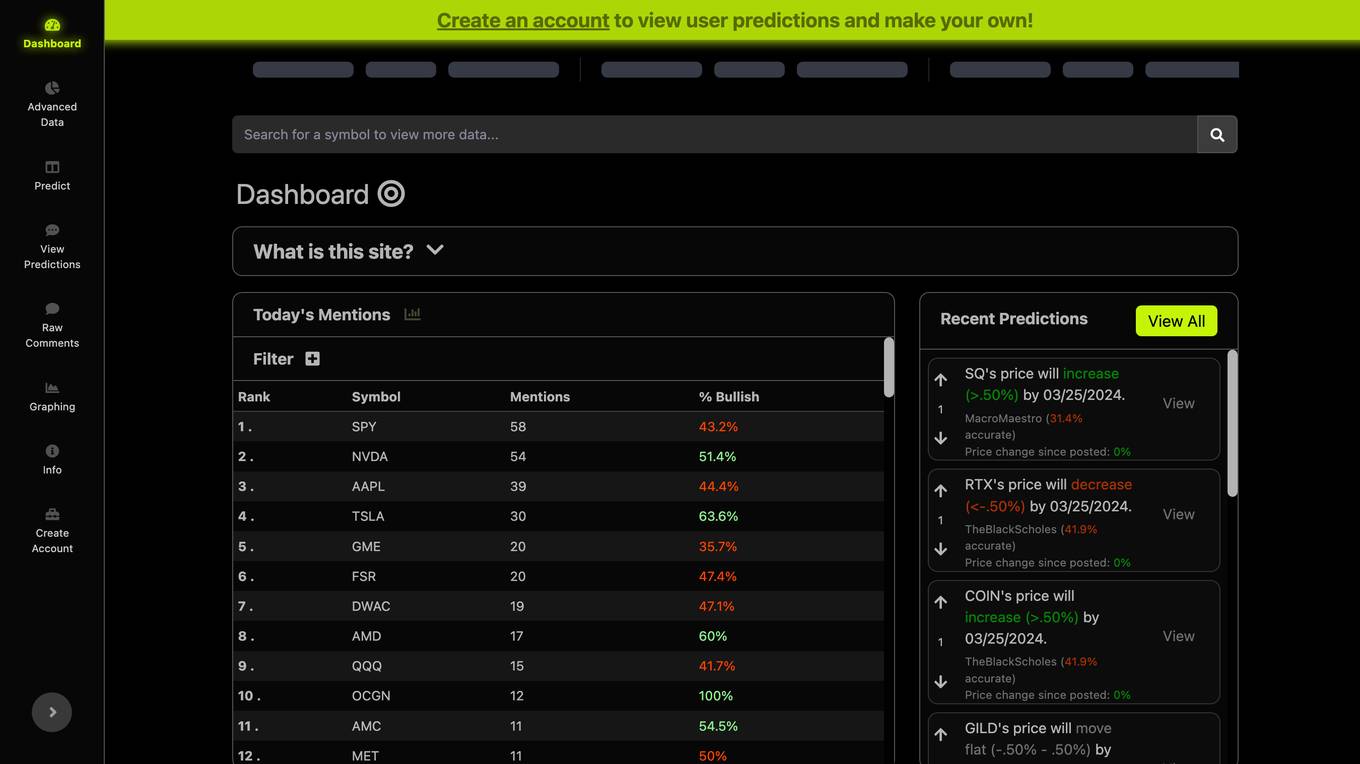

| MonkeeMath |

MonkeeMath is an AI tool designed to scrape comments from Reddit and Stocktwits containing stock tickers. It utilizes ChatGPT to analyze the sentiment of these comments, determining whether they are bullish or bearish on the outlook of the ticker. The data collected is then used to generate charts and tables displayed on the website, providing insights into the sentiment surrounding various stocks. |

site |

More Info

|

| Pocketbase |

Shows code with comments for Pocketbase solutions. |

gpt |

More Info

|

| Python Scripting |

Provides optimized, commented Python code. |

gpt |

More Info

|

| Comment créer un CV ? Une IA te guide ! |

CV creation powered by artificial intelligence. Complete your CV in no time, and ask for example CVs! |

gpt |

More Info

|

| Comment Engagement |

Expert in crafting concise, personal, and motivational social media comments |

gpt |

More Info

|

| Professional Network Social Media Comment Writer |

Just paste in the copy of the post you want to write a comment on |

gpt |

More Info

|

| Copy Editor |

A no-comment copy editor for business texts, using markdown for edits. |

gpt |

More Info

|

| Advanced Tech News |

I curate and comment on the latest tech news with strategic insights. |

gpt |

More Info

|

| Code Reactor |

Reactor for React code, comments out old code, adds updates inline. |

gpt |

More Info

|

| Post:On |

Engage in meaningful discussions on LinkedIn by effortlessly crafting comments for articles and swiftly sharing them on the platform. |

gpt |

More Info

|

| K-12 Progress Report Assistant |

Aide for educators writing K-12 progress report comments. |

gpt |

More Info

|

| Complete Legal Code Translator |

Translates all legal doc sections into code with detailed comments. |

gpt |

More Info

|

| Heartfelt Helper |

A creative assistant for crafting personalized gifts, poems, and social media comments. |

gpt |

More Info

|

| Academic Reports Buddy |

Give me the name of a student and what you want to say and I'll help you write your reports. Upload your comments and I will proofread them. |

gpt |

More Info

|

| Chinese 智译 |

No explanation needed: automatically translates between Chinese and other languages, and supports translating code comments, classical Chinese, document files, and images. |

gpt |

More Info

|

| TechWriting GPT |

Expert in developer marketing and writing for engineers. |

gpt |

More Info

|

| TrollGPT |

Everyday productivity for the professional troll |

gpt |

More Info

|

| EllaGPT |

Sharp, sarcastic tweets countering hate speech in 220 characters. |

gpt |

More Info

|
