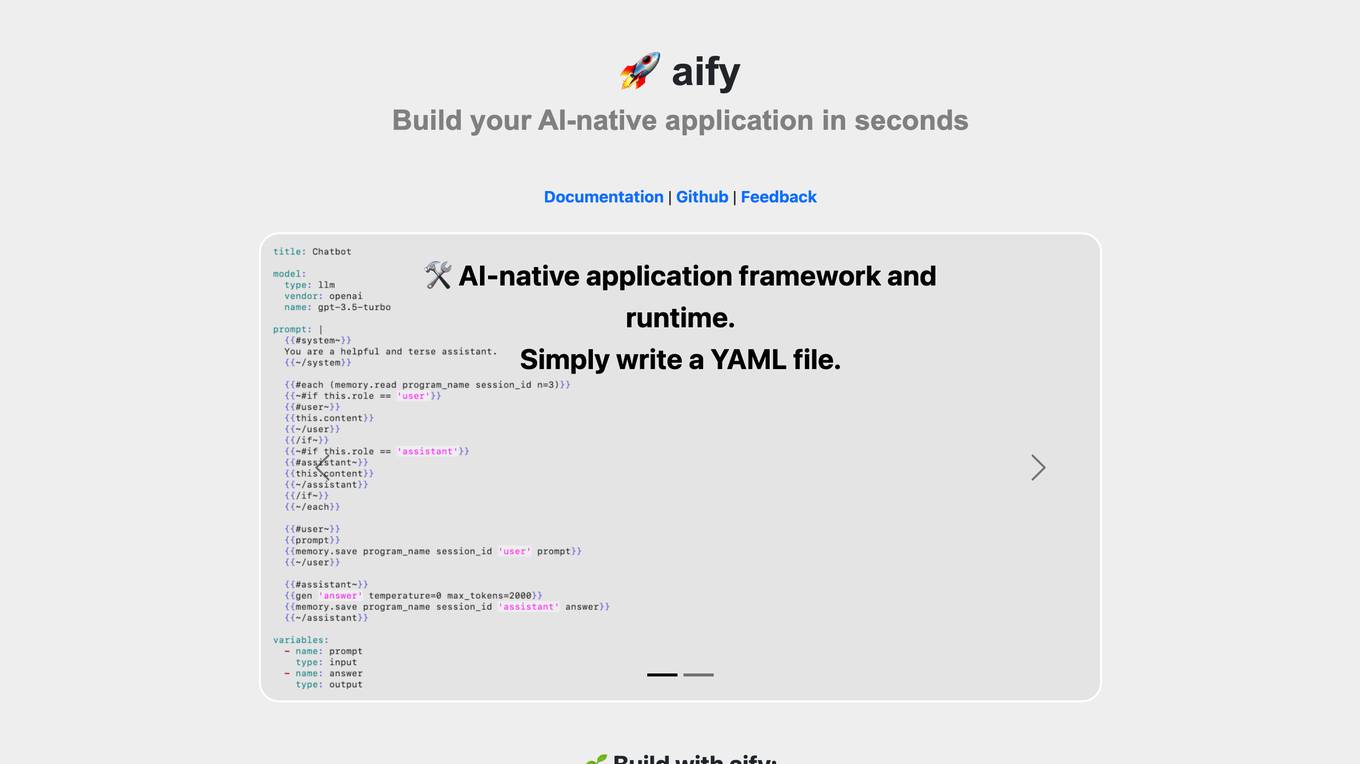

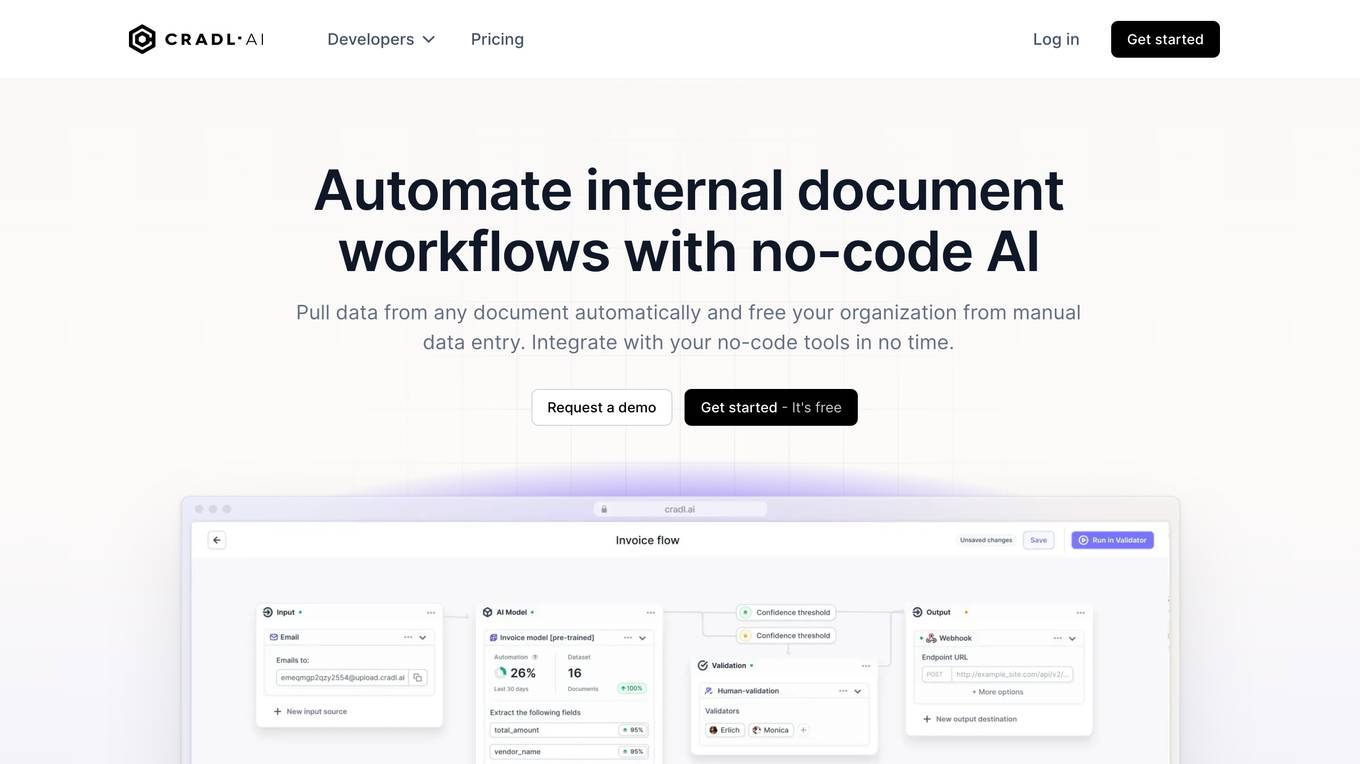

Local AI Playground

Experiment with AI offline, in private. No GPU required!

Description:

Local AI Playground is a free, open-source native app that simplifies AI model management, verification, and inferencing, offline and in private, without the need for a GPU. Its Rust backend keeps the app memory efficient and compact. You can use it to power AI apps offline or online and keep track of all your AI models in one central location. It also verifies downloaded models against known digests to confirm their integrity.
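The digest check described above can be illustrated with a short sketch. This is not the app's Rust implementation: it is a minimal Python example that streams a model file through SHA-256 from the standard library (used here as a stand-in; BLAKE3, which the app uses for its quick check, needs the third-party `blake3` package) and compares the result with a known-good hex digest. The file name and expected digest in the usage block are hypothetical.

```python
import hashlib
from pathlib import Path

CHUNK_SIZE = 1 << 20  # read 1 MiB at a time so multi-GB model files never sit fully in memory


def file_digest(path: Path, algorithm: str = "sha256") -> str:
    """Return the hex digest of a file, hashing it chunk by chunk."""
    h = hashlib.new(algorithm)
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(CHUNK_SIZE), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_model(path: Path, expected_hex: str, algorithm: str = "sha256") -> bool:
    """True if the file matches a known-good digest (hex comparison is case-insensitive)."""
    return file_digest(path, algorithm) == expected_hex.strip().lower()


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as d:
        model = Path(d) / "model.bin"  # hypothetical downloaded model
        model.write_bytes(b"fake weights")
        known_good = hashlib.sha256(b"fake weights").hexdigest()
        print(verify_model(model, known_good))  # matching digest
        print(verify_model(model, "0" * 64))    # tampered or corrupt download
```

Hashing in fixed-size chunks is the important design choice: quantized model files are often several gigabytes, and reading them whole just to verify them would defeat the goal of staying memory efficient.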

Features

- CPU Inferencing
  - Adapts to available threads
  - GGML quantization q4, 5.1, 8, f16
- Model Management
  - Resumable, concurrent downloader
  - Usage-based sorting
  - Directory agnostic
- Digest Verification
  - Digest compute
  - Known-good model API
  - License and Usage chips
  - BLAKE3 quick check
  - Model info card
- Inferencing Server
  - Streaming server
  - Quick inference UI
  - Writes to .mdx
  - Inference params
  - Remote vocabulary
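A resumable downloader typically works by asking the server only for the bytes it is still missing. The sketch below assumes the model host honours HTTP `Range` requests; it uses only `urllib` from the Python standard library, covers resuming but not concurrency, and its function names are hypothetical rather than part of the app's API.

```python
import shutil
import urllib.error
import urllib.request
from pathlib import Path


def resume_offset(dest: Path) -> int:
    """Bytes already on disk from an earlier, interrupted attempt (0 if none)."""
    return dest.stat().st_size if dest.exists() else 0


def build_request(url: str, offset: int) -> urllib.request.Request:
    """Request only the missing tail of the file via an HTTP Range header."""
    headers = {"Range": f"bytes={offset}-"} if offset > 0 else {}
    return urllib.request.Request(url, headers=headers)


def resume_download(url: str, dest: Path) -> None:
    """Continue a partial download of `url` into `dest`, or start it fresh."""
    offset = resume_offset(dest)
    try:
        with urllib.request.urlopen(build_request(url, offset)) as resp:
            # 206 Partial Content: the server honoured the range, so append.
            # 200 OK: the server ignored the range, so rewrite from byte 0.
            mode = "ab" if resp.status == 206 else "wb"
            with dest.open(mode) as f:
                shutil.copyfileobj(resp, f)
    except urllib.error.HTTPError as err:
        # 416 Range Not Satisfiable usually means the file is already complete.
        if err.code != 416:
            raise
```

Falling back to a full rewrite on a `200 OK` matters: a server that ignores `Range` sends the whole file, and appending it to the partial copy would corrupt the model, which a subsequent digest check would then reject.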

Advantages

- Free and open-source

- Simplifies the whole process of AI management, verification, and inferencing

- No GPU required

- Powerful native app with a Rust backend

- Memory efficient and compact

- Available for Mac M2, Windows, and Linux .deb

- Supports CPU Inferencing

- Supports GGML quantization

- Provides Model Management features

- Offers Digest Verification to ensure the integrity of downloaded models

- Allows you to start a local streaming server for AI inferencing in 2 clicks
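CPU inferencing that "adapts to available threads" generally means sizing the worker pool from the cores the process can actually use. The app's exact heuristic is not documented here, so this Python sketch is an assumption: it counts usable CPUs (honouring the affinity mask where the OS exposes it) and leaves one core free for the UI, with `reserve` and `requested` as hypothetical knobs.

```python
import os
from typing import Optional


def available_cpus() -> int:
    """CPUs this process may run on, respecting affinity masks where supported."""
    if hasattr(os, "sched_getaffinity"):  # Linux and some other Unix systems
        return len(os.sched_getaffinity(0))
    return os.cpu_count() or 1  # cpu_count() may return None


def inference_threads(requested: Optional[int] = None, reserve: int = 1) -> int:
    """Pick a thread count for CPU inference (hypothetical heuristic).

    `reserve` keeps cores free for the rest of the app; `requested`, if given,
    caps the result. The answer is always at least 1.
    """
    n = max(1, available_cpus() - reserve)
    if requested is not None:
        n = max(1, min(n, requested))
    return n


if __name__ == "__main__":
    print(f"usable CPUs: {available_cpus()}, inference threads: {inference_threads()}")
```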

Disadvantages

- May not be suitable for complex AI models that require significant computational resources

- Limited features compared to cloud-based AI platforms

- Requires technical knowledge to set up and use

Frequently Asked Questions


Q: What is Local AI Playground?

A: Local AI Playground is a free, open-source native app that simplifies AI model management, verification, and inferencing, offline and in private, without the need for a GPU.

Q: What are the benefits of using Local AI Playground?

A: It is free and open-source, simplifies the whole process of AI management, verification, and inferencing, and does not require a GPU. It is also a powerful native app that is memory efficient and compact.

Q: What are the features of Local AI Playground?

A: Its features fall into four groups: CPU inferencing (adapts to available threads; GGML quantization q4, 5.1, 8, and f16), model management (a resumable, concurrent downloader; usage-based sorting; directory-agnostic storage), digest verification (digest computation, a known-good model API, license and usage chips, a BLAKE3 quick check, and a model info card), and an inferencing server (a streaming server, a quick inference UI, output written to .mdx, inference parameters, and remote vocabulary).

Q: What are the advantages of using Local AI Playground?

A: It is free and open-source, simplifies AI management, verification, and inferencing, and runs without a GPU. Its native app has a Rust backend that is memory efficient and compact. It supports CPU inferencing and GGML quantization, provides model management, verifies the integrity of downloaded models with digests, and can start a local streaming server for AI inferencing in two clicks.

Q: What are the disadvantages of using Local AI Playground?

A: It may not be suitable for complex AI models that require significant computational resources, has limited features compared to cloud-based AI platforms, and requires technical knowledge to set up and use.

Alternative AI tools for Local AI Playground

For similar jobs

TextSynth

Provides access to large language models and text-to-image models through a REST API and a playground.

Turing.School

Learn to code by solving real-world problems using AI-generated exercises.
