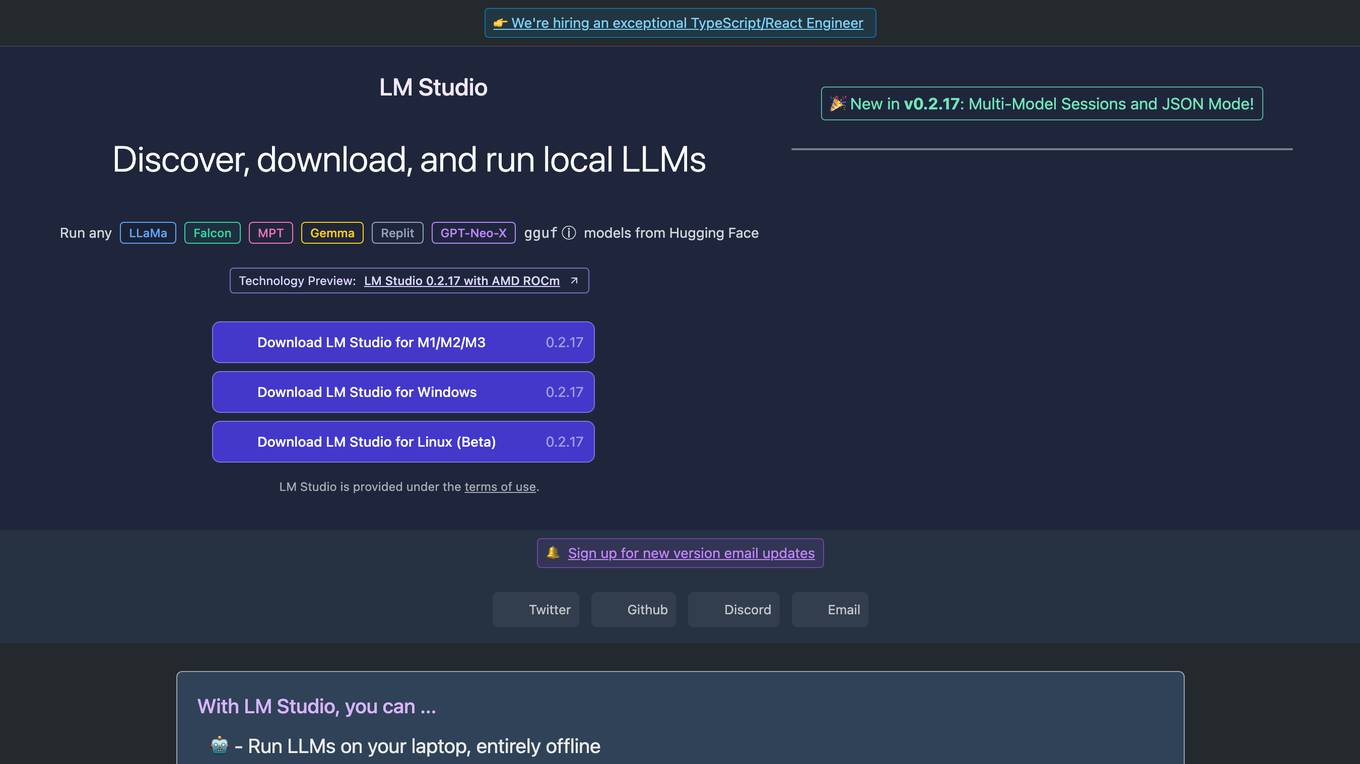

LM Studio

Discover, download, and run local LLMs

Monthly visits: 539,949

Description:

LM Studio is a desktop application for discovering, downloading, and running local Large Language Models (LLMs) on your own computer. With LM Studio, users can run LLMs entirely offline, use models through the in-app Chat UI or an OpenAI-compatible local server, download compatible model files from Hugging Face repositories, and discover new and noteworthy LLMs on the app's home page. LM Studio supports any ggml Llama, MPT, and StarCoder model on Hugging Face (Llama 2, Orca, Vicuna, Nous Hermes, WizardCoder, MPT, etc.).


Features

- Run LLMs on your laptop, entirely offline

- Use models through the in-app Chat UI or an OpenAI-compatible local server

- Download any compatible model files from Hugging Face repositories

- Discover new and noteworthy LLMs on the app's home page

- Supports any ggml Llama, MPT, and StarCoder model on Hugging Face
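Because the local server speaks the OpenAI chat-completions format, any HTTP client can talk to it. The sketch below uses only the Python standard library. It assumes the server is running on `http://localhost:1234/v1` and exposes the OpenAI-style `/chat/completions` route; check the address shown in the app before relying on it. The helper names `build_chat_payload` and `chat`, and the model identifier `"local-model"`, are hypothetical placeholders.

```python
import json
import urllib.request

# Assumption: the LM Studio local server listens here; change it to match
# the address shown in the app's server panel.
BASE_URL = "http://localhost:1234/v1"


def build_chat_payload(model: str, user_message: str, temperature: float = 0.7) -> dict:
    """Build an OpenAI-style chat completion request body (hypothetical helper)."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": user_message},
        ],
        "temperature": temperature,
    }


def chat(model: str, user_message: str, base_url: str = BASE_URL, timeout: float = 60.0) -> str:
    """POST one chat request to the local server and return the reply text."""
    payload = build_chat_payload(model, user_message)
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # OpenAI-style responses carry the generated text in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With a model loaded and the server started, a call such as `chat("local-model", "Summarize what a GGML file is.")` should return the model's reply as a string. Because the request never leaves `localhost`, this keeps the same offline, on-device behavior as the in-app Chat UI.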

Advantages

- Privacy: Your data remains private and local to your machine

- Convenience: Run LLMs offline, without the need for an internet connection

- Customization: Use your own compatible model files and choose the models that fit your specific needs

- Cost-effective: Free for personal use

- Supports a wide range of LLMs: Choose from a variety of LLMs available on Hugging Face

Disadvantages

- Limited functionality compared to cloud-based LLMs

- Requires a powerful computer to run LLMs effectively

- Use at work is not covered by default; businesses must submit the LM Studio @ Work request form

Frequently Asked Questions


Q: Does LM Studio collect any data?

A: No. One of the main reasons for using a local LLM is privacy, and LM Studio is designed with that in mind. Your data remains private and local to your machine.

Q: Can I use LM Studio at work?

A: Please fill out the LM Studio @ Work request form and we will get back to you as soon as we can. Please allow us some time to respond.

Q: What are the minimum hardware / software requirements?

A:

- Apple Silicon Mac (M1/M2/M3) with macOS 13.6 or newer

- Windows / Linux PC with a processor that supports AVX2 (typically newer PCs)

- 16GB+ of RAM is recommended; for PCs, 6GB+ of VRAM is recommended

- NVIDIA/AMD GPUs supported

Q: Are you hiring?

A: Yes! See our careers page for open positions. We are a small team located in Brooklyn, New York, USA.

Alternative AI tools for LM Studio

For similar tasks

Raz

Automate customer conversations. Convert leads into customers, then lifetime buyers with the first truly white-glove, conversational AI experience.
