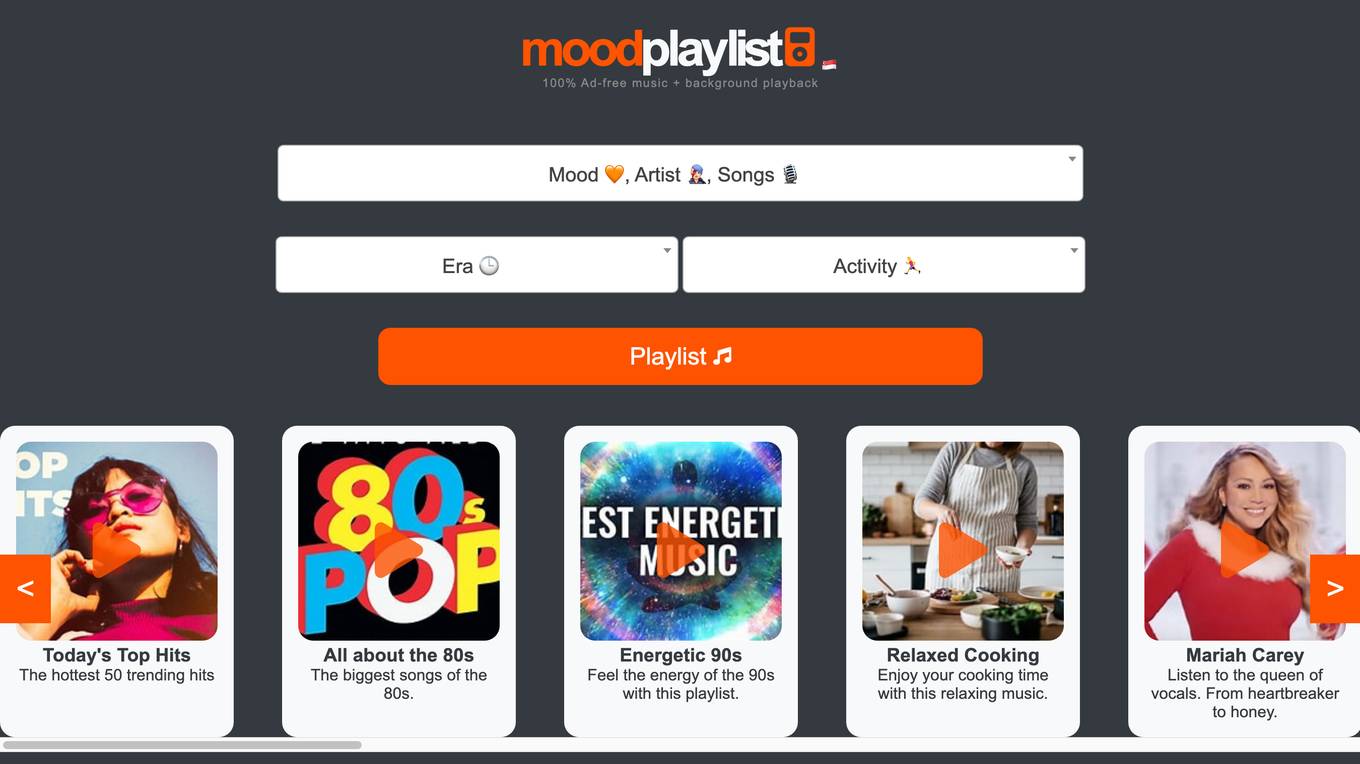

EDGE

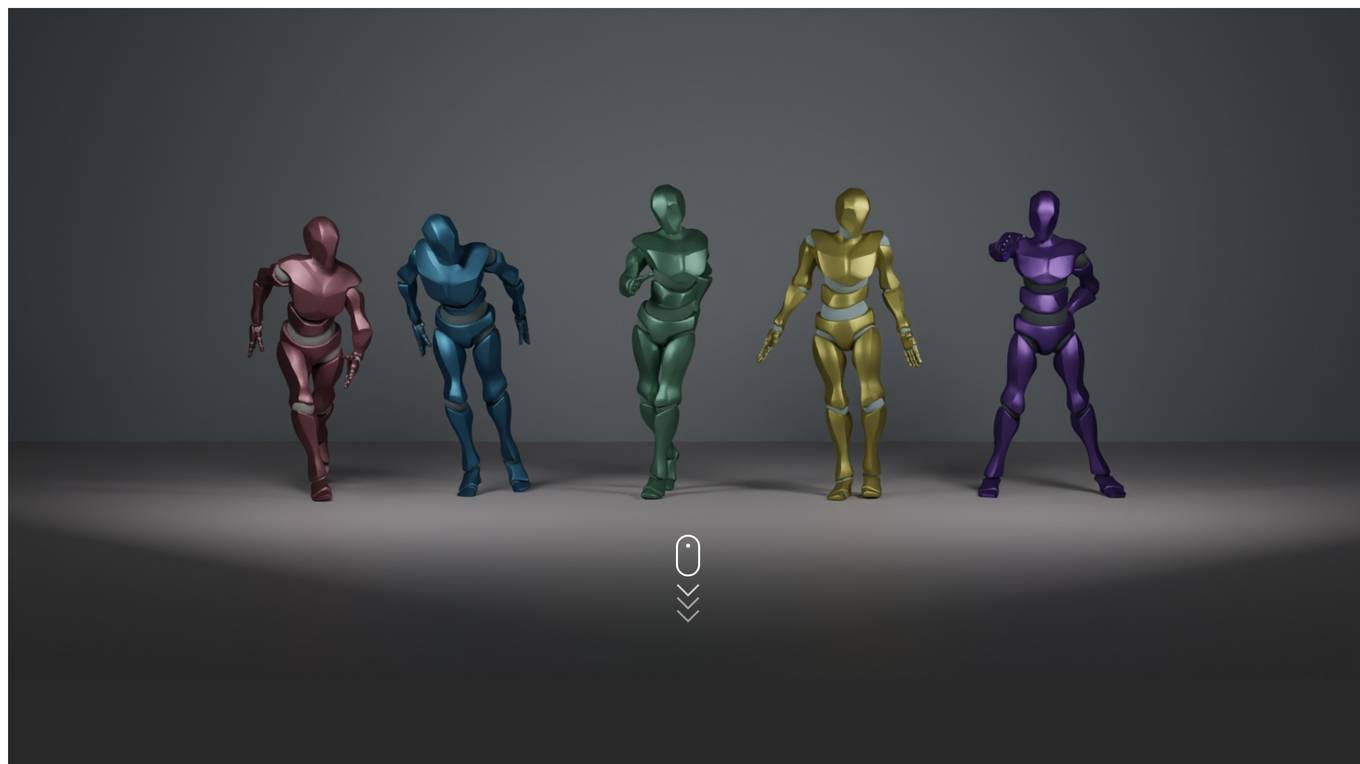

Editable Dance Generation from Music

Description:

EDGE is a method for editable dance generation that creates realistic, physically plausible dances while remaining faithful to arbitrary input music. It pairs a transformer-based diffusion model with Jukebox, a strong music feature extractor, and offers editing capabilities well suited to dance, including joint-wise conditioning, motion in-betweening, and dance continuation. Music embeddings from Jukebox give the model a broad understanding of music, so it can produce high-quality dances even for in-the-wild music samples.

The pipeline works as follows: a frozen Jukebox model encodes the input music into embeddings, and a conditional diffusion model learns to map those embeddings to a series of 5-second dance clips. Although EDGE is trained on 5-second clips, it can generate dances of any length: at inference time, temporal constraints are applied to batches of multiple clips to enforce temporal consistency before the clips are stitched into an arbitrary-length full video.
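The stitching idea can be illustrated with a minimal NumPy sketch. It is not EDGE's implementation: random arrays stand in for generated clips, and the frame count per clip (150), the pose dimension (8), and the half-clip overlap are illustrative assumptions. The description above says only that temporal constraints are applied to batches of clips. It does not say how or when they are applied during sampling, so the helper names `enforce_overlap` and `stitch` are hypothetical.

```python
import numpy as np

def enforce_overlap(clips: np.ndarray, overlap: int) -> np.ndarray:
    """Force the first `overlap` frames of each clip to equal the last
    `overlap` frames of the previous clip (an assumed temporal constraint)."""
    out = clips.copy()
    for i in range(1, len(out)):
        out[i, :overlap] = out[i - 1, -overlap:]
    return out

def stitch(clips: np.ndarray, overlap: int) -> np.ndarray:
    """Concatenate clips into one sequence, dropping the duplicated overlap."""
    parts = [clips[0]] + [clip[overlap:] for clip in clips[1:]]
    return np.concatenate(parts, axis=0)

# Placeholder batch: 4 clips x 150 frames x 8 pose features (all assumed values).
rng = np.random.default_rng(0)
batch = rng.normal(size=(4, 150, 8))

constrained = enforce_overlap(batch, overlap=75)
dance = stitch(constrained, overlap=75)
print(dance.shape)  # (375, 8)
```

With a half-clip overlap, each additional clip contributes only its second half, so four 150-frame clips yield 150 + 3 × 75 = 375 frames.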


Features

- Generates realistic, physically plausible dances

- Remains faithful to arbitrary input music

- Uses a transformer-based diffusion model paired with Jukebox

- Confers powerful editing capabilities well-suited to dance

- Supports arbitrary spatial and temporal constraints

Advantages

- Can create dances of any length

- Can generate dances subject to joint-wise constraints

- Can generate dances that start and end with prespecified motions (motion in-betweening)

- Can generate dances that start with a prespecified motion (dance continuation)

- Avoids unintentional foot sliding and is trained with physical realism in mind
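The constraints listed above can be thought of as masks over a motion array of shape (frames, features). The sketch below uses a common masking approach from diffusion-based inpainting, in which known values overwrite the generated sample wherever the mask is set. This is an assumption about the general technique, not a description of EDGE's code. The helper names, array sizes, and column indices are hypothetical.

```python
import numpy as np

def temporal_mask(frames: int, dim: int, start: int = 0, end: int = 0) -> np.ndarray:
    """Mark the first `start` and last `end` frames as constrained."""
    mask = np.zeros((frames, dim), dtype=bool)
    if start > 0:
        mask[:start] = True
    if end > 0:
        mask[-end:] = True
    return mask

def joint_mask(frames: int, dim: int, columns: list[int]) -> np.ndarray:
    """Mark selected feature columns (standing in for joints) in every frame."""
    mask = np.zeros((frames, dim), dtype=bool)
    mask[:, columns] = True
    return mask

def apply_constraint(sample: np.ndarray, reference: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Keep reference values where the mask is set, generated values elsewhere."""
    return np.where(mask, reference, sample)

frames, dim = 150, 8                          # assumed sizes
rng = np.random.default_rng(0)
generated = rng.normal(size=(frames, dim))    # placeholder for a model sample
reference = rng.normal(size=(frames, dim))    # placeholder for user-provided motion

in_between = temporal_mask(frames, dim, start=30, end=30)  # fixed start and end
continuation = temporal_mask(frames, dim, start=30)        # fixed start only
upper_body = joint_mask(frames, dim, columns=[0, 1, 2])    # hypothetical joint columns

edited = apply_constraint(generated, reference, in_between)
```

In a diffusion sampler, a step like `apply_constraint` would typically be repeated at every denoising step so the generated region stays coherent with the fixed region.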

Disadvantages

- Requires a powerful GPU to run

- Can be slow to generate dances

- May not be able to generate dances in all styles

Frequently Asked Questions

Q: What is EDGE?

A: EDGE is a method for editable dance generation that can create realistic, physically plausible dances while remaining faithful to arbitrary input music.

Q: How does EDGE work?

A: EDGE uses a transformer-based diffusion model paired with Jukebox, a strong music feature extractor, to generate dances.

Q: What are the advantages of using EDGE?

A: EDGE can create dances of any length, generate dances subject to joint-wise constraints, generate dances that start and end with prespecified motions, generate dances that start with a prespecified motion, and avoid unintentional foot sliding.

Q: What are the disadvantages of using EDGE?

A: EDGE requires a powerful GPU to run, can be slow to generate dances, and may not be able to generate dances in all styles.

Alternative AI tools for EDGE

Similar sites

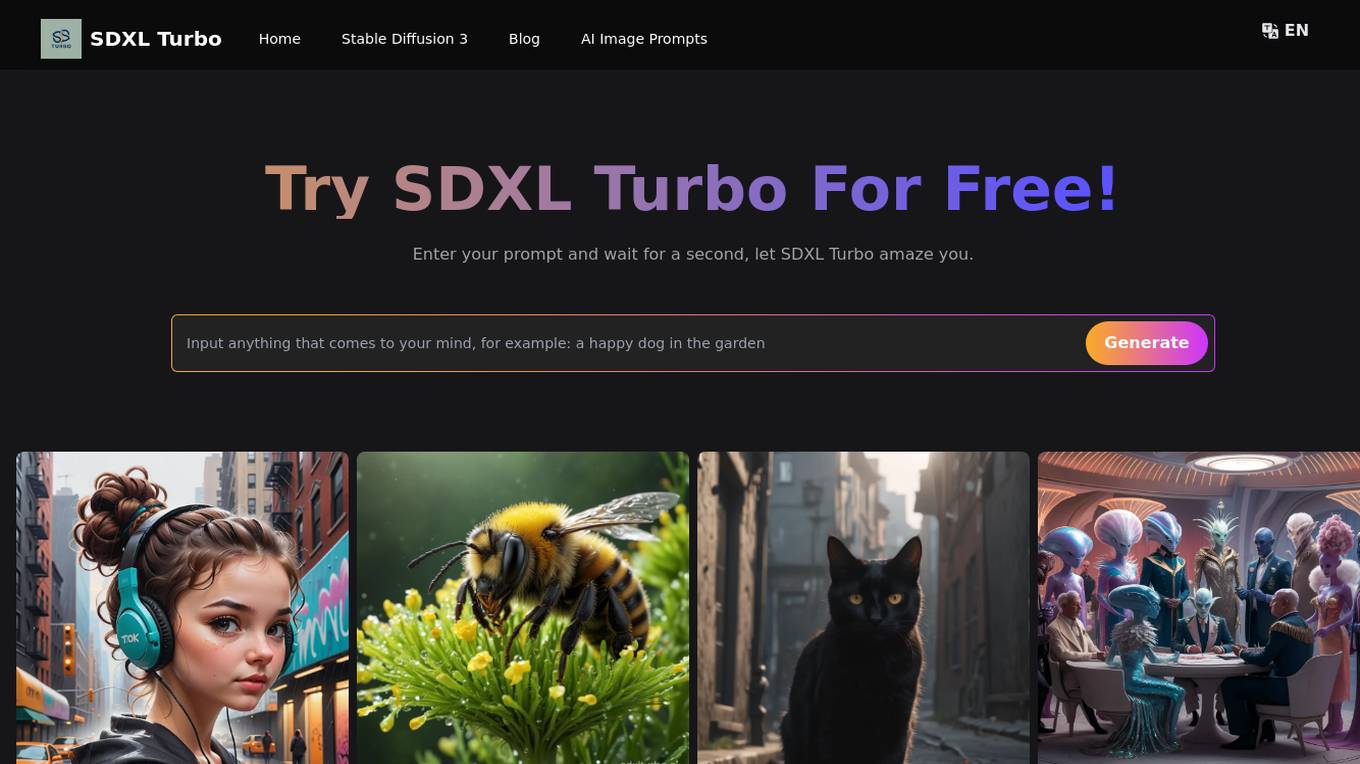

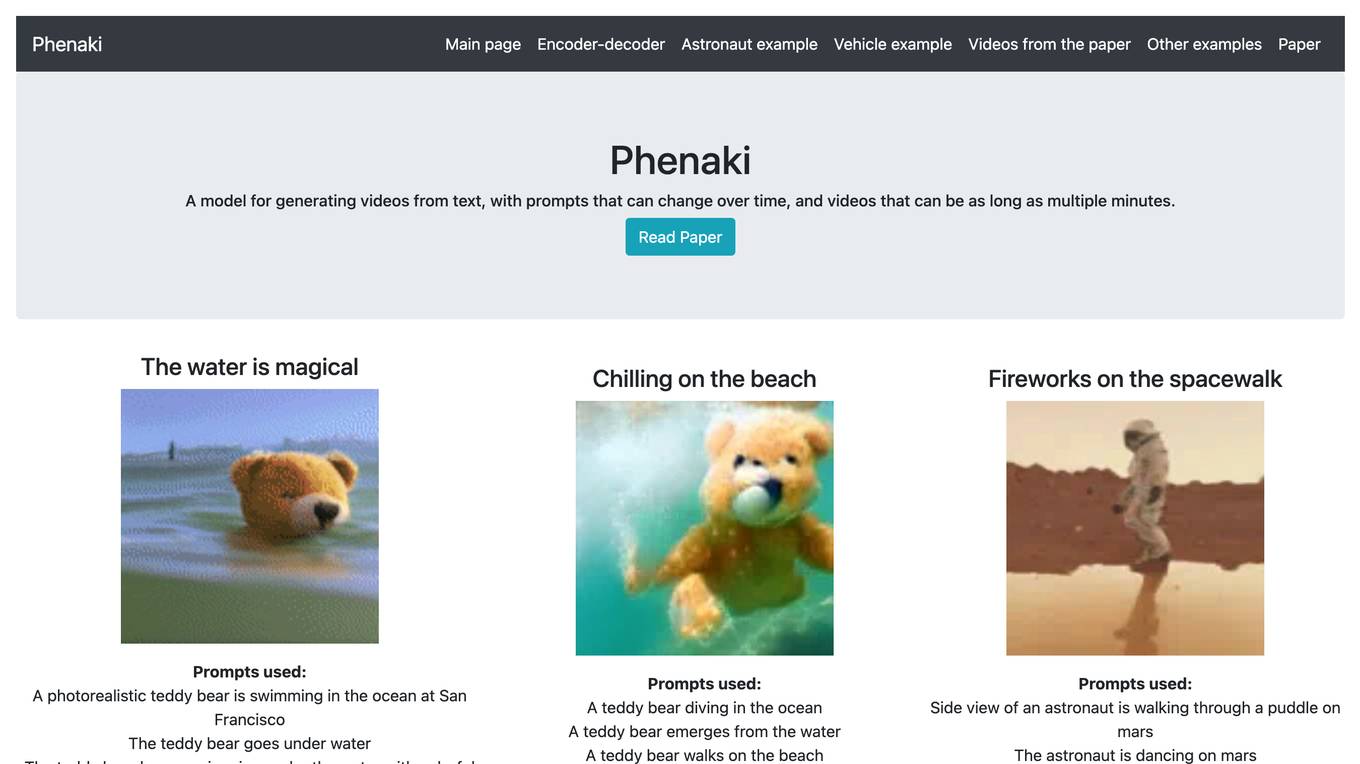

Phenaki

A model for generating videos from text, with prompts that can change over time, and videos that can be as long as multiple minutes.

Amped Studio

Make music online with the most powerful and easy-to-use recording studio on the web!

For similar tasks

Phenaki

A model for generating videos from text, with prompts that can change over time, and videos that can be as long as multiple minutes.

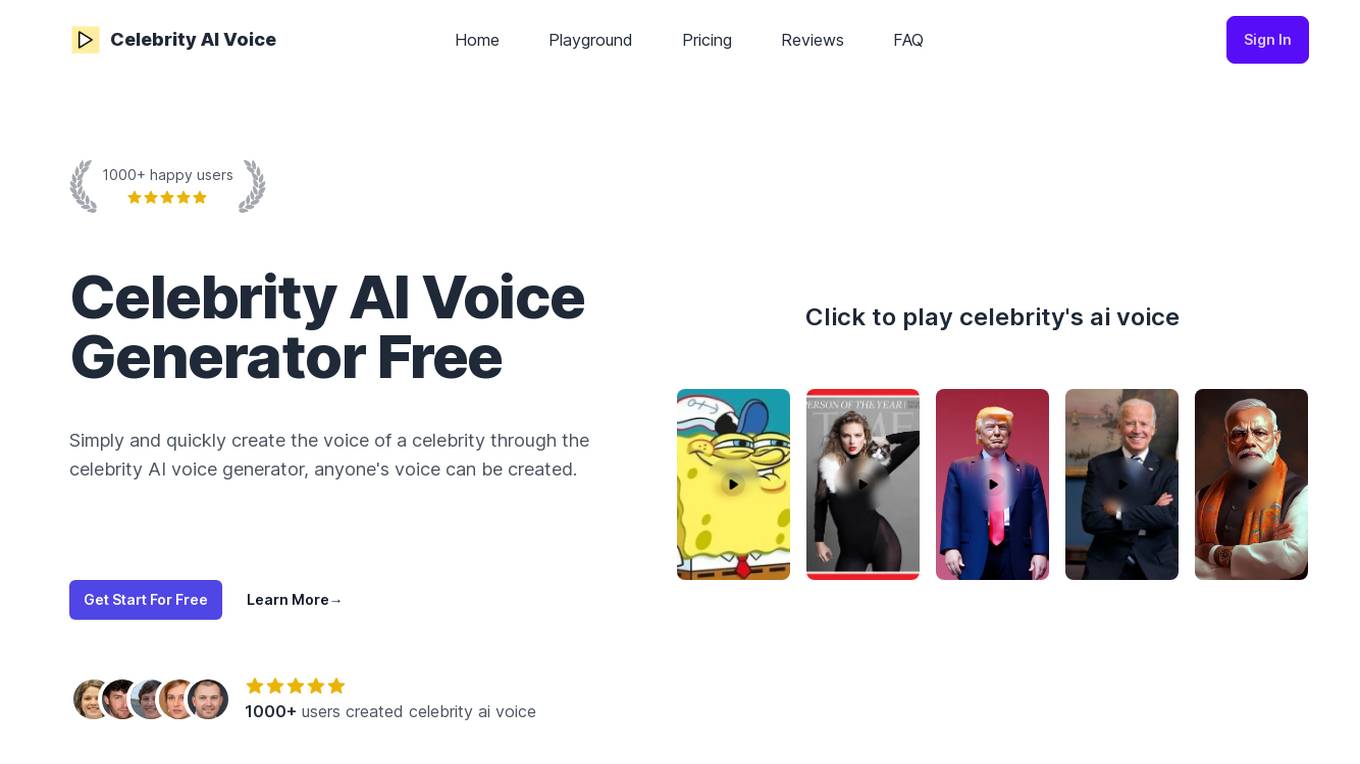

Celebrity AI Voice Generator

Quickly and easily create a celebrity's voice with the free Celebrity AI Voice Generator; anyone's voice can be created.