Best AI Tools for "Use LLM as Judge"

20 AI Tool Sites

Confident AI

Confident AI is an open-source evaluation infrastructure for large language models (LLMs). It provides a centralized platform for evaluating LLM applications, helping teams quantify their benefits and identify weaknesses in their LLM implementation. With Confident AI, companies can define ground truths to check that their LLM behaves as expected, evaluate performance against expected outputs to pinpoint areas for iteration, and use advanced diff tracking to converge on the optimal LLM stack. The platform offers comprehensive analytics for identifying areas of focus, along with A/B testing, evaluation, output classification, a reporting dashboard, dataset generation, and detailed monitoring to help teams put LLMs into production with confidence.

AnythingLLM

AnythingLLM is an all-in-one AI application designed for everyone. It offers a suite of tools for working with large language models (LLMs), documents, and agents in a fully private environment. Users can install AnythingLLM on Windows, macOS, and Linux desktops with a single click, and it can run fully privately without internet connectivity. The application supports a range of models, including enterprise models such as GPT-4, custom fine-tuned models, and open-source models such as Llama and Mistral. AnythingLLM works with various document formats, such as PDFs and Word documents, and uses locally running defaults to preserve privacy.

Prompt Hippo

Prompt Hippo is a side-by-side testing suite for LLM prompts, built to help ensure that prompts are robust, reliable, and safe. It saves time by streamlining prompt testing, and it lets users test custom agents and optimize them for production. Taking a systematic, efficiency-focused approach, Prompt Hippo helps users identify the best prompts for their needs.

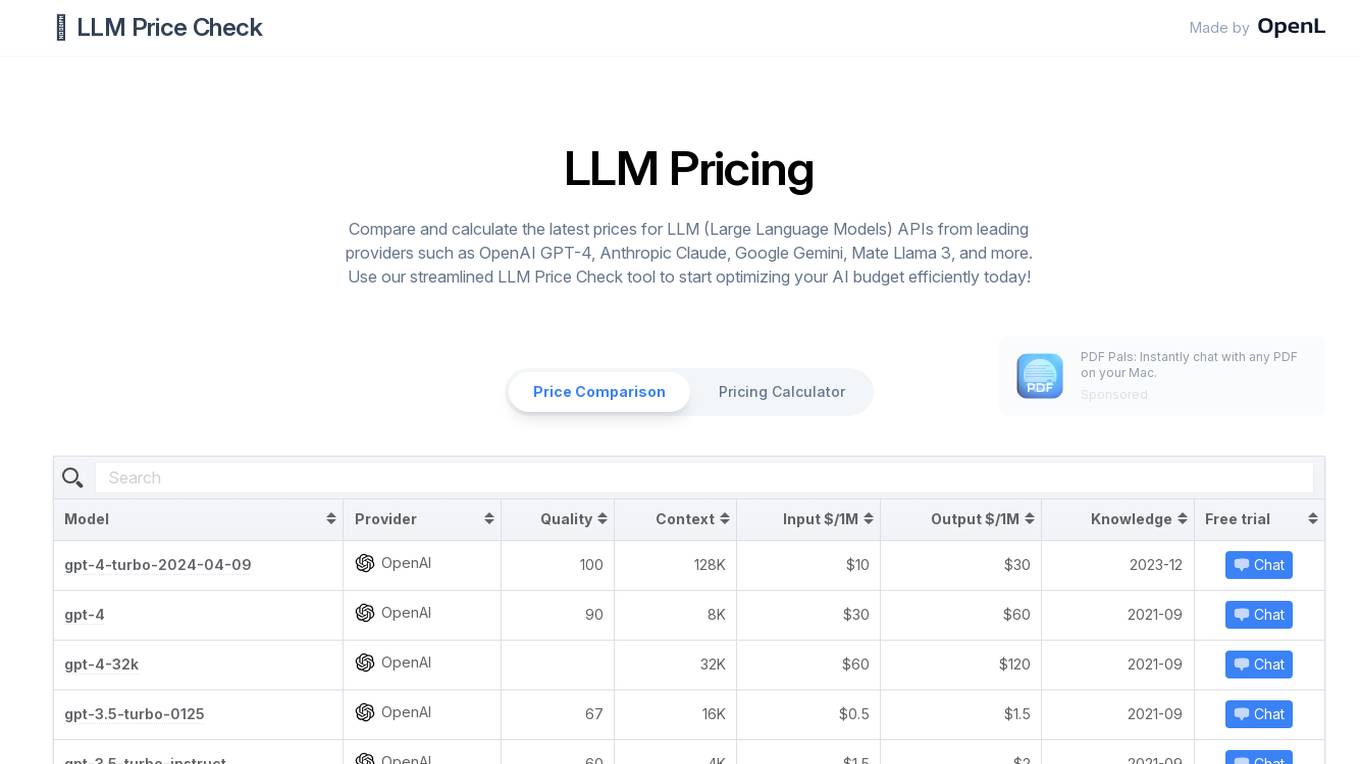

LLM Price Check

LLM Price Check is an AI tool for comparing and calculating the latest prices of large language model (LLM) APIs from leading providers such as OpenAI, Anthropic, and Google. Users can compare pricing, sort by various parameters, and search for specific models to optimize their AI budget. The tool provides a comprehensive overview of pricing information to help users make informed decisions when selecting an LLM API provider.
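The kind of comparison LLM Price Check performs can be sketched in a few lines. This is a minimal illustration, not the tool's implementation: it assumes pricing is quoted in USD per million input tokens and per million output tokens, and the model names and prices below are hypothetical placeholders rather than real provider rates.

```python
from dataclasses import dataclass


@dataclass
class ModelPricing:
    """Per-token pricing for one model (hypothetical values, USD per 1M tokens)."""
    name: str
    input_per_million: float
    output_per_million: float


def request_cost(p: ModelPricing, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request: tokens times price, scaled from per-million rates."""
    return (input_tokens * p.input_per_million
            + output_tokens * p.output_per_million) / 1_000_000


def rank_by_cost(models, input_tokens: int, output_tokens: int):
    """Return (name, cost) pairs sorted from cheapest to most expensive."""
    costs = [(m.name, request_cost(m, input_tokens, output_tokens)) for m in models]
    return sorted(costs, key=lambda pair: pair[1])


# Hypothetical models and prices, for illustration only.
models = [
    ModelPricing("model-a", input_per_million=3.00, output_per_million=15.00),
    ModelPricing("model-b", input_per_million=0.50, output_per_million=1.50),
]

for name, cost in rank_by_cost(models, input_tokens=10_000, output_tokens=2_000):
    print(f"{name}: ${cost:.4f}")
```

Separating input and output rates matters because many providers price output tokens higher than input tokens, so the cheapest model can change with the ratio of prompt length to response length.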

Onyxium

Onyxium is an AI platform that provides a comprehensive collection of AI tools for tasks such as image recognition, text analysis, and speech recognition. It gives users access to the latest AI technologies in one place, helping them enhance their projects and workflows with advanced AI capabilities. With a user-friendly interface and affordable pricing plans, Onyxium aims to make AI tools accessible to everyone, from individuals to large businesses.

Membit

Membit is an AI tool that provides real-time context for AI developer documentation. Users can try the Membit Agent or integrate Membit with their own LLM or agent to load data efficiently. Membit supports developers' workflows by offering contextual insights while they work with documentation.

Weights & Biases

Weights & Biases is an AI platform that offers documentation, guides, tutorials, and support for using AI models in applications. It provides two main products: W&B Weave for integrating AI models into code and W&B Models for building custom AI models. Features include tracing, output evaluation, cost estimates, hyperparameter sweeps, and a model registry. Weights & Biases aims to simplify working with AI models and to improve model reproducibility.

Ragie

Ragie is a fully managed RAG-as-a-Service platform for developers. It offers easy-to-use APIs and SDKs for getting started quickly, along with advanced features such as LLM re-ranking, a summary index, entity extraction, flexible filtering, and hybrid semantic and keyword search. Through its connectors, Ragie links directly to popular data sources such as Google Drive, Notion, and Confluence to deliver accurate, reliable information. The platform is backed by Craft Ventures. Ragie handles data ingestion, chunking, indexing, and retrieval, which makes it a useful building block for AI applications.

Weavel

Weavel is an AI tool for prompt engineering with large language models (LLMs). It offers tracing, dataset curation, batch testing, and evaluations to improve the performance of LLM applications. Weavel lets users continuously optimize prompts using real-world data, prevent performance regressions through CI/CD integration, and bring humans into the loop for scoring and feedback. According to Weavel, its AI prompt engineer, Ape, outperforms competitors on benchmark tests and continuously adapts to each user's use case. Weavel also lets users evaluate LLM applications without pre-existing datasets, which streamlines the assessment process.

Autotab

Autotab is an AI-powered digital robot that automates repetitive tasks on any website or web application. It is designed to help businesses save time and money by automating tasks such as data entry, web scraping, and social media management. Autotab is easy to use, can be set up in minutes, and has plans starting at $1 per hour.

Karla

Karla is an AI tool that helps journalists write with large language models (LLMs). It helps them turn news information into well-structured articles efficiently, augment their sources, customize stories, edit in a sleek interface, and export finished stories easily. Karla acts as a wrapper around the LLM of the user's choice, supplying dynamic prompts and integrating with a text editor and workflow, so journalists can focus on writing instead of crafting prompts by hand. Compared with general-purpose LLM chat apps, it offers built-in prompt crafting, tighter workflow integration, better and faster results, model flexibility, and content tailored to journalism.

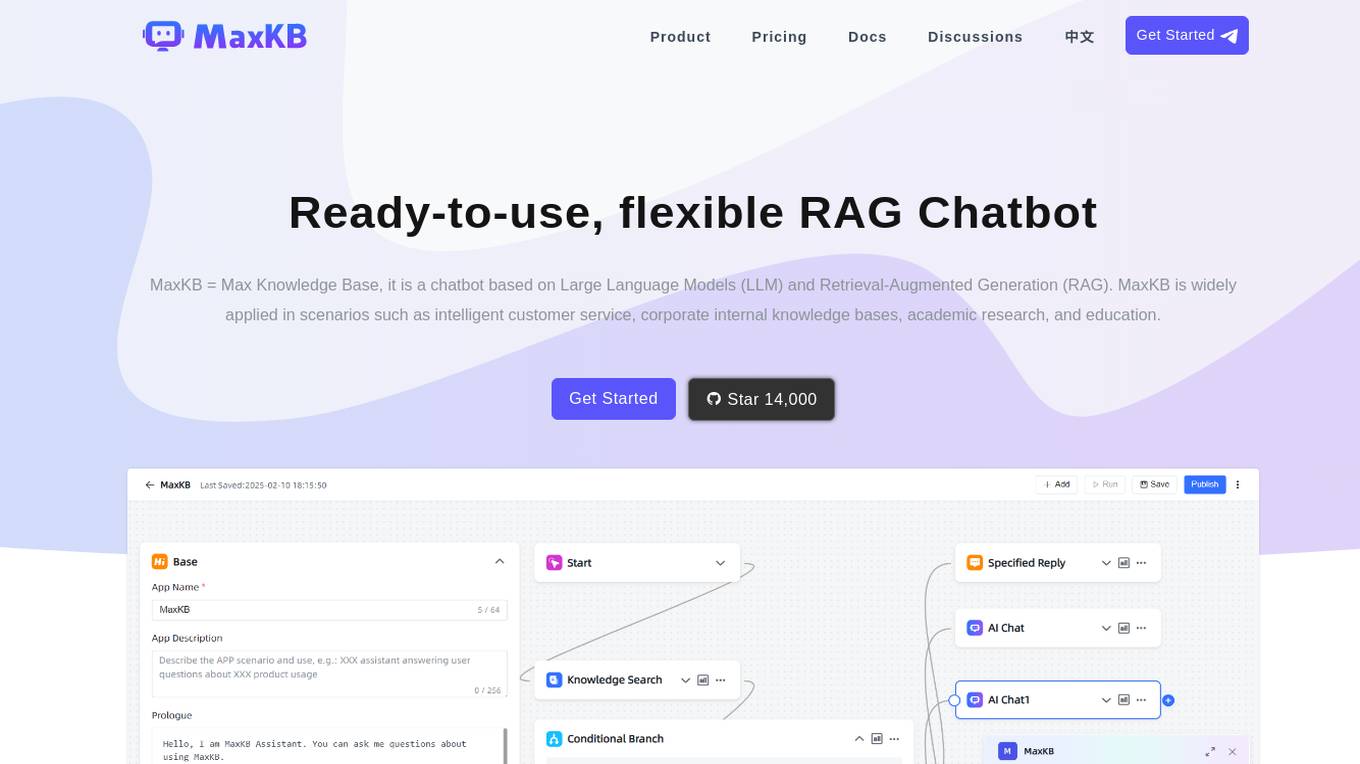

MaxKB

MaxKB is a ready-to-use, flexible RAG chatbot built on large language models (LLMs) and Retrieval-Augmented Generation (RAG). It is widely used for intelligent customer service, internal corporate knowledge bases, academic research, and education. MaxKB supports direct document uploads, automatic crawling of online documents, automatic text splitting, vectorization, and RAG-based question answering. It also offers flexible orchestration, integrates with third-party systems, and supports a variety of large models.

AirOps

AirOps is an AI-powered platform that helps users create content that performs well in AI search results. It offers insights for prioritizing actions across AI and traditional search, so users can create content that drives pipeline. The platform includes Platform Grids, Workflows, AI Models, Integrations, and Knowledge Bases. AirOps supports use cases such as Content Refresh, Content Creation, and offsite content management for teams such as Content & SEO Teams and Marketing Agencies, with the aim of increasing production, visibility, and audience growth through data-driven content strategies.

chatQR.ai

chatQR.ai is an AI-powered ordering application designed as a complete point-of-sale (POS) and kiosk replacement. It combines voice recognition with a large language model (LLM) to provide a seamless QR code ordering experience for customers. The system is AI-first, offers mature point-of-sale features, and allows the ChatQR Voice Assistant to be integrated into existing systems. With support for multiple currencies and payment providers such as Stripe and Square, chatQR.ai aims to change how businesses manage orders and payments.

Evidently AI

Evidently AI is an open-source machine learning (ML) monitoring and observability platform that helps data scientists and ML engineers evaluate, test, and monitor ML models from validation to production. It provides a centralized hub for ML in production, covering data quality monitoring, data drift monitoring, ML model performance monitoring, and NLP and LLM monitoring. Its features include customizable reports, structured checks for data and models, and a Python library for ML monitoring. It is designed to be easy to set up and use. Evidently AI is used by over 2,500 data scientists and ML engineers worldwide and has been featured in publications such as Forbes, VentureBeat, and TechCrunch.

Faune

Faune is an anonymous AI chat app that gives users direct access to large language models (LLMs) such as GPT-3, GPT-4, and Mistral. It prioritizes privacy and offers features such as a dynamic prompt editor, support for multiple LLMs, and a built-in image processor. With Faune, users can hold rich AI conversations without creating an account or going through a complex setup.

Golem

Golem is an AI chat application that offers an alternative ChatGPT-style experience with a polished, user-friendly design. Users can chat securely with a large language model (LLM), with their data stored locally or in their personal cloud. Golem is open source, welcomes contributions, and can serve as a reference for Nuxt 3 projects.

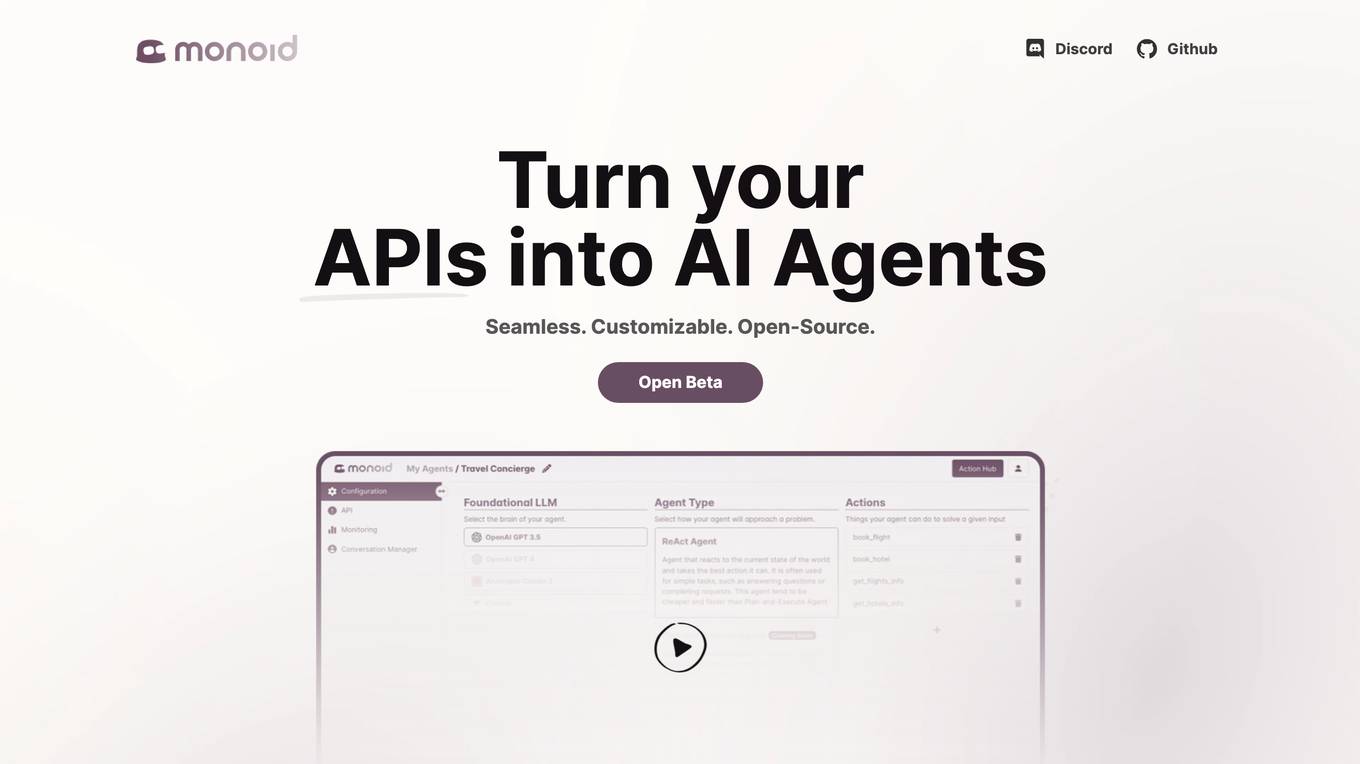

Monoid

Monoid is an AI tool that turns APIs into AI Agents, extending the capabilities of large language models (LLMs) with context and real-time actions. Users can create customized AI Agents in minutes by selecting a foundational LLM and an Agent Type and then defining the Actions the agent controls. Monoid lets users simulate AI Agents using APIs, chat with them, and share Actions on a collaborative platform. It supports use cases such as a Shopping Assistant, a Customer Support Agent, and a Workflow Automator, and offers seamless integration and open-source flexibility.

CaseMark

CaseMark is an AI-powered platform that supports legal professionals with automated workflows and summarization. It produces concise summaries of legal documents, including depositions, medical records, and trials. With custom workflows, integration with existing platforms, enterprise-class security, and the ability to select the best LLM for each use case, CaseMark aims to streamline legal processes and improve efficiency. Trusted by over 6,000 lawyers and legal tech companies, CaseMark is a privacy-first platform that helps users save time and focus on critical legal matters.

LanguageGUI

LanguageGUI is an open-source design system and UI kit that lets LLMs format their text outputs as richer graphical user interfaces. It includes many UI elements for rich conversational interfaces: 100+ UI components and customizable screens, 10+ conversational UI widgets, 20+ chat bubbles, 30+ pre-built screens to kick off your design, 5+ chat sidebars with customizable settings, multi-prompt workflow screen designs, 8+ prompt boxes, and dark mode. LanguageGUI is built with variables, styles, and Figma Auto Layout, and it is free for both personal and commercial projects with no attribution required.

1 Open Source AI Tool

HybridAGI

HybridAGI is the first Programmable LLM-based Autonomous Agent that lets you program its behavior using a **graph-based prompt programming** approach. This state-of-the-art feature allows the AGI to use any tool efficiently while controlling the agent's long-term behavior. Become one of the _first Prompt Programmers in history_; be a part of the AI revolution one node at a time! **Disclaimer: We are currently in the process of upgrading the codebase to integrate DSPy.**

20 OpenAI GPTs

GPT Detector

ChatGPT Detector quickly identifies AI-generated writing from ChatGPT, Bard, GPT-4, and other LLMs. It's easy and fast to use!

Use Case Writing Assistant

This GPT generates software use cases that are based on a repository of use case templates and conform to a style guide.

ecosystem.Ai Use Case Designer v2

The use case designer is configured with the latest Data Science and Behavioral Social Science insights to guide you through the process of defining AI and Machine Learning use cases for the ecosystem.Ai platform.

AI Use Case Analyst for Sales & Marketing

Enables sales and marketing leaders to identify high-value AI use cases.

Terms of Use & Privacy policy Assistant

Lets you consult OpenAI's Terms of Use and Privacy Policy (versions effective December 14, 2023).

PragmaPilot - A Generative AI Use Case Generator

Show me your job description or just describe what you do professionally, and I'll help you identify high value use cases for AI in your day-to-day work. I'll also coach you on simple techniques to get the best out of ChatGPT.

Name Generator and Use Checker Toolkit

Need a new name for a character, brand, story, or something else? Try the matrix! Use the different naming modules as distinct strategies for coming up with new names!

Your Headline Writer

Use this to increase engagement, get more clicks, and rank higher with your content. Paste your headline below to get a score out of 100 and three ideas for improving it, for free.

Write a romance novel

Use this GPT to outline your romance novel: design your story, your characters, obstacles, stakes, twists, arena, etc… Then ask GPT to draft the chapters ❤️ (remember: you are the brain, GPT is just the hand. Stay creative, use this GPT as an author!)

IHeartDomains.BOT | Web3 Domain Knowledgebase

Use me for educational insights, ALPHA, and strategies for investing in Domains & Digital Identity. Your GUIDE to Unstoppable Domains, ENS, Freename, HNS, and more. *DO NOT use as Financial Advice & Always DYOR* https://iheartdomains.com

Acquisition Criteria Creator

Use me to help you decide what type of business to acquire. Let's go!

Family Constellation Guide

Use DALL-E to create a family constellation image for an issue that has been troubling you.

The 80/20 Principle Master (80/20法则大师-敏睿)

Use this GPT to quickly identify key factors, improve decision-making and work efficiency, and find the key 20%.