Best AI tools for Tune Evaluation Parameters

20 - AI tool Sites

HappyML

HappyML is an AI tool designed to assist users in machine learning tasks. It provides a user-friendly interface for running machine learning algorithms without the need for complex coding. With HappyML, users can easily build, train, and deploy machine learning models for various applications. The tool offers a range of features such as data preprocessing, model evaluation, hyperparameter tuning, and model deployment. HappyML simplifies the machine learning process, making it accessible to users with varying levels of expertise.

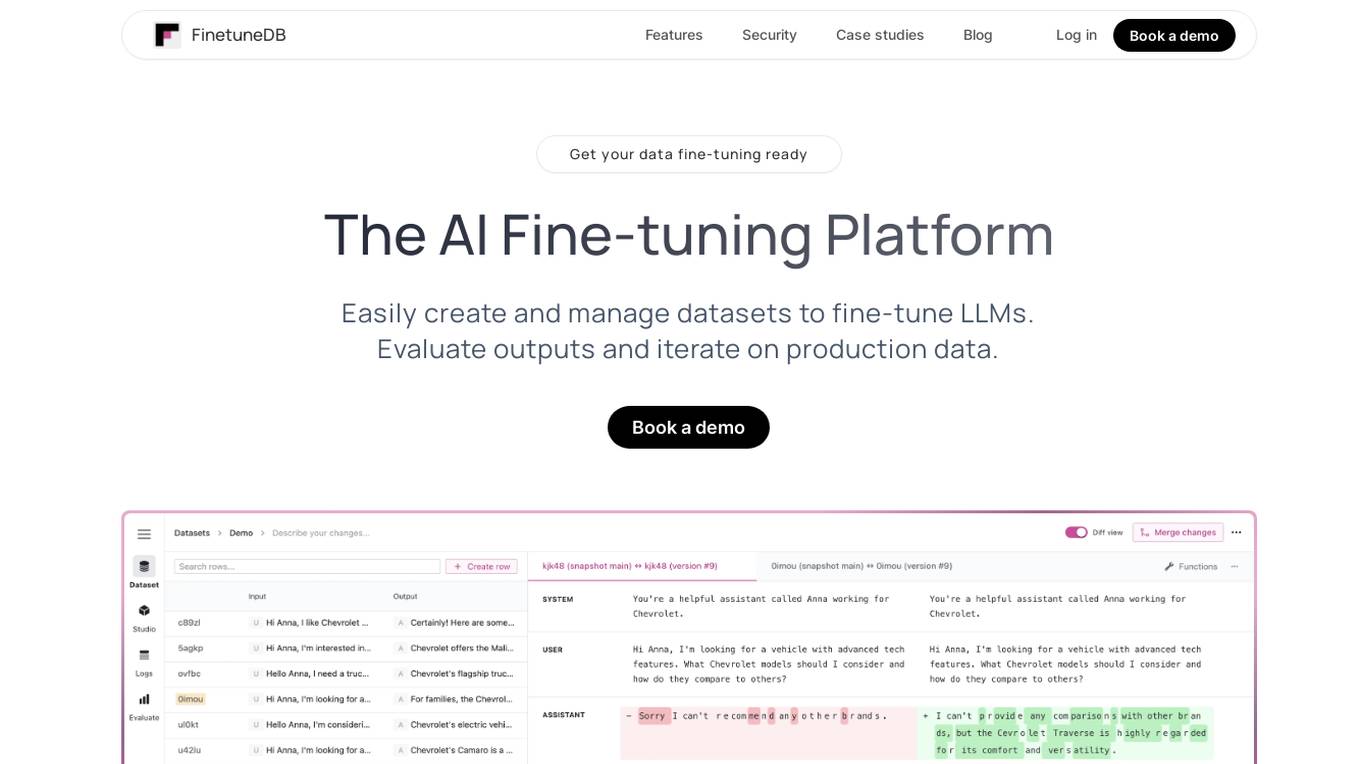

FinetuneDB

FinetuneDB is an AI fine-tuning platform that allows users to easily create and manage datasets to fine-tune LLMs, evaluate outputs, and iterate on production data. It integrates with open-source and proprietary foundation models, and provides a collaborative editor for building datasets. FinetuneDB also offers a variety of features for evaluating model performance, including human and AI feedback, automated evaluations, and model metrics tracking.

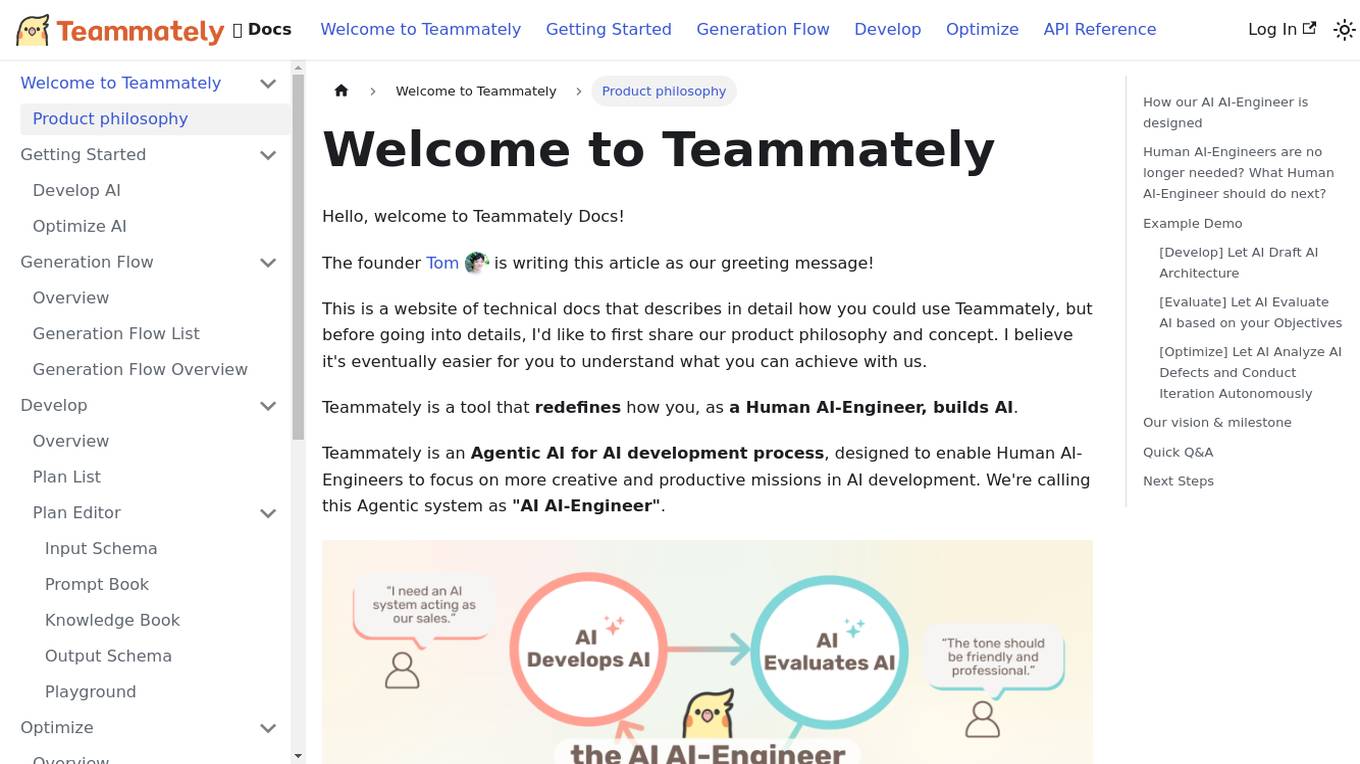

Teammately

Teammately is an AI tool that redefines how Human AI-Engineers build AI. It is an agentic AI for the AI development process, designed to let Human AI-Engineers focus on more creative and productive work. Teammately follows the best practices of Human LLM DevOps and offers features such as Development, Prompt Engineering, Knowledge Tuning, Evaluation, and Optimization to assist in the AI development process. The tool aims to change AI engineering by letting AI AI-Engineers handle technical tasks while Human AI-Engineers focus on planning and on aligning AI with human preferences and requirements.

Welo Data

Welo Data is an AI tool that specializes in AI benchmarking, model assessment, and high-quality training datasets for AI models. The platform offers services such as supervised fine-tuning, reinforcement learning from human feedback (RLHF), data generation, expert evaluations, and a data quality framework to support the development of AI models. With over 27 years of experience, Welo Data combines language expertise and AI data to deliver training and performance evaluation solutions.

Rawbot

Rawbot is an AI model comparison tool designed to simplify the selection process by enabling users to identify and understand the strengths and weaknesses of various AI models. It allows users to compare AI models based on performance optimization, strengths and weaknesses identification, customization and tuning, cost and efficiency analysis, and informed decision-making. Rawbot is a user-friendly platform that caters to researchers, developers, and business leaders, offering a comprehensive solution for selecting the best AI models tailored to specific needs.

Scale AI

Scale AI is an AI tool that accelerates the development of AI applications for various sectors including enterprise, government, and automotive industries. It offers solutions for training models, fine-tuning, generative AI, and model evaluations. Scale Data Engine and GenAI Platform enable users to leverage enterprise data effectively. The platform collaborates with leading AI models and provides high-quality data for public and private sector applications.

AnalyStock.ai

AnalyStock.ai is a financial application that uses AI to provide users with a next-generation investment toolbox. It helps users better understand businesses and risks and make informed investment decisions. The platform offers direct access to the stock market, data-driven tools for building top-ranking portfolios, and insights into company valuations and growth prospects. AnalyStock.ai aims to optimize the investment process with a strategy built on factors such as its A-Score and factor-investing scores for value, growth, quality, volatility, momentum, and yield. Users can discover hidden gems, fine-tune filters, access company scorecards, perform activity analysis, understand industry dynamics, and evaluate capital structure, profitability, and peers' valuations. The application also provides adjustable DCF valuation, portfolio management tools, net asset value computation, monthly commentary, and an AI assistant for personalized insights and assistance.

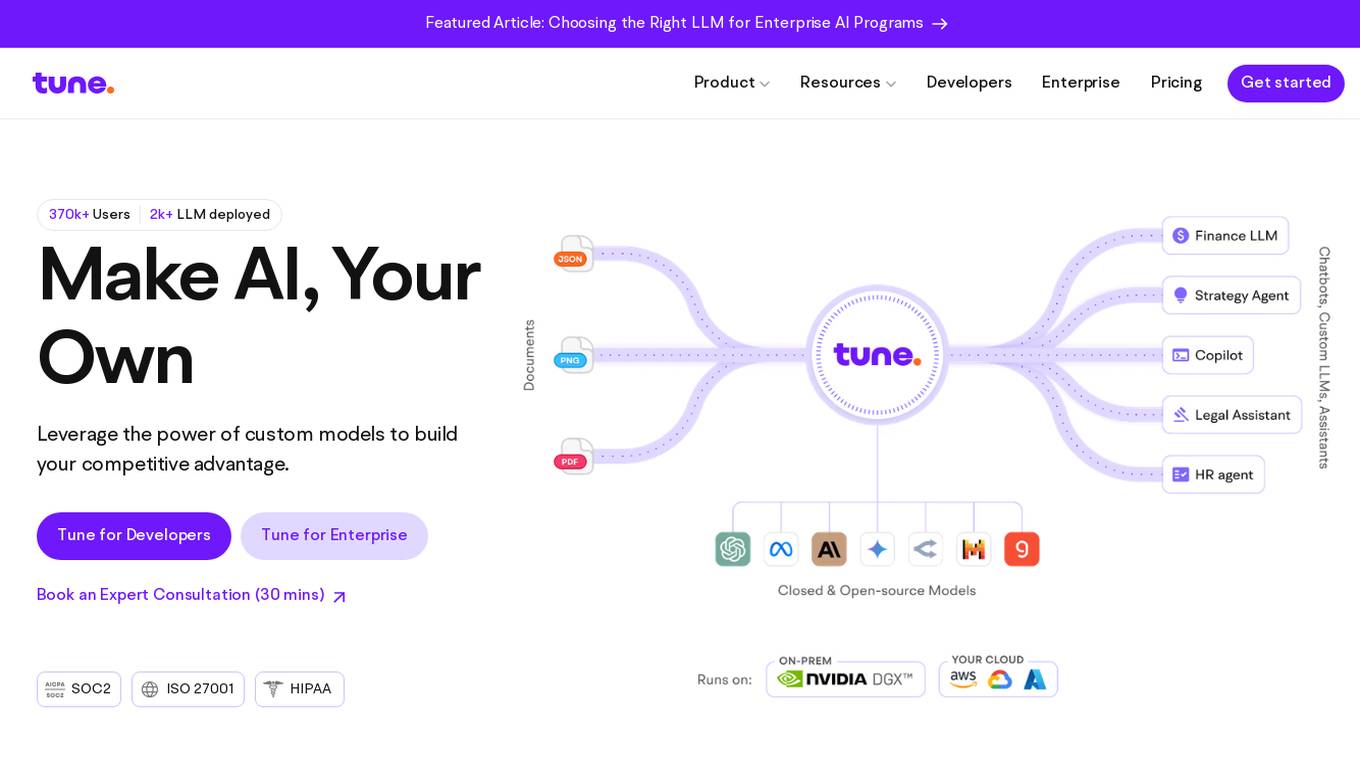

Tune AI

Tune AI is an enterprise Gen AI stack that offers custom models to build competitive advantage. It provides a range of features such as accelerating coding, content creation, indexing patent documents, data audit, automatic speech recognition, and more. The application leverages generative AI to help users solve real-world problems and create custom models on top of industry-leading open source models. With enterprise-grade security and flexible infrastructure, Tune AI caters to developers and enterprises looking to harness the power of AI.

Tune Chat

Tune Chat is a chat application that utilizes open-source Large Language Models (LLMs) to provide users with a conversational and informative experience. It is designed to understand and respond to a wide range of user queries, offering assistance with various tasks and engaging in natural language conversations.

re:tune

re:tune is a no-code AI app solution that provides everything you need to transform your business with AI, from custom chatbots to autonomous agents. With re:tune, you can build chatbots for any use case, connect any data source, and integrate with all your favorite tools and platforms. re:tune is the missing platform to build your AI apps.

Fine-Tune AI

Fine-Tune AI is a tool that allows users to generate fine-tuning datasets from prompts. This can be useful for tasks such as improving the accuracy of machine learning models or creating new training data for AI applications.

FaceTune.ai

FaceTune.ai is an AI-powered photo editing tool that allows users to enhance their selfies and portraits with various features such as skin smoothing, teeth whitening, and blemish removal. The application uses advanced algorithms to automatically detect facial features and make precise adjustments, resulting in professional-looking photos. With an intuitive interface and real-time editing capabilities, FaceTune.ai is a popular choice for individuals looking to improve their selfies before sharing them on social media.

HeyPhoto

HeyPhoto is an online AI photo editor that enhances and manipulates facial features in photos. Users can tune selfies and group photos by changing gaze direction, skin tone, age, hair style, and other facial attributes. The tool offers features such as face anonymization, gender transformation, age modification, emotion tweaking, and skin tone adjustment. HeyPhoto is user-friendly and requires no special skills, making it accessible to anyone who wants to edit photos with little effort.

prompteasy.ai

Prompteasy.ai is an AI tool that allows users to fine-tune AI models in less than 5 minutes. It simplifies the process of training AI models on user data, making it as easy as having a conversation. Users can fully customize GPT by fine-tuning it to meet their specific needs. The tool offers data-driven customization, interactive AI coaching, and seamless model enhancement, providing users with a competitive edge and simplifying AI integration into their workflows.

FineTuneAIs.com

FineTuneAIs.com is a platform that specializes in custom AI model fine-tuning. Users can fine-tune their AI models to achieve better performance and accuracy.

Sapien.io

Sapien.io is a decentralized data foundry that offers data labeling services powered by a decentralized workforce and gamified platform. The platform provides high-quality training data for large language models through a human-in-the-loop labeling process, enabling fine-tuning of datasets to build performant AI models. Sapien combines AI and human intelligence to collect and annotate various data types for any model, offering customized data collection and labeling models across industries.

ReplyInbox

ReplyInbox is a Gmail Chrome extension that revolutionizes email management by harnessing the power of AI. It automates email replies based on your product or service knowledge base, saving you time and effort. Simply select the text you want to respond to, click generate, and let ReplyInbox craft a personalized and high-quality reply. You can also share website links and other documentation with ReplyInbox's AI to facilitate even more accurate and informative responses.

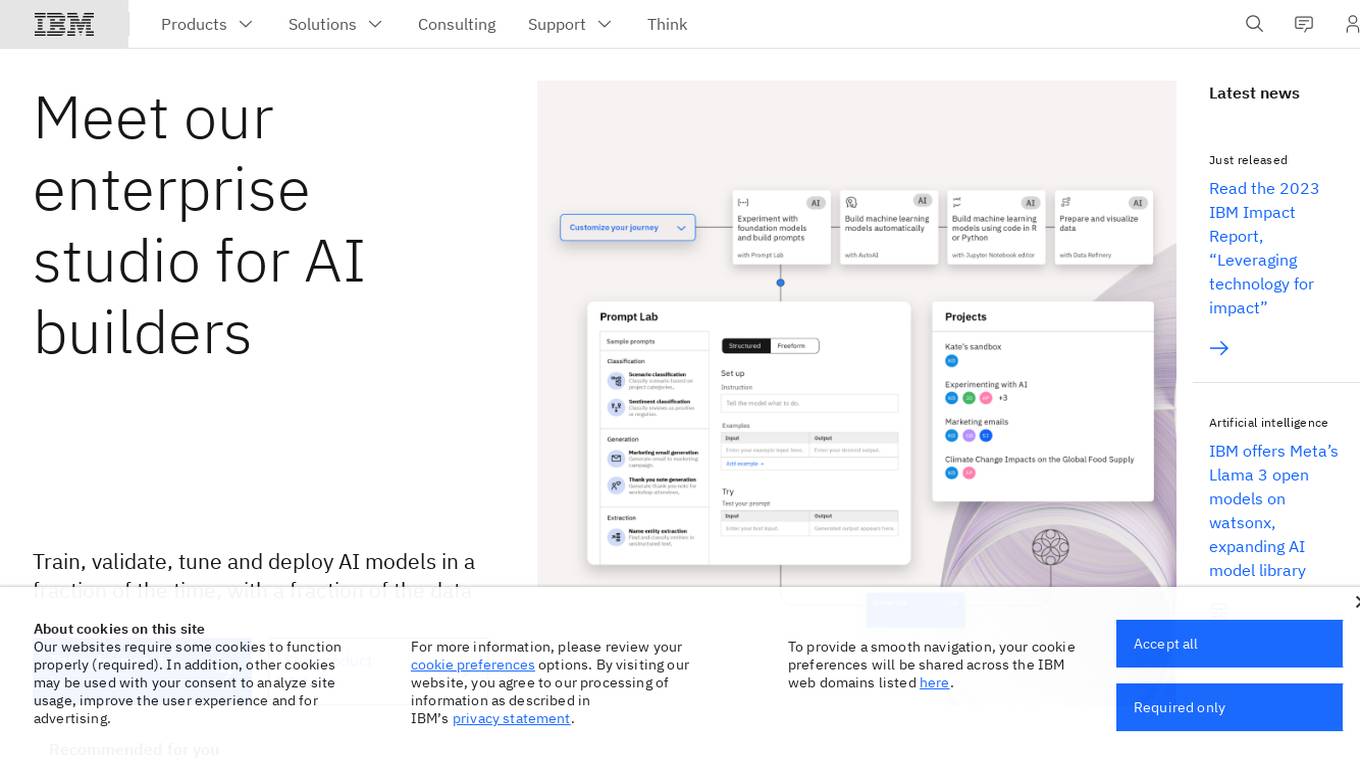

IBM Watsonx

IBM Watsonx is an enterprise studio for AI builders. It provides a platform to train, validate, tune, and deploy AI models quickly and efficiently. With Watsonx, users can access a library of pre-trained AI models, build their own models, and deploy them to the cloud or on-premises. Watsonx also offers a range of tools and services to help users manage and monitor their AI models.

Imajinn AI

Imajinn AI is a visualization tool that uses fine-tuned AI models to reimagine photos and images as works of art. The platform offers a suite of AI-powered tools: personalized children's books, printed couples portraits, a profile picture photobooth, a product photo visualizer, and a sneaker generator. Users can also generate concept images for purposes such as eCommerce and virtual photoshoots. Imajinn AI provides a fun and creative way to bring ideas to life with high-quality outputs and a user-friendly interface.

Together AI

Together AI is an AI Acceleration Cloud platform that offers fast inference, fine-tuning, and training services. It provides self-service NVIDIA GPUs, model deployment on custom hardware, an AI chat app, a code execution sandbox, and tools to find the right model for specific use cases. The platform also includes a model library with open-source models, documentation for developers, and resources for advancing open-source AI. Together AI enables users to use pre-trained models, fine-tune them, or build custom models from scratch for a range of generative AI needs.

1 - Open Source AI Tools

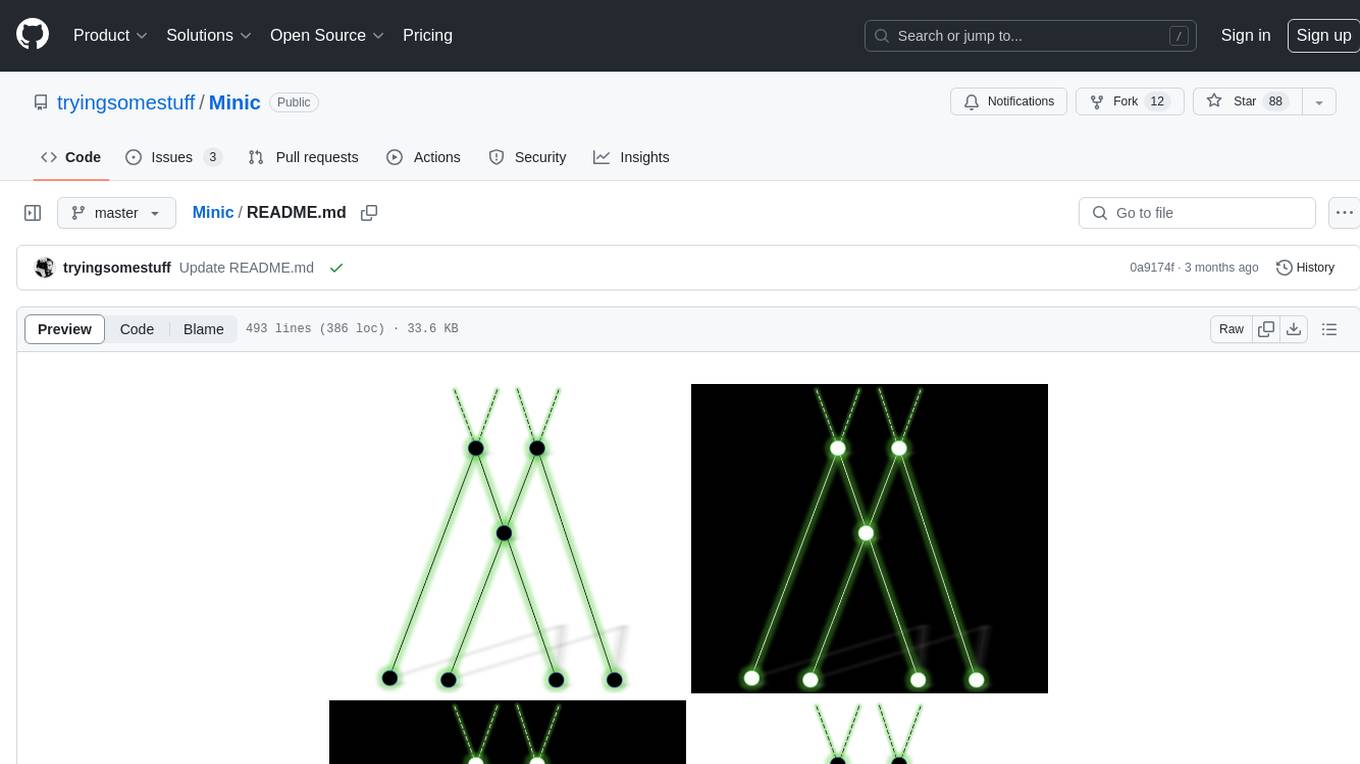

Minic

Minic is a chess engine developed for learning about chess programming and modern C++. It supports the CECP and UCI protocols, so it can be used in a variety of chess software. Minic has evolved from a single-file program into a more conventional C++ codebase, with features such as evaluation tuning, perft, and tests. It integrates NNUE frameworks from the Stockfish and Seer implementations to increase its playing strength. Minic is currently ranked among the top engines, with an Elo rating of around 3400 on the CCRL scale.
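To give a sense of what "evaluation tuning" can mean for a chess engine, here is a minimal sketch in the spirit of Texel-style tuning: evaluation weights are nudged so that a logistic function of the score better predicts game results. This is not Minic's code or its actual tuning method. The function names, the two-feature linear evaluation, the scaling constant, and the handful of positions are all hypothetical and synthetic, chosen only to keep the example small.

```python
def win_probability(score_cp, scale=400.0):
    """Map a centipawn score to an expected result in [0, 1] (logistic curve).

    The scale of 400 is an assumed constant, not a value taken from Minic.
    """
    return 1.0 / (1.0 + 10.0 ** (-score_cp / scale))


def evaluate(features, weights):
    """Hypothetical linear evaluation: sum of weight * feature."""
    return sum(w * f for w, f in zip(weights, features))


def mean_squared_error(positions, weights):
    """Average squared gap between predicted and actual game results."""
    total = 0.0
    for features, result in positions:
        predicted = win_probability(evaluate(features, weights))
        total += (result - predicted) ** 2
    return total / len(positions)


def tune(positions, weights, step=10, max_passes=100):
    """Coordinate-wise local search: try +/- step on each weight and
    keep a change only when it lowers the error."""
    best = list(weights)
    best_error = mean_squared_error(positions, best)
    for _ in range(max_passes):
        improved = False
        for i in range(len(best)):
            for delta in (step, -step):
                candidate = list(best)
                candidate[i] += delta
                error = mean_squared_error(positions, candidate)
                if error < best_error:
                    best, best_error = candidate, error
                    improved = True
                    break
        if not improved:
            break
    return best, best_error


# Synthetic data: (pawn difference, knight difference) and the game result
# from White's point of view (1.0 win, 0.5 draw, 0.0 loss).
positions = [
    ((1, 0), 1.0),
    ((-1, 0), 0.0),
    ((0, 1), 1.0),
    ((0, -1), 0.0),
    ((3, -1), 0.5),
    ((-3, 1), 0.5),
]

initial_weights = [100, 100]  # assumed starting values in centipawns
initial_error = mean_squared_error(positions, initial_weights)
tuned_weights, tuned_error = tune(positions, initial_weights)
print("initial error:", initial_error)
print("tuned weights:", tuned_weights, "tuned error:", tuned_error)
```

Because a change is accepted only when the error drops, the tuned error can never exceed the starting error. Real engines tune far more parameters against large sets of labeled positions and usually use gradient-based optimizers rather than this simple local search.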

20 - OpenAI GPTs

Tune Tailor: Playlist Pal

I find and create playlists based on mood, genre, and activities.

Text Tune Up GPT

I edit articles, improving clarity and respectfulness, maintaining your style.

The Name That Tune Game - from lyrics

Joyful music expert in song lyrics, offering trivia, insights, and engaging music discussions.

Joke Smith | Joke Edits for Standup Comedy

A witty editor to fine-tune stand-up comedy jokes.

Rewrite This Song: Lyrics Generator

I rewrite song lyrics to new themes, keeping the tune and essence of the original.

Dr. Tuning your Sim Racing doctor

Your quirky pit crew chief for top-notch sim racing advice

アダチさん12号(Oracle RDBMS篇) (Adachi-san No. 12, Oracle RDBMS edition)

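Ask about the Oracle RDBMS knowledge and know-how that Mr. Adachi (安達孝一) accumulated during his time as a systems engineer (Oracle 7/8.1.6/8.1.7/9iR1/9iR2/10gR1/10gR2/11gR2/12c/SQL tuning). It can also draft general-purpose example questions for ChatGPT (GPT-4) based on the conversation.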

Drone Buddy

An FPV drone specialist aiding in building, tuning, and learning about the hobby.

Pytorch Trainer GPT

I write PyTorch code for training language models.

BrandChic Strategic

I'm Chic Strategic, your ally in carving out a distinct brand position and fine-tuning your voice. Let's make your brand's presence robust and its message clear in a bustling market.