Best AI tools for "Try Out Different LLM Models"

20 - AI tool Sites

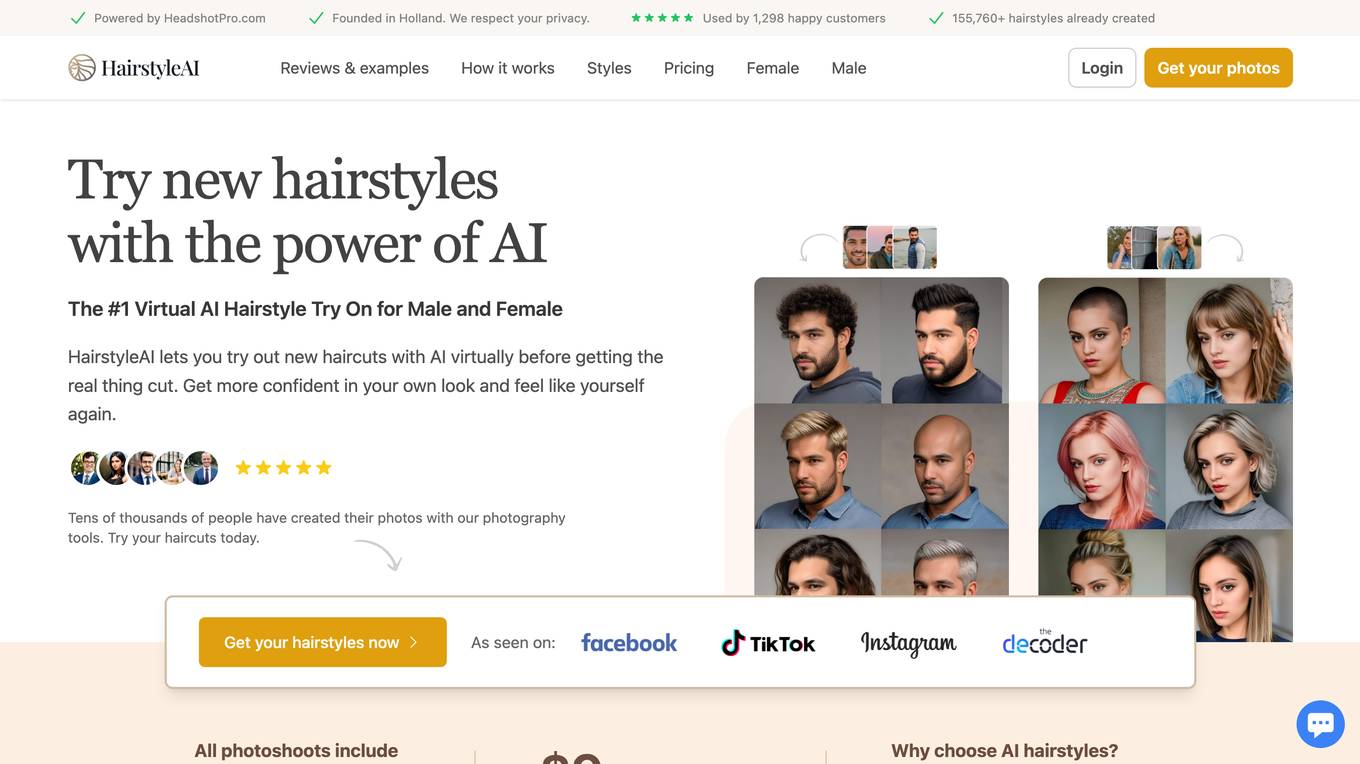

Hairstyle AI

Hairstyle AI is an AI-powered application that allows users to virtually try out new hairstyles before getting a real haircut. With over 155,760 hairstyles already created for 1,298 happy customers, Hairstyle AI provides a platform for users to experiment with different haircuts and styles, helping them feel more confident in their appearance. The application offers a range of features such as generating AI hairstyles indistinguishable from real photos, providing a variety of hairstyle options, and ensuring user privacy and security.

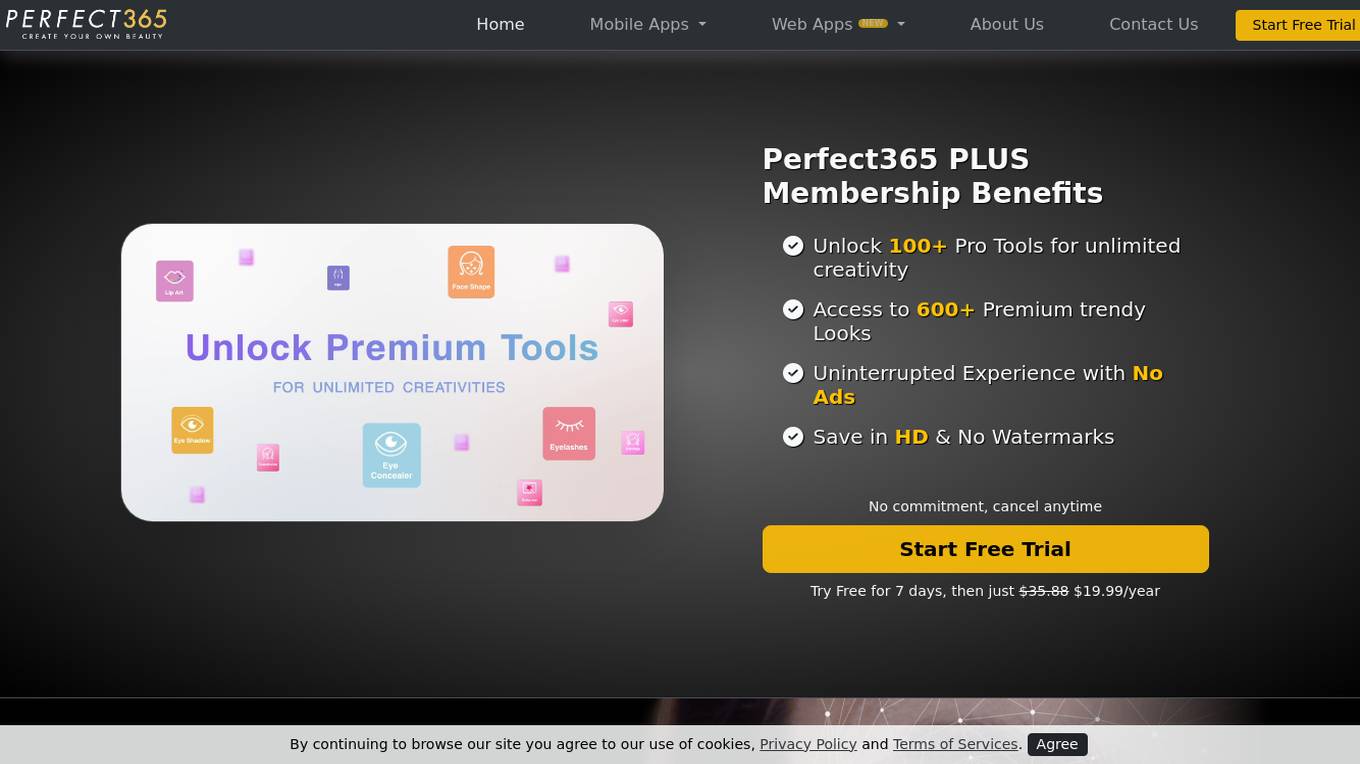

Perfect365

Perfect365 is an AI makeup application that lets users virtually try on makeup and hairstyles using augmented reality. With over 100 million users, the app acts as a personal beauty assistant: users can adjust every aspect of their appearance, from skin tone to eye color, while keeping a natural and realistic look, and no physical products are needed. Perfect365 is a pioneer among beauty apps, giving users a new way to explore e-cosmetics.

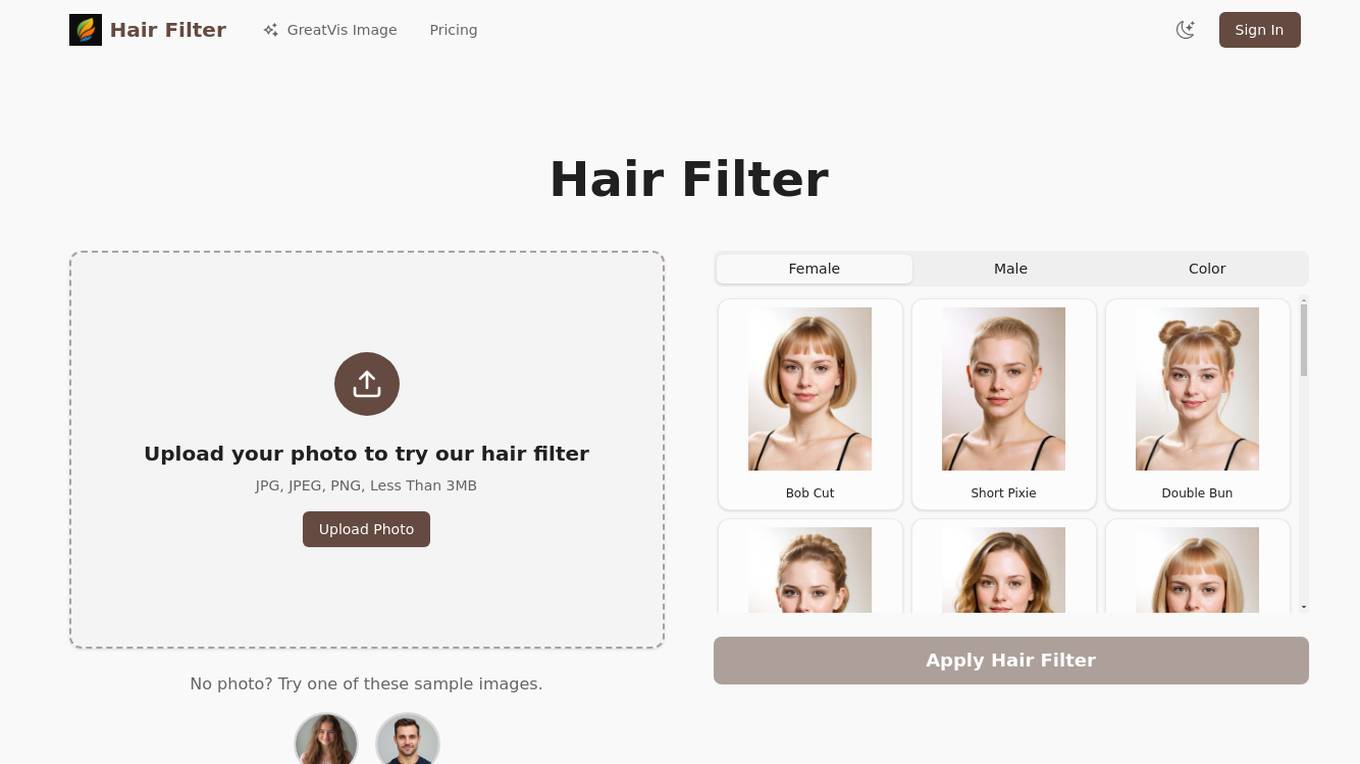

Hair Filter

Hair Filter is an AI-powered platform that allows users to change their hairstyle and hair color virtually for free. Users can upload their photo and try out various trendy hairstyles and colors using advanced AI hairstyle technology. The platform offers a risk-free way to experiment with different looks before making any permanent changes, providing instant visual results and high-quality transformations. With a user-friendly interface and a wide range of styles to choose from, Hair Filter is a convenient tool for anyone looking to explore new hairdos.

Magic AI Avatars

Magic AI Avatars is an AI-powered tool that allows users to create custom profile pictures using artificial intelligence. The app analyzes uploaded photos, recognizes facial features and expressions, and then uses a deep learning algorithm to construct a realistic digital photo that closely resembles the person in the picture. Magic AI Avatars is free to use and offers a variety of different themes and styles to choose from. The app is also committed to maintaining user privacy and data security.

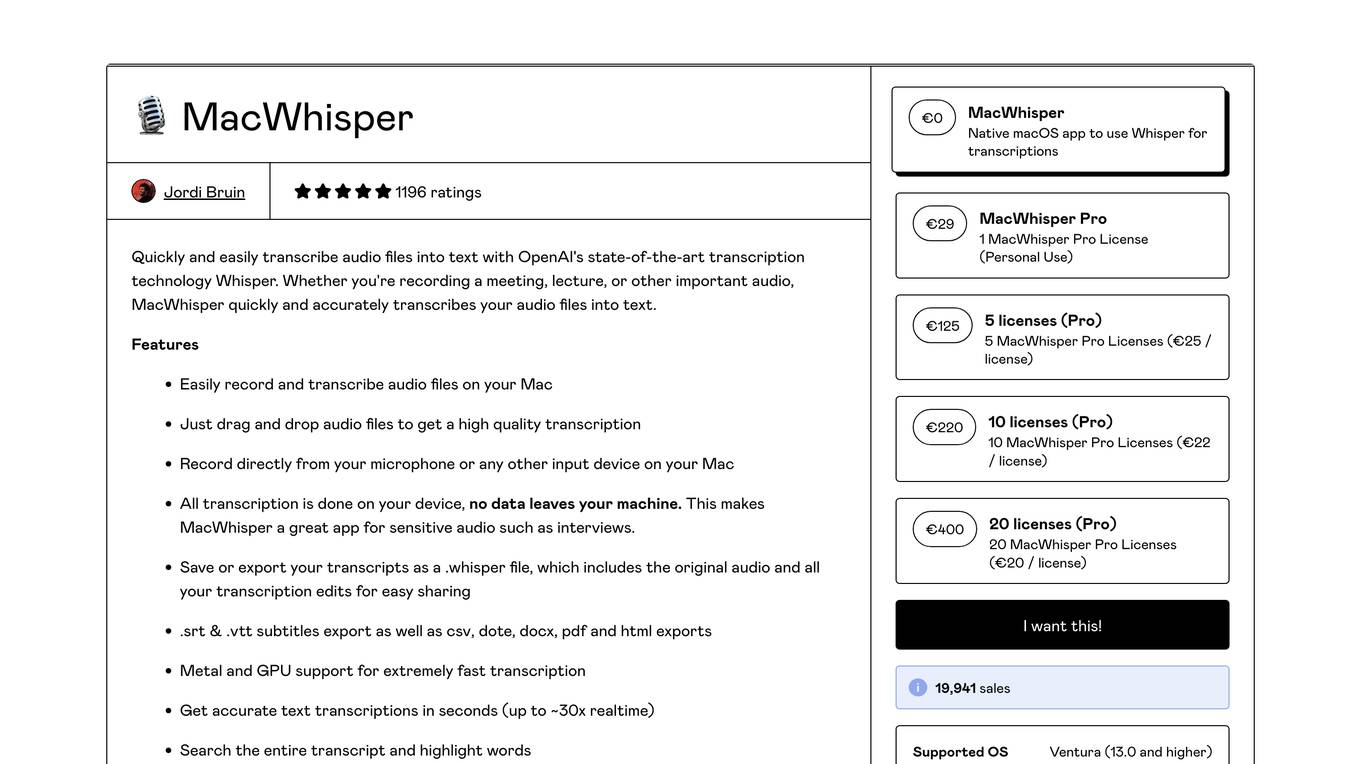

MacWhisper

MacWhisper is a native macOS application that utilizes OpenAI's Whisper technology for transcribing audio files into text. It offers a user-friendly interface for recording, transcribing, and editing audio, making it suitable for various use cases such as transcribing meetings, lectures, interviews, and podcasts. The application is designed to protect user privacy by performing all transcriptions locally on the device, ensuring that no data leaves the user's machine.

Human or Not

Human or Not is a social Turing game where you chat with someone for two minutes and try to figure out whether it was a fellow human or an AI bot. The experiment has ended, but the site still links to more information about the research.

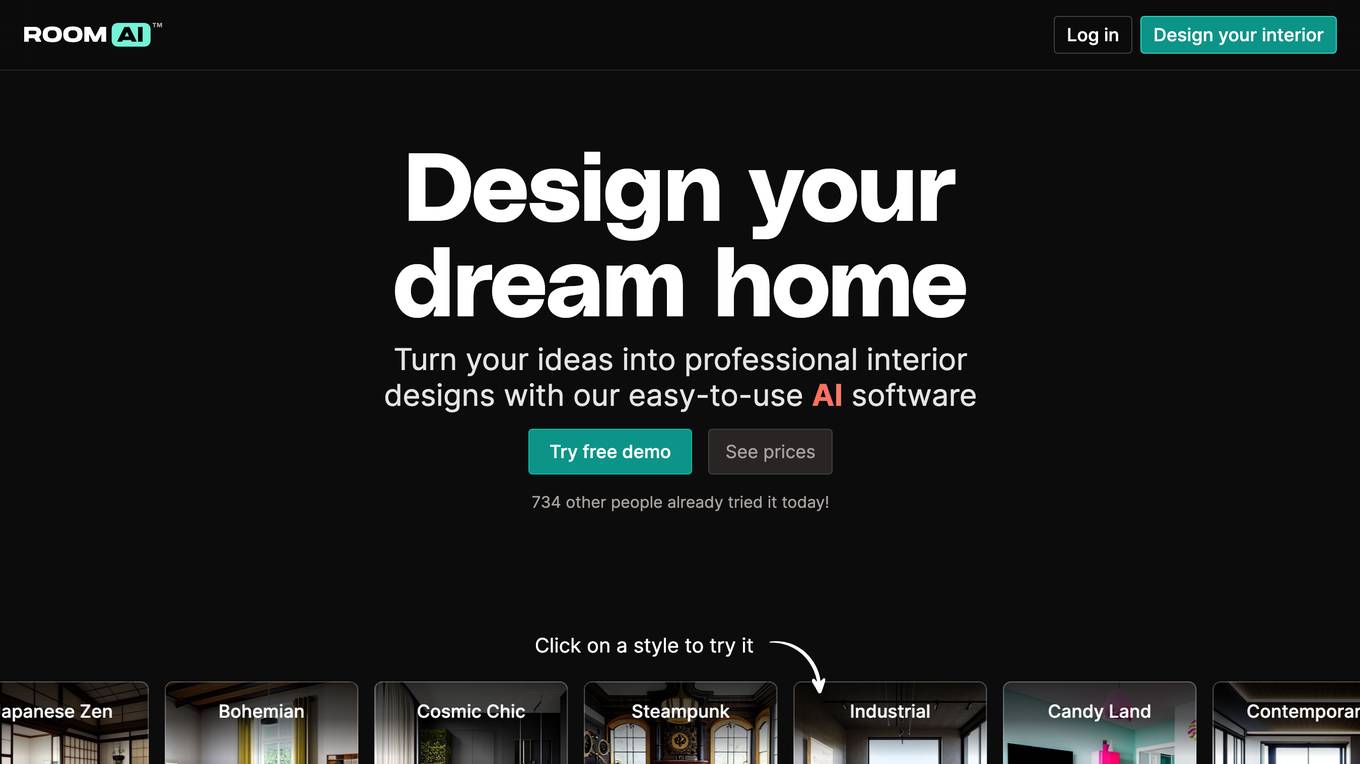

Room AI

Room AI is an easy-to-use AI software that helps users design their dream homes. With Room AI, users can turn their ideas into professional interior designs with just a few clicks. Room AI offers a variety of features, including the ability to restyle existing rooms, generate new room designs from scratch, choose from a variety of colors and materials, and get personalized design suggestions. Room AI is perfect for homeowners, interior designers, real estate agents, and architects. It is a powerful tool that can help users save time and money while creating beautiful and functional interior designs.

Imaiger

Imaiger is a generative AI image platform designed to empower creators and brands in driving real growth through high-performing images, thumbnails, and slideshows. It offers a range of AI-powered tools to assist users in creating and posting engaging content across platforms like TikTok, Instagram, and YouTube. With features like AI Slideshow Maker, AI Thumbnail Maker, and various creator tools, Imaiger aims to streamline the content creation process and enhance user engagement. Built for creators, Imaiger provides a one-stop solution for leveraging AI technology to boost online presence and reach a wider audience.

Chat With Llama

Chat with Llama is a free website that lets users interact with Meta's Llama 3, a state-of-the-art AI chat model comparable to ChatGPT. Users can ask unlimited questions and receive prompt responses. Llama 3 is open-source and available for commercial use, enabling developers to customize and profit from AI chatbots. The model has 70 billion parameters and, according to the site, generates outputs matching the quality of GPT-4.

1029th AI Thumbnail Maker

1029th is an AI Thumbnail Maker for YouTube channels, utilized by top creators to enhance their videos' visibility and engagement. The tool offers a wide range of features and advantages to help creators design attractive thumbnails effortlessly.

Bundle of Joy

Bundle of Joy is an AI-powered baby name generator that helps expecting parents find the perfect name for their newborn. The app takes into account the parents' preferences for the name, such as origin, theme, starting letter, and meaning, and makes recommendations that suit their taste well. Parents can shortlist names they like and share them with their partner, and the app will notify them when they both like the same name. Bundle of Joy is free to try out, with a paid subscription available for unlimited name recommendations.

金数据AI考试 (Jinshuju AI Exam)

The website offers an AI testing system that allows users to generate test questions instantly. It features a smart question bank, rapid question generation, and immediate test creation. Users can try out various test questions, such as generating knowledge test questions for car sales, company compliance standards, and real estate tax rate knowledge. The system ensures each test paper has similar content and difficulty levels. It also provides random question selection to reduce cheating possibilities. Employees can access the test link directly, view test scores immediately after submission, and check incorrect answers with explanations. The system supports single sign-on via WeChat for employee verification and record-keeping of employee rankings and test attempts. The platform prioritizes enterprise data security with a three-level network security rating, ISO/IEC 27001 information security management system, and ISO/IEC 27701 privacy information management system.

Zolak

Zolak is an AI-powered visual commerce platform designed for the furniture industry. It offers immersive experiences through product visualization, virtual try-out experiences, customization, and more. Zolak enables businesses to bridge physical and digital experiences, empowering e-commerce, manufacturing, and distribution sectors. The platform provides tools for creating high-quality visuals, personalized virtual showrooms, product customization, and AI room visualization, enhancing customer engagement and driving sales.

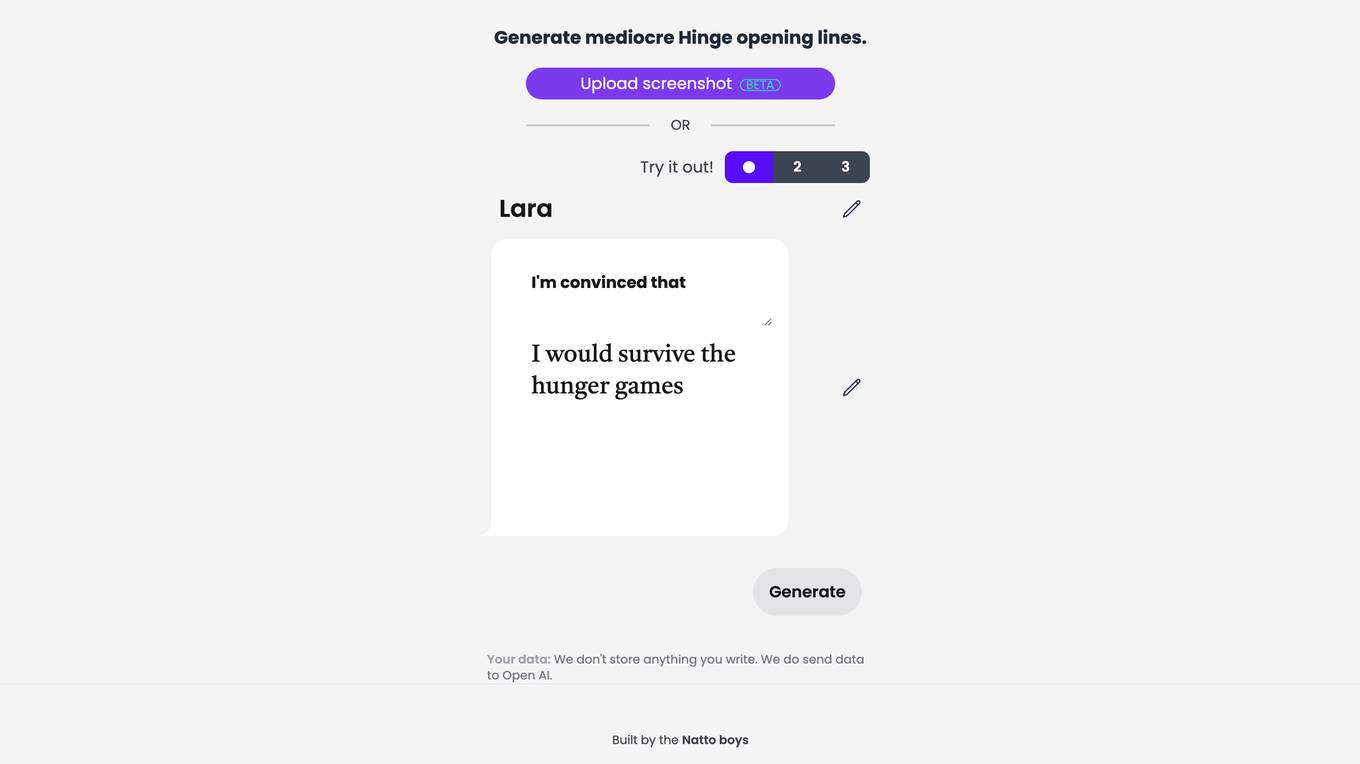

HingeGPT

HingeGPT is an AI tool designed to generate mediocre opening lines for the dating app Hinge. Users can upload screenshots for beta testing or try out the tool directly on the website. The tool protects user privacy by not storing any generated data, and it uses OpenAI for data processing. Developed by the Natto boys, HingeGPT aims to help users write engaging, conversation-starting messages on Hinge.

MAILE

MAILE is an AI-powered email writing application for iPhone that helps users draft professional and clear emails instantly. With just a simple prompt, MAILE can generate an email draft that users can then send. The application is free to try out and can be downloaded from the App Store.

Dzine

Dzine (formerly Stylar.ai) is an AI image generation and design tool that gives users fine-grained control over image composition and style. It offers predefined styles for effortless design customization; layering, positioning, and sketching tools for intuitive design; and an 'Enhance' feature to address common problems with AI-generated images. With a user-friendly interface suitable for all skill levels, Dzine makes it easy to create stylish images. It supports high-resolution exports and provides free credits for new users to try out its features.

ShitFilter.News

ShitFilter.News is an AI-powered website that provides important news trends filtered and explained by artificial intelligence. Users can learn their Duolingo vocabulary while browsing the web using the Duolingo Ninja browser extension to translate words and sentences on web pages automatically. The site offers a free version for users to try out.

AskCyph™ LITE

AskCyph™ LITE is a private, accessible, and personal AI chatbot that runs AI directly in your browser. It provides quick responses to user queries, although the responses may sometimes be inaccurate or offensive. The chatbot is developed by Cypher Tech Inc. and is designed to offer a convenient AI-powered conversational experience. Users can try out the full version of AskCyph™ at CypherChat®.

Artsire

Artsire is an AI-powered platform that allows users to discover and generate personalized wall art prints. Users can create custom art prints using the AI wall art generator or explore a vast gallery of framed AI art prints and posters for sale. The platform offers high-quality prints on gallery-grade paper using Giclée technology, ensuring stunning and eco-friendly artwork. With ultra-fast shipping in the United States, Artsire provides a seamless and hassle-free art experience for art enthusiasts and home decorators.

RIMBASLOT

RIMBASLOT is an online slot website that accepts deposits as low as 10,000 rupiah. It advertises high-RTP slots, easy maxwin opportunities, and a variety of slot games, along with convenient transactions. The website provides a platform for users to play slots with minimal deposit requirements.

1 - Open Source AI Tools

llamafile

llamafile is a tool that lets users distribute and run Large Language Models (LLMs) with a single file. It combines llama.cpp with Cosmopolitan Libc into a framework that collapses the complexity of running an LLM into a single-file executable called a 'llamafile'. Users can run these executables locally on most computers without installation, making open LLMs more accessible to developers and end users. llamafile also provides example llamafiles for various models, so users can try out different LLMs locally. The tool supports multiple CPU microarchitectures, CPU architectures, and operating systems, making it versatile and easy to use.
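For readers who want to try a llamafile from code rather than from its built-in chat page, here is a minimal Python sketch. It assumes a llamafile has already been started locally in server mode and that it exposes an OpenAI-style chat endpoint at `http://localhost:8080/v1`; the URL, the placeholder model name `local-model`, and the helper names `build_chat_request` and `ask` are illustrative assumptions, not part of the listing above.

```python
import json
import urllib.request

# Assumption: a llamafile running in server mode listens on this address.
BASE_URL = "http://localhost:8080/v1"


def build_chat_request(prompt: str, model: str = "local-model") -> dict:
    """Build an OpenAI-style chat completion payload (hypothetical helper)."""
    return {
        "model": model,  # placeholder name, chosen for illustration
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }


def ask(prompt: str, base_url: str = BASE_URL, timeout: float = 60.0) -> str:
    """Send one prompt to the local server and return the reply text."""
    payload = json.dumps(build_chat_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # OpenAI-style responses carry the reply in the first choice's message.
    return body["choices"][0]["message"]["content"]
```

To compare models, one option is to start a different llamafile on the same port and call `ask("Summarize llamafile in one sentence.")` again. The call only works while a llamafile server is actually running.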

20 - OpenAI GPTs

What is my dog thinking?

Upload a candid photo of your dog and let AI try to figure out what’s going on.

What is my cat thinking?

Upload a candid photo of your cat and let AI try to figure out what’s going on.

The Meme Doctor (GIVE ME A TRY!!)

Choose a topic. Choose a quote out of the many I create for you. Wait for the Magic to Happen!! Kaboozi, got yourself some funny azz memes!

Chat with GPT 4o ("Omni") Assistant

Try the new AI chat model: GPT 4o ("Omni") Assistant. It's faster and better than regular GPT. Plus, it will incorporate speech-to-text, intelligence, and text-to-speech capabilities with extra-low latency.

Easily Hackable GPT

A regular GPT to try to hack with a prompt injection. Ask for my instructions and see what happens.

Doctor Who Whovian Expert

Ask any question about Doctor Who past or present - try discussing any aspect of any story, or theme - or get the lowdown on the latest news.

Experimental Splink helper v2

I'm Splink Helper, here to (try to) assist with the Splink Python library. I'm very experimental, so don't expect my answers to be accurate.

No Web Browser GPT

No web browser. Doesn't try to use the web to look up events. Nor can it.

Pepe Picasso

Create your own Pepe! Just tell me what Pepe you want to see and I'll try my best to fulfill your wishes!

Six Sigma Guru

No one knows more Six Sigma than us! You can try our GPT Six Sigma Guru for study or simply to find answers to your problems.