Best AI Tools to Troubleshoot LLM Applications

20 - AI tool Sites

Arize AI

Arize AI is an AI Observability & LLM Evaluation Platform that helps you monitor, troubleshoot, and evaluate your machine learning models. With Arize, you can catch model issues, troubleshoot root causes, and continuously improve performance. Arize is used by top AI companies to surface, resolve, and improve their models.
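Arize's own SDK is not shown here. As a generic illustration of what "catching model issues" can involve, one common monitoring check compares a feature's production distribution against a training baseline using the Population Stability Index (PSI), defined as the sum of (actual − expected) · ln(actual / expected) over bins. The bin proportions and the 0.1 / 0.25 thresholds below are assumptions. They reflect a widely used rule of thumb, not Arize's method.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6
) -> float:
    """PSI between two binned distributions given as per-bin proportions."""
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        # Clamp empty bins so the logarithm stays finite.
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


def classify_psi(psi: float) -> str:
    # Rule-of-thumb thresholds (assumption), not a universal standard.
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate shift"
    return "significant shift"


baseline = [0.25, 0.25, 0.25, 0.25]    # hypothetical training distribution
production = [0.10, 0.20, 0.30, 0.40]  # hypothetical live distribution
score = population_stability_index(baseline, production)
print(f"PSI = {score:.3f} ({classify_psi(score)})")
```

In practice the bins would come from histogramming a real feature or prediction score. Teams usually tune the alert thresholds per feature.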

xAI Grok

xAI Grok is a visual analytics platform that helps users understand and interpret machine learning models. It provides a variety of tools for visualizing and exploring model data, including interactive charts, graphs, and tables. xAI Grok also includes a library of pre-built visualizations that can be used to quickly get started with model analysis.

403 Forbidden Resolver

The website is currently displaying a '403 Forbidden' error, which means that the server is refusing to respond to the request. This could be due to various reasons such as insufficient permissions, server misconfiguration, or a client error. The 'openresty' message indicates that the server is using the OpenResty web platform. It is important to troubleshoot and resolve the issue to regain access to the website.

Highcountry Toyota Internet Connection Troubleshooter

Highcountrytoyota.stage.autogo.ai is an AI tool designed to provide assistance and support for troubleshooting internet connection issues. The website offers guidance on resolving connection problems, including checking network settings, firewall configurations, and proxy server issues. Users can find step-by-step instructions and tips to troubleshoot and fix connection errors. The platform aims to help users quickly identify and resolve connectivity issues to ensure seamless internet access.

Web Server Error Resolver

The website is currently displaying a '403 Forbidden' error, which indicates that the server is refusing to respond to the request. This error message is typically displayed when the server understands the request made by the client but refuses to fulfill it. The 'openresty' mentioned in the text is likely the web server software being used. It is important to troubleshoot and resolve the 403 Forbidden error to regain access to the website's content.
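Several entries in this listing are really HTTP error pages (403, 404, 500, 502, and Cloudflare's 525). A minimal sketch follows that maps such codes to their standard reason phrase and a short troubleshooting hint. The hint wording and the `explain_status` helper are hypothetical. Note that 525 is a Cloudflare-specific code, so Python's `http.HTTPStatus` does not recognize it.

```python
from http import HTTPStatus

# Illustrative hints for the status codes that appear in this listing (assumption).
HINTS = {
    403: "Server understood the request but refuses it: check permissions and access rules.",
    404: "Resource not found: check the URL or whether the deployment still exists.",
    500: "Server-side failure: check application logs and server load.",
    502: "Bad gateway: a proxy or CDN got no valid response from the origin.",
    525: "Cloudflare could not complete an SSL handshake with the origin server.",
}


def explain_status(code: int) -> str:
    """Return '<code> <reason phrase>: <hint>' for an HTTP status code."""
    try:
        phrase = HTTPStatus(code).phrase
    except ValueError:
        # Non-standard codes such as Cloudflare's 525 are not in HTTPStatus.
        phrase = "Non-standard status"
    return f"{code} {phrase}: {HINTS.get(code, 'No specific hint available.')}"


for code in (403, 404, 525):
    print(explain_status(code))
```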

Webb.ai

Webb.ai is an AI-powered platform that offers automated troubleshooting for Kubernetes. It is designed to assist users in identifying and resolving issues within their Kubernetes environment efficiently. By leveraging AI technology, Webb.ai provides insights and recommendations to streamline the troubleshooting process, ultimately improving system reliability and performance. The platform is user-friendly and caters to both beginners and experienced users in the field of Kubernetes management.
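Webb.ai's internals are not described here. For context, the following sketch shows the kind of manual triage such a tool aims to automate. It scans parsed `kubectl get pods -o json` output for containers stuck in common waiting states, or restarting repeatedly. The `containerStatuses`, `state.waiting.reason`, and `restartCount` fields are part of the Kubernetes pod status. The helper name, the restart threshold, and the sample pods are hypothetical.

```python
import json
from typing import Any

# Waiting reasons that usually indicate a broken workload.
BAD_WAITING_REASONS = {"CrashLoopBackOff", "ImagePullBackOff", "ErrImagePull"}


def find_unhealthy_pods(pod_list: dict[str, Any], restart_threshold: int = 5) -> list[tuple[str, str]]:
    """Return (pod name, problem) pairs from a `kubectl get pods -o json` document."""
    problems = []
    for pod in pod_list.get("items", []):
        name = pod["metadata"]["name"]
        for cs in pod.get("status", {}).get("containerStatuses", []):
            waiting = cs.get("state", {}).get("waiting")
            if waiting and waiting.get("reason") in BAD_WAITING_REASONS:
                problems.append((name, waiting["reason"]))
            elif cs.get("restartCount", 0) >= restart_threshold:
                problems.append((name, f"restarted {cs['restartCount']} times"))
    return problems


# Hypothetical, trimmed-down kubectl output.
sample = json.loads("""
{"items": [
  {"metadata": {"name": "api-7d9f"},
   "status": {"containerStatuses": [{"restartCount": 12,
     "state": {"waiting": {"reason": "CrashLoopBackOff"}}}]}},
  {"metadata": {"name": "web-5c2a"},
   "status": {"containerStatuses": [{"restartCount": 0,
     "state": {"running": {}}}]}}
]}
""")
print(find_unhealthy_pods(sample))
```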

Mavenoid

Mavenoid is an AI-powered product support tool that offers automated product support services, including product selection advice, troubleshooting solutions, replacement part ordering, and more. The platform is designed to understand complex questions and provide step-by-step instructions to guide users through various product-related processes. Mavenoid is trusted by leading product companies and focuses on resolving customer questions efficiently. The tool optimizes help centers for SEO, offers product insights to increase revenue, and provides support in multiple languages. It is known for reducing incoming inquiries and offering a seamless support experience.

404 Error Assistant

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sfo1::fpvh6-1770917541134-af74095b8686) for reference. Users are directed to consult the documentation for further information and troubleshooting.

CloudFront Error Page

The website returned a 502 ERROR because CloudFront could not resolve the origin domain name. This means CloudFront could not connect to the server hosting the app or website; the problem lies between CloudFront and the origin, not with the user's own connection. The error page suggests possible causes such as high traffic volume or a configuration error, and advises users to try again later or contact the app or website owner. Those who serve content through CloudFront can refer to the CloudFront documentation for troubleshooting steps.
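Because this particular 502 comes from a failed DNS lookup of the origin, a quick first check is whether the origin hostname resolves at all. The minimal sketch below uses the standard library's `socket.getaddrinfo`. The `origin_resolves` helper is hypothetical, and `origin.example.invalid` is a placeholder for a real origin domain (the `.invalid` top-level domain is reserved and never resolves).

```python
import socket


def origin_resolves(hostname: str) -> bool:
    """Return True if the hostname resolves to at least one address."""
    try:
        return len(socket.getaddrinfo(hostname, None)) > 0
    except socket.gaierror:
        # Raised when the name cannot be resolved (e.g. NXDOMAIN).
        return False


# Replace the placeholder with the origin domain configured in CloudFront.
for host in ("localhost", "origin.example.invalid"):
    print(host, "resolves" if origin_resolves(host) else "does NOT resolve")
```

A lookup from your own machine can succeed while CloudFront's lookup still fails, for example with split-horizon or private DNS. Treat this as a first check, not proof.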

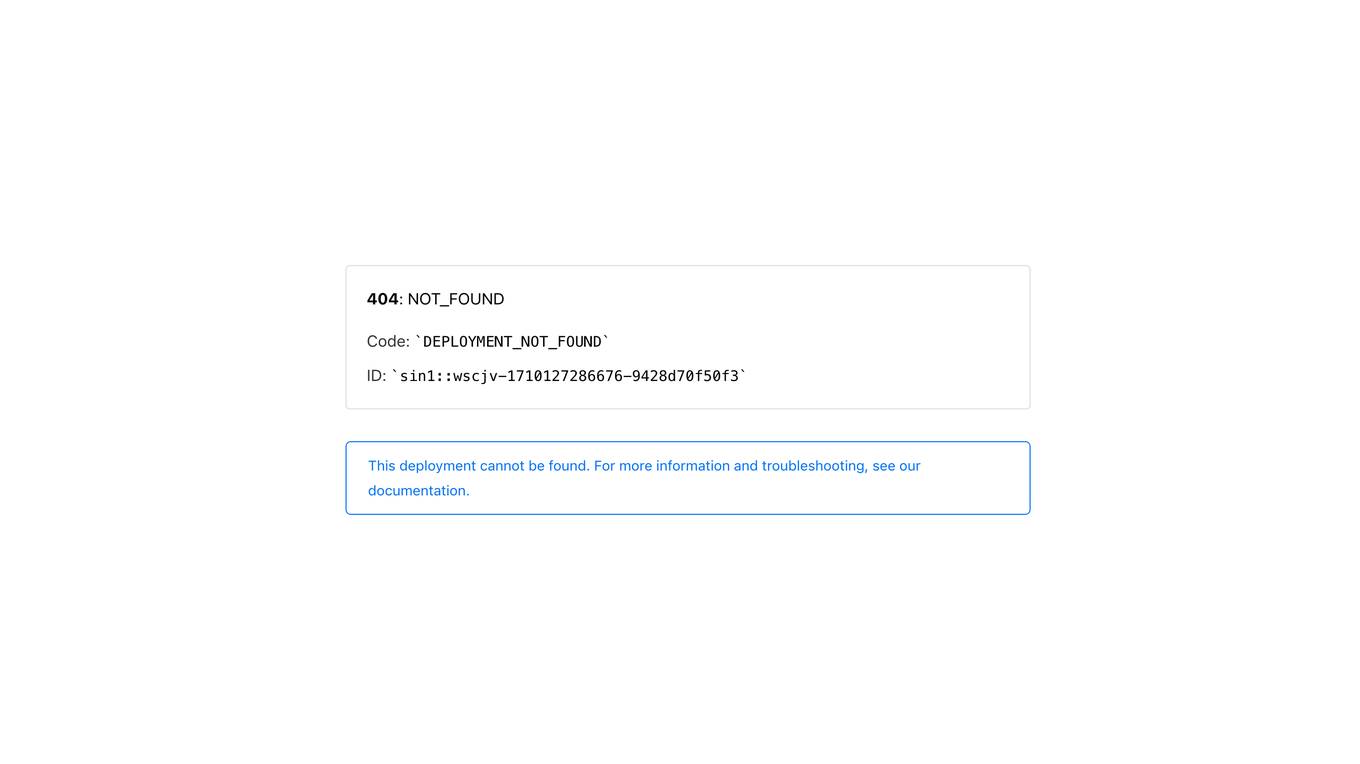

404 Error Page

The website displays a '404: NOT_FOUND' error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::22md2-1720772812453-4893618e160a) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Page

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sfo1::drw9g-1771091771764-93b091583900) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Page

The page displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::4wq5g-1718736845999-777f28b346ca) for reference. Users are advised to consult the documentation for further information and troubleshooting.

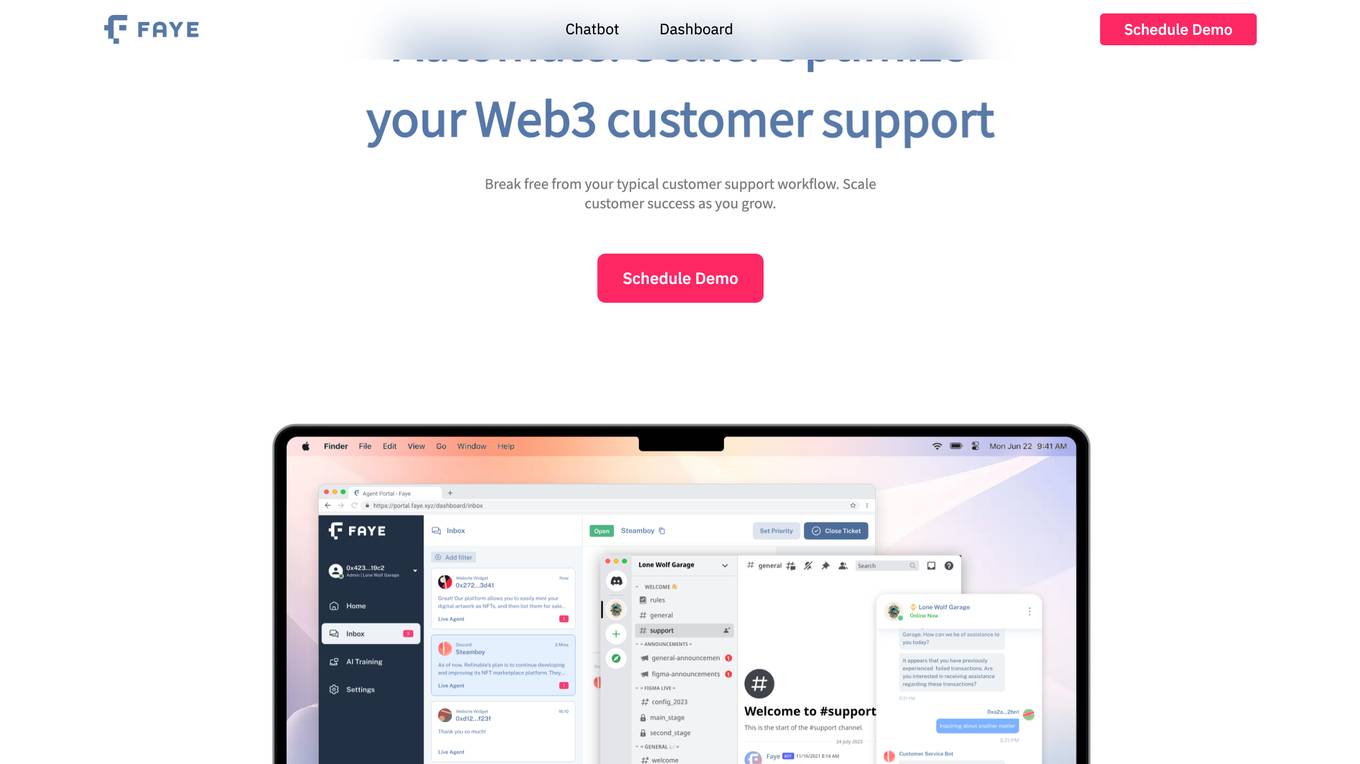

faye.xyz

faye.xyz is a website experiencing an SSL handshake failed error (Error code 525) due to Cloudflare being unable to establish an SSL connection to the origin server. The issue may be related to incompatible SSL configuration with Cloudflare, possibly due to no shared cipher suites. Visitors are advised to try again in a few minutes, while website owners are recommended to check the SSL configuration. Cloudflare provides additional troubleshooting information for resolving such errors.
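The "no shared cipher suites" cause can be pictured as an empty intersection between the suites each side of the handshake is willing to use. The conceptual sketch below lists the cipher suites that the local Python/OpenSSL build enables by default, using `ssl.SSLContext.get_ciphers()`. It then intersects them with a hypothetical origin configuration that allows only a legacy suite. The helper name and the origin list are assumptions. A real diagnosis would probe the origin itself, since TLS version and certificate problems can also cause a 525.

```python
import ssl


def shared_cipher_suites(client: list[str], server: list[str]) -> list[str]:
    """Cipher suites both sides accept, in the client's preference order."""
    server_set = set(server)
    return [name for name in client if name in server_set]


# Cipher suites enabled by default in this Python/OpenSSL build.
local = [c["name"] for c in ssl.create_default_context().get_ciphers()]

# Hypothetical origin that only allows a legacy 3DES suite.
origin = ["DES-CBC3-SHA"]

overlap = shared_cipher_suites(local, origin)
print("shared:", overlap or "none, so the handshake would fail")
```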

404 Error Assistant

The website displays a 404 error message indicating that the deployment cannot be found. Users encountering this error are directed to refer to the documentation for more information and troubleshooting.

Error 404 Not Found

The website displays a '404: NOT_FOUND' error message indicating that the deployment cannot be found. It provides a code 'DEPLOYMENT_NOT_FOUND' and an ID 'sin1::t6mdp-1736442717535-3a5d4eeaf597'. Users are directed to refer to the documentation for further information and troubleshooting.

404 Error Not Found

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (cle1::t5xdd-1771006563046-a762790f1009). Users are directed to refer to the documentation for further information and troubleshooting.

Internal Server Error

The website encountered an internal server error, resulting in a 500 Internal Server Error message. This error indicates that the server faced an issue preventing it from fulfilling the request. Possible causes include server overload or errors within the application.
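To make "errors within the application" concrete: in a Python WSGI stack, an unhandled exception in application code typically surfaces to the client as a 500 response. The minimal sketch below shows a hypothetical middleware that performs that conversion explicitly, wrapped around a deliberately failing app. Per PEP 3333, it passes `exc_info` so that `start_response` may be called again. It only catches errors raised before the response body is returned, not errors raised while the body is being iterated.

```python
import sys
from wsgiref.util import setup_testing_defaults


def error_to_500(app):
    """WSGI middleware: turn an unhandled exception into a 500 response."""
    def wrapped(environ, start_response):
        try:
            return app(environ, start_response)
        except Exception:
            # A real server would also log the traceback here.
            start_response("500 Internal Server Error",
                           [("Content-Type", "text/plain")],
                           sys.exc_info())
            return [b"Internal Server Error"]
    return wrapped


def buggy_app(environ, start_response):
    # Hypothetical application bug.
    raise RuntimeError("database connection lost")


app = error_to_500(buggy_app)

environ = {}
setup_testing_defaults(environ)  # fill in a minimal valid WSGI environ
captured = {}
body = app(environ, lambda status, headers, exc_info=None: captured.update(status=status))
print(captured["status"], body)
```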

404 Error Notifier

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code 'DEPLOYMENT_NOT_FOUND' and an ID 'sin1::zdhct-1723140771934-b5e5ad909fad'. Users are directed to refer to the documentation for further information and troubleshooting.

404 Error Page

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::hfkql-1741193256810-ca47dff01080). Users are directed to refer to the documentation for further information and troubleshooting.

404 Error Page

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sfo1::5wd8j-1770917142388-c57c677706b2) for reference. Users are directed to check the documentation for further information and troubleshooting.

1 - Open Source AI Tools

openllmetry

OpenLLMetry is a set of extensions built on top of OpenTelemetry that provides observability over LLM applications. Because it uses OpenTelemetry under the hood, it can be connected to existing observability solutions such as Datadog and Honeycomb. It is built and maintained by Traceloop under the Apache 2.0 license. The repository contains standard OpenTelemetry instrumentations for LLM providers and vector databases, as well as a Traceloop SDK that makes it easy to get started with OpenLLMetry while still emitting standard OpenTelemetry data that can be connected to your observability stack. If you already have OpenTelemetry instrumentation in place, you can add any of its instrumentations directly.
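OpenLLMetry itself emits real OpenTelemetry spans through its SDK and instrumentations. The stdlib-only sketch below does not use that SDK. It only mimics the underlying idea: wrap each LLM call and record a span-like record with a name, duration, status, and attributes. The `traced` decorator, the in-memory `SPANS` list (standing in for an exporter), the `llm.prompt_chars` attribute key, and `fake_completion` are all hypothetical.

```python
import functools
import time
from typing import Any, Callable

# In-memory stand-in for a span exporter (hypothetical).
SPANS: list[dict[str, Any]] = []


def traced(name: str) -> Callable:
    """Record one span-like dict per call of the decorated function."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            status = "OK"
            try:
                return fn(*args, **kwargs)
            except Exception:
                status = "ERROR"
                raise
            finally:
                SPANS.append({
                    "name": name,
                    "duration_s": time.perf_counter() - start,
                    "status": status,
                    # Hypothetical attribute key; only counts a keyword `prompt`.
                    "attributes": {"llm.prompt_chars": len(str(kwargs.get("prompt", "")))},
                })
        return wrapper
    return decorator


@traced("llm.completion")
def fake_completion(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM provider call.
    return prompt.upper()


fake_completion(prompt="hello")
print(SPANS[-1]["name"], SPANS[-1]["status"], SPANS[-1]["attributes"])
```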

20 - OpenAI GPTs

CDR

Explore call detail records (CDR) from a variety of PBX platforms, including Avaya, Mitel, NEC, and others, with this UC-trained GPT. Specific commands help you navigate and troubleshoot CDR data from diverse UC environments.

Logic Pro - Talk to the Manual

I'm Logic Pro X's manual. Let me answer your questions, troubleshoot whatever issue you're having and get you back into the groove!

Pi Pico + Micropython Assistant

An advanced virtual assistant specializing in the Raspberry Pi Pico and MicroPython, designed to offer expert advice, troubleshoot code, and provide detailed guidance.

3D Print Diagnostics Expert

Expert in 3D printing diagnostics and problem resolution, mindful of confidentiality and careful with brand usage.

MacExpert

An assistant replying to any question related to the Mac platform: macOS, computers and apps. Visit macexpert.io for human assistance.

Aws Guru

Your friendly coworker in AWS troubleshooting, offering precise, bullet-point advice. Leave feedback: https://dlmdby03vet.typeform.com/to/VqWNt8Dh

Tech Senior Helper

Warm tech support for seniors, with calming strategies, patient and helpful.

GC Method Developer

Provides concise GC troubleshooting and method development advice that is easy to implement.