Best AI tools for "Train Models Faster"

20 - AI tool Sites

Bifrost AI

Bifrost AI is a data generation engine designed for AI and robotics applications. It enables users to train and validate AI models faster by generating physically accurate synthetic datasets in 3D simulations, eliminating the need for real-world data. The platform offers pixel-perfect labels, scenario metadata, and a simulated 3D world to enhance AI understanding. Bifrost AI empowers users to create new scenarios and datasets rapidly, stress test AI perception, and improve model performance. It is built for teams at every stage of AI development, offering features like automated labeling, class imbalance correction, and performance enhancement.

Synthesis AI

Synthesis AI is a synthetic data platform that enables more capable and ethical computer vision AI. It provides on-demand labeled images and videos, photorealistic images, and 3D generative AI to help developers build better models faster. Synthesis AI's products include Synthesis Humans, which allows users to create detailed images and videos of digital humans with rich annotations; Synthesis Scenarios, which enables users to craft complex multi-human simulations across a variety of environments; and a range of applications for industries such as ID verification, automotive, avatar creation, virtual fashion, AI fitness, teleconferencing, visual effects, and security.

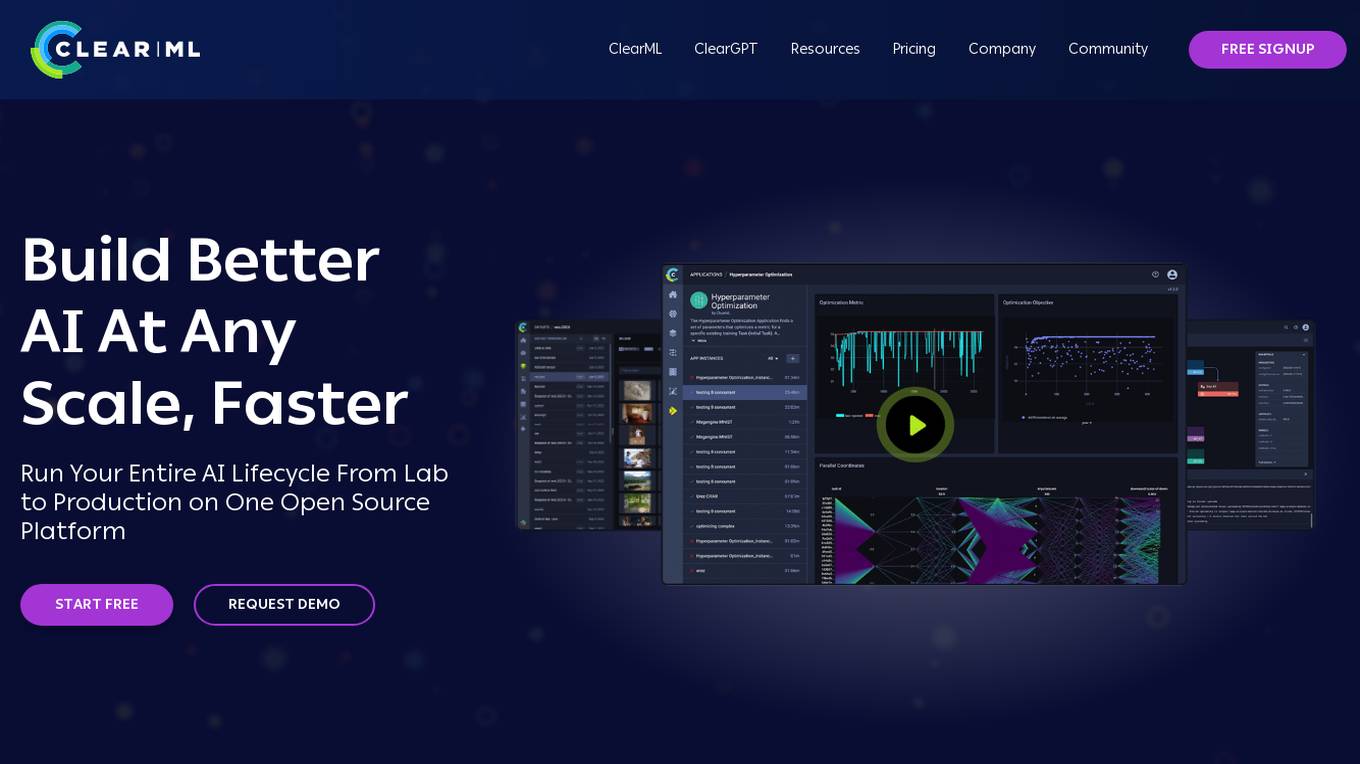

ClearML

ClearML is an open-source, end-to-end platform for continuous machine learning (ML). It provides a unified platform for data management, experiment tracking, model training, deployment, and monitoring. ClearML is designed to make it easy for teams to collaborate on ML projects and to ensure that models are deployed and maintained in a reliable and scalable way.

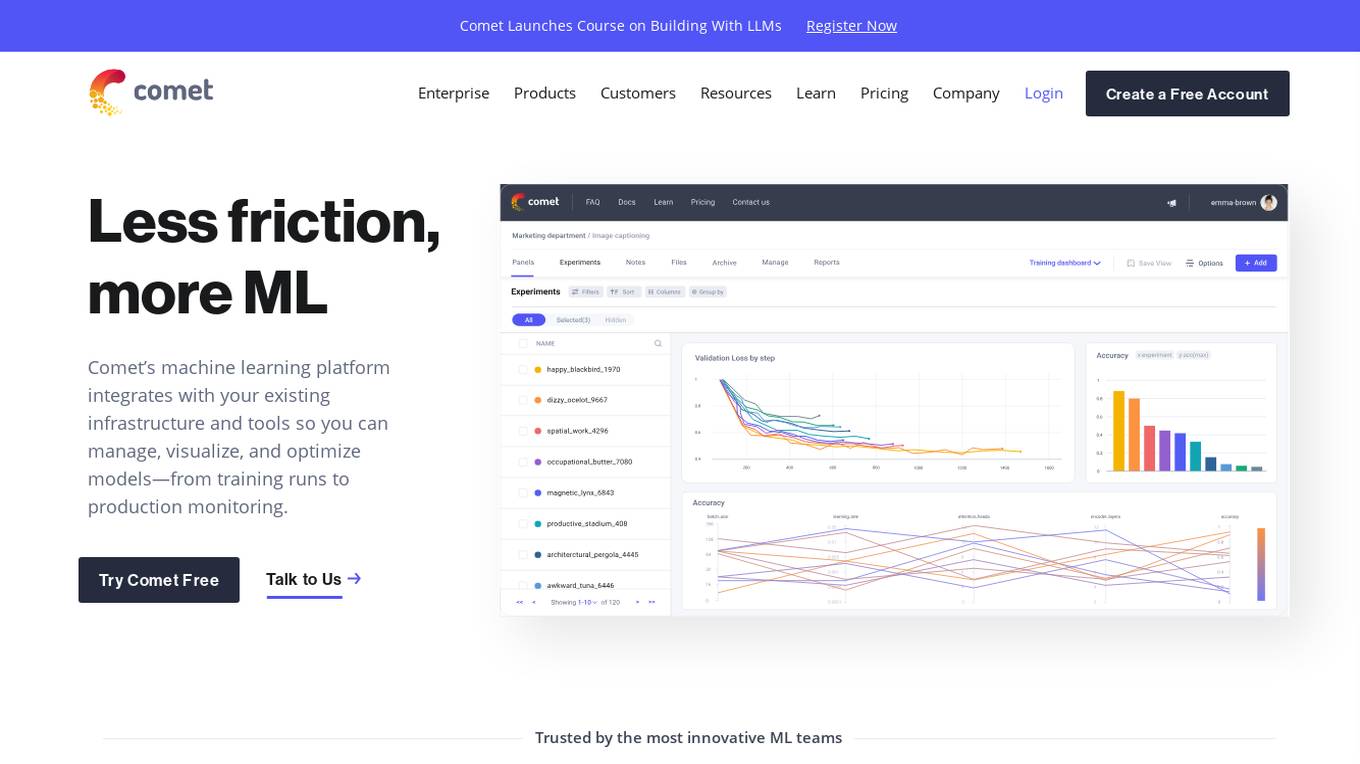

Comet ML

Comet ML is a machine learning platform that integrates with your existing infrastructure and tools so you can manage, visualize, and optimize models—from training runs to production monitoring.

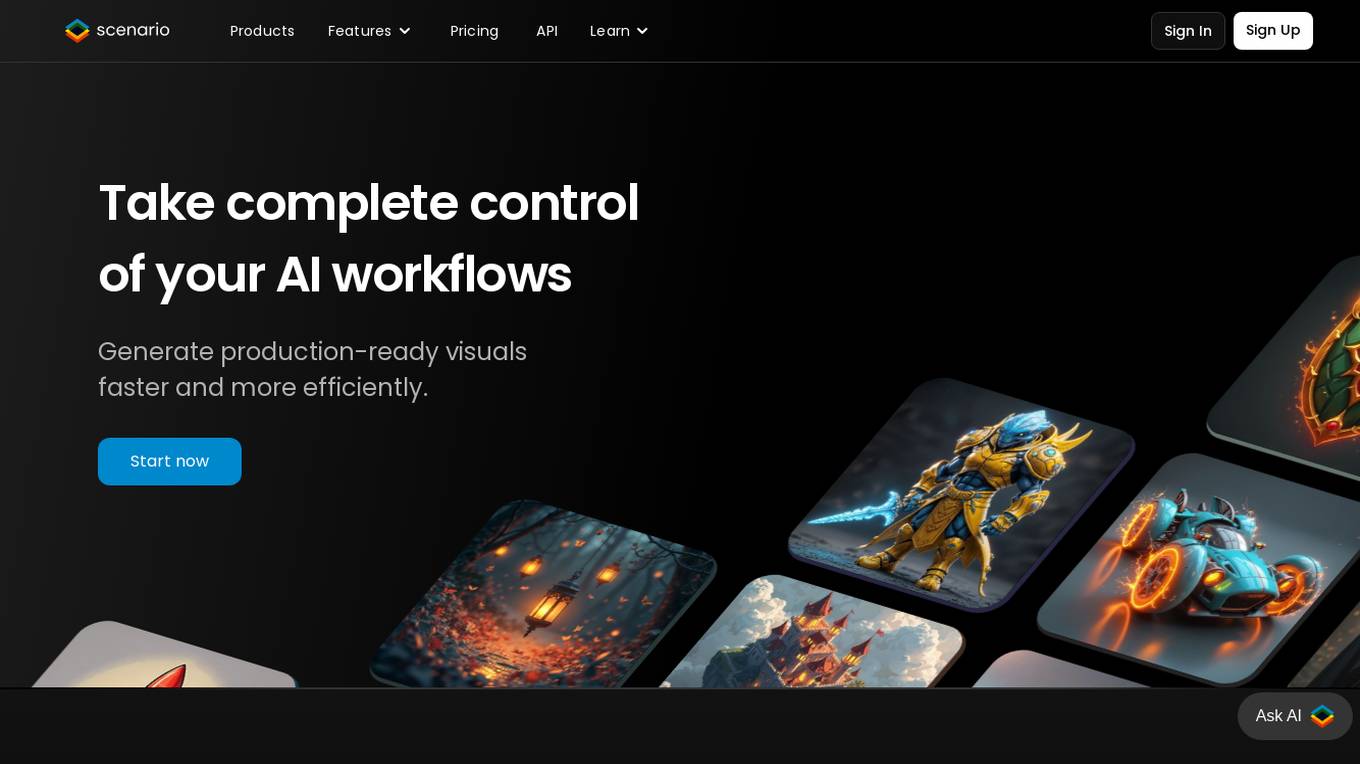

Scenario

Scenario is an AI tool designed to empower creators and marketers by providing unparalleled control over AI workflows. It allows users to generate production-ready visuals faster and more efficiently, streamlining workflows and enhancing creativity. With advanced features like custom AI model training, seamless integration, and full control over outputs, Scenario revolutionizes the process of asset ideation and creation. The tool is API-first and can be easily integrated into diverse workflows, design software, and game engines, making it a versatile solution for various industries.

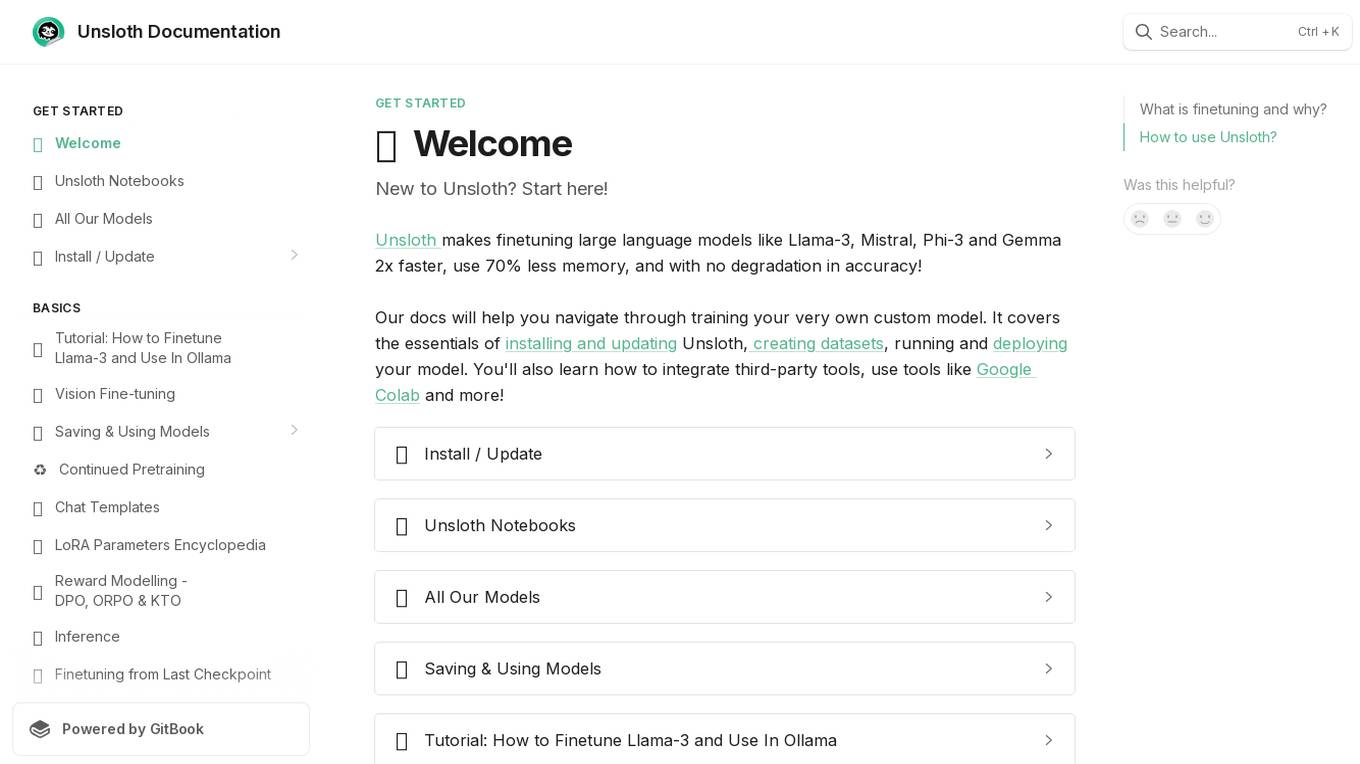

Unsloth

Unsloth is an AI tool for fine-tuning large language models such as Llama-3, Mistral, Phi-3, and Gemma. It is designed to make fine-tuning 2x faster while using 70% less memory, with no degradation in accuracy. The tool provides documentation that walks users through training their custom models, covering essentials such as installing and updating Unsloth, creating datasets, and running and deploying models. Users can also integrate third-party tools and use platforms like Google Colab.

V7

V7 is an AI data engine for computer vision and generative AI. It provides a multimodal automation tool that helps users label data 10x faster, power AI products via API, build AI + human workflows, and reach 99% AI accuracy. V7's platform includes features such as automated annotation, DICOM annotation, dataset management, model management, image annotation, video annotation, document processing, and labeling services.

Proscia

Proscia is a leading provider of digital pathology solutions for the modern laboratory. Its flagship product, Concentriq, is an enterprise pathology platform that enables anatomic pathology laboratories to achieve 100% digitization and deliver faster, more precise results. Proscia also offers a range of AI applications that can be used to automate tasks, improve diagnostic accuracy, and accelerate research. The company's mission is to perfect cancer diagnosis with intelligent software that changes the way the world practices pathology.

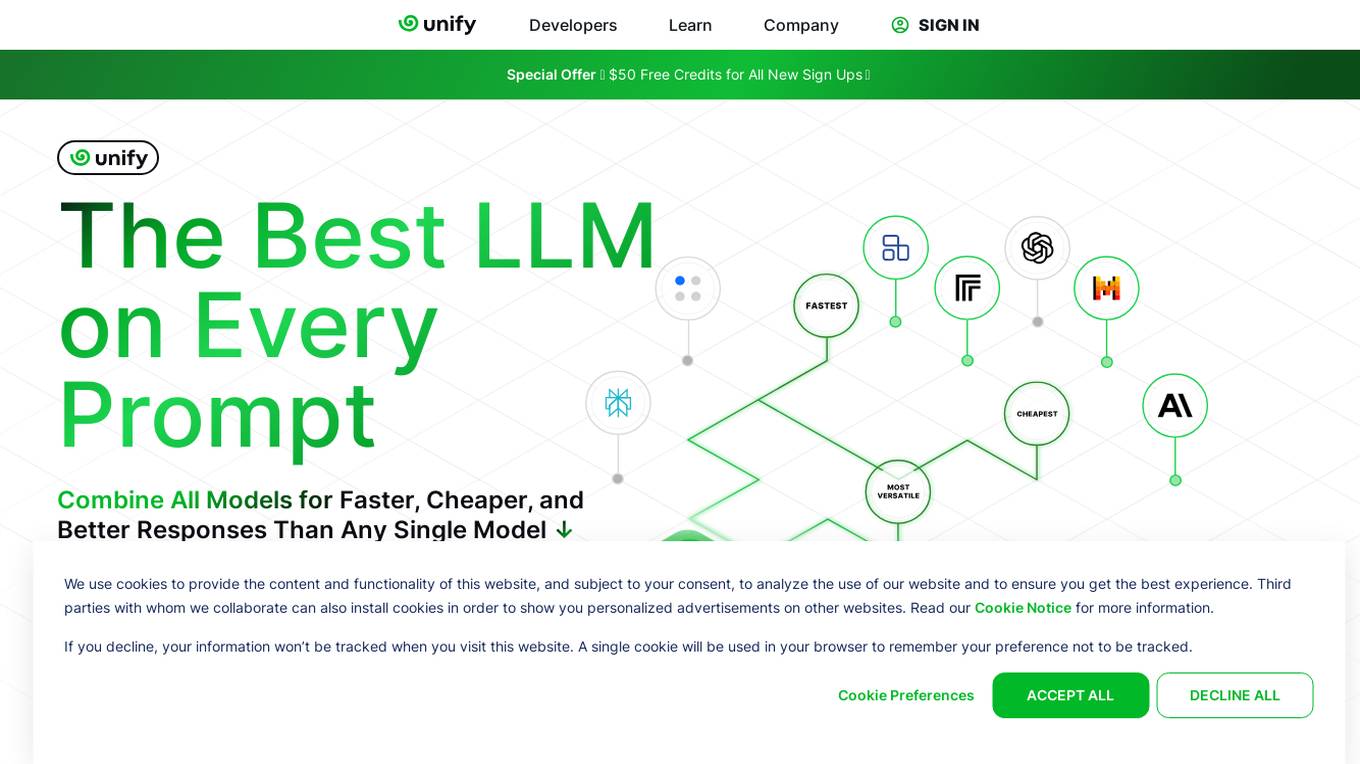

Unify

Unify is an AI tool that offers a unified platform for accessing and comparing large language models (LLMs) from different providers. It allows users to combine models for faster, cheaper, and better responses, optimizing for quality, speed, and cost-efficiency. Unify simplifies the complex task of selecting the best LLM by providing transparent benchmarks, personalized routing, and performance optimization tools.

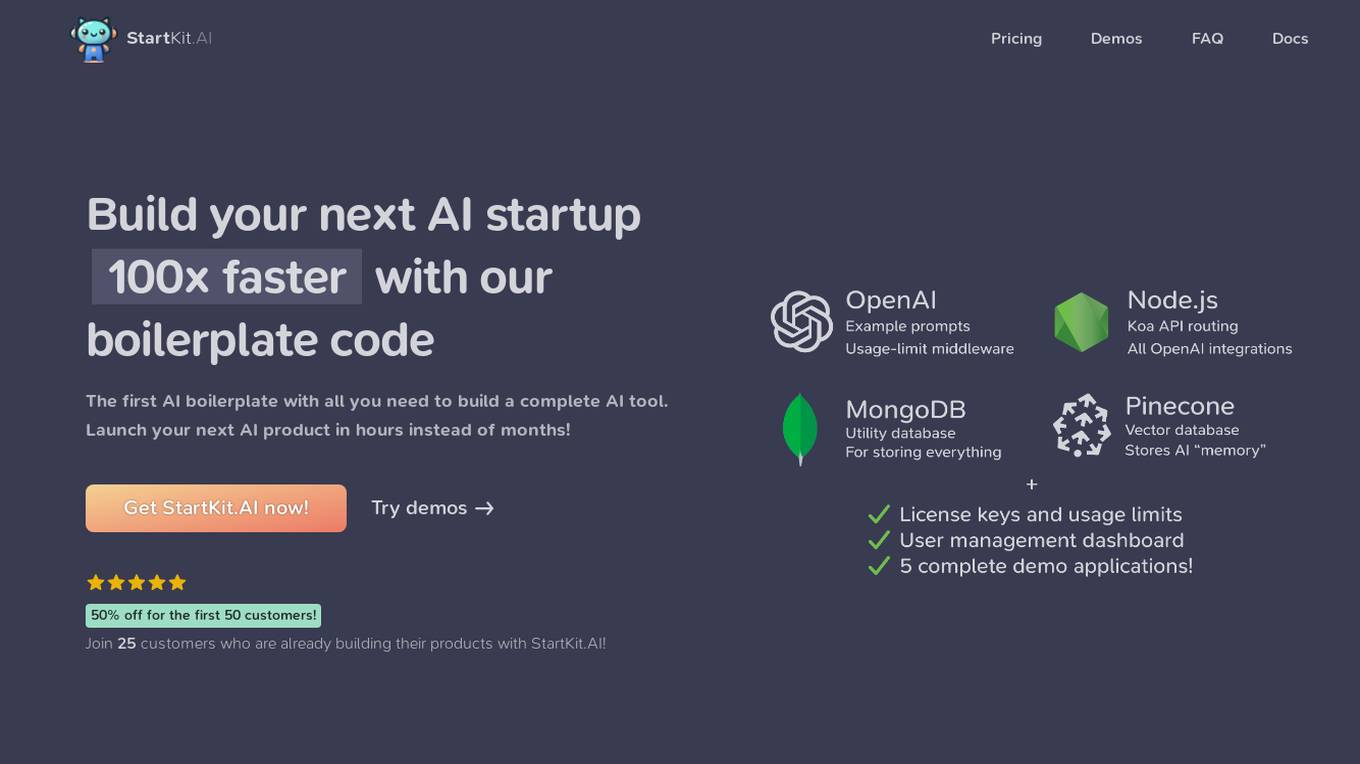

StartKit.AI

StartKit.AI is boilerplate code for AI products that helps users build their AI startups 100x faster. It includes pre-built REST API routes for all common AI functionality, a pre-configured Pinecone setup for text embeddings and Retrieval-Augmented Generation (RAG) for chat endpoints, and five React demo apps to help users get started quickly. StartKit.AI also provides license key and magic link authentication, user and API limit management, and full documentation for all its code. Additionally, users get access to setup guides and one year of updates.

SupportGuy

SupportGuy is a customer support platform powered by ChatGPT. It allows businesses to create Slack bots that are trained on their knowledge base, enabling them to resolve customer queries 10x faster. The platform is easy to set up and use, and it offers a variety of features and advantages that make it a valuable tool for businesses of all sizes.

Paperspace

Paperspace is an AI tool for developing, training, and deploying AI models of any size and complexity. It offers a cloud GPU platform for accelerated computing, with features such as GPU cloud workflows, machine learning solutions, GPU infrastructure, virtual desktops, gaming, rendering, 3D graphics, and simulation. Paperspace provides an abstraction layer that lets individuals and organizations focus on building AI applications, offering low-cost GPUs with per-second billing, infrastructure abstraction, job scheduling, resource provisioning, and collaboration tools.

H2O.ai

H2O.ai is a leading AI platform that offers a convergence of predictive and generative AI solutions for private and protected data. The platform provides a wide range of AI agents, digital assistants, and business insights tools for various industries and use cases. With a focus on model building, data science, and enterprise development, H2O.ai empowers users to accelerate model development, automate workflows, and deploy AI applications securely on-premises or in the cloud.

Pandio

Pandio is an AI orchestration platform that simplifies data pipelines to harness the power of AI. It offers cloud-native managed solutions to connect systems, automate data movement, and accelerate machine learning model deployment. Pandio's AI-driven architecture orchestrates models, data, and ML tools to drive AI automation and data-driven decisions faster. The platform is designed for price-performance, offering data movement at high speed and low cost, with near-infinite scalability and compatibility with any data, tools, or cloud environment.

Dialogflow

Dialogflow is a natural language processing platform that allows developers to build conversational interfaces for applications. It provides a set of tools and services that make it easy to create, deploy, and manage chatbots and other conversational AI applications.

Flowshot

Flowshot is an AI plugin for Google Sheets that allows users to supercharge their spreadsheets with AI. With Flowshot, users can work faster with AI prompts, autocomplete repetitive tasks, build custom AI models without code, and generate formulas and AI images. Flowshot is used by organizations of all shapes and sizes and has been rated 5 stars by its customers.

Google for Developers

Google for Developers provides developers with tools, resources, and documentation to build apps for Android, Chrome, ChromeOS, Cloud, Firebase, Flutter, Google AI Studio, Google Maps Platform, Google Workspace, TensorFlow, and YouTube. It also offers programs and events for developers to learn and connect with each other.

Amazon Web Services (AWS)

Amazon Web Services (AWS) is a comprehensive, evolving cloud computing platform from Amazon that provides a broad set of global compute, storage, database, analytics, application, and deployment services that help organizations move faster, lower IT costs, and scale applications. With AWS, you can use as many or as few of its services as you need, and scale up or down as required with only a few minutes' notice. AWS has a global network of regions and availability zones, so you can deploy your applications and data in the locations that are optimal for you.

Fathom5

Fathom5 is a company that specializes in the intersection of AI and industrial systems. It offers a range of products and services to help customers build more resilient, flexible, and efficient industrial systems. Fathom5 takes a security-first approach to cyber-physical system design, which means that security is built into every stage of the development process, from ideation to engineering to testing to deployment. This approach has been proven to achieve higher system resiliency and faster regulatory compliance at a reduced cost.

1 - Open Source AI Tools

neural-compressor

Intel® Neural Compressor is an open-source Python library that supports popular model compression techniques such as quantization, pruning (sparsity), distillation, and neural architecture search on mainstream frameworks such as TensorFlow, PyTorch, ONNX Runtime, and MXNet. It provides key features, typical examples, and open collaborations, including support for a wide range of Intel hardware, validation of popular LLMs, and collaboration with cloud marketplaces, software platforms, and open AI ecosystems.

20 - OpenAI GPTs

Instructor GCP ML

Trainer for the GCP ML Engineer certification, with detailed answers and explanations.

ChatXGB

GPT chatbot that helps you with technical questions related to the XGBoost algorithm and library.

HuggingFace Helper

A witty yet succinct guide for HuggingFace, offering technical assistance on using the platform - based on their Learning Hub

TensorFlow Oracle

I'm an expert in TensorFlow, providing detailed, accurate guidance for all skill levels.

TonyAIDeveloperResume

Chat with my resume to see if I am a good fit for your AI related job.