Best AI tools for< Text Processing >

20 - AI tool Sites

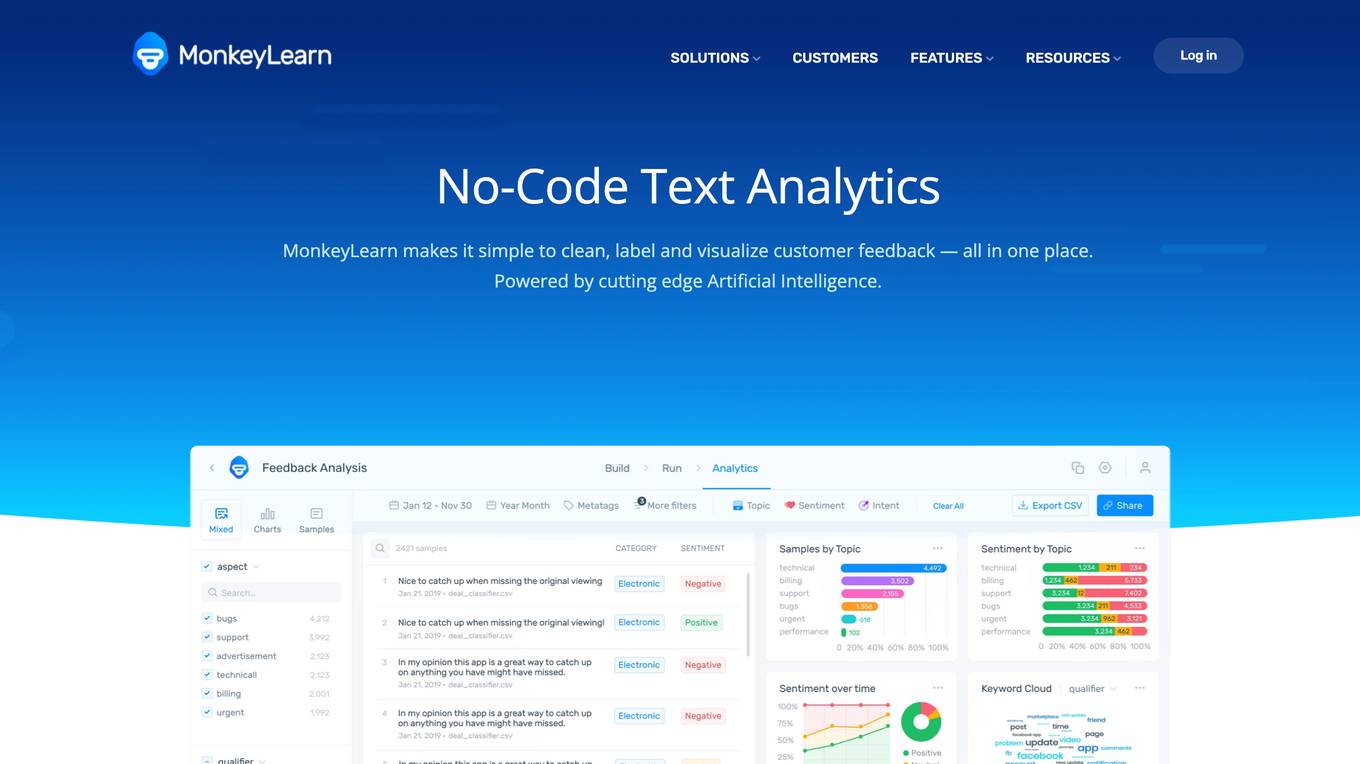

MonkeyLearn

MonkeyLearn is an AI tool that specializes in text processing. It offers a range of features for text classification, extraction, data analysis, and more. Users can build custom models, process data manually or automatically, and integrate the tool into their workflows. MonkeyLearn provides advanced settings for custom models and ensures user data security and privacy compliance.

NLTK

NLTK (Natural Language Toolkit) is a leading platform for building Python programs to work with human language data. It provides easy-to-use interfaces to over 50 corpora and lexical resources such as WordNet, along with a suite of text processing libraries for classification, tokenization, stemming, tagging, parsing, and semantic reasoning, wrappers for industrial-strength NLP libraries, and an active discussion forum. Thanks to a hands-on guide introducing programming fundamentals alongside topics in computational linguistics, plus comprehensive API documentation, NLTK is suitable for linguists, engineers, students, educators, researchers, and industry users alike.

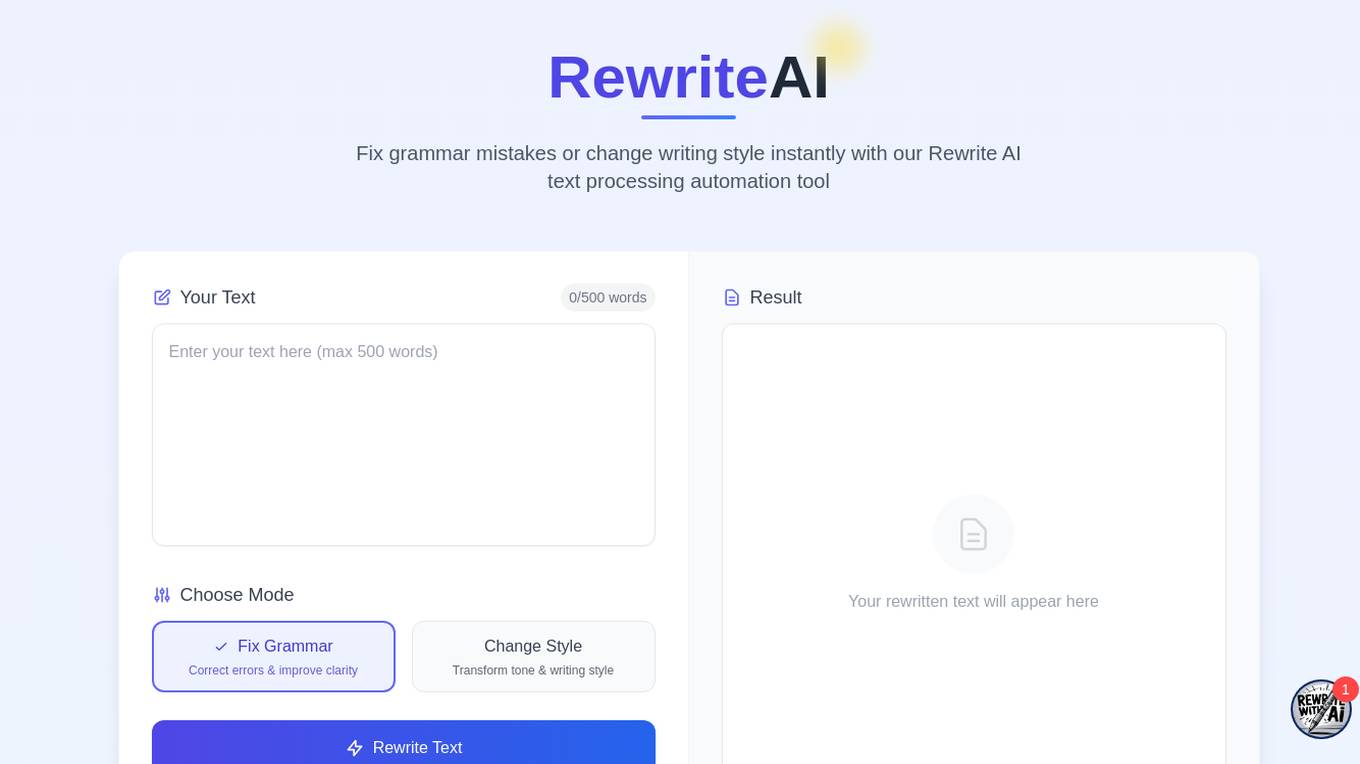

Rewrite AI

Rewrite AI is an advanced text processing automation tool that utilizes cutting-edge AI technology to fix grammar mistakes, improve clarity, and transform writing style instantly. Users can choose from over 30 writing styles or define a custom tone to achieve perfect results. The tool provides instant results, saving time and effort on editing tasks. Rewrite AI is designed to enhance the quality of written content efficiently and effectively.

iTextMaster

iTextMaster is an AI-powered tool that allows users to analyze, summarize, and chat with text-based documents, including PDFs and web pages. It utilizes ChatGPT technology to provide intelligent answers to questions and extract key information from documents. The tool is designed to simplify text processing, improve understanding efficiency, and save time. iTextMaster supports multiple languages and offers a user-friendly interface for easy navigation and interaction.

1minAI

1minAI is a free all-in-one AI application that offers various AI features for text, image, audio, and video processing. It provides tools like image generation, text removal, background replacement, and more. With no AI training required, the platform ensures user data privacy. Users can access top AI tools for tasks like content creation, design, social media management, and more. The application offers reasonable pricing plans with no hidden fees and secure payment options. Users can earn free credits through daily visits, reviews, and referrals.

GetDigest

GetDigest is an AI-powered tool that provides lightning-fast document summarization. It can analyze web content and text documents in over 33 languages, summarizing them efficiently by ignoring irrelevant information. The technology is designed to help users process information more effectively, saving time and enhancing productivity. GetDigest offers businesses the opportunity to integrate its technology into their own infrastructure or software projects, supporting various text formats, web environments, archives, emails, and image formats.

Atua

Atua is an AI tool designed to provide seamless access to ChatGPT on Mac devices. It allows users to easily interact with ChatGPT through custom commands and shortcut keys, enabling tasks such as text rephrasing, grammar correction, content expansion, and more. Atua offers effortless text selection and processing, conversation history saving, and limitless use cases for ChatGPT across various domains. The tool ensures user privacy by storing data locally and not monitoring or sending any analytics.

Chad AI

Chad AI is an AI-powered chatbot application that leverages advanced neural networks like GPT-4o, Midjourney, Stable Diffusion, and Dall-E to provide users with quick and efficient responses in Russian. The application supports text and code processing, content creation, image generation, and text improvement. It offers a range of subscription plans to cater to different user needs and preferences, ensuring seamless access to cutting-edge AI technologies for various tasks.

Torq AI

Torq AI is an advanced productivity assistant powered by ChatGPT, designed to revolutionize productivity through AI assistance. It offers features such as efficient email communication, powerful text processing, integrated ChatGPT and Google searches, and data insights. Torq AI aims to make users 200x more productive by providing seamless and interactive AI-powered solutions.

Macaify

Macaify is an AI application designed to bring AI capabilities to any Mac app with just a shortcut key. Users can unlock various AI smarts, customize predefined robots, and access over 1000 robot templates for text processing, code generation, and automation tasks. The application allows for mouse-free operation and offers features like generating images, searching images, converting text to speech files, bridging system and internet interfaces, processing web URLs, and searching the latest internet content. Macaify is free to use, with different pricing plans offering additional AI capabilities and support.

GPT-4O

GPT-4O is a free all-in-one OpenAI tool that offers advanced AI capabilities for online solutions. It enhances productivity, creativity, and problem-solving by providing real-time text, vision, and audio processing. With features like instantaneous interaction, integrated multimodal processing, and advanced emotion detection, GPT-4O revolutionizes user experiences across various industries. Its broad accessibility democratizes access to cutting-edge AI technology, empowering users globally.

Rizemail

Rizemail is an AI-powered email summarization tool that helps users quickly get to the core of their unread newsletters, long email threads, and cluttered commercial communications. By forwarding an email to [email protected], the tool uses AI to summarize the content and returns the key information you need, all within your inbox. Rizemail aims to save users time by providing fast and secure email summarization services, with a focus on user privacy and convenience.

Medallia

Medallia is a real-time text analytics software that offers comprehensive feedback capture, role-based reporting, AI and analytics capabilities, integrations, pricing flexibility, enterprise-grade security, and solutions for customer experience, employee experience, contact center, and market research. It provides omnichannel text analytics powered by AI to uncover high-impact insights and enable users to identify emerging trends and key insights at scale. Medallia's text analytics platform supports workflows, event analytics, real-time actions, natural language understanding, out-of-the-box topic models, customizable KPIs, and omnichannel analytics for various industries.

ChatGPT4o

ChatGPT4o is OpenAI's latest flagship model, capable of processing text, audio, image, and video inputs, and generating corresponding outputs. It offers both free and paid usage options, with enhanced performance in English and coding tasks, and significantly improved capabilities in processing non-English languages. ChatGPT4o includes built-in safety measures and has undergone extensive external testing to ensure safety. It supports multimodal inputs and outputs, with advantages in response speed, language support, and safety, making it suitable for various applications such as real-time translation, customer support, creative content generation, and interactive learning.

LLM Quality Beefer-Upper

LLM Quality Beefer-Upper is an AI tool designed to enhance the quality and productivity of LLM responses by automating critique, reflection, and improvement. Users can generate multi-agent prompt drafts, choose from different quality levels, and upload knowledge text for processing. The application aims to maximize output quality by utilizing the best available LLM models in the market.

AI Bank Statement Converter

The AI Bank Statement Converter is an industry-leading tool designed for accountants and bookkeepers to extract data from financial documents using artificial intelligence technology. It offers features such as automated data extraction, integration with accounting software, enhanced security, streamlined workflow, and multi-format conversion capabilities. The tool revolutionizes financial document processing by providing high-precision data extraction, tailored for accounting businesses, and ensuring data security through bank-level encryption. It also offers Intelligent Document Processing (IDP) using AI and machine learning techniques to process structured, semi-structured, and unstructured documents.

GPTKit

GPTKit is a free AI text generation detection tool that utilizes six different AI-based content detection techniques to identify and classify text as either human- or AI-generated. It provides reports on the authenticity and reality of the analyzed content, with an accuracy of approximately 93%. The first 2048 characters in every request are free, and users can register for free to get 2048 characters/request.

Rytar

Rytar is an AI-powered writing platform that helps users generate unique, relevant, and high-quality content in seconds. It uses state-of-the-art AI writing models to generate articles, blog posts, website pages, and other types of content from just a headline or a few keywords. Rytar is designed to help users save time and effort in the content creation process, and to produce content that is optimized for SEO and readability.

VoiceCanvas

VoiceCanvas is an advanced AI-powered multilingual voice synthesis and voice cloning platform that offers instant text-to-speech in over 40 languages. It utilizes cutting-edge AI technology to provide high-quality voice synthesis with natural intonation and rhythm, along with personalized voice cloning for more human-like AI speech. Users can upload voice samples, have AI analyze voice features, generate personalized AI voice models, input text for conversion, and apply the cloned AI voice model to generate natural voice speech. VoiceCanvas is highly praised by language learners, content creators, teachers, business owners, voice actors, and educators for its exceptional voice quality, multiple language support, and ease of use in creating voiceovers, learning materials, and podcast content.

ConversAI

ConversAI is an AI-powered chat assistant designed to enhance online communication. It uses natural language processing and machine learning to understand and respond to messages in a conversational manner. With ConversAI, users can quickly generate personalized responses, summarize long messages, detect the tone of conversations, communicate in multiple languages, and even add GIFs to their replies. It integrates seamlessly with various messaging platforms and tools, making it easy to use and efficient. ConversAI helps users save time, improve their communication skills, and have more engaging conversations online.

1 - Open Source AI Tools

openvino

OpenVINO™ is an open-source toolkit for optimizing and deploying AI inference. It provides a common API to deliver inference solutions on various platforms, including CPU, GPU, NPU, and heterogeneous devices. OpenVINO™ supports pre-trained models from Open Model Zoo and popular frameworks like TensorFlow, PyTorch, and ONNX. Key components of OpenVINO™ include the OpenVINO™ Runtime, plugins for different hardware devices, frontends for reading models from native framework formats, and the OpenVINO Model Converter (OVC) for adjusting models for optimal execution on target devices.

20 - OpenAI Gpts

kz image 2 typescript 2 image

Generate a Structured description in typescript format from the image and generate an image from that description. and OCR

Notes Master

With this bot process of making notes will be easier. Send your text and wait for the result

Regex Wizard

Generate and explain regex patterns from your description, it support English and Chinese.

Alien meaning?

What is Alien lyrics meaning? Alien singer:P. Sears, J. Sears,album:Modern Times ,album_time:1981. Click The LINK For More ↓↓↓

Instruction Assistant Operating Director

Full step by step guidance and copy & paste text for developing assistants with specific use cases.

📰 Simplify Text Hero (5.0⭐)

Transforms complex texts into simple, understandable language.