Best AI tools for Test AI Systems

20 - AI Tool Sites

Lakera

Lakera is an AI security platform that safeguards GenAI applications against security threats. It provides real-time security controls, stress-testing for AI systems, and protection against prompt attacks, data loss, and insecure content. The platform is powered by a proprietary AI threat database and aligns with global AI security frameworks. Lakera is aimed at security teams, product teams, and LLM builders who need to secure their AI applications.

Bifrost AI

Bifrost AI is a data generation engine designed for AI and robotics applications. It enables users to train and validate AI models faster by generating physically accurate synthetic datasets in 3D simulations, eliminating the need for real-world data. The platform offers pixel-perfect labels, scenario metadata, and a simulated 3D world to enhance AI understanding. Bifrost AI empowers users to create new scenarios and datasets rapidly, stress test AI perception, and improve model performance. It is built for teams at every stage of AI development, offering features like automated labeling, class imbalance correction, and performance enhancement.

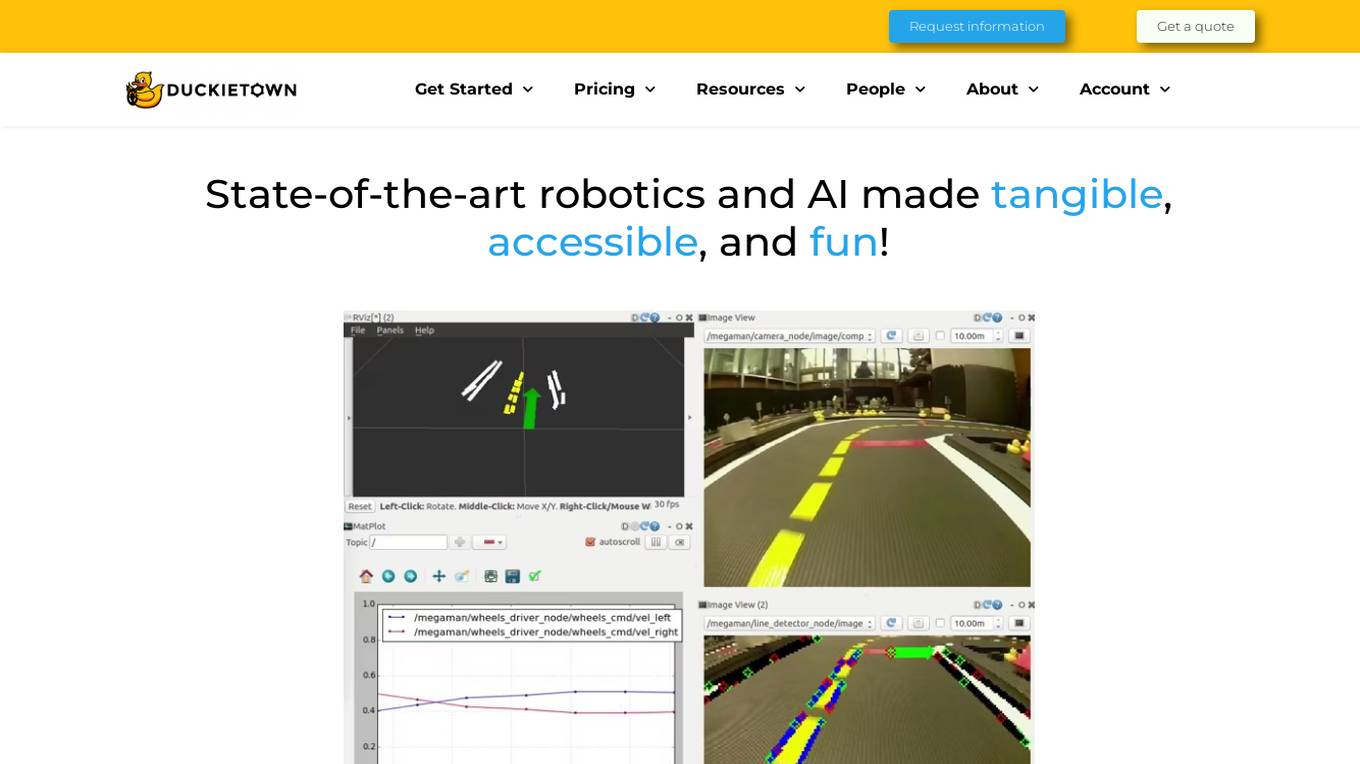

Duckietown

Duckietown is a platform for delivering cutting-edge robotics and AI learning experiences. It offers teaching resources to instructors, hands-on activities to learners, an accessible research platform to researchers, and a state-of-the-art ecosystem for professional training. Duckietown's mission is to make robotics and AI education state-of-the-art, hands-on, and accessible to all.

echowin

echowin is an AI Voice Agent Builder Platform that enables businesses to create AI agents for calls, chat, and Discord. It offers a comprehensive solution for automating customer support with features like Agentic AI logic and reasoning, support for over 30 languages, parallel call answering, and 24/7 availability. The platform allows users to build, train, test, and deploy AI agents quickly and efficiently, without compromising on capabilities or scalability. With a focus on simplicity and effectiveness, echowin empowers businesses to enhance customer interactions and streamline operations through cutting-edge AI technology.

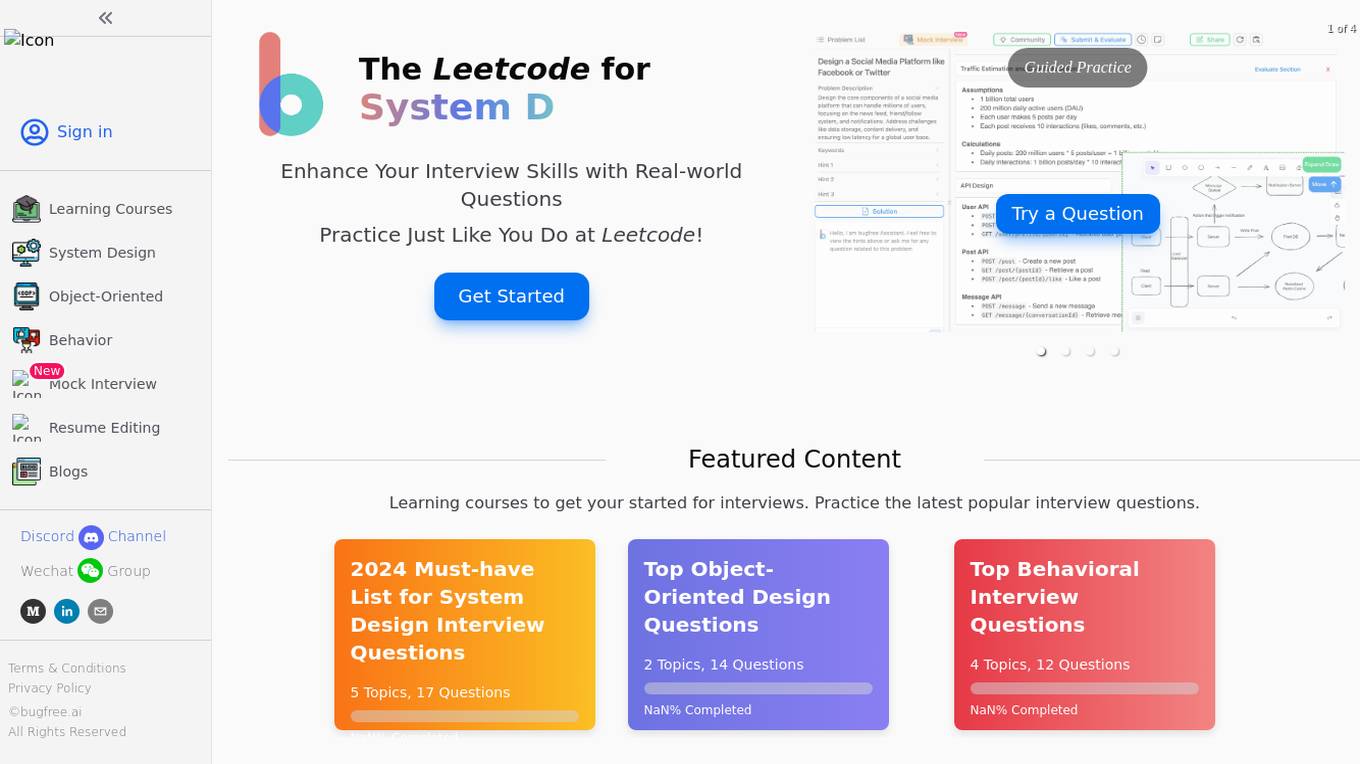

BugFree.ai

BugFree.ai is an AI-powered platform for practicing system design and behavioral interviews, in the way LeetCode is used for coding interviews. It helps users prepare for technical interviews with mock interviews, real-time feedback, and personalized study plans. With BugFree.ai, users can improve their problem-solving skills and gain confidence in tackling complex interview questions.

Assessment Systems

Assessment Systems is an online testing platform that provides cost-effective, AI-driven solutions to develop, deliver, and analyze high-stakes exams. It lets users build and deliver exams faster using modern psychometrics and AI techniques such as computerized adaptive testing, multistage testing, and automated item generation. Exams can be delivered on paper, online without proctoring, online with proctoring, or in test centers (the customer's own or those of Assessment Systems). Assessment Systems also offers item banking software for collaborative item development, with versioning, user roles, metadata, workflow management, multimedia, automated item generation, and more.

Tonic.ai

Tonic.ai is a platform that allows users to build AI models on their unstructured data. It offers various products for software development and LLM development, including tools for de-identifying and subsetting structured data, scaling down data, handling semi-structured data, and managing ephemeral data environments. Tonic.ai focuses on standardizing, enriching, and protecting unstructured data, as well as validating RAG systems. The platform also provides integrations with relational databases, data lakes, NoSQL databases, flat files, and SaaS applications, ensuring secure data transformation for software and AI developers.

DeepUnit

DeepUnit is a software tool for automated unit testing. Developers can use it to check the quality and reliability of their code by automatically running tests that surface bugs and errors. The tool integrates with common development tooling such as npm and VS Code.

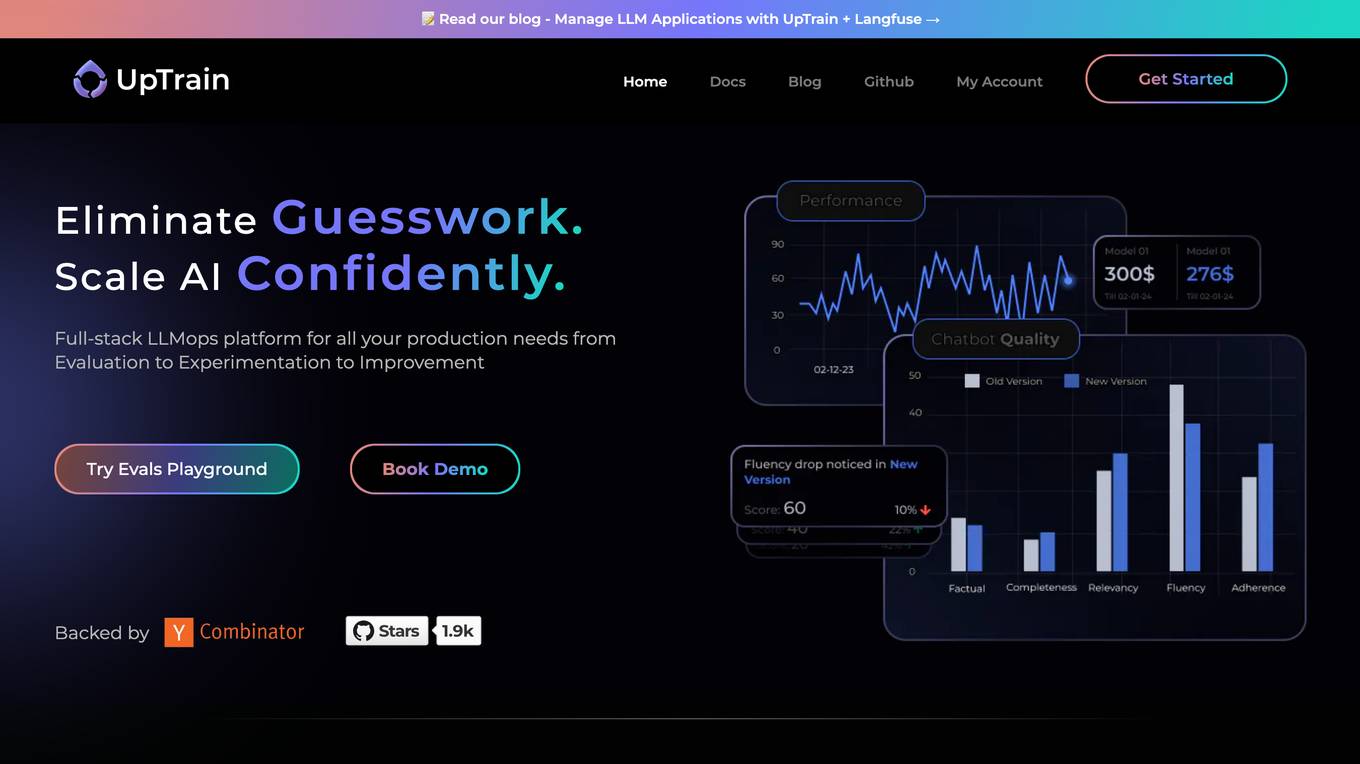

UpTrain

UpTrain is a full-stack LLMOps platform designed to help users scale AI with confidence by covering production needs from evaluation to experimentation to improvement. It offers diverse evaluations, automated regression testing, enriched datasets, and techniques for generating high-quality scores. UpTrain is open-source, built for developers, compliant with data governance needs, and cost-efficient. It provides precision metrics, task understanding, and safeguard systems, and covers a wide range of language features and quality aspects. The platform is suitable for developers, product managers, and business leaders looking to improve their LLM applications.

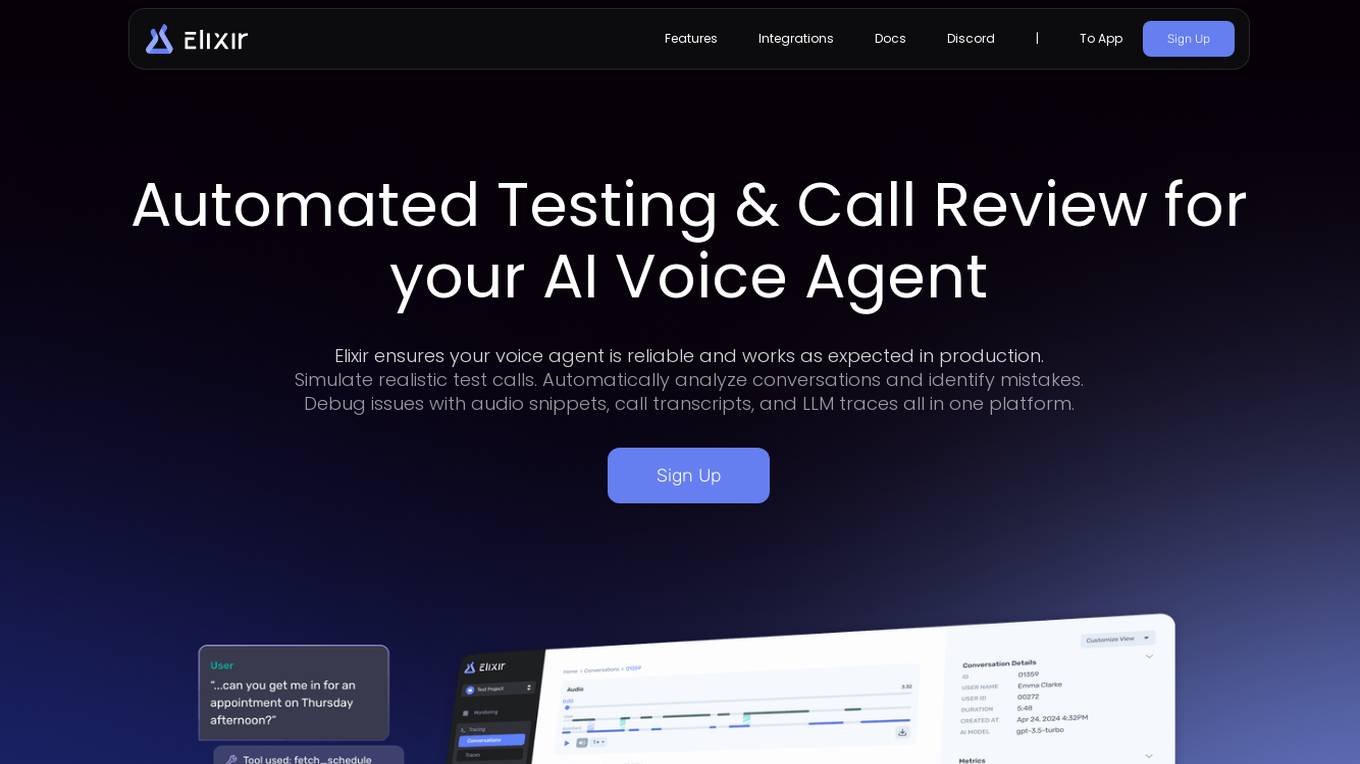

Elixir

Elixir is an AI tool designed for observability and testing of AI voice agents. It offers features such as automated testing, call review, monitoring, analytics, tracing, scoring, and reviewing. Elixir helps in simulating realistic test calls, analyzing conversations, identifying mistakes, and debugging issues with audio snippets and call transcripts. It provides detailed traces for complex abstractions, streamlines manual review processes, and allows for simulating thousands of calls for full test coverage. The tool is suitable for monitoring agent performance, detecting anomalies in real-time, and improving conversational systems through human-in-the-loop feedback.

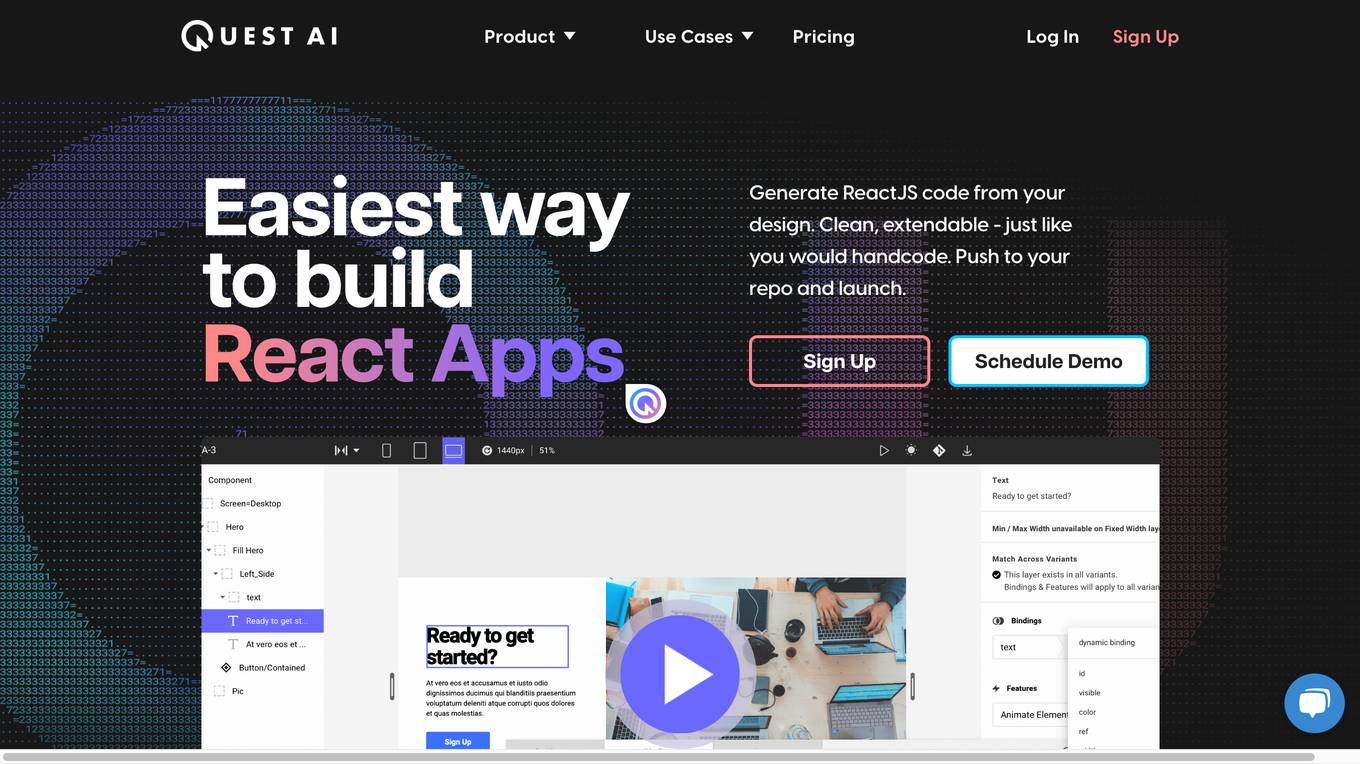

Quest

Quest is a web-based application that generates React code from designs. It uses AI models to produce real, usable code that accounts for the things professional developers care about. Users can build new applications and web pages, add to existing applications, and create component templates, design systems, and libraries. Quest is made for development and product teams and integrates with common design and dev tools.

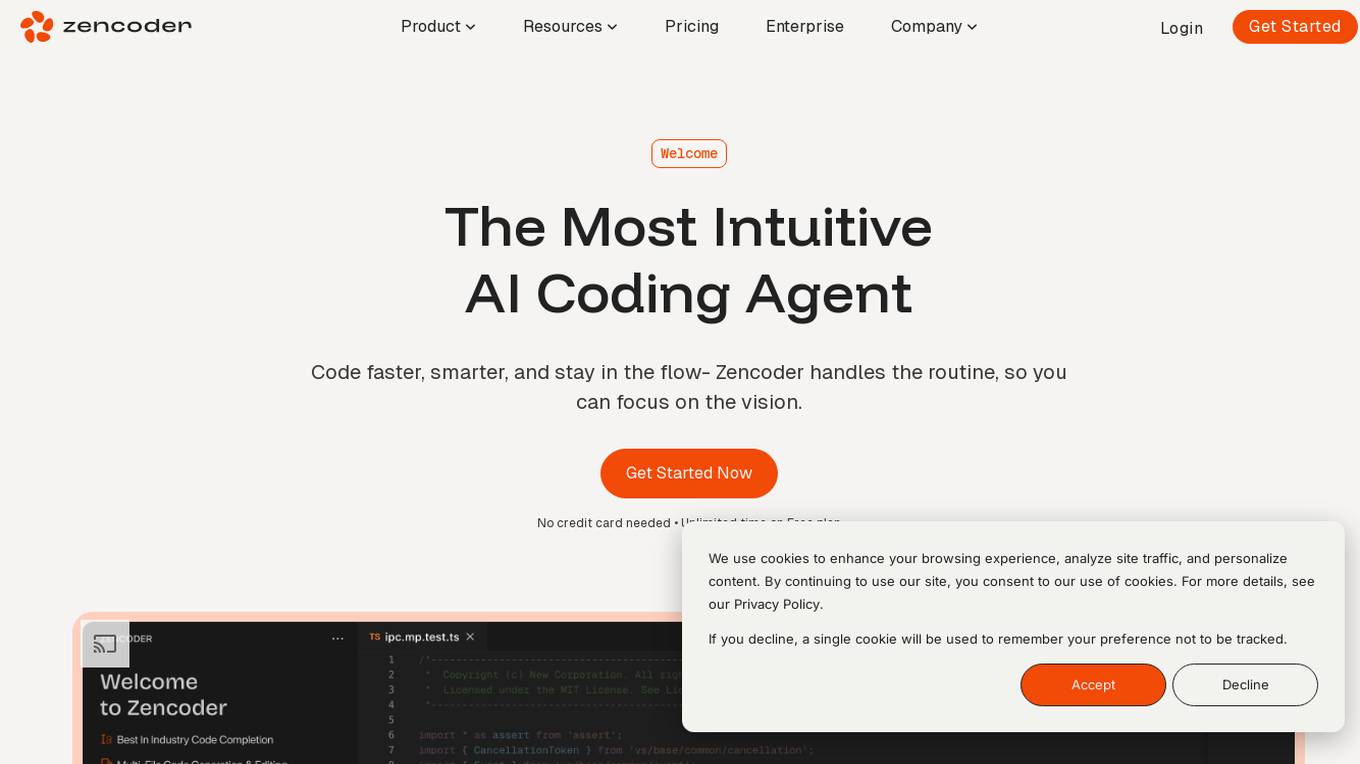

Zencoder

Zencoder is an intuitive AI coding agent designed to assist developers in coding tasks by leveraging advanced AI workflows and intelligent systems. It offers features like Repo Grokking for deep code insights, AI Agents for streamlining development processes, and capabilities such as code generation, chat assistance, code completion, and more. Zencoder aims to enhance software development efficiency, code quality, and project alignment by integrating seamlessly into developers' workflows.

Valispace

Valispace is an AI-powered platform that streamlines the entire engineering process, from requirements engineering to system design, test, verification, and validation. It modernizes classic engineering practices, enabling fast design iterations and automatic verifications. The platform assists in removing mundane and manual engineering tasks, saving engineering hours and enhancing collaboration among engineers and stakeholders.

Refraction

Refraction is an AI-powered code generation tool designed to help developers learn, improve, and generate code effortlessly. It offers a wide range of features such as bug detection, code conversion, function creation, CSP generation, CSS style conversion, debug statement addition, diagram generation, documentation creation, code explanation, code improvement, concept learning, CI/CD pipeline creation, SQL query generation, code refactoring, regex generation, style checking, type addition, and unit test generation. With support for 56 programming languages, Refraction is a versatile tool trusted by innovative companies worldwide to streamline software development processes using the magic of AI.

Riku

Riku is a no-code platform that allows users to build and deploy powerful generative AI for their business. With access to over 40 industry-leading LLMs, users can easily test different prompts to find just the right one for their needs. Riku's platform also allows users to connect siloed data sources and systems together to feed into powerful AI applications. This makes it easy for businesses to automate repetitive tasks, test ideas rapidly, and get answers in real-time.

Cognition

Cognition is an applied AI lab focused on reasoning. Its first product, Devin, is billed as the first AI software engineer. Cognition is a small team based in New York and the San Francisco Bay Area.

Robin

Robin by Mobile.dev is an AI-powered mobile app testing tool that allows users to test their mobile apps with confidence. It offers a simple yet powerful open-source framework called Maestro for testing mobile apps at high speed. With intuitive and reliable testing powered by AI, users can write rock-solid tests without extensive coding knowledge. Robin provides an end-to-end testing strategy, rapid testing across various devices and operating systems, and auto-healing of test flows using state-of-the-art AI models.

Hamming

Hamming is an AI tool designed to help automate voice agent testing and optimization. It offers features such as prompt optimization, automated voice testing, monitoring, and more. The platform allows users to test AI voice agents against simulated users, create optimized prompts, actively monitor AI app usage, and simulate customer calls to identify system gaps. Hamming is trusted by AI-forward enterprises and is built for inbound and outbound agents, including AI appointment scheduling, AI drive-through, AI customer support, AI phone follow-ups, AI personal assistant, and AI coaching and tutoring.

金数据AI考试 (Jinshuju AI Exam)

The website offers an AI testing system that allows users to generate test questions instantly. It features a smart question bank, rapid question generation, and immediate test creation. Users can try out various test questions, such as generating knowledge test questions for car sales, company compliance standards, and real estate tax rate knowledge. The system ensures each test paper has similar content and difficulty levels. It also provides random question selection to reduce cheating possibilities. Employees can access the test link directly, view test scores immediately after submission, and check incorrect answers with explanations. The system supports single sign-on via WeChat for employee verification and record-keeping of employee rankings and test attempts. The platform prioritizes enterprise data security with a three-level network security rating, ISO/IEC 27001 information security management system, and ISO/IEC 27701 privacy information management system.
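The idea of random question selection with comparable difficulty across papers can be sketched as stratified sampling from a question bank. This is only an illustration under assumed names: `build_paper`, the `difficulty` field, and the level labels are hypothetical and are not taken from the product.

```python
import random
from collections import defaultdict


def build_paper(bank, per_level, seed=None):
    """Draw a random paper with a fixed number of questions per difficulty level.

    `bank` is a list of dicts with a hypothetical "difficulty" key;
    `per_level` maps each difficulty label to how many questions to draw.
    """
    rng = random.Random(seed)

    # Group the bank by difficulty so each level is sampled independently.
    by_level = defaultdict(list)
    for question in bank:
        by_level[question["difficulty"]].append(question)

    paper = []
    for level, count in per_level.items():
        pool = by_level[level]
        if len(pool) < count:
            raise ValueError(
                f"not enough {level!r} questions: need {count}, have {len(pool)}"
            )
        # Sampling without replacement keeps questions unique within a paper.
        paper.extend(rng.sample(pool, count))

    # Shuffle so questions are not grouped by difficulty on the page.
    rng.shuffle(paper)
    return paper


# Toy bank: 6 easy, 6 medium, 4 hard questions.
bank = [
    {"id": i, "difficulty": level}
    for i, level in enumerate(["easy"] * 6 + ["medium"] * 6 + ["hard"] * 4)
]
blueprint = {"easy": 3, "medium": 2, "hard": 1}
paper_a = build_paper(bank, blueprint, seed=1)
paper_b = build_paper(bank, blueprint, seed=2)
```

Every paper drawn from the same blueprint has the same difficulty mix, while the specific questions vary from one employee to the next.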

aqua

aqua is a Quality Assurance (QA) management tool designed to streamline testing processes. Its features include an AI Copilot, bug reporting, test management, requirements management, user acceptance testing, and automation management. aqua serves industries including banking, insurance, manufacturing, government, technology, and healthcare, helping organizations improve testing productivity, software quality, and defect detection ratios. The tool integrates with popular platforms such as Jira, Jenkins, and JMeter, and offers both Cloud and On-Premise deployment options. With its AI-enhanced capabilities, aqua aims to make testing faster and less error-prone.

2 - Open Source AI Tools

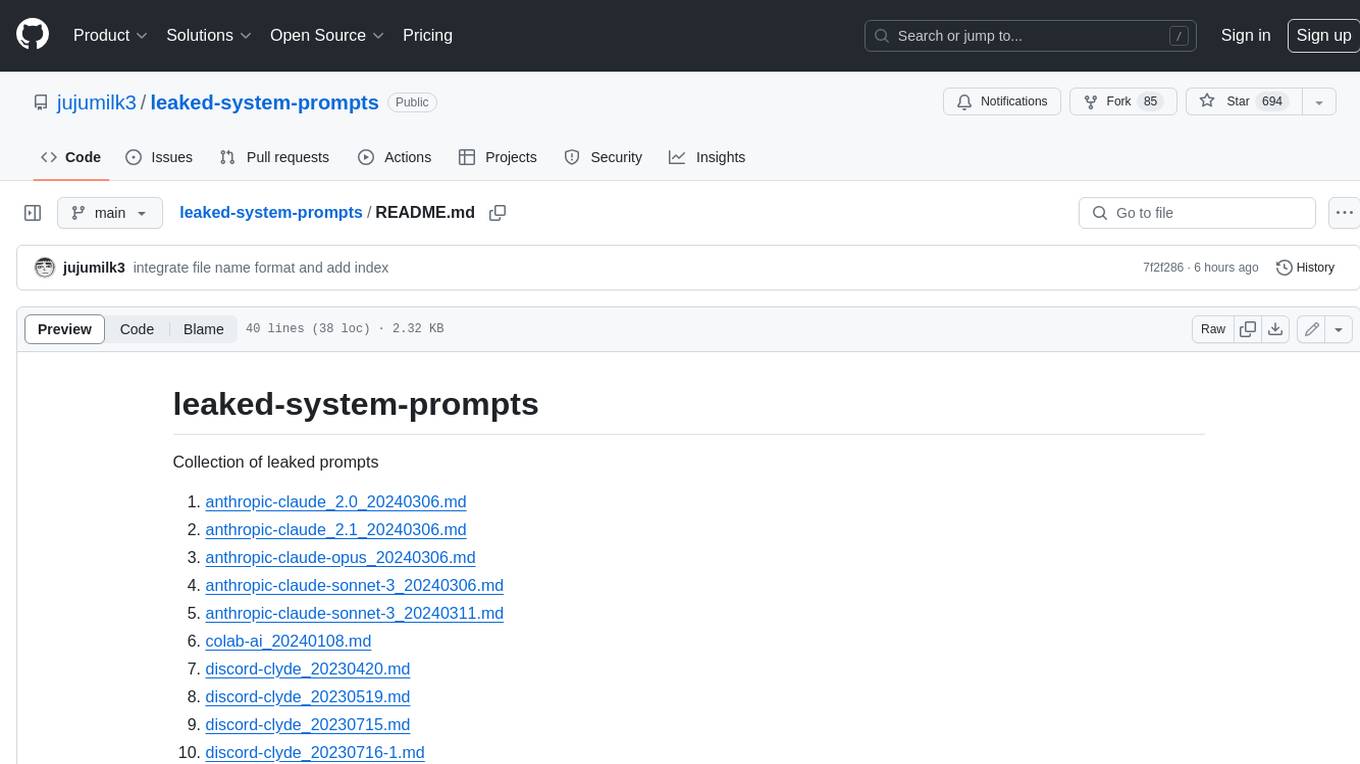

leaked-system-prompts

This repository contains a collection of leaked prompts for various AI systems, including Anthropic Claude, Discord Clyde, Google Gemini, Microsoft Bing Chat, OpenAI ChatGPT, and others. These prompts can be used to explore the capabilities and limitations of these AI systems and to gain insights into their inner workings.

evidently

Evidently is an open-source Python library designed for evaluating, testing, and monitoring machine learning (ML) and large language model (LLM) powered systems. It offers a wide range of functionalities, including working with tabular, text data, and embeddings, supporting predictive and generative systems, providing over 100 built-in metrics for data drift detection and LLM evaluation, allowing for custom metrics and tests, enabling both offline evaluations and live monitoring, and offering an open architecture for easy data export and integration with existing tools. Users can utilize Evidently for one-off evaluations using Reports or Test Suites in Python, or opt for real-time monitoring through the Dashboard service.
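To make "data drift detection" concrete, here is a minimal sketch of one common drift statistic, the Population Stability Index (PSI), written with the standard library only. It does not use or reproduce Evidently's API; the function name `psi`, the bin count, and the smoothing floor are assumptions chosen for illustration.

```python
import math


def psi(reference, current, bins=10):
    """Population Stability Index between two numeric samples.

    Bins are equal-width over the reference range; values outside that
    range are clamped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the range is zero

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref, cur = shares(reference), shares(current)
    # Each term is non-negative, so PSI is 0 only when the shares match.
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


reference = [i / 100 for i in range(1000)]   # roughly uniform on [0, 10)
shifted = [x + 5 for x in reference]         # same shape, moved right by 5

no_drift = psi(reference, list(reference))
drift = psi(reference, shifted)
```

A score of zero means the binned distributions match, and larger values indicate a bigger shift between the reference and current data.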

20 - OpenAI GPTs

AI Quiz Master

AI trivia expert, engaging and concise, focusing on AI history since the 1950s.

Generative AI Examiner

For "Generative AI Test". Examiner in Generative AI, posing questions and providing feedback.

Jailbreak Me: Code Crack-Up

This game combines humor and challenge, offering players a laugh-filled journey through the world of cybersecurity and AI.

Study Buddy

AI-powered test prep platform offering adaptive, interactive learning and progress tracking.

IQ Test Assistant

An AI conducting 30-question IQ tests, assessing and providing detailed feedback.

Test Case GPT

I will provide guidance on testing, verification, and validation for QA roles.

🎨🧠 ToonTrivia Mastermind 🤔🎬

Your go-to AI for a fun-filled trivia challenge on all things animated! From classic cartoons to modern animations, test your knowledge and learn fascinating facts! 🤓🎥✨

Inspection AI

Expert in testing, inspection, certification, compliant with OpenAI policies, developed on OpenAI.

Moot Master

A moot competition and trial prep companion. Test and improve your arguments, and predict your opponent's reaction.

IELTS AI Checker (Speaking and Writing)

Provides IELTS speaking and writing feedback and scores.

Vitest Expert Testing Framework Multilingual

Multilingual AI for Vitest unit testing management.

AI powered Tech Company

A replacement for your Product Manager, Engineering Manager, and your average Developer and Tester.