Best AI tools for< Test Ai Security >

20 - AI tool Sites

Lakera

Lakera is the world's most advanced AI security platform that offers cutting-edge solutions to safeguard GenAI applications against various security threats. Lakera provides real-time security controls, stress-testing for AI systems, and protection against prompt attacks, data loss, and insecure content. The platform is powered by a proprietary AI threat database and aligns with global AI security frameworks to ensure top-notch security standards. Lakera is suitable for security teams, product teams, and LLM builders looking to secure their AI applications effectively and efficiently.

VIDOC

VIDOC is an AI-powered security engineer that automates code review and penetration testing. It continuously scans and reviews code to detect and fix security issues, helping developers deliver secure software faster. VIDOC is easy to use, requiring only two lines of code to be added to a GitHub Actions workflow. It then takes care of the rest, providing developers with a tailored code solution to fix any issues found.

NodeZero™ Platform

Horizon3.ai Solutions offers the NodeZero™ Platform, an AI-powered autonomous penetration testing tool designed to enhance cybersecurity measures. The platform combines expert human analysis by Offensive Security Certified Professionals with automated testing capabilities to streamline compliance processes and proactively identify vulnerabilities. NodeZero empowers organizations to continuously assess their security posture, prioritize fixes, and verify the effectiveness of remediation efforts. With features like internal and external pentesting, rapid response capabilities, AD password audits, phishing impact testing, and attack research, NodeZero is a comprehensive solution for large organizations, ITOps, SecOps, security teams, pentesters, and MSSPs. The platform provides real-time reporting, integrates with existing security tools, reduces operational costs, and helps organizations make data-driven security decisions.

Face Symmetry Test

Face Symmetry Test is an AI-powered tool that analyzes the symmetry of facial features by detecting key landmarks such as eyes, nose, mouth, and chin. Users can upload a photo to receive a personalized symmetry score, providing insights into the balance and proportion of their facial features. The tool uses advanced AI algorithms to ensure accurate results and offers guidelines for improving the accuracy of the analysis. Face Symmetry Test is free to use and prioritizes user privacy and security by securely processing uploaded photos without storing or sharing data with third parties.

Traceable

Traceable is an intelligent API security platform designed for enterprise-scale security. It offers unmatched API discovery, attack detection, threat hunting, and infinite scalability. The platform provides comprehensive protection against API attacks, fraud, and bot security, along with API testing capabilities. Powered by Traceable's OmniTrace Engine, it ensures unparalleled security outcomes, remediation, and pre-production testing. Security teams trust Traceable for its speed and effectiveness in protecting API infrastructures.

Sider.ai

Sider.ai is an AI-powered platform that focuses on security verification for online connections. It ensures a safe browsing experience by reviewing the security of your connection before proceeding. The platform uses advanced algorithms to detect and prevent potential threats, providing users with peace of mind while browsing the internet.

Sider.ai

Sider.ai is a website that provides security verification services to protect against malicious bots. Users may encounter a brief waiting period while the website verifies that they are not bots. The service ensures that the website remains secure and performs efficiently by enabling JavaScript and cookies. Sider.ai utilizes Cloudflare for performance and security measures.

Panto AI

Panto AI is an AI automation testing platform that offers a comprehensive solution for mobile app testing, combining dynamic code reviews, code security checks, and QA automation. It allows users to create, execute, and run mobile test cases in natural language, ensuring reliable and efficient testing processes. With features like self-healing automation, real device testing, and deep failure visibility, Panto AI aims to streamline the QA process and enhance app quality. The platform is designed to be platform-agnostic and supports various integrations, making it suitable for diverse mobile app environments.

AI Copilot for bank ALCOs

AI Copilot for bank ALCOs is an AI application designed to empower Asset-Liability Committees (ALCOs) in banks to test funding and liquidity strategies in a risk-free environment, ensuring optimal balance sheet decisions before real-world implementation. The application provides proactive intelligence for day-to-day decisions, allowing users to test multiple strategies, compare funding options, and make forward-looking decisions. It offers features such as stakeholder feedback, optimal funding mix, forward-looking decisions, comparison of funding strategies, domain-specific models, maximizing returns, staying compliant, and built-in security measures. MaverickFi, the AI Copilot, is deployed on Microsoft Azure and offers deployment options based on user preferences.

AI App Generator

The AI App Generator by Appy Pie is a platform that utilizes generative artificial intelligence to develop Android and iOS mobile applications based on simple text prompts. Users can describe their app ideas in plain language, and the AI automatically generates app structures, screens, navigation flows, and content. The platform offers a visual editor for customization, real-time updates, security compliance, and publishing support for Google Play Store and Apple's App Store. It is designed to cater to startups, small businesses, educators, agencies, and non-technical founders looking to create mobile apps without coding expertise.

Escape

Escape is an AI-powered Dynamic Application Security Testing (DAST) tool designed to work seamlessly with modern technology stacks, focusing on testing business logic and helping developers remediate vulnerabilities efficiently. It provides a comprehensive platform for API security, including API discovery, security testing, and GraphQL support. Escape offers features such as AI-powered DAST, API discovery & security, business logic security testing, CI/CD integration, and tailored remediations. The tool aims to streamline security workflows, improve risk reduction, and simplify compliance management for various industries.

nunu.ai

nunu.ai is an AI-powered platform designed to revolutionize game testing by leveraging AI agents to conduct end-to-end tests at scale. By automating repetitive tasks, the platform significantly reduces manual QA costs for game studios. With features like human-like testing, multi-platform support, and enterprise-grade security, nunu.ai offers a comprehensive solution for game developers seeking efficient and reliable testing processes.

Sedo.com

Sedo.com is an online platform for buying and selling domain names. It provides a marketplace where users can list their domain names for sale or purchase domains that are already registered. The platform offers a secure and efficient way for domain investors, businesses, and individuals to connect and transact. Sedo.com ensures the security of transactions and provides tools to streamline the domain buying and selling process.

CloudExam AI

CloudExam AI is an online testing platform developed by Hanke Numerical Union Technology Co., Ltd. It provides stable and efficient AI online testing services, including intelligent grouping, intelligent monitoring, and intelligent evaluation. The platform ensures test fairness by implementing automatic monitoring level regulations and three random strategies. It prioritizes information security by combining software and hardware to secure data and identity. With global cloud deployment and flexible architecture, it supports hundreds of thousands of concurrent users. CloudExam AI offers features like queue interviews, interactive pen testing, data-driven cockpit, AI grouping, AI monitoring, AI evaluation, random question generation, dual-seat testing, facial recognition, real-time recording, abnormal behavior detection, test pledge book, student information verification, photo uploading for answers, inspection system, device detection, scoring template, ranking of results, SMS/email reminders, screen sharing, student fees, and collaboration with selected schools.

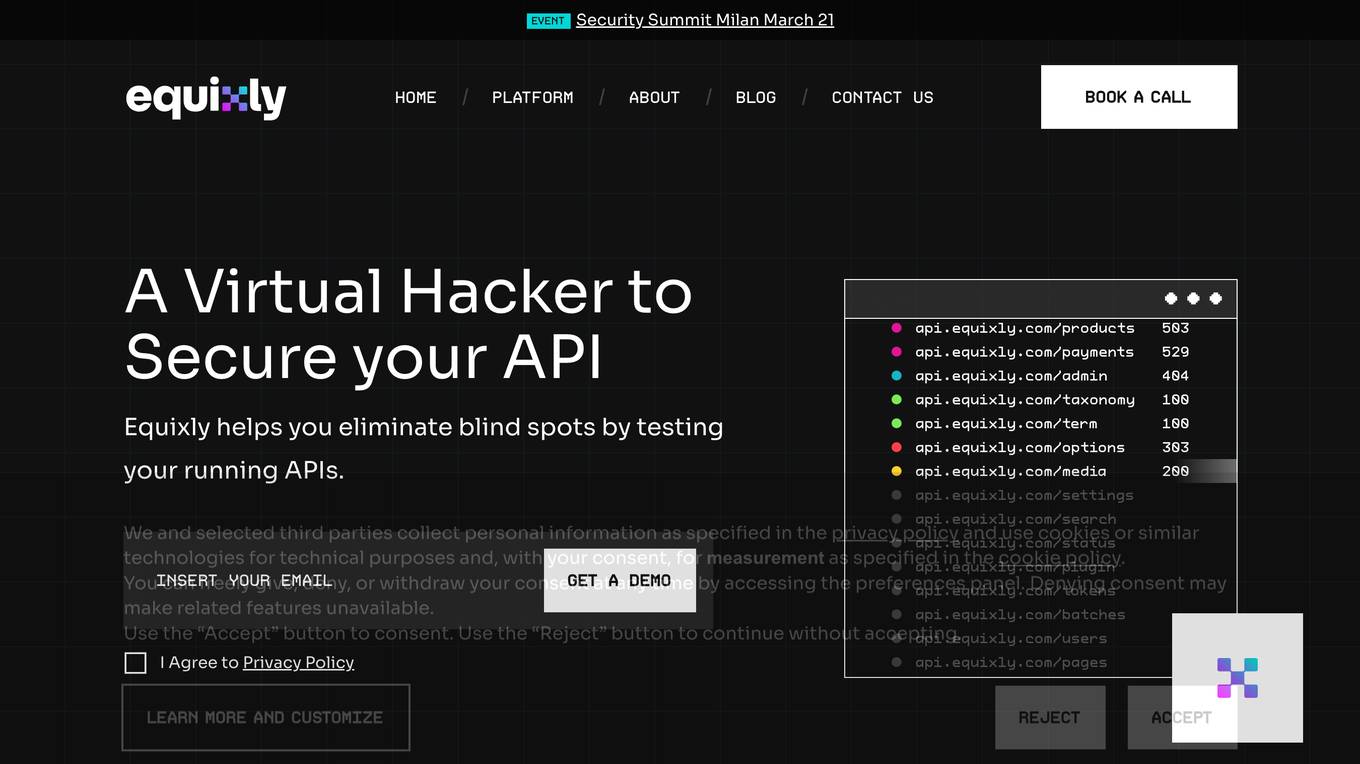

Equixly

Equixly is an AI-powered application designed to help users secure their APIs by identifying vulnerabilities and weaknesses through continuous security testing. The platform offers features such as scalable API PenTesting, attack simulation, mapping of attack surfaces, compliance simplification, and data exposure minimization. Equixly aims to streamline the process of identifying and fixing API security risks, ultimately enabling users to release secure code faster and reduce their attack surface.

金数据AI考试

The website offers an AI testing system that allows users to generate test questions instantly. It features a smart question bank, rapid question generation, and immediate test creation. Users can try out various test questions, such as generating knowledge test questions for car sales, company compliance standards, and real estate tax rate knowledge. The system ensures each test paper has similar content and difficulty levels. It also provides random question selection to reduce cheating possibilities. Employees can access the test link directly, view test scores immediately after submission, and check incorrect answers with explanations. The system supports single sign-on via WeChat for employee verification and record-keeping of employee rankings and test attempts. The platform prioritizes enterprise data security with a three-level network security rating, ISO/IEC 27001 information security management system, and ISO/IEC 27701 privacy information management system.

Clarion Technologies

Clarion Technologies is an AI-assisted development company that offers a wide range of software development services, including custom software development, web app development, mobile app development, cloud solutions, and Power BI solutions. They provide services for various technologies such as React Native, Java, Python, PHP, Laravel, and more. With a focus on AI-driven planning and Agile Project Execution Methodology, Clarion Technologies ensures top-quality results with faster time to market. They have a strong commitment to data security, compliance, and privacy, and offer on-demand access to skilled developers and tech architects.

Bito AI

Bito AI is an AI-powered code review tool that helps developers write better code faster. It provides real-time feedback on code quality, security, and performance, and can also generate test cases and documentation. Bito AI is trusted by developers across the world, and has been shown to reduce review time by 50%.

Ferhat Erata

Ferhat Erata is an AI application developed by a Computer Science PhD graduate from Yale University. The application focuses on training transformers to solve NP-complete problems using reinforcement learning and improving test-time compute strategies for reasoning. It also explores learning randomized reductions and program properties for security, privacy, and side-channel resilience. Ferhat Erata is currently an Applied Scientist at the Automated Reasoning Group at AWS, working on Neuro-Symbolic AI to prevent factual errors caused by LLM hallucinations using mathematically sound Automated Reasoning checks.

AllGalaxy

AllGalaxy is a pioneering platform revolutionizing mental health care with AI-driven assessment tools. It integrates cutting-edge artificial intelligence with compassionate care to enhance well-being globally. The platform offers advanced tools like the Health Nexus for mental health assessments, the Advanced Alzheimer's Detection Tool for early diagnostics, and MediMood for real-time mental health assessments. AllGalaxy also provides resources on healthy habits to prevent Alzheimer's and promote brain health.

1 - Open Source AI Tools

ps-fuzz

The Prompt Fuzzer is an open-source tool that helps you assess the security of your GenAI application's system prompt against various dynamic LLM-based attacks. It provides a security evaluation based on the outcome of these attack simulations, enabling you to strengthen your system prompt as needed. The Prompt Fuzzer dynamically tailors its tests to your application's unique configuration and domain. The Fuzzer also includes a Playground chat interface, giving you the chance to iteratively improve your system prompt, hardening it against a wide spectrum of generative AI attacks.

20 - OpenAI Gpts

AdversarialGPT

Adversarial AI expert aiding in AI red teaming, informed by cutting-edge industry research (early dev)

Jailbreak Me: Code Crack-Up

This game combines humor and challenge, offering players a laugh-filled journey through the world of cybersecurity and AI.

ethicallyHackingspace (eHs)® (Full Spectrum)™

Full Spectrum Space Cybersecurity Professional ™ AI-copilot (BETA)

GetPaths

This GPT takes in content related to an application, such as HTTP traffic, JavaScript files, source code, etc., and outputs lists of URLs that can be used for further testing.

AI Quiz Master

AI trivia expert, engaging and concise, focusing on AI history since the 1950s.

Generative AI Examiner

For "Generative AI Test". Examiner in Generative AI, posing questions and providing feedback.

Study Buddy

AI-powered test prep platform offering adaptive, interactive learning and progress tracking.

IQ Test Assistant

An AI conducting 30-question IQ tests, assessing and providing detailed feedback.

Test Case GPT

I will provide guidance on testing, verification, and validation for QA roles.

🎨🧠 ToonTrivia Mastermind 🤔🎬

Your go-to AI for a fun-filled trivia challenge on all things animated! From classic cartoons to modern animations, test your knowledge and learn fascinating facts! 🤓🎥✨

Inspection AI

Expert in testing, inspection, certification, compliant with OpenAI policies, developed on OpenAI.

Moot Master

A moot competition companion. & Trial Prep companion . Test and improve arguments- predict your opponent's reaction.

IELTS AI Checker (Speaking and Writing)

Provides IELTS speaking and writing feedback and scores.