Best AI tools for Statistical Analysis

20 - AI Tool Sites

Hepta AI

Hepta AI is an AI-powered statistics tool designed for scientific research. Users input their data and receive results as tables, graphs, and statistical summaries. The tool pairs a user-friendly interface with AI algorithms, aiming to deliver accurate, reliable results and to streamline the analysis process for scientists and researchers.

Powerdrill

Powerdrill is a platform that provides swift insights from knowledge and data. It offers a range of features such as discovering datasets, creating BI dashboards, accessing various apps, resources, blogs, documentation, and changelogs. The platform is available in English and fosters a community through its affiliate program. Users can sign up for a basic plan to start utilizing the tools and services offered by Powerdrill.

Lotto Chart

Lotto Chart is a highly accurate AI-powered chart for predicting lottery numbers. It harnesses the power of artificial intelligence, statistical analysis, and probability to generate winning combinations for various lotteries. The application processes billions of data points, utilizes 7 powerful prediction models, and provides advanced data-driven predictions to help users increase their chances of winning. Lotto Chart also offers support for seeded predictions, daily updated insights and reports, and tools to easily identify patterns and trends in lottery numbers.

SheetBot AI

SheetBot AI is an AI data analyst tool that enables users to analyze data quickly without the need for coding. It automates repetitive and time-consuming data tasks, making data visualization and analysis more efficient. With SheetBot AI, users can generate accurate and visually appealing graphs in seconds, streamlining the data analysis process.

ChartFast

ChartFast is an AI data analyzer tool, powered by GPT-4, that automates data visualization and analysis tasks. It allows users to generate precise graphs in seconds, process large amounts of data, run interactive data queries, and export results quickly. With features like specialized internal libraries for complex graph generation, customizable visualization code, and instant data export, ChartFast aims to streamline data work and make data analysis more efficient.

Julius AI

Julius AI is an AI data analyst tool. Users can chat with their files to get expert-level insights, create data visualizations, perform modeling and predictive forecasting, solve math, physics, and chemistry problems, and generate polished analyses and summaries. Features include asking data questions, data cleaning, instant data export, and creating animations. Julius AI is used by over 1,200,000 users worldwide and is designed to help knowledge workers automate data work and apply statistical modeling without added complexity.

Datumbox

Datumbox is a machine learning platform that offers a powerful open-source Machine Learning Framework written in Java. It provides a large collection of algorithms, models, statistical tests, and tools to power up intelligent applications. The platform enables developers to build smart software and services quickly using its REST Machine Learning API. Datumbox API offers off-the-shelf Classifiers and Natural Language Processing services for applications like Sentiment Analysis, Topic Classification, Language Detection, and more. It simplifies the process of designing and training Machine Learning models, making it easy for developers to create innovative applications.

Posit

Posit is an open-source data science company that provides a suite of tools and services for data scientists. Its products include the RStudio IDE, Shiny, and Posit Connect. Posit also offers cloud-based solutions and enterprise support. The company's mission is to make data science accessible to everyone, regardless of their economic means or technical expertise.

4Quant

4Quant is an AI-powered medical imaging platform that utilizes Big Data and Deep Learning technology to accelerate the extraction of high-quality medical labels. The platform offers a range of tools for image analysis, annotation, and data analytics in the medical field. 4Quant aims to provide scalable solutions for medical imaging analysis, statistical reporting, and personalized training in image analysis. The platform is built on the latest Big Data framework, Apache Spark, and integrates with cloud computing for efficient processing of large datasets.

Julius

Julius is an AI-powered tool that helps users analyze data and files. It can perform various tasks such as generating visualizations, answering data questions, and performing statistical modeling. Julius is designed to save users time and effort by automating complex data analysis tasks.

Gestualy

Gestualy is an AI tool designed to measure and improve customer satisfaction and mood quickly and easily through gestures. It eliminates the need for cumbersome satisfaction surveys by allowing interactions with customers or guests through gestures. The tool uses artificial intelligence to make intelligent decisions in businesses. Gestualy generates valuable statistical reports for businesses, including satisfaction levels, gender, mood, and age, all while ensuring data protection and privacy compliance. It offers touchless interaction, immediate feedback, anonymized reports, and various services such as gesture recognition, facial analysis, gamification, and alert systems.

FaceSymAI

FaceSymAI is an online tool that utilizes advanced AI algorithms to analyze and determine the symmetry of your face. By uploading a photo, the AI examines your facial features, including the eyes, nose, mouth, and overall structure, to provide an accurate assessment of your facial symmetry. The analysis is based on mathematical and statistical methods, ensuring reliable and precise results. FaceSymAI is designed to be user-friendly and accessible, offering a free service to everyone. The uploaded photos are treated with utmost confidentiality and are not stored or used for any other purpose, ensuring your privacy is respected.

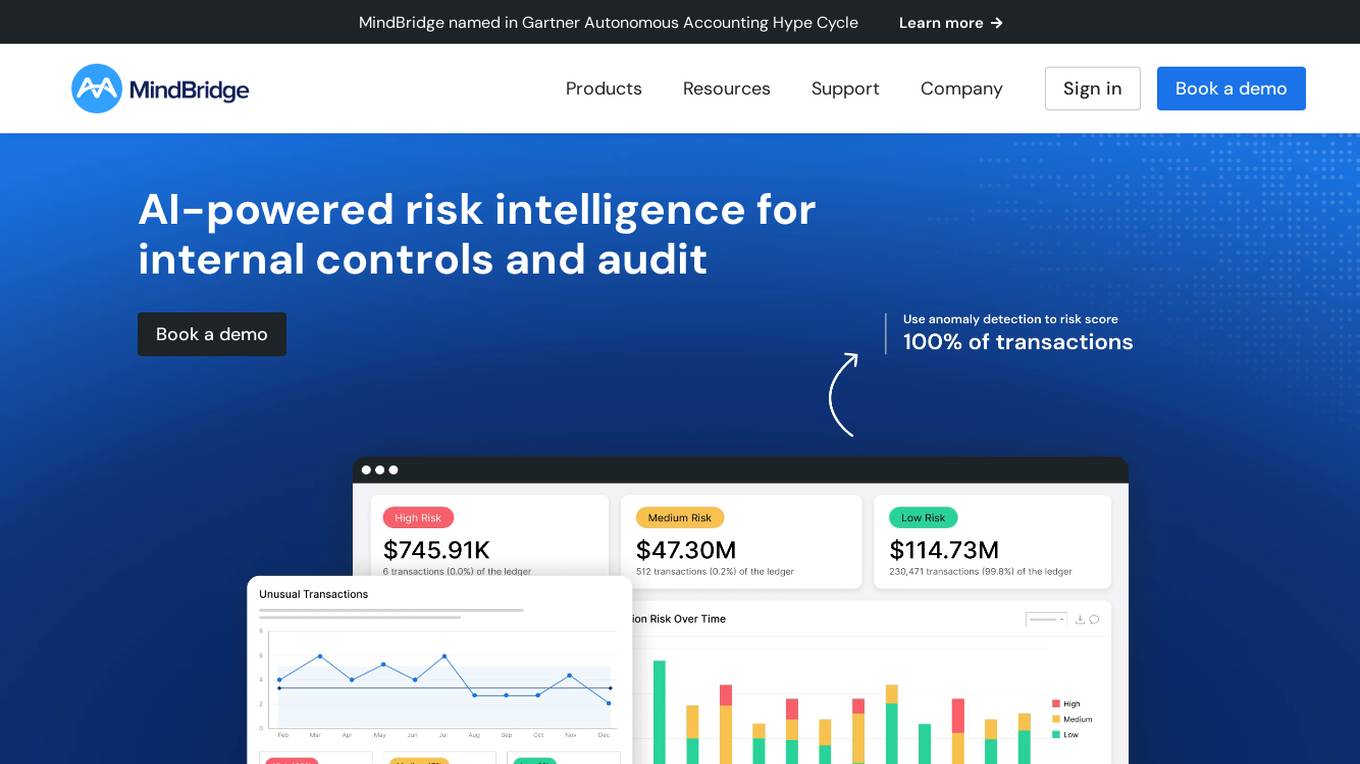

MindBridge

MindBridge is a global leader in financial risk discovery and anomaly detection. The MindBridge AI Platform drives insights and assesses risks across critical business operations. It offers various products like General Ledger Analysis, Company Card Risk Analytics, Payroll Risk Analytics, Revenue Risk Analytics, and Vendor Invoice Risk Analytics. With over 250 unique machine learning control points, statistical methods, and traditional rules, MindBridge is deployed to over 27,000 accounting, finance, and audit professionals globally.

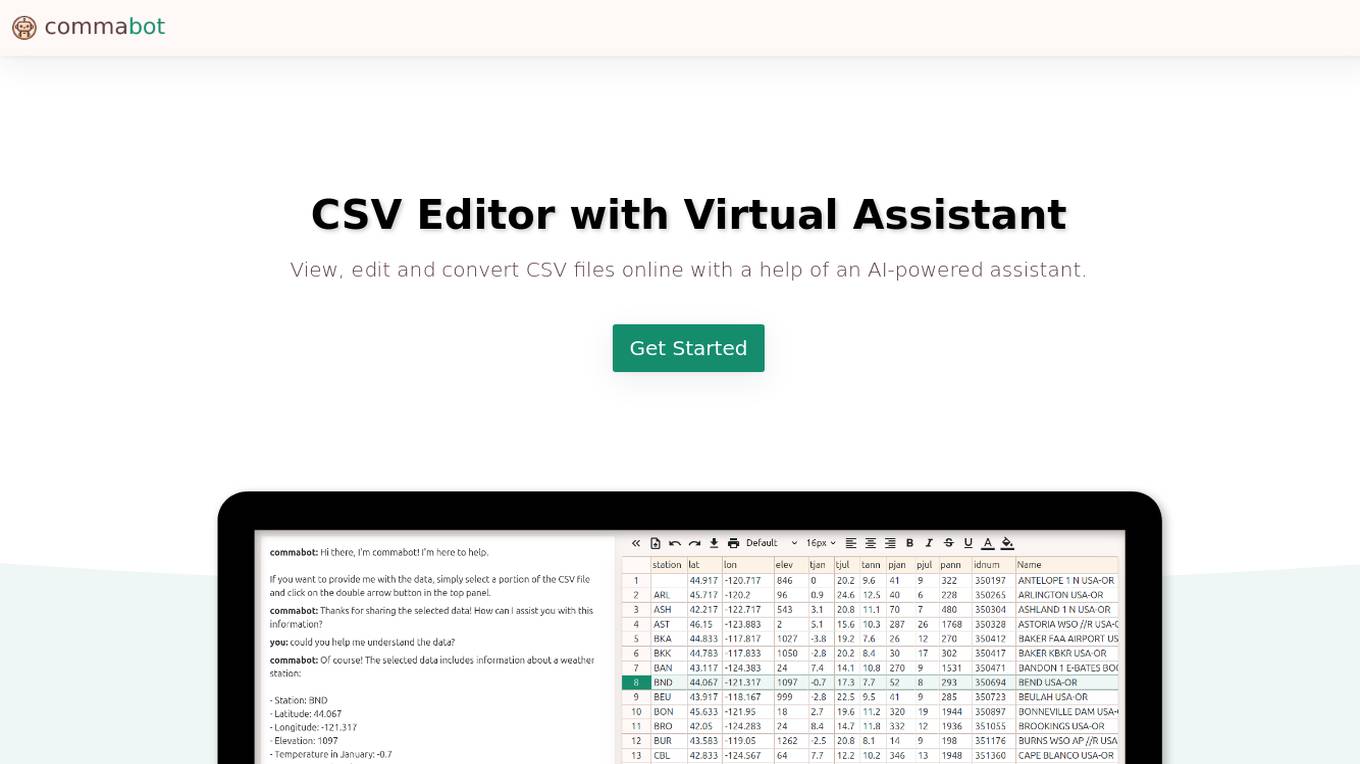

Commabot

Commabot is an online CSV editor that allows users to view, edit, and convert CSV files with the help of an AI-powered assistant. It features an intuitive spreadsheet interface, data operations capabilities, an AI virtual assistant, and transformation and conversion functionalities.

BooSum

BooSum is an AI-powered book summary application that helps users read smarter by eliminating unnecessary parts of books and focusing on the key content. Users can upload books and let the AI do the work of summarizing them, making reading faster, deeper, and easier. The platform offers a curated selection of top-rated books across various genres, allowing users to expand their knowledge and imagination. With features like reducing redundant information, improving information understanding through AI, and supporting multiple languages, BooSum aims to make reading accessible and useful for everyone.

RTutor

RTutor is an AI tool that uses OpenAI's large language models to translate natural language into R or Python code for data analysis. Users can upload data in various formats, ask questions, and receive results in plain English. The tool supports exploring data, generating basic plots, and gradually adding complexity to the analysis. RTutor can only analyze conventionally structured statistical data, where rows are observations and columns are variables. It offers a comprehensive EDA (Exploratory Data Analysis) report and provides code chunks for analysis.
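
The row-per-observation layout mentioned above can be illustrated with a minimal Python sketch. The column names (`subject`, `group`, `score`) and the values are hypothetical sample data, not anything taken from RTutor, and the code uses only the standard library rather than code RTutor itself would generate.

```python
import csv
import io
import statistics

# Hypothetical sample data: each row is one observation,
# each column is one variable.
raw = """subject,group,score
s1,control,71
s2,control,68
s3,treatment,80
s4,treatment,77
"""

# Parse the CSV text into a list of dicts, one dict per observation.
rows = list(csv.DictReader(io.StringIO(raw)))

# Collect the "score" variable by the "group" variable.
by_group = {}
for row in rows:
    by_group.setdefault(row["group"], []).append(float(row["score"]))

# Summarize each group with its mean score.
group_means = {g: statistics.mean(v) for g, v in by_group.items()}
print(group_means)
```

Because every variable lives in its own column, grouping and summarizing reduce to simple column lookups.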

Displayr

Displayr is a comprehensive data workspace designed for teams, offering a range of capabilities including survey analysis, data visualization, dashboarding, automatic updating, PowerPoint reporting, finding data stories, and data cleaning. The platform aims to streamline workflow efficiency, promote self-sufficiency through DIY analytics, enable data storytelling with compelling narratives, and ensure quality control to minimize errors. Displayr caters to statisticians, market researchers, report creators, and professionals working with data, providing a user-friendly interface for creating interactive and insightful data stories.

DINGR

DINGR is an AI-powered solution designed to help gamers analyze their performance in League of Legends. The tool uses AI algorithms to provide accurate insights into gameplay, comparing performance metrics with friends and offering suggestions for improvement. DINGR is currently in development, with a focus on enhancing the gaming experience through data-driven analysis and personalized feedback.

Comment Explorer

Comment Explorer is a free tool that allows users to analyze comments on YouTube videos. Users can gain insights into audience engagement, sentiment, and top subjects of discussion. The tool helps content creators understand the impact of their videos and improve interaction with viewers.

ChartPixel

ChartPixel is an AI-assisted data analysis platform that empowers users to effortlessly generate charts, insights, and actionable statistics in just 30 seconds. The platform is designed to demystify data and analysis, making it accessible to users of all skill levels. ChartPixel combines the power of AI with domain expertise to provide secure and reliable output, ensuring trustworthy results without compromising data privacy. With user-friendly features and educational tools, ChartPixel helps users clean, wrangle, visualize, and present data with ease, catering to both beginners and professionals.

1 - Open Source AI Tools

DataFrame

DataFrame is a C++ analytical library designed for data analysis, similar to libraries in Python and R. It allows you to slice, join, merge, group-by, and perform various statistical, summarization, financial, and ML algorithms on your data. DataFrame also includes a large collection of analytical algorithms in the form of visitors, ranging from basic stats to more involved analysis. You can easily add your own algorithms as well. DataFrame employs extensive multithreading in almost all its APIs, making it suitable for analyzing large datasets. Key principles followed in the library include supporting any type without needing new code, avoiding pointer chasing, having all column data in contiguous memory space, minimizing space usage, avoiding data copying, using multithreading judiciously, and not protecting the user against garbage in, garbage out.

20 - OpenAI GPTs

Statistics from ANY documents

Statistical analysis of text and image documents, providing detailed reports.

Data Interpretation

Upload an image of a statistical analysis and we'll interpret the results: linear regression, logistic regression, ANOVA, cluster analysis, MDS, factor analysis, and many more.

Asesor Estadístico

Statistics expert ready to help with data analysis and interpretation.

AI-Powered SPSS Aid: Manuscript Interpretation

I assist with SPSS data interpretation for academic manuscripts.

HorseGPT

An expert in horse racing statistics and data analysis with a serious, explanatory and technical tone.

Stats Buddy

Assists with statistical information and learning, focusing on proven concepts.

ThorGPT

Expert in Thorchain and blockchain technologies. Can show current mainnet data, as well as some historical & statistical data.

A/B Test GPT

Calculate the results of your A/B test and check whether the result is statistically significant or due to chance.
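
A significance check of this kind is commonly done with a two-proportion z-test. The sketch below is a minimal illustration under that assumption. It is not the method this GPT is known to use, and the function name `two_proportion_z_test` and the visitor and conversion counts are hypothetical.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for the difference between two conversion rates.

    conv_a / conv_b: number of conversions in variants A and B.
    n_a / n_b: number of visitors in variants A and B.
    Returns the z statistic and the two-sided p-value.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis of no difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical example: 200/5000 conversions for A, 250/5000 for B.
z, p = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.3f}, p = {p:.4f}, significant at 0.05: {p < 0.05}")
```

The normal approximation behind this test is reasonable when each variant has a fairly large number of conversions and non-conversions; for small samples an exact test is usually the safer choice.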