Best AI tools for "Start Inference Server"

20 - AI Tool Sites

FluidStack

FluidStack is a leading GPU cloud platform designed for AI and LLM (Large Language Model) training. It offers unlimited scale for AI training and inference, allowing users to access thousands of fully-interconnected GPUs on demand. Trusted by top AI startups, FluidStack aggregates GPU capacity from data centers worldwide, providing access to over 50,000 GPUs for accelerating training and inference. With 1000+ data centers across 50+ countries, FluidStack ensures reliable and efficient GPU cloud services at competitive prices.

Cortex Labs

Cortex Labs is a decentralized world computer that enables AI and AI-powered decentralized applications (dApps) to run on the blockchain. It offers a Layer 2 solution called ZkMatrix, which uses zkRollup technology to increase transaction speed and reduce fees. The Cortex Virtual Machine (CVM) supports on-chain AI inference on GPUs, ensuring deterministic results across computing environments. Cortex also enables machine learning in smart contracts and dApps, fostering an open-source ecosystem in which AI researchers and developers can share models. The platform aims to make on-chain machine learning execution efficient and deterministic, and provides tools and resources for developers to integrate AI into blockchain applications.

Axelera AI

Axelera AI offers datacenter performance and edge efficiency through AI accelerators and AI processing units. The platform provides hardware and software solutions for accelerating inference at the edge, bringing data insights and analytics to industries and application areas such as industrial manufacturing, retail, security, healthcare, smart cities, robotics, agriculture, and computer vision. Axelera AI aims to boost the performance of AI applications with cost-effective, efficient inference chips that integrate into customers' products.

Favikon

Favikon is an AI-powered influencer marketing platform that helps businesses find and manage influencers for their marketing campaigns. It offers a range of features to help businesses with influencer discovery, tracking, and reporting. Favikon's AI-powered discovery feature enables businesses to find influencers across more than 600 specialized niches effortlessly. The platform also provides in-depth profiles of creators to understand their social media influence and popularity. Favikon's AI-powered rankings help businesses identify trending topics, popular formats, and emerging creators within their industry. The platform also offers a tracking feature to stay updated on the latest trends and posts across social media platforms.

Cision

Cision is an end-to-end communications and media intelligence platform that provides a suite of tools and services to help public relations and communications professionals understand, influence, and amplify their stories. Cision's platform includes PR Newswire, CisionOne, and Cision Insights, which offer a range of capabilities such as PR distribution, media monitoring, media analytics, and influencer outreach. Cision's solutions are used by a wide range of organizations, including Fortune 500 companies, government agencies, and non-profit organizations.

Start Left® Security

Start Left® Security is an AI-driven application security posture management platform that empowers product teams to automate secure-by-design software from people to cloud. The platform integrates security into every facet of the organization, offering a unified solution that aligns with business goals, fosters continuous improvement, and drives innovation. Start Left® Security provides a gamified DevSecOps experience with comprehensive security capabilities like SCA, SBOM, SAST, DAST, Container Security, IaC security, ASPM, and more.

GPT Jump Start

GPT Jump Start is a versatile solution designed to incorporate AI features into your projects. It functions in two ways: As an API, it can be integrated with custom projects or existing solutions like Zapier and Pabbly. As a WordPress plugin, it assists in generating high-quality, engaging, and relevant content based on provided prompts.

Dora

Dora is a no-code 3D animated website design platform that allows users to create stunning 3D and animated visuals without writing a single line of code. With Dora, designers, freelancers, and creative professionals can focus on what they do best: designing. The platform is tailored for professionals who prioritize design aesthetics without wanting to dive deep into the backend. Dora offers a variety of features, including a drag-and-connect constraint layout system, advanced animation capabilities, and pixel-perfect usability. With Dora, users can create responsive 3D and animated websites that translate seamlessly across devices.

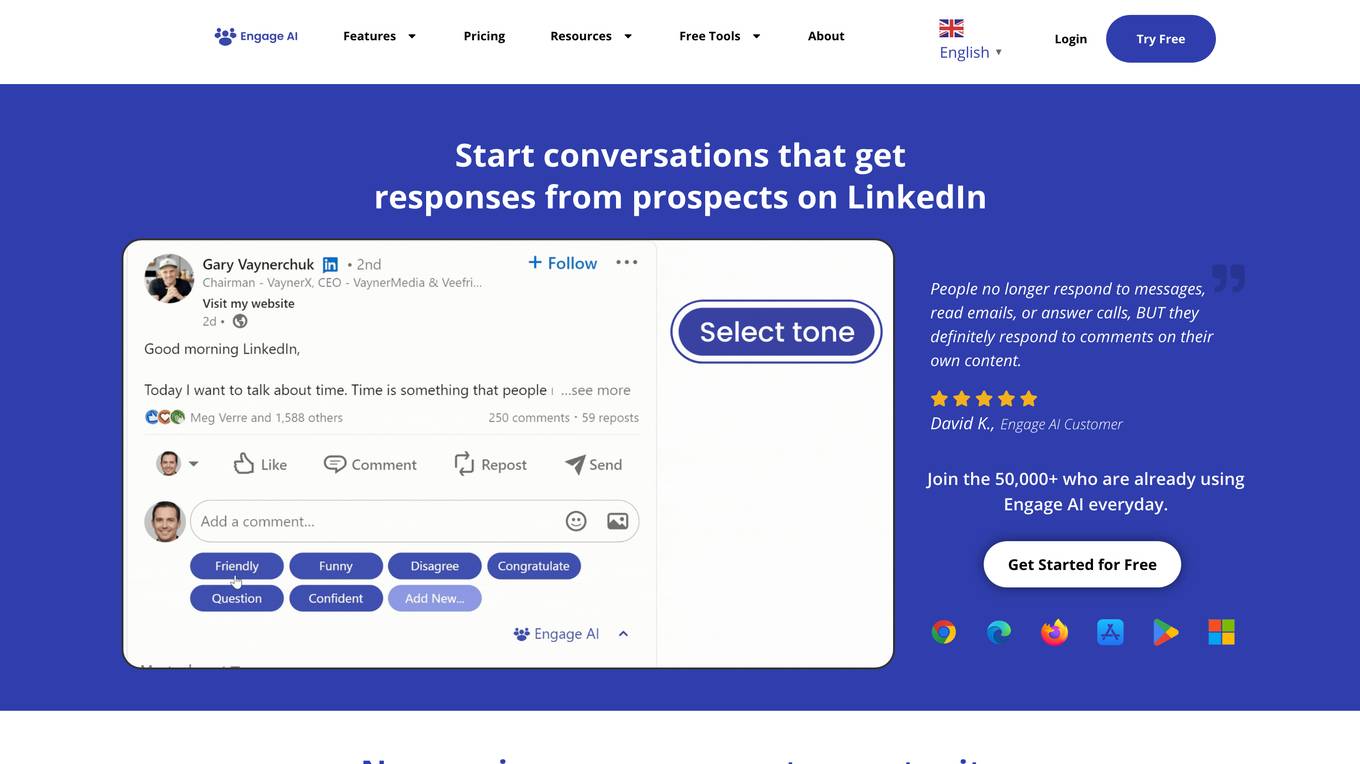

Engage AI

Engage AI is a generative AI tool that helps businesses increase their LinkedIn engagement and lead generation. It offers a range of features to help users create personalized and engaging content, including the ability to generate comments, connection requests, and profile content. Engage AI also provides insights into LinkedIn trends and best practices, and offers a variety of resources to help users get the most out of the platform.

Optimove

Optimove is a Customer-Led Marketing Platform that leverages a real-time Customer Data Platform (CDP) to orchestrate personalized multichannel campaigns optimized by AI. It enables businesses to deliver personalized experiences in real-time across various channels such as web, app, and marketing channels. With a focus on customer-led marketing, Optimove helps brands improve customer KPIs through data-driven campaigns and top-tier personalization. The platform offers a range of resources, including industry benchmarks, marketing guides, success stories, and best practices, to help users achieve marketing mastery.

MyTales

MyTales is an AI-powered story generator that helps you create unique and engaging stories. With MyTales, you can start your adventure by submitting a prompt, and the AI will generate a story based on your input. You can then share your story with others or continue to develop it yourself.

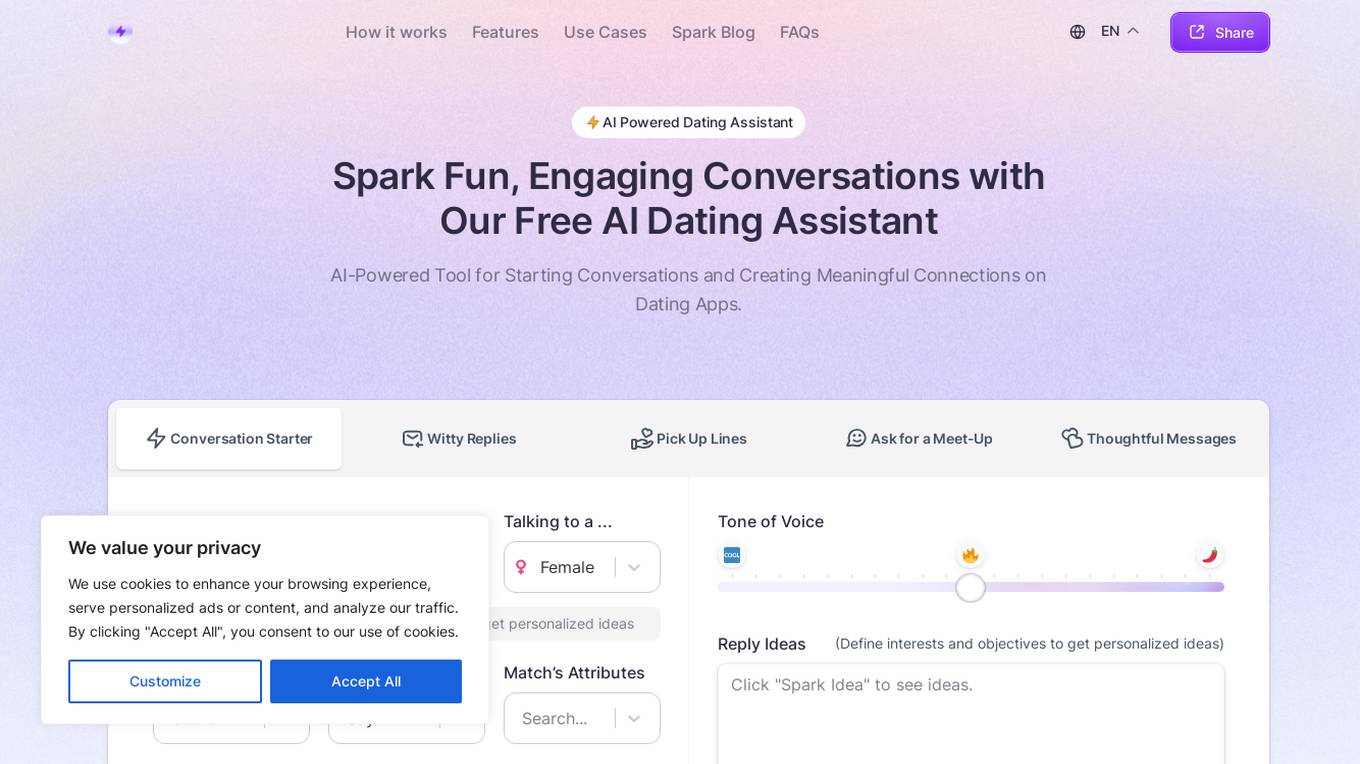

WithSpark.ai

WithSpark.ai is a free AI-powered dating assistant that helps users start engaging conversations and create meaningful connections on dating apps. The tool uses advanced artificial intelligence to analyze user inputs and generate personalized conversation starters tailored to each user's interests and preferences. With Spark, users can easily spark genuine connections, boost their conversations, and engage effortlessly with personalized responses. The application empowers users to unleash their dating potential by providing witty replies, clever pick-up lines, captivating conversation starters, thoughtful messages, and more.

Infinilearn

Infinilearn is a personalized learning platform that offers gamified, interactive learning experiences. It features a customized AI Guide that grows with the user, along with personalized learning paths, a gamified level system, grants that can be earned directly through the app, and human-AI symbiosis. Infinilearn aims to make learning engaging, rewarding, and tailored to individual needs.

Basel

Basel is an AI career companion that revolutionizes the job application process by enabling users to start conversations instead of traditional applications. It facilitates meaningful career opportunities and smarter recruiting by generating interviews on demand. Basel is packed with features to assist in connecting recruiters to candidates, offering adaptive and personalized interactions. Users have full control over the information Basel learns and recollects about their career profile. The application allows users to create shareable links for instant access by recruiters and employers, enabling Basel to represent them effectively. Basel also provides features for logging daily progress, tracking wins, and offering actionable suggestions to stay organized and productive.

TopWorksheets

TopWorksheets is an online platform that allows teachers to create and share interactive worksheets and exercises. It offers a variety of features to help teachers save time and track student progress. Teachers can use TopWorksheets to convert existing worksheets into interactive ones, browse through thousands of worksheets created by other teachers, and even have AI assist them in creating new worksheets. Students can use TopWorksheets to complete assignments, receive auto-graded feedback, and track their own progress.

Getin.AI

Getin.AI is a platform that focuses on AI jobs, career paths, and company profiles in the fields of artificial intelligence, machine learning, and data science. Users can explore various job categories, such as Analyst, Consulting, Customer Service & Support, Data Science & Analytics, Engineering, Finance & Accounting, HR & Recruiting, Legal, Compliance and Ethics, Marketing & PR, Product, Sales And Business Development, Senior Management / C-level, Strategy & M&A, and UX, UI & Design. The platform provides a comprehensive list of remote job opportunities and features detailed job listings with information on job titles, companies, locations, job descriptions, and required skills.

AIBooster

AIBooster is a platform that helps AI businesses to market their products. It offers a variety of services, including directory submission, content marketing, and social media marketing. AIBooster's goal is to help AI start-ups reach their target audience and grow their business.

Mozilla.ai Lumigator Blueprints

Mozilla.ai Lumigator Blueprints is a developer-first hub for open-source AI workflows. It provides a platform for exploring and customizing AI blueprints, such as building timeline algorithms, fine-tuning models, and mapping features using computer vision. The site aims to empower users to create personalized AI solutions tailored to their specific needs and preferences.

xZactly.ai

xZactly.ai works with artificial intelligence start-ups and high-growth companies to accelerate revenue growth. It offers AI-specific sales, business development, and marketing expertise, along with seed and venture capital financing, business scaling, and a global expansion fund. The service connects businesses with investors, provides go-to-market strategies, and offers triage, transformation, and turnaround solutions for AI and ML companies. Drawing on over 25 years of experience in sales, marketing, and business development, xZactly.ai aims to accelerate sales and boost revenue for AI-driven businesses through expertise, strategy, and execution.

Beducated

Beducated is an online platform offering 150+ courses on sex and relationships, led by top experts in the field. It provides a safe space for adults of all backgrounds to learn and explore topics related to sexual health and pleasure. Users can access a variety of courses ranging from couple practices to solo practices, sensual massage, kink & BDSM, communication, and more. The platform aims to empower individuals with pleasure-based sex education and break taboos surrounding sexuality.

1 - Open Source AI Tools

podman-desktop-extension-ai-lab

Podman AI Lab is an open source extension for Podman Desktop designed for working with Large Language Models (LLMs) in a local environment. It features a recipe catalog with common AI use cases, a curated set of open source models, and a playground for learning, prototyping, and experimentation. Users can quickly get started bringing AI into their applications without depending on external infrastructure, which helps keep data private and secure.

20 - OpenAI GPTs

Business Reporter for the Start-Up Ecosystem

I can research and review news that will interest the key players in the Start-Up ecosystem and provide them with a briefing.

PSYCH: Your Compass to Inner Clarity (TPW.AI)

Start by sharing what’s on your mind or any emotional challenges you're facing. PSYCH will guide you through reflective dialogue, providing insights and coping mechanisms tailored to your needs.

Quotes Wallpaper Creator

I can provide you with a quote wallpaper every day to start your day right.

Neighbot

Start by giving Neighbot the name of a neighborhood and state (e.g., Orchard Hills, CA). It will provide descriptions for your market collateral. You can follow up and ask about local restaurants and builder communities.

Plot Breaker

Start with a genre and I'll help you develop a rough story outline. You can handle the rest.

Supervisors of Gambling Workers Ready

It’s your first day! Excited? Nervous? Let me help you start off strong in your career. Type "help" for more information.

Tax Preparers Ready

It’s your first day! Excited? Nervous? Let me help you start off strong in your career. Type "help" for more information.

Medical Secretaries and Assistants Ready

It’s your first day! Excited? Nervous? Let me help you start off strong in your career. Type "help" for more information.

Couriers and Messengers Ready

It’s your first day! Excited? Nervous? Let me help you start off strong in your career. Type "help" for more information.

Image Theme Clone

Type “Start” to get exact details on image generation and/or duplication.