Best AI tools for Separate Stems

20 - AI tool Sites

Music Demixer

Music Demixer is an AI-powered music transcription tool that converts audio recordings into sheet music, MIDI files, and isolated instrument stems using AI stem separation. The platform is designed for musicians, composers, educators, and music enthusiasts who want to transcribe, analyze, and create music.

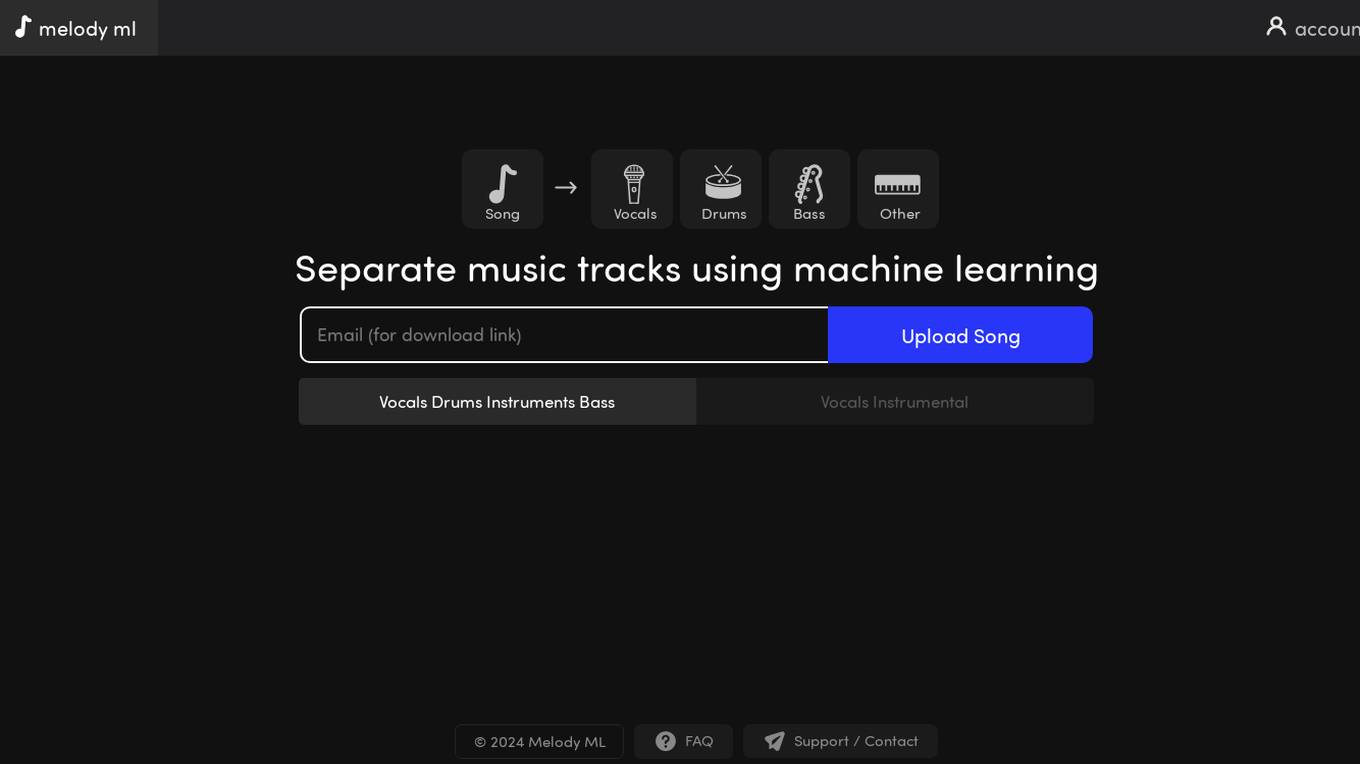

Melody ML

Melody ML is an AI-powered music processing tool that allows users to separate music tracks using machine learning technology. Users can upload songs, and the tool uses AI algorithms to extract vocals, drums, bass, and other instruments into separate stems. Melody ML offers a user-friendly platform for music enthusiasts, producers, and artists to enhance their music production process.

RipX DAW

RipX DAW is an AI-powered digital audio workstation (DAW) that lets users edit individual notes within a mix, replace sounds, and separate stems. It is designed to assist musicians and producers in creating and editing music using AI-generated samples and loops. Its features include separation into six or more stems and a sound replacement menu.

Samplab

Samplab is an AI-powered audio editing tool that allows users to manipulate audio samples with features such as note editing, chord detection, stem separation, audio-to-MIDI conversion, and audio warping. It is available as a plugin for digital audio workstations (DAWs) or as a desktop app, so producers can use it within their existing music production workflow. Samplab's AI technology gives users control over the individual notes, chords, and melodies within a sample.

AudioShake

AudioShake is a cloud-based audio processing platform that uses artificial intelligence (AI) to separate audio into its component parts, such as vocals, music, and effects. This technology can be used for a variety of applications, including mixing and mastering, localization and captioning, interactive audio, and sync licensing.

LALAL.AI

LALAL.AI is a vocal remover and music source separation service that offers fast, easy, and precise stem extraction. It allows users to extract vocals, instrumental tracks, drums, bass, guitar, and more without quality loss. Its stem splitting is based on a transformer-based audio separation approach. Users can also create custom voices, remove background noise, change voices, and separate lead and backing vocals. LALAL.AI offers various packages for individuals and businesses, with features such as a fast processing queue, batch upload, and stem download. The service supports a wide range of input and output formats for audio and video files.

Gaudio Studio

Gaudio Studio is an AI music separation tool for creators. It allows users to extract background music, separate instruments, and remove vocals from any music content. It is powered by GSEP (Gaudio source SEParation), an AI stem separation model. Users can upload their songs in various formats, access the tool from desktop or mobile devices, and use Studio Plans for advanced processing. Gaudio Studio can also be integrated into business applications through cloud APIs and on-device SDKs.

Music AI

Music AI is an AI audio platform that offers state-of-the-art ethical AI solutions for audio and music applications. It provides a wide range of tools and modules for tasks such as stem separation, transcription, mixing, mastering, content generation, effects, utilities, classification, enhancement, style transfer, and more. The platform aims to streamline audio processing workflows, enhance creativity, improve accuracy, increase engagement, and save time for music professionals and businesses. Music AI prioritizes data security, privacy, and customization, allowing users to build custom workflows with over 50 AI modules.

Vocal Remover Oak

Vocal Remover Oak is an advanced AI tool designed for music producers, video makers, and karaoke enthusiasts to easily separate vocals and accompaniment in audio files. The website offers a free online vocal remover service that utilizes deep learning technology to provide fast processing, high-quality output, and support for various audio and video formats. Users can upload local files or provide YouTube links to extract vocals, accompaniment, and original music. The tool ensures lossless audio output quality and compatibility with multiple formats, making it suitable for professional music production and personal entertainment projects.

Galaxy.ai

Galaxy.ai is an all-in-one AI platform that offers a wide range of AI tools and applications to streamline and enhance various business processes. From data analysis to predictive modeling, Galaxy.ai provides advanced AI solutions to help businesses make data-driven decisions and improve efficiency. With its user-friendly interface and powerful algorithms, Galaxy.ai is designed to cater to the needs of both small businesses and large enterprises, making AI technology accessible and easy to implement.

Vocalx

Vocalx is an AI-powered online tool that converts text into natural-sounding speech. It utilizes advanced speech synthesis technology to generate lifelike voices for various applications. Users can easily create audio content from written text, making it ideal for content creators, educators, and businesses looking to enhance their multimedia offerings. With Vocalx, you can customize the voice, tone, and speed of the generated speech to suit your needs. The tool supports multiple languages and accents, providing a versatile solution for voiceover projects, audiobooks, podcasts, and more.

Package

Package is a generative AI rendering tool that helps homeowners envision different renovation styles, receive recommended material packages, and streamline procurement with just one click. It offers a wide range of design packages curated by experts, allowing users to customize items to fit their specific style. Package also provides 3D renderings, material management, and personalized choices, making it easy for homeowners to bring their design ideas to life.

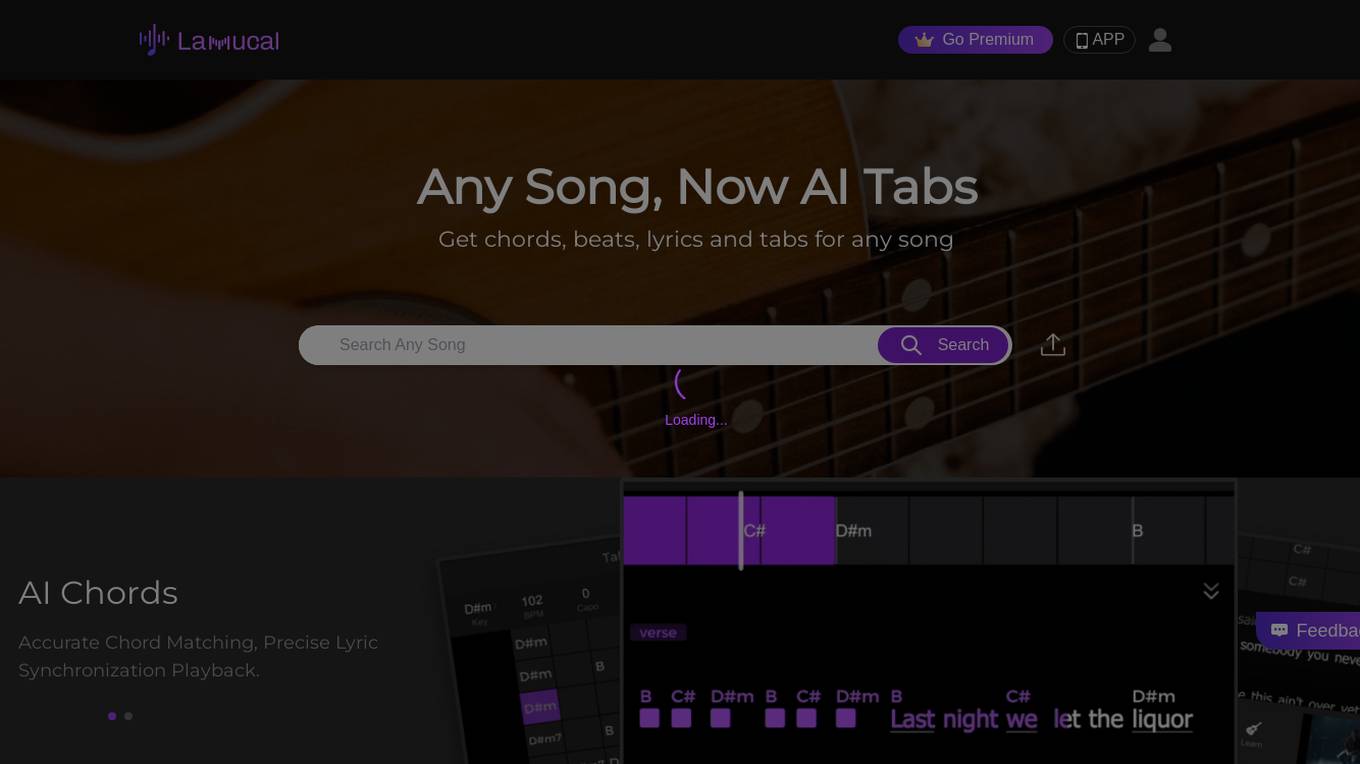

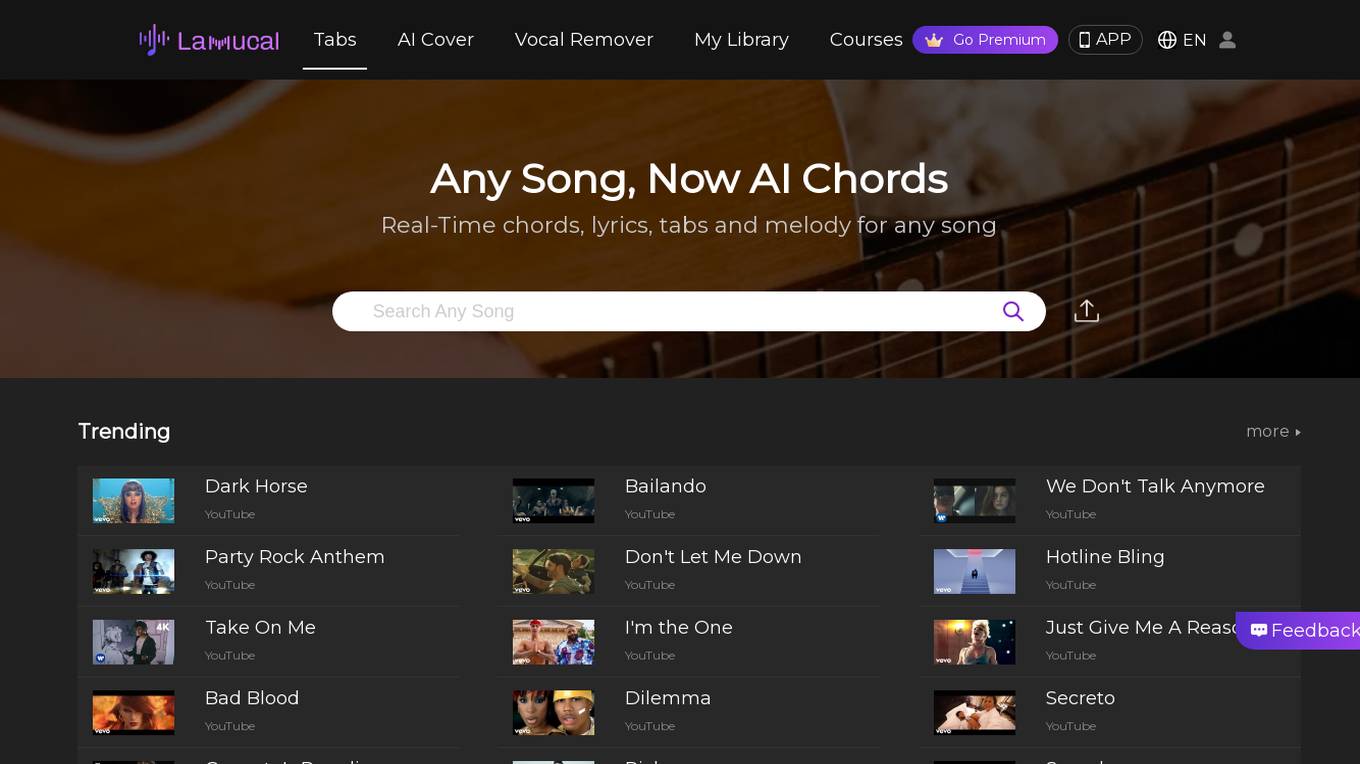

Lamucal

Lamucal is an AI-powered music application that provides users with accurate chords, beats, lyrics, and tabs for any song. It features AI-generated rhythm patterns and precise lyric synchronization, making it an invaluable tool for musicians and music enthusiasts alike. With Lamucal, users can easily find and play their favorite songs, explore new music, and improve their musical skills.

Moises App

Moises App is a music application powered by AI that provides musicians with a range of tools to enhance their practice and performance. With Moises App, users can separate vocals and instruments in any song, adjust the speed and pitch, and detect chords in real time. The app also includes a smart metronome and audio speed changer, making it an ideal tool for musicians of all levels. Moises App is available as a desktop application, iOS app, and web app, making it accessible to musicians on any device.

Moises

Moises is an AI-powered musician's app that allows users to remove vocals and instruments from any song. With Moises, musicians and music enthusiasts can isolate specific elements of a track for learning, remixing, or practicing purposes. The app utilizes advanced AI algorithms to provide high-quality audio separation, making it a valuable tool for music production and analysis. Moises offers a user-friendly interface and intuitive controls, making it accessible to both beginners and professionals in the music industry.

Lamucal

Lamucal is an AI-powered platform that provides real-time chords, lyrics, tabs, and melody for any song, making it a valuable tool for musicians and music enthusiasts. Users can upload songs or search for any song to access chords and other musical elements. With a user-friendly interface and a wide range of features, Lamucal aims to enhance the music learning and playing experience for its users.

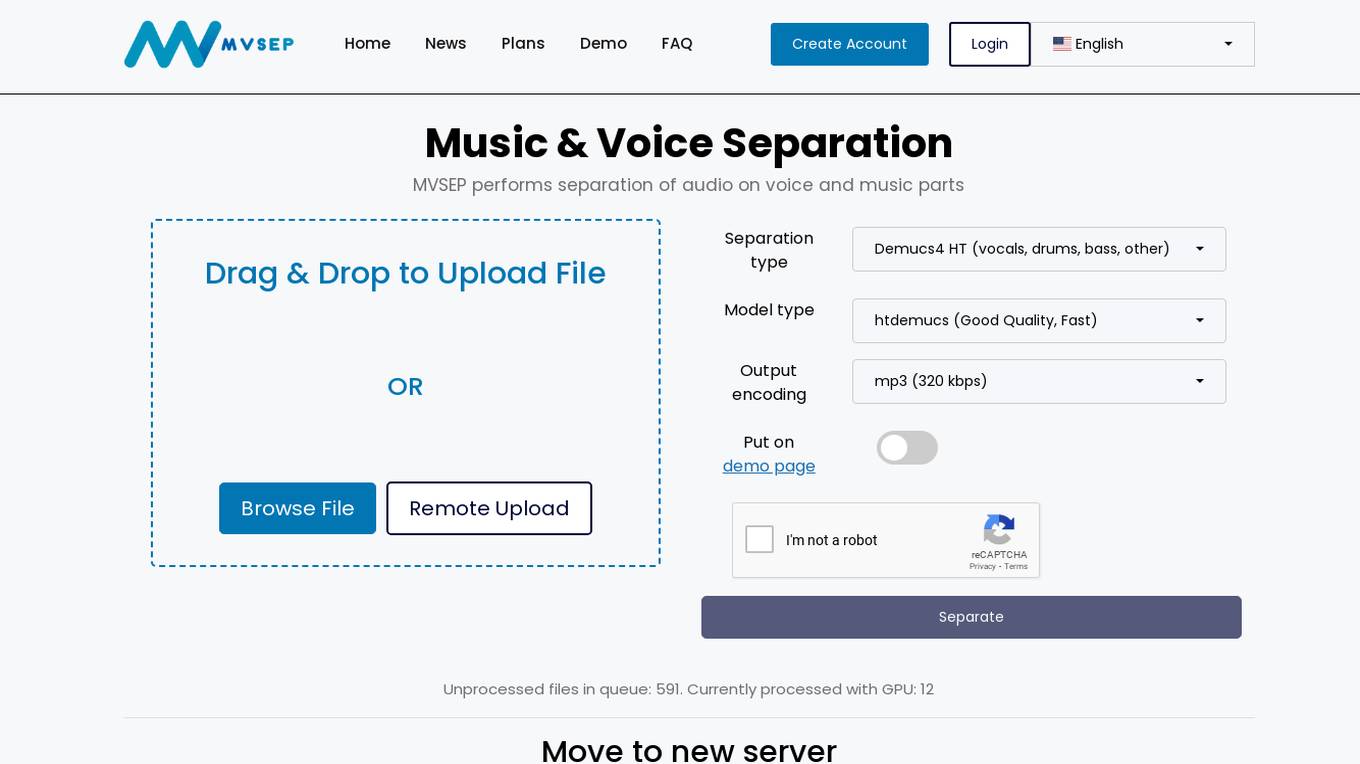

MVSEP - Music & Voice Separation

MVSEP is an AI-powered application that specializes in music and voice separation. It offers users the ability to separate audio files into voice and music parts using advanced algorithms and models. Users can easily upload files through drag and drop or remote upload features. The application provides various separation types, HQ models, and output encoding options to cater to different user needs. MVSEP aims to enhance the audio editing experience by providing high-quality results and a user-friendly interface.

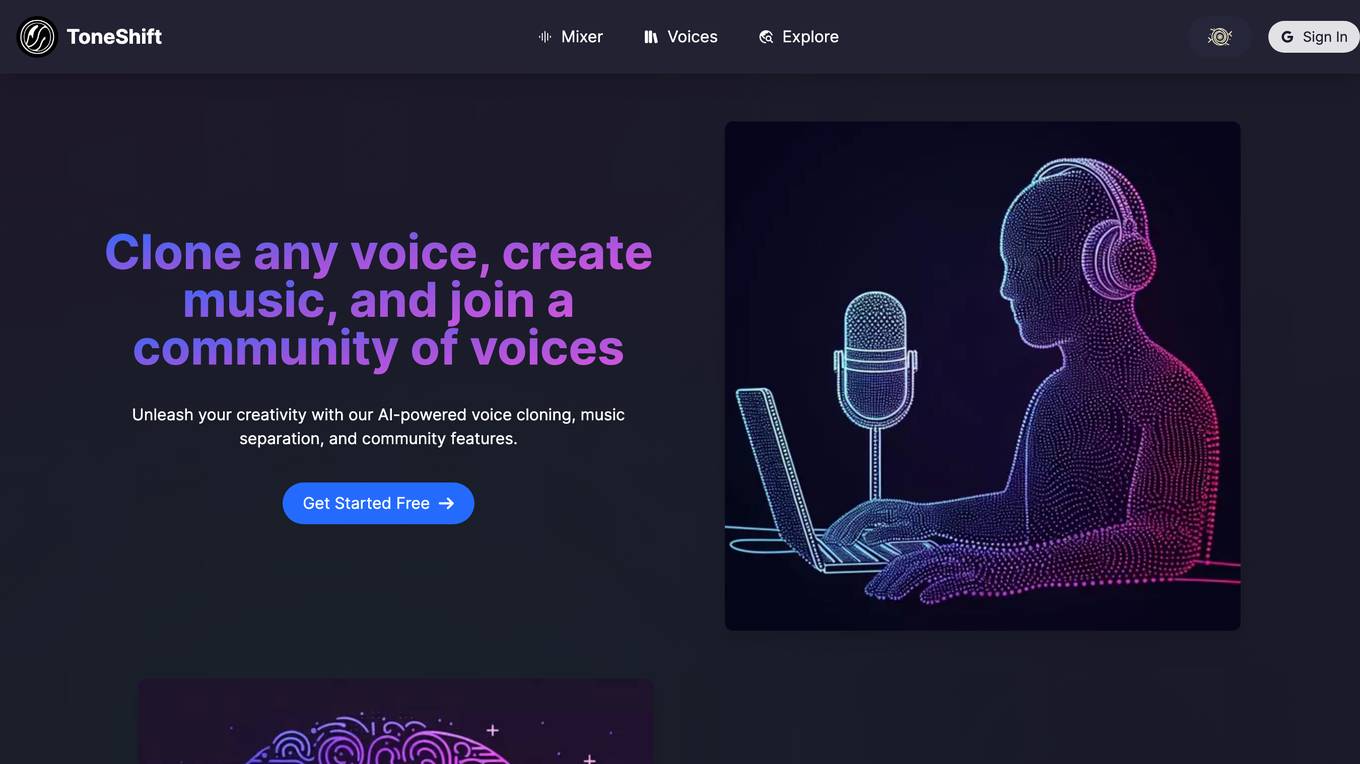

ToneShift

ToneShift is an AI-powered platform that allows users to clone voices, separate music, and join a community of voices. With ToneShift, users can transform recordings into versatile voices for various purposes, separate vocals and instrumentals from songs to create new remixes and mashups, and join a community to discover new tones, contribute their creations, and collaborate with others.

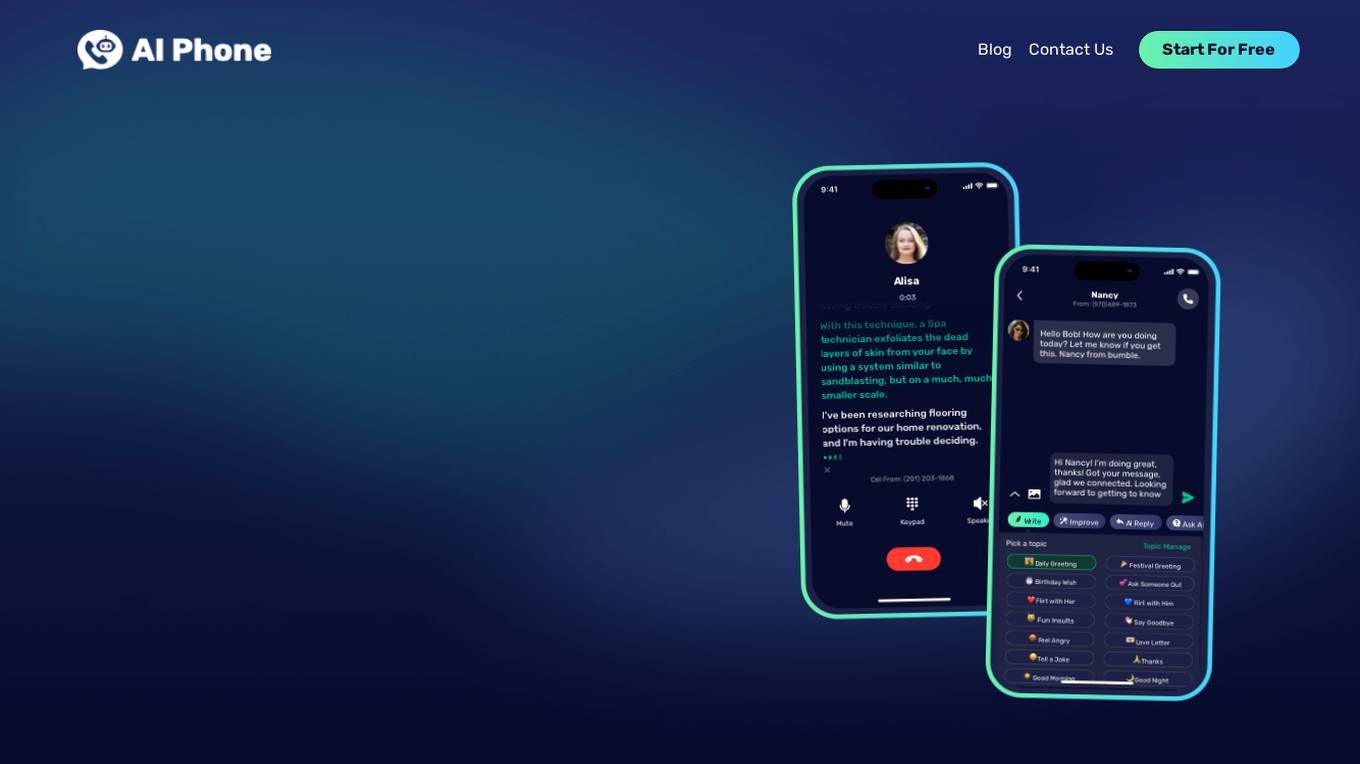

AI Phone

AI Phone is a mobile application that uses artificial intelligence to simplify and enhance phone calls. It offers real-time transcription, AI-generated summaries, call highlights, keyword detection, and a separate US phone number for work-life balance. The AI chat assistant can correct messages, provide recommendations, and suggest replies, reducing communication stress.

pyannote AI Speaker Intelligence Platform

The pyannote AI Speaker Intelligence Platform is an AI tool designed for developers to detect, segment, label, and separate speakers in any language. It offers speaker diarization models that identify the speakers in audio recordings. The tool is language-agnostic and offers features such as speaker partitioning, identification, overlapping speech detection, voice activity detection, speaker separation, and confidence scoring.

1 - Open Source AI Tools

ai-dj

OBSIDIAN-Neural is a real-time AI music generation VST3 plugin designed for live performance. Users type words and instantly receive musical loops, which they can trigger with MIDI while jamming. The plugin features an 8-track sampler with MIDI triggering, 4 pages per track for switching between variations, DAW sync, real-time generation without pre-recorded samples, and stem separation for isolated drums, bass, and vocals. It supports several setups: a server with a GPU, local models for offline use, and a free API option that requires no setup. OBSIDIAN-Neural is actively developed, with ongoing updates and bug fixes, and has received over 110 GitHub stars. It is dual-licensed under the GNU Affero General Public License v3.0, with a commercial license option for interested parties.