Best AI tools for< Reward Loyalty >

20 - AI tool Sites

Gamelight

Gamelight is a revolutionary AI platform for mobile games marketing. It utilizes advanced algorithms to analyze app usage data and users' behavior, creating detailed user profiles and delivering personalized game recommendations. The platform also features a loyalty program that rewards users with points for gameplay duration, fostering engagement and retention. Gamelight's ROAS Algorithm identifies users with the highest likelihood of making a purchase on your game, providing exclusive access to valuable data points for effective user acquisition.

PurplePro

PurplePro is an AI-powered loyalty club platform designed to help businesses launch and manage loyalty programs effortlessly. With features like referral management, streaks, quizzes, variable rewards, and automated triggers, PurplePro aims to enhance customer engagement, retention, and acquisition. The platform offers advanced customization and segmentation options, making it suitable for direct-to-consumer (D2C) brands looking to boost customer loyalty and increase revenue. PurplePro's AI capabilities enable users to create and implement effective loyalty campaigns in just a few clicks, without the need for coding knowledge. The platform also provides a seamless integration with Shopify, making it easy for businesses to set up and activate their loyalty programs.

Almonds Ai

Almonds Ai is a powerful and scalable AI-driven platform that focuses on channel engagement for businesses. It offers solutions such as B2B loyalty programs, interactive product learning, and hybrid/virtual events to enhance partner engagement and drive revenue growth. With features like platform customization, dedicated customer support, data & AI engine, and global recognition, Almonds Ai aims to deliver measurable conversions and return on experience for its users. The platform caters to various industries including technology, retail, auto, and banking, helping businesses engage, educate, and reward their channel partners effectively.

Pin-Up Casino Guatemala

Pin-Up Casino Guatemala is an online casino platform offering a wide range of games including slots, table games, live casino options, and special games. With high-quality graphics, smooth gameplay, and generous payouts, Pin-Up provides a thrilling and secure gaming experience in Guatemala. The platform rewards loyalty with bonuses, seasonal jackpots, and a VIP program. Licensed and regulated in Guatemala, Pin-Up ensures secure payment processing, mobile-friendly design, and transparency. Players can enjoy a variety of payment methods, 24/7 customer support, and mobile gaming without downloads. Trusted by thousands of players, Pin-Up promotes responsible gaming and provides tools for managing gambling habits. Join Pin-Up today for an exciting online gaming experience.

Bing Sign in Rewards

Bing Sign in Rewards is a loyalty program that allows users to earn points for using Bing search engine and other Microsoft products and services. Points can be redeemed for gift cards, merchandise, and other rewards.

Smart With Points

Smart With Points is a comprehensive website dedicated to helping users maximize their travel rewards and explore the world for less. The platform offers a wide range of tools, calculators, guides, and reviews related to frequent flyer miles, Avios, and Virgin Atlantic Flying Club points. Users can find valuable information on credit cards, airlines, loyalty programs, and travel deals to make informed decisions and optimize their points and miles usage.

OpenTable

OpenTable is a popular online restaurant reservation platform that allows users to discover restaurants, read reviews, and make reservations conveniently. With a vast network of restaurants across various cuisines and locations, OpenTable simplifies the dining experience for users by providing a seamless booking process. Users can explore restaurant options, view menus, check availability, and secure reservations with ease. The platform also offers features such as personalized recommendations, loyalty programs, and dining rewards to enhance the overall dining experience.

Gensbot

Gensbot is an innovative platform that empowers users to create personalized goods on demand. By leveraging advanced AI technology, Gensbot eliminates the hassle of searching, stressing, or second-guessing, offering a seamless and convenient online shopping experience. Users can simply prompt the AI with their desired product specifications, and Gensbot will generate unique designs tailored to their preferences. This user-centric approach extends to the production process, where Gensbot prioritizes local manufacturing to minimize shipping distances and carbon emissions, contributing to a greener planet. Additionally, Gensbot rewards users with tokens for every purchase, which can be redeemed for future designs or exclusive offers, fostering a sustainable and rewarding shopping experience.

Vouchery.io

Vouchery.io is an AI-powered Coupon & E-commerce Promo Management Software that offers an all-in-one promotional engine to help businesses orchestrate and deliver the right incentives at every stage of the customer lifecycle. It provides capabilities to create, distribute, and synchronize promotions, analyze data, maximize promo ROI, manage and collaborate on multiple budgets, detect coupon abuse, and personalize promotions using a flexible rule engine. The platform is trusted by leading brands across six continents and offers a programmable Coupon API for seamless integration with various voucher and coupon regulations.

Perspect

Perspect is an AI-powered platform designed for high-performance software teams. It offers real-time insights into team contributions and impact, optimizing developer experience, and rewarding high-performers. With 50+ integrations, Perspect enables visualization of impact, benchmarking performance, and uses machine learning models to identify and eliminate blockers. The platform is deeply integrated with web3 wallets and offers built-in reward mechanisms. Managers can align resources around crucial KPIs, identify top talent, and prevent burnout. Perspect aims to enhance team productivity and employee retention through AI and ML technologies.

Advantage Club

The Advantage Club is an AI-powered Employee Engagement Platform that offers solutions for workforce engagement through various features such as recognition, marketplace, wellness, incentive automation, communities, and pulse tracking. It digitizes rewarding processes, curates personalized vouchers and gifts, elevates well-being with a holistic wellness platform, automates sales contests, fosters inclusion through communities, and captures employee sentiments through surveys and quizzes. The platform integrates with global HRIS and communication tools, provides real-time analytics, and offers a seamless user experience for both employees and administrators.

Community Hub

Community Hub is a free-to-use, AI-powered community management platform that helps you automate tasks, reward members, and keep your community engaged. With Community Hub, you can:

MyShell

MyShell is an AI application that enables users to build, share, and own AI agents. It serves as a platform connecting users, creators, and open-source AI researchers. With MyShell, users can interact with AI friends and work companions, such as Shizuku and Emma 01 03, through voice and video conversations. The application empowers creators to leverage generative AI models to transform ideas into AI-native apps quickly. MyShell fosters a creator economy in the AI-native era, allowing anyone to become a creator, take ownership of their work, and be rewarded for their ideas.

Huntr

Huntr is the world's first bug bounty platform for AI/ML. It provides a single place for security researchers to submit vulnerabilities, ensuring the security and stability of AI/ML applications, including those powered by Open Source Software (OSS).

Bagel

Bagel is an AI & Cryptography Research Lab that focuses on making open source AI monetizable by leveraging novel cryptography techniques. Their innovative fine-tuning technology tracks the evolution of AI models, ensuring every contribution is rewarded. Bagel is built for autonomous AIs with large resource requirements and offers permissionless infrastructure for seamless information flow between machines and humans. The lab is dedicated to privacy-preserving machine learning through advanced cryptography schemes.

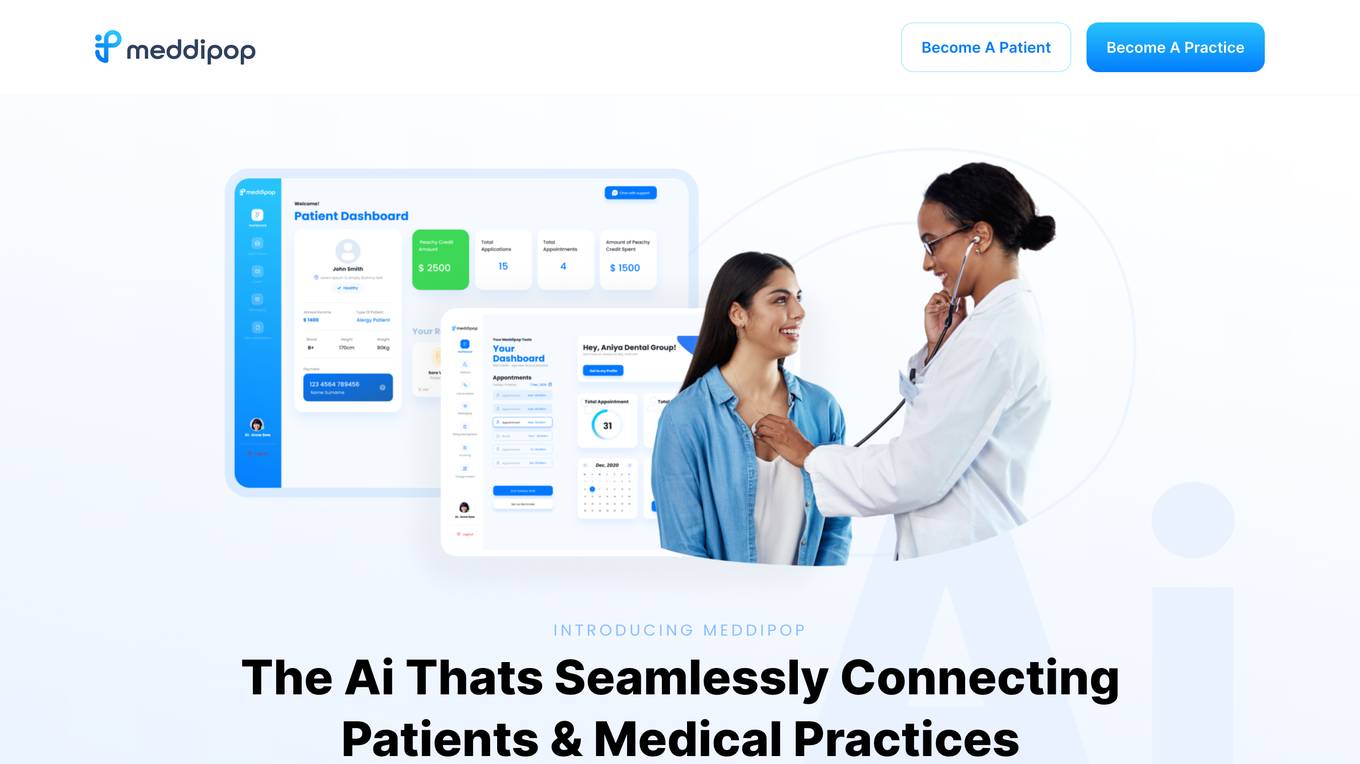

MeddiPop

MeddiPop is an AI-powered platform that seamlessly connects patients with medical practices in various industries such as plastic surgery, dermatology, cosmetic dentistry, and ophthalmology. The application streamlines the process by allowing patients to submit applications for services, which are then matched with the most suitable practice using AI algorithms. MeddiPop aims to revolutionize healthcare by simplifying patient-practice connections and optimizing appointment scheduling.

Zesh AI

Zesh AI is an advanced AI-powered ecosystem that offers a range of innovative tools and solutions for Web3 projects, community managers, data analysts, and decision-makers. It leverages AI Agents and LLMs to redefine KOL analysis, community engagement, and campaign optimization. With features like InfluenceAI for KOL discovery, EngageAI for campaign management, IDAI for fraud detection, AnalyticsAI for data analysis, and Wallet & NFT Profile for community empowerment, Zesh AI provides cutting-edge solutions for various aspects of Web3 ecosystems.

EVY

EVY is an AI co-creator tool designed to assist creators in producing authentic and engaging content. It helps users understand how algorithms reward content and provides actionable tips to improve engagement rates. EVY enables users to create unique human content, maintain consistency, and build sustainable audiences by focusing on quality over flashiness. The tool offers features such as writing assistance, idea generation, brand scaling, content optimization, and time-saving capabilities.

Giftpack

Giftpack is a global gifting platform that offers a smart gifting service to help businesses make a unique impression through personalized experiences. The platform leverages data and design to strengthen relationships, automate gifting operations, boost retention, and drive recurring revenue. With a diverse global catalog, Giftpack provides branded merchandise, vouchers, and experiences sourced locally and globally. The platform also offers integrated automation, intelligent gift customization powered by AI, and a reward program system. Giftpack aims to simplify gifting processes and enhance engagement and retention within organizations.

Teachr

Teachr is an online course creation platform that uses artificial intelligence to help users create and sell stunning courses. With Teachr, users can create interactive courses with 3D visuals, 360° perspectives, and augmented reality. They can also use speech recognition and AI voice-over technology to create engaging learning experiences. Teachr also offers a range of features to help users manage their courses, including a payment system, reward system, and fitness challenges. With Teachr, users can turn their expertise into a product that they can sell infinitely and create the perfect learning experience for their customers.

0 - Open Source AI Tools

19 - OpenAI Gpts

Investing in Biotechnology and Pharma

🔬💊 Navigate the high-risk, high-reward world of biotech and pharma investing! Discover breakthrough therapies 🧬📈, understand drug development 🧪📊, and evaluate investment opportunities 🚀💰. Invest wisely in innovation! 💡🌐 Not a financial advisor. 🚫💼

Gammy

Ti aiuto a conoscere soluzioni per il settore HR legate alla Gamification e agli Assessment Game

Options Explorer

Expert in U.S. stock options, adept at explaining strategies with simple language and charts.

Team Building

Office Team Building fun: Innovative team-building app for engaging, collaborative office activities, fun and games.

Reword

Reword: Your advanced text revison ally for your everyday writing! Simply ask Reword to reword your text, then paste your text into Reword's input field. Reword your written copy, emails, papers, text messages, and much more!

Total Rewards Generalist Advisor

Advises on employee compensation and benefits strategies.

Shop Rewards - AMZ Cashback

Amazon product shopping search, conveniently query products, get discounts and discounted products more quickly.

Executive Compensation Advisor

Guides organization's executive compensation strategy and decisions.

Chatflights Points Expert - USA & Canada

Got points to spend? Get expert advice on how to find and book flights in business class for credit card points and miles, from USA or Canada.

Decision Journal

Decision Journal can help you with decision making, keeping track of the decisions you've made, and helping you review them later on.