Best AI tools for Research Object History

20 - AI tool Sites

Luma AI

Luma AI is an AI-powered platform that specializes in video generation using advanced models like Ray2 and Dream Machine. The platform offers director-grade control over style, character, and setting, allowing users to reshape videos with ease. Luma AI aims to build multimodal general intelligence that can generate, understand, and operate in the physical world, paving the way for creative, immersive, and interactive systems beyond traditional text-based approaches. The platform caters to creatives in various industries, offering powerful tools for worldbuilding, storytelling, and creative expression.

Luma AI

Luma AI is a 3D capture platform that allows users to create interactive 3D scenes from videos. With Luma AI, users can capture 3D models of people, objects, and environments, and then use those models to create interactive experiences such as virtual tours, product demonstrations, and training simulations.

AI Umbrella

AI Umbrella is a comprehensive platform offering a wide range of powerful AI tools and solutions. It serves as a one-stop destination for individuals and businesses seeking to leverage artificial intelligence for various purposes. With a user-friendly interface and cutting-edge technology, AI Umbrella empowers users to streamline their workflows, enhance decision-making processes, and unlock new possibilities through the application of AI algorithms and models.

Amazon Science

Amazon Science is a research and development organization within Amazon that focuses on developing new technologies and products in the fields of artificial intelligence, machine learning, and computer science. The organization is home to a team of world-renowned scientists and engineers who are working on a wide range of projects, including developing new algorithms for machine learning, building new computer vision systems, and creating new natural language processing tools. Amazon Science is also responsible for developing new products and services that use these technologies, such as the Amazon Echo and the Amazon Fire TV.

CVF Open Access

The Computer Vision Foundation (CVF) is a non-profit organization dedicated to advancing the field of computer vision. CVF co-sponsors several conferences and workshops each year, including the International Conference on Computer Vision (ICCV), the Conference on Computer Vision and Pattern Recognition (CVPR), and the Winter Conference on Applications of Computer Vision (WACV). The CVF Open Access website provides access to the full text of all CVF-sponsored conference papers. These papers are available for free download in PDF format. The CVF Open Access website also includes links to the arXiv versions of the papers, where available.

Salesforce AI Blog

Salesforce AI Blog is a blog covering AI research topics such as accountability, accuracy, AI agents, AI coding, AI ethics, AI object detection, deep learning, forecasting, generative AI, and more. The blog showcases cutting-edge research, advancements, and projects in the field of artificial intelligence. It also highlights the work of Salesforce Research team members and their contributions to the AI community.

TensorFlow

TensorFlow is an end-to-end platform for machine learning. It provides a wide range of tools and resources to help developers build, train, and deploy ML models. TensorFlow is used by researchers and developers all over the world to solve real-world problems in a variety of domains, including computer vision, natural language processing, and robotics.

Keras

Keras is an open-source deep learning API written in Python, designed to make building and training deep learning models easier. It provides a user-friendly interface and a wide range of features and tools to help developers create and deploy machine learning applications. Keras is compatible with multiple frameworks, including TensorFlow, Theano, and CNTK, and can be used for a variety of tasks, including image classification, natural language processing, and time series analysis.

Joseph Chet Redmon's Computer Vision Platform

The website is a platform maintained by Joseph Chet Redmon, a graduate student working on computer vision. It features information on his projects, publications, talks, and teaching activities. The site also includes details about the Darknet Neural Network Framework, tactics in Coq, and research work. Visitors can learn about computer vision, object recognition, and visual question answering through the resources provided on the site.

Vansh

Vansh is an AI tool developed by a tech enthusiast. It specializes in Vision AI and Vispark technologies. The tool offers advanced features for image recognition, object detection, and visual data analysis. With a user-friendly interface, Vansh caters to both beginners and experts in the field of artificial intelligence.

Grok-1.5 Vision

Grok-1.5 Vision (Grok-1.5V) is a multimodal AI model developed by xAI, Elon Musk's AI research lab. Grok-1.5V combines the capabilities of computer vision, natural language processing, and other AI techniques to provide a comprehensive understanding of the world around us. With its ability to analyze and interpret visual data, Grok-1.5V can assist in tasks such as object recognition, image classification, and scene understanding. Additionally, its natural language processing capabilities enable it to comprehend and generate human language, making it a powerful tool for communication and information retrieval. Grok-1.5V's multimodal nature sets it apart from text-only AI models, allowing it to handle complex tasks that require a combination of visual and linguistic understanding. This makes it a valuable asset for applications in fields such as healthcare, manufacturing, and customer service.

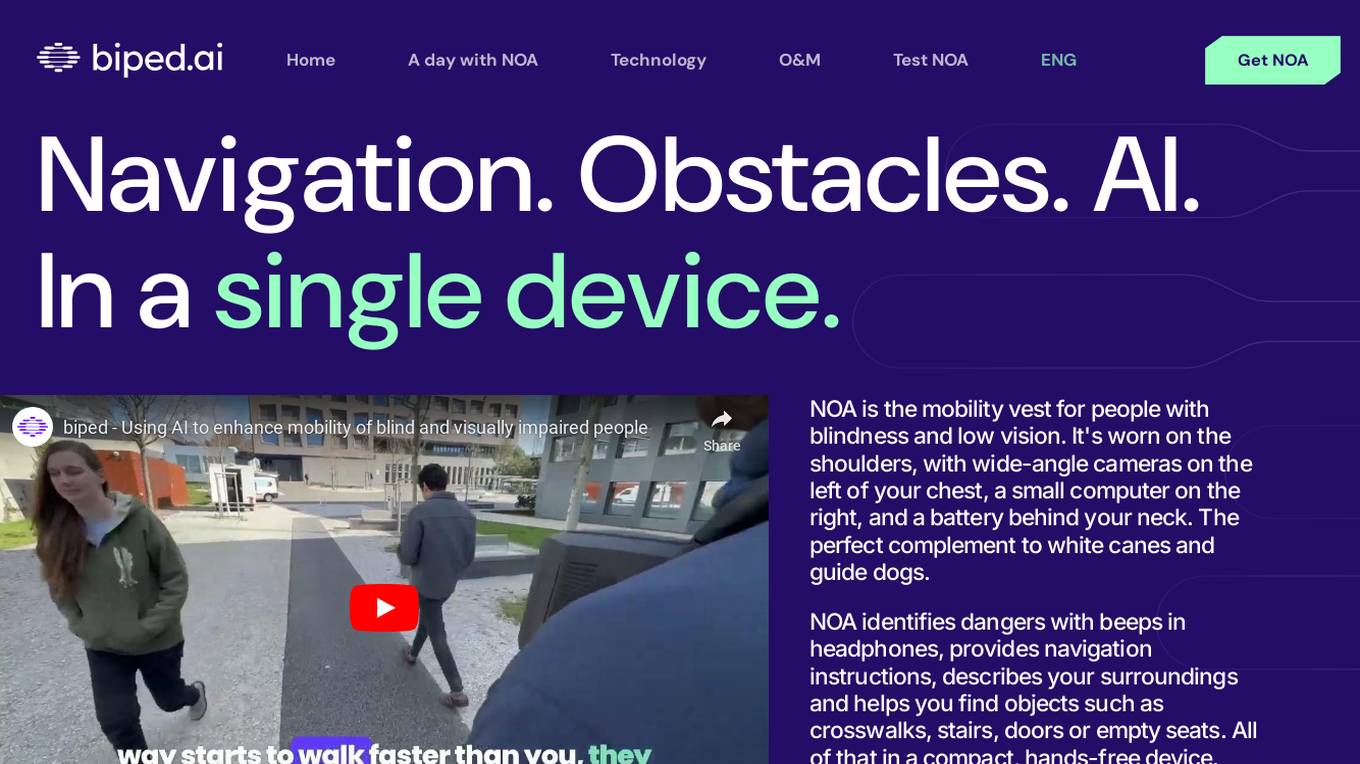

NOA

NOA by biped.ai is a revolutionary mobility vest designed to enhance the independence and safety of individuals with blindness and low vision. It combines cutting-edge AI technology with wearable devices to provide real-time navigation instructions, obstacle detection, and object finding capabilities. NOA is a hands-free solution that complements traditional mobility aids like white canes and guide dogs, offering a compact and lightweight design for seamless integration into daily life. Developed through extensive research and collaboration with experts in the field, NOA aims to empower users to navigate their surroundings with confidence and ease.

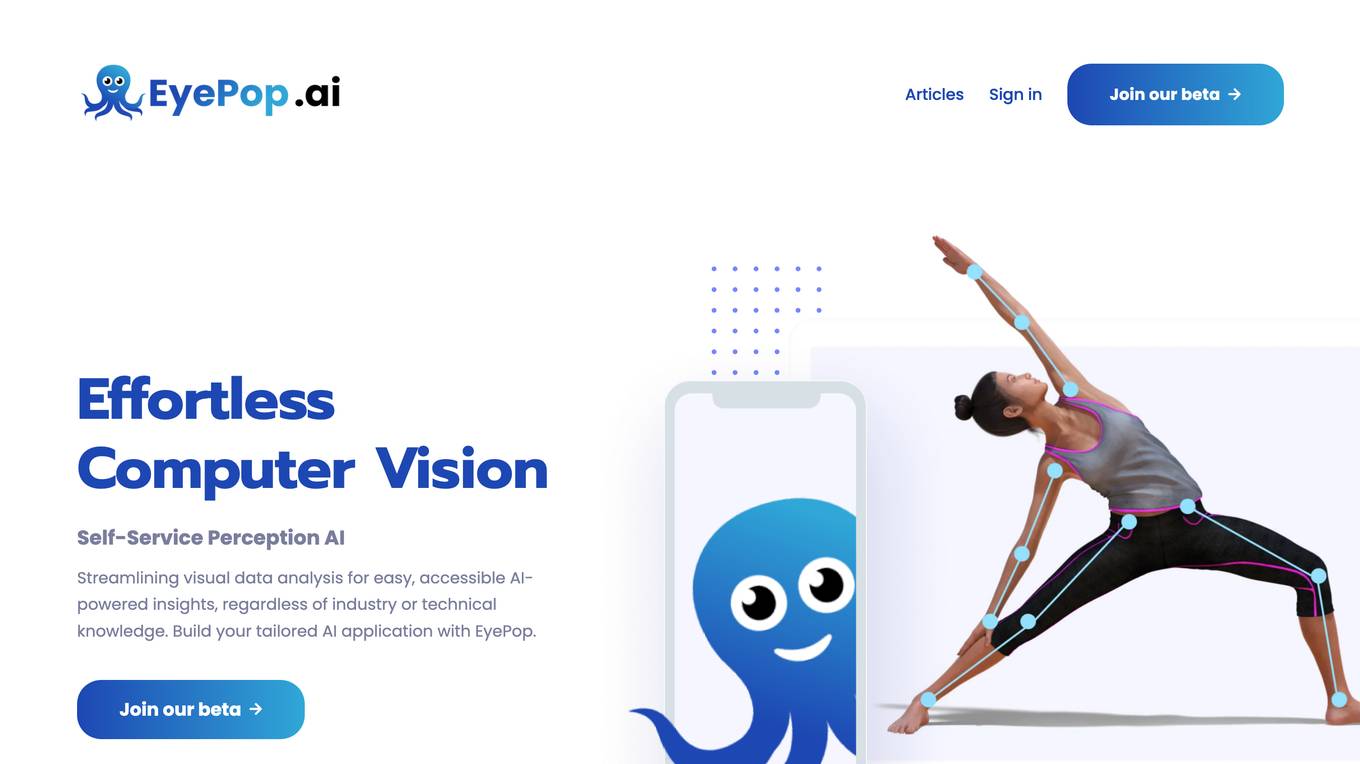

EyePop.ai

EyePop.ai is a hassle-free AI vision partner designed for innovators to easily create and own custom AI-powered vision models tailored to their visual data needs. The platform simplifies building AI-powered vision models through a fast, intuitive, and fully guided process without the need for coding or technical expertise. Users define their target, upload data, train their model, deploy and detect, and then iterate and improve to ensure effective AI solutions. EyePop.ai offers a pre-trained model library, a self-service training platform, and future-ready solutions to help users innovate faster, offer unique solutions, and make real-time decisions effortlessly.

Tengr.ai - Image AI

Tengr.ai is an AI tool that specializes in image analysis and recognition. It uses advanced artificial intelligence algorithms to analyze images and extract valuable insights. The tool is designed to help businesses and individuals automate image processing tasks, improve accuracy, and save time. With Tengr.ai, users can easily classify images, detect objects, recognize text, and perform various image-related tasks with high precision.

Visual Computing & Artificial Intelligence Lab at TUM

The Visual Computing & Artificial Intelligence Lab at TUM is a group of research enthusiasts advancing cutting-edge research at the intersection of computer vision, computer graphics, and artificial intelligence. Our research mission is to obtain highly realistic digital replicas of the real world, which include representations of detailed 3D geometries, surface textures, and material definitions of both static and dynamic scene environments. In our research, we build heavily on advances in modern machine learning and develop novel methods that enable us to learn strong priors to fuel 3D reconstruction techniques. Ultimately, we aim to obtain holographic representations that are visually indistinguishable from the real world, ideally captured from a simple webcam or mobile phone. We believe this is a critical component in facilitating immersive augmented and virtual reality applications, and will have a substantial positive impact on modern digital societies.

AIModels.fyi

AIModels.fyi is a website that helps users find the best AI model for their startup. The website provides a weekly rundown of the latest AI models and research, and also allows users to search for models by category or keyword. AIModels.fyi is a valuable resource for anyone looking to use AI to solve a problem.

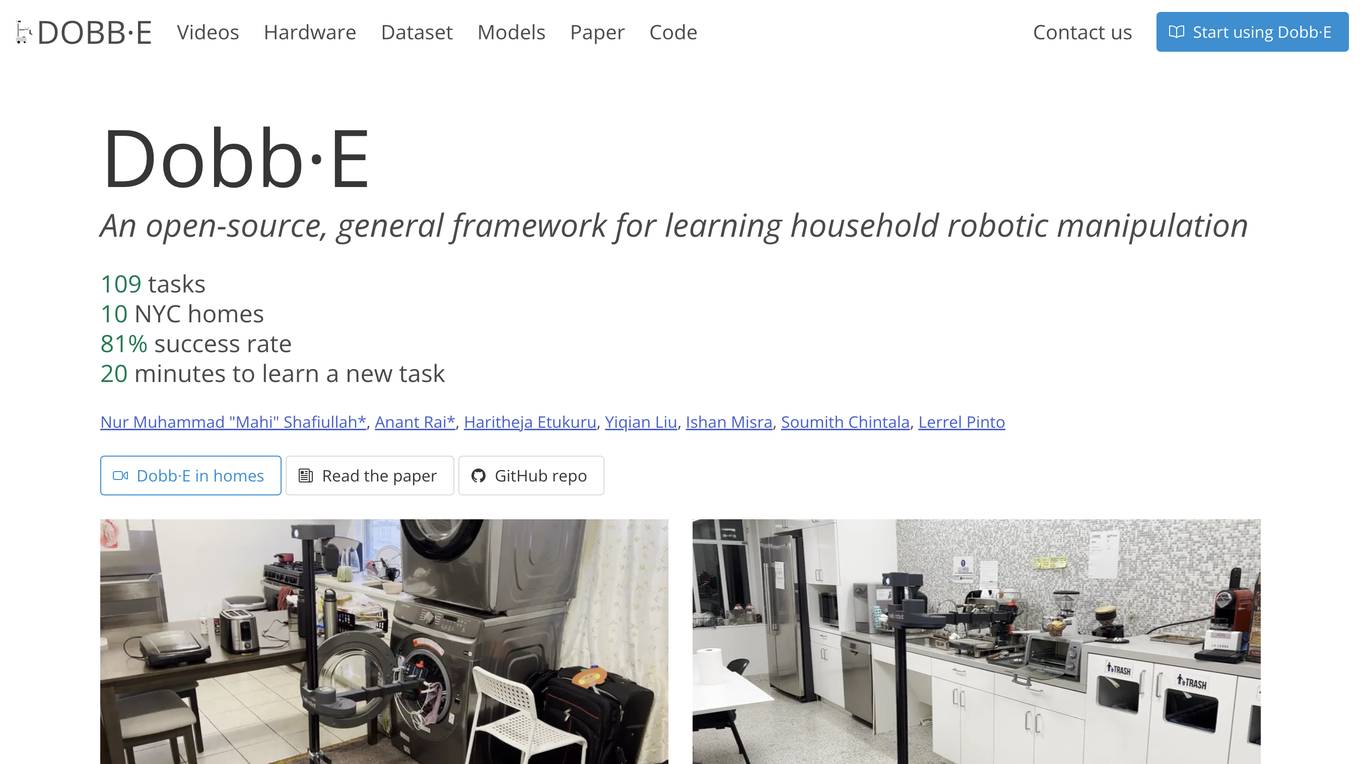

Dobb·E

Dobb·E is an open-source, general framework for learning household robotic manipulation. It aims to create a 'generalist machine' for homes, a domestic assistant that can adapt and learn various tasks cost-effectively. Dobb·E can learn a new task with just five minutes of demonstration, achieving an 81% success rate in 10 NYC homes. The system is designed to accelerate research on home robots and eventually enable robot butlers in every home.

Future Tools

Future Tools is a website that collects and organizes AI tools. It provides a comprehensive list of AI tools categorized into various domains, including AI detection, aggregators, avatar chat, copywriting, finance, gaming, generative art, generative code, generative video, image improvement, image scanning, inspiration, marketing, motion capture, music, podcasting, productivity, prompt guides, research, self-improvement, social media, speech-to-text, text-to-speech, text-to-video, translation, video editing, and voice modulation. The website also offers a search bar to help users find specific tools based on their needs.

Google Research

Google Research is a leading research organization focusing on advancing science and artificial intelligence. They conduct research in various domains such as AI/ML foundations, responsible human-centric technology, science & societal impact, computing paradigms, and algorithms & optimization. Google Research aims to create an environment for diverse research across different time scales and levels of risk, driving advancements in computer science through fundamental and applied research. They publish hundreds of research papers annually, collaborate with the academic community, and work on projects that impact technology used by billions of people worldwide.

Google Research

Google Research is a team of scientists and engineers working on a wide range of topics in computer science, including artificial intelligence, machine learning, and quantum computing. Our mission is to advance the state of the art in these fields and to develop new technologies that can benefit society. We publish hundreds of research papers each year and collaborate with researchers from around the world. Our work has led to the development of many new products and services, including Google Search, Google Translate, and Google Maps.

0 - Open Source AI Tools

20 - OpenAI Gpts

Antique and Collectible Appraisal GPT

All-encompassing antique and collectible appraisal assistant offering dollar estimates.

Deep Learning Master

Guiding you through the depths of deep learning with accuracy and respect.

Print Tech Guru

Expert in 3D printing innovations, offering in-depth insights and analysis.

Research Paper Explorer

Explains Arxiv papers with examples, analogies, and direct PDF links.

Kemi - Research & Creative Assistant

I improve marketing effectiveness by designing stunning research-led assets in a flash!

Research Radar: Tracking social sciences

Spot emerging trends in the latest social science research (also see "Research Radar" for all disciplines).