Best AI tools for researching environmental data

20 AI Tool Sites

DWE.ai

DWE.ai (DeepWater Exploration) is an AI-powered platform for underwater imaging. It offers marine optics that capture clear underwater imagery and give marine robots, surface vehicles, aquaculture farms, and aerial drones advanced mapping and vision capabilities. Its cost-effective equipment is used to monitor subsea assets, carry out detailed inspections, and collect environmental data for underwater research and operations.

Climate Policy Radar

Climate Policy Radar is an AI-powered application that serves as a live, searchable database of more than 5,000 national climate laws, policies, and UN submissions. It aims to organize, analyze, and democratize climate data by publishing open data, code, and machine learning models. The project promotes a responsible approach to AI, fosters a climate NLP community, and offers an API through which organizations can use the data. By addressing the problem of sparse, siloed climate information, it helps decision-makers develop evidence-based policies that accelerate climate action.

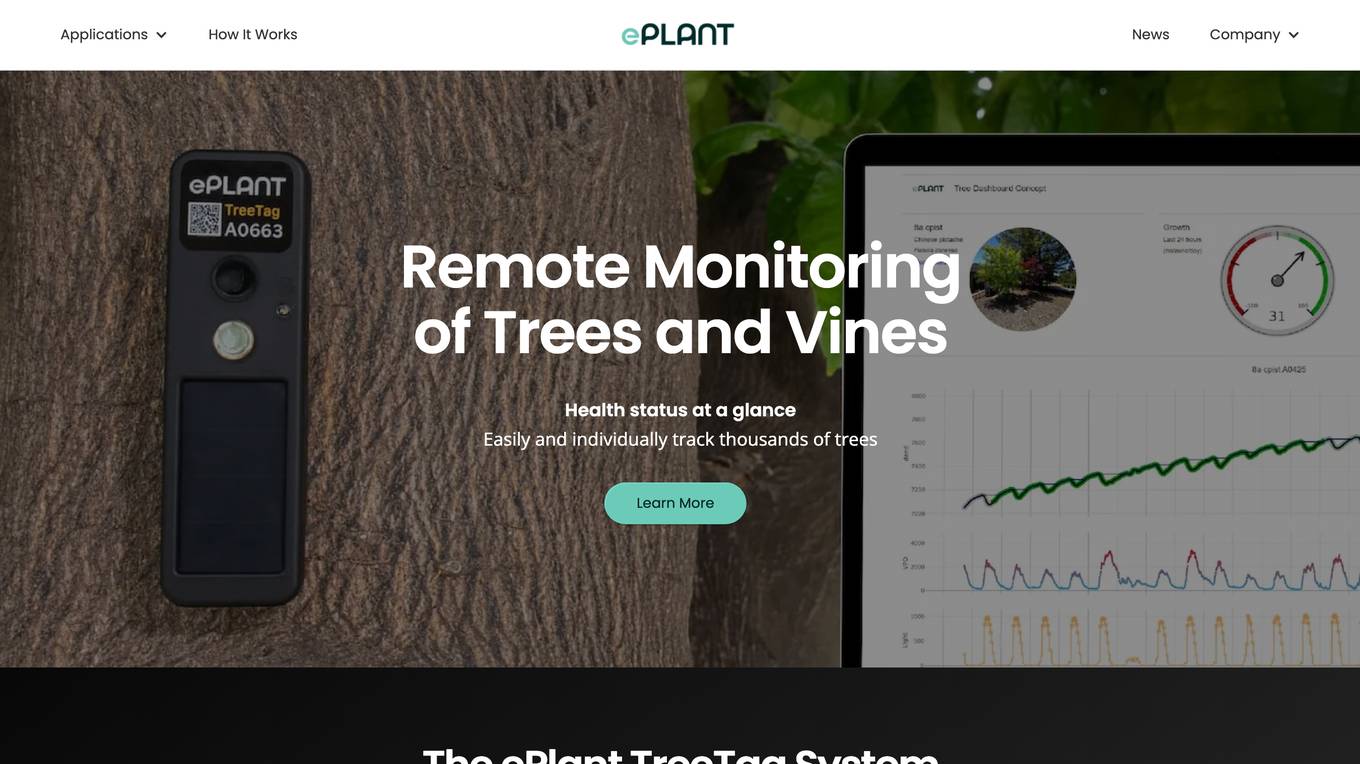

ePlant

ePlant offers precision tree monitoring for tree care and plant research, from the lab to the landscape. Its wireless monitoring systems use advanced sensors that let plant researchers and consulting arborists collect and analyze data efficiently and make better decisions. Users can track plant growth, water stress, tree lean, and sway with the platform's sensors and data visualization tools. ePlant aims to simplify data management and turn complex datasets into actionable insights for plant science and arboriculture.
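
ePlant does not document how its sensors compute tree lean, so the following is only a sketch of one common approach. It assumes a hypothetical three-axis accelerometer mounted with its z-axis along the trunk and read while the tree is still: the lean angle is then the angle between the measured gravity vector and that axis. The function names are illustrative, not part of any ePlant API.

```python
import math

def lean_angle_degrees(ax: float, ay: float, az: float) -> float:
    """Tilt of the sensor's z-axis away from vertical, in degrees.

    Assumes (hypothetically) the z-axis points up the trunk and the tree is
    momentarily still, so the reading is dominated by gravity. The units of
    ax, ay, az only need to be consistent (e.g. g or m/s^2).
    """
    horizontal = math.hypot(ax, ay)  # gravity component perpendicular to the trunk axis
    return math.degrees(math.atan2(horizontal, az))

def lean_direction_degrees(ax: float, ay: float) -> float:
    """Direction of lean in the sensor's own x-y frame (0 degrees = +x axis)."""
    return math.degrees(math.atan2(ay, ax))

# Illustrative readings in g, not real ePlant data.
print(lean_angle_degrees(0.0, 0.0, 1.0))        # upright trunk
print(lean_angle_degrees(0.1736, 0.0, 0.9848))  # trunk leaning about 10 degrees along +x
print(lean_direction_degrees(0.1736, 0.0))      # lean points along the sensor's +x axis
```

While the trunk is swaying, the tree's own acceleration adds to gravity, so this static formula is only an approximation during movement.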

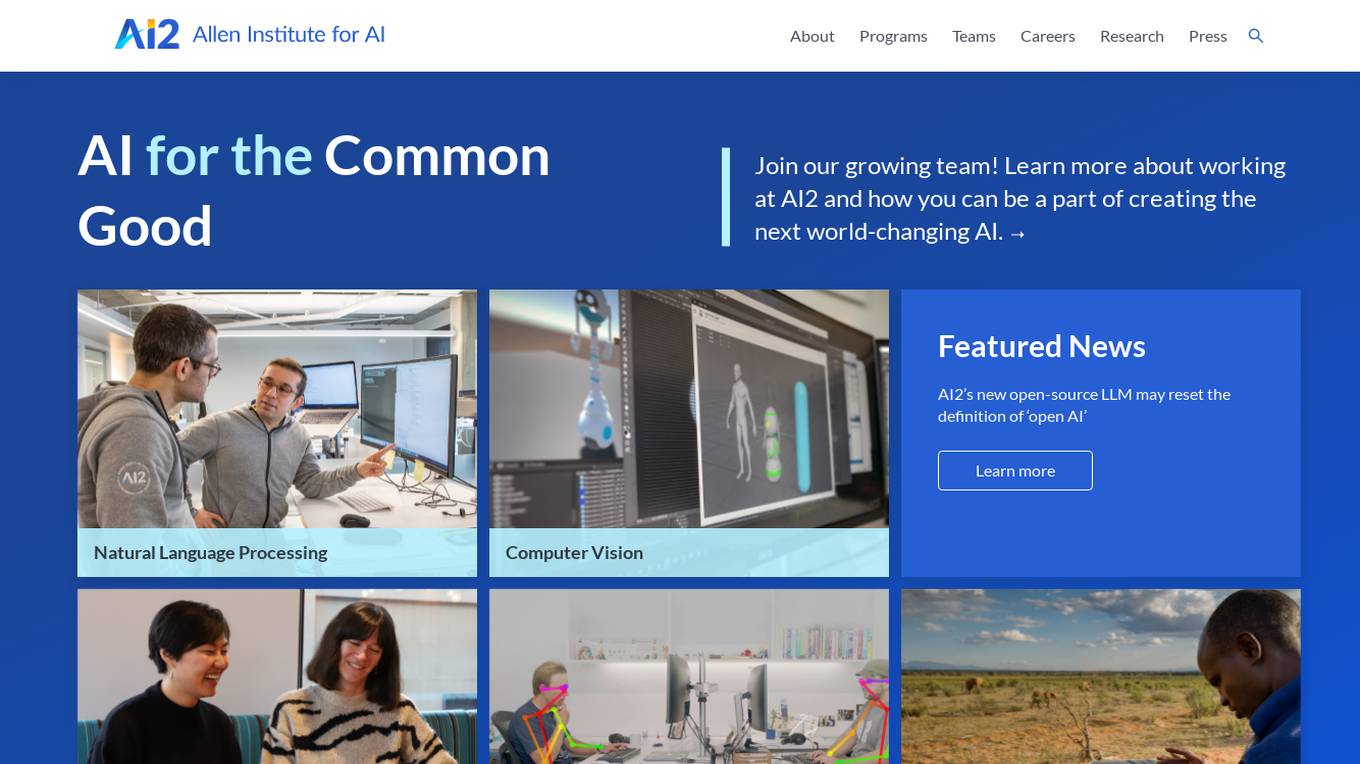

Allen Institute for AI (AI2)

The Allen Institute for AI (AI2) is a leading research institute dedicated to advancing artificial intelligence for the common good. It focuses on natural language processing, computer vision, and AI applications for the environment. AI2 brings together diverse teams to tackle hard problems in AI research, with the aim of creating world-changing AI solutions. The institute promotes diversity, equity, and inclusion in the research community and offers opportunities for individuals to contribute to impactful AI projects.

Climate Change AI

Climate Change AI is a global non-profit organization that focuses on catalyzing impactful work at the intersection of climate change and machine learning. They provide resources, reports, events, and grants to support the use of machine learning in addressing climate change challenges.

Telborg

Telborg is a global climate news platform that provides reliable, unbiased, ad-free reporting on climate and energy. Its coverage includes nature-based solutions, climate finance, renewables, energy storage, hydrogen, synthetic fuels, nuclear and geothermal energy, AI and robotics for climate, materials and critical minerals, biodiversity and carbon removal, industrial decarbonization, and ClimateTech. Telborg sources its news directly from official sources worldwide, which supports the credibility and independence of its reporting.

Climate Change AI

Climate Change AI is a community platform dedicated to leveraging artificial intelligence to address the challenges of climate change. The platform serves as a hub for researchers, practitioners, and policymakers to collaborate, share knowledge, and develop AI solutions for mitigating the impacts of climate change. By harnessing the power of AI technologies, Climate Change AI aims to accelerate the transition to a sustainable and resilient future.

LAION

LAION is a non-profit organization that provides datasets, tools, and models to advance machine learning research. The organization's goal is to promote open public education and encourage the reuse of existing datasets and models to reduce the environmental impact of machine learning research.

Dog Age Calculator

The Dog Age Calculator is an AI-powered tool that converts a dog's age into human years using scientific research and breed-specific data. It accounts for DNA methylation research, genetic factors, breed-specific aging patterns, individual health characteristics, and environmental influences on aging. The tool compares the research-based method with the traditional method of age calculation and points out the limitations of the latter. Users can calculate their dog's age in human years and receive care recommendations for the dog's life stage.
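
The listing does not say which formula the calculator uses. As a hedged illustration of the two approaches it compares, the sketch below contrasts the traditional "multiply by seven" rule with the logarithmic mapping human_age = 16 ln(dog_age) + 31, which Wang et al. (2020) derived from DNA methylation data in Labrador retrievers. That formula is not breed-adjusted, and the function names are hypothetical.

```python
import math

def traditional_human_years(dog_years: float) -> float:
    """Popular rule of thumb: one dog year equals seven human years."""
    return 7.0 * dog_years

def methylation_human_years(dog_years: float) -> float:
    """Epigenetic-clock mapping human_age = 16 * ln(dog_age) + 31.

    Fitted on Labrador retrievers, so it ignores breed and individual health.
    """
    if dog_years <= 0:
        raise ValueError("dog_years must be positive")
    return 16.0 * math.log(dog_years) + 31.0

# Print both estimates side by side for a few ages.
for age in (1, 2, 5, 10):
    print(f"{age:>2} dog years: rule of 7 = {traditional_human_years(age):4.0f}, "
          f"methylation = {methylation_human_years(age):4.1f}")
```

A one-year-old dog maps to 31 human years under the logarithmic formula but only 7 under the traditional rule, which shows how far the two methods can diverge for young dogs.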

Deepfake Detection Challenge Dataset

The Deepfake Detection Challenge (DFDC) Dataset is a project launched by Facebook AI to accelerate the development of new ways to detect deepfake videos. Created in collaboration with industry leaders and academic experts, it comes in two versions: a preview dataset of about 5,000 videos and a full dataset of about 124,000 videos, both produced using facial modification algorithms. The dataset was used in a Kaggle competition to develop better models for detecting manipulated media. The top-performing models achieved high accuracy on the public dataset but performed worse on the held-out black-box dataset, underscoring the importance of generalization in deepfake detection. The project aims to encourage the research community to keep advancing the detection of harmful manipulated media.
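
The DFDC Kaggle competition ranked entries by binary log loss, which penalizes confident wrong answers heavily, so overconfident predictions on unfamiliar videos are costly. A minimal sketch of the metric follows; the labels and predictions are made up purely to illustrate a generalization gap and do not come from the competition.

```python
import math
from typing import Sequence

def binary_log_loss(labels: Sequence[int], probs: Sequence[float], eps: float = 1e-15) -> float:
    """Mean binary cross-entropy, where label 1 = fake and 0 = real.

    Probabilities are clipped to [eps, 1 - eps] so a single confident
    mistake cannot produce an infinite loss.
    """
    if len(labels) != len(probs):
        raise ValueError("labels and probs must have the same length")
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(labels)

# Hypothetical predictions for the same four videos.
labels = [1, 0, 1, 0]
public_preds = [0.9, 0.1, 0.8, 0.2]   # model looks sharp on familiar data
heldout_preds = [0.6, 0.5, 0.3, 0.4]  # the same model on unseen manipulations
print(binary_log_loss(labels, public_preds), binary_log_loss(labels, heldout_preds))
```

Predicting 0.5 for every video scores ln 2 (about 0.693), a useful baseline: a detector that does worse than this on held-out data is less informative than guessing.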

Domino Data Lab

Domino Data Lab is an enterprise AI platform that enables data scientists and IT leaders to build, deploy, and manage AI models at scale. It provides a unified platform for accessing data, tools, compute, models, and projects across any environment. Domino also fosters collaboration, establishes best practices, and tracks models in production to accelerate and scale AI while ensuring governance and reducing costs.

Domino Data Lab

Domino Data Lab is an enterprise AI platform that enables users to build, deploy, and manage AI models across any environment. It fosters collaboration, establishes best practices, and ensures governance while reducing costs. The platform provides access to a broad ecosystem of open-source and commercial tools and infrastructure, helping users accelerate and scale the impact of AI. Domino serves as a central hub for AI operations and knowledge, offering integrated workflows, automation, and hybrid multicloud capabilities. It helps users optimize compute utilization, enforce compliance, and centralize knowledge across teams.

Google Research

Google Research is a leading research organization focused on advancing science and artificial intelligence. It conducts research in domains such as AI/ML foundations, responsible human-centric technology, science and societal impact, computing paradigms, and algorithms and optimization. Google Research aims to support diverse research across different time scales and levels of risk, advancing computer science through both fundamental and applied research. It publishes hundreds of research papers each year, collaborates with the academic community, and works on projects that affect technology used by billions of people worldwide.

The Institute for the Advancement of Legal and Ethical AI (ALEA)

The Institute for the Advancement of Legal and Ethical AI (ALEA) is an organization dedicated to supporting socially, economically, and environmentally sustainable futures through open research and education. It focuses on developing legal and ethical frameworks that ensure AI systems benefit society while minimizing harm to the economy and the environment. ALEA's activities include open data collection, model training, technical and policy research, education, and community building, all aimed at promoting the responsible use of AI.

RapidAI Research Institute

RapidAI Research Institute is a non-commercial academic institution operating under the RapidAI open-source organization. It serves as a platform for academic research and collaboration, giving aspiring researchers opportunities to publish papers and take part in scholarly activities. The institute offers mentorship programs and member benefits, including access to resources such as internet connectivity, GPU configurations, and storage space. Its management team consists of experienced professionals in the field, fostering an environment that supports academic growth and development.

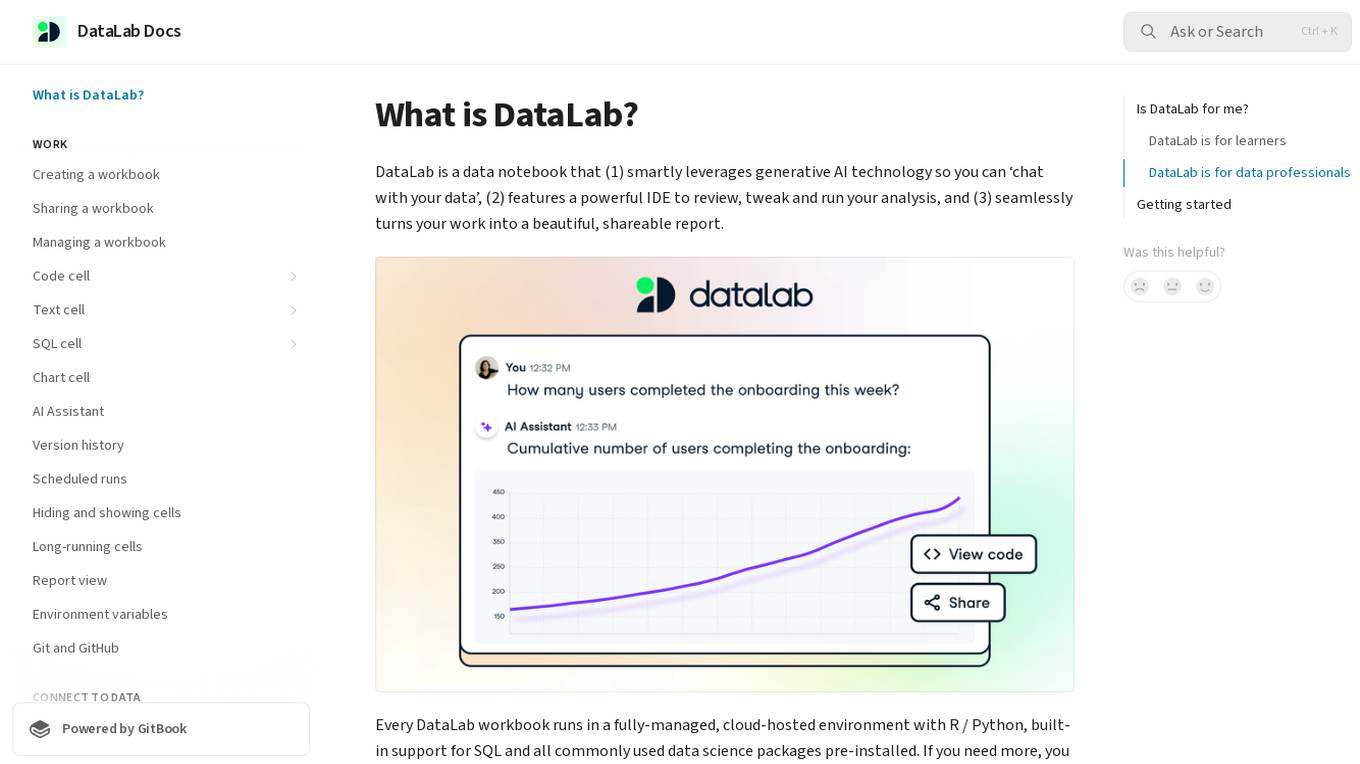

DataLab

DataLab is a data notebook that uses generative AI to let users 'chat with their data'. It includes an IDE for analysis and turns work into shareable reports. The application runs in a cloud-hosted environment with support for R, Python, SQL, and common data science packages. Users can connect to external databases, collaborate in real time, and use an AI Assistant for code generation and error correction.

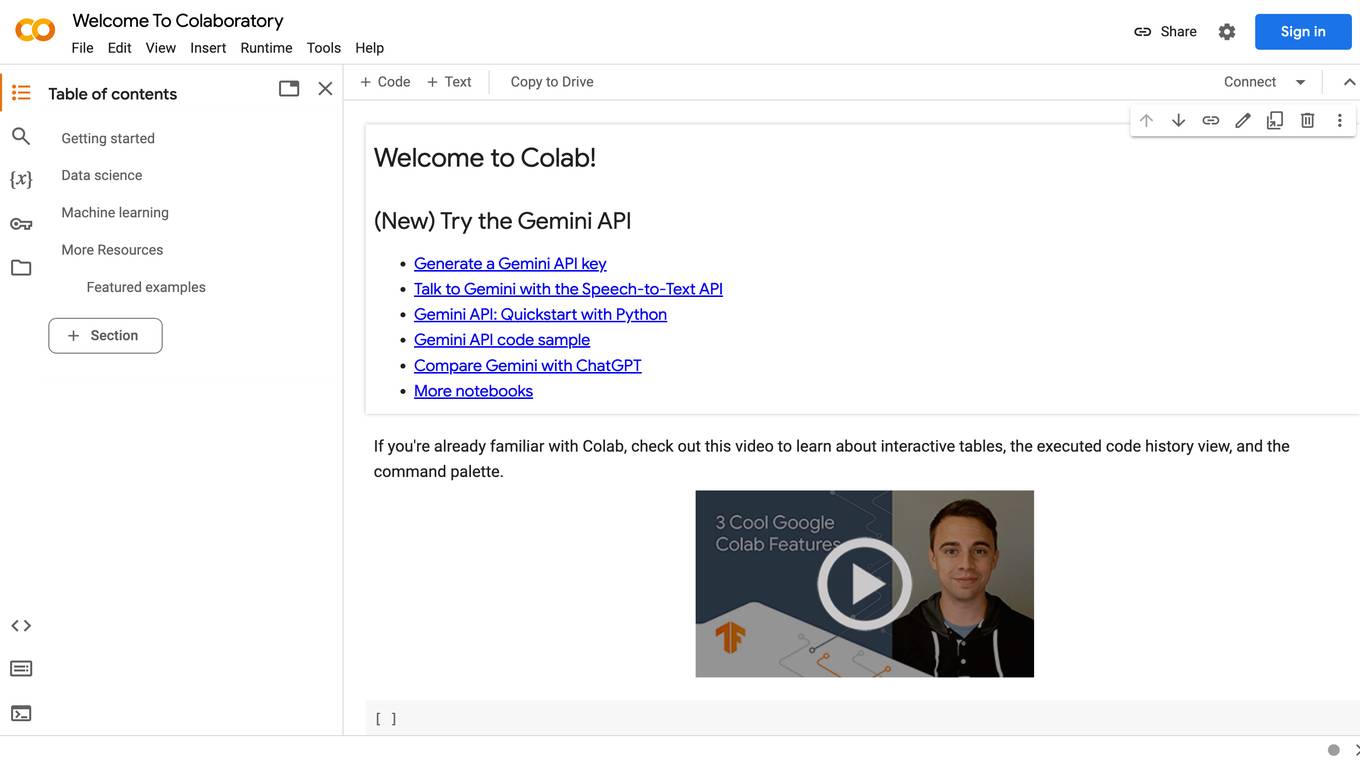

Google Colab

Google Colab is a free Jupyter notebook environment that runs in the cloud. It allows you to write and execute Python code without having to install any software or set up a local environment. Colab notebooks are shareable, so you can easily collaborate with others on projects.

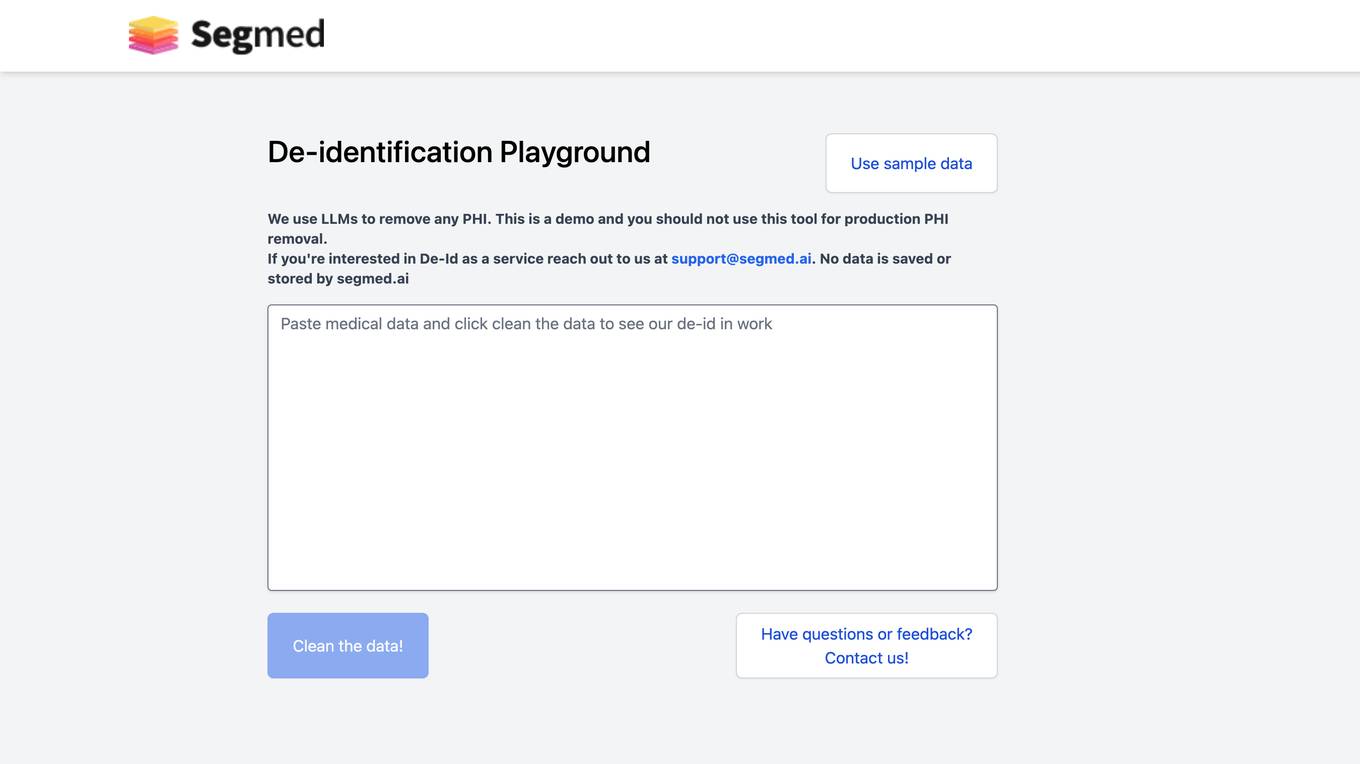

Segmed

Segmed offers a free Medical Data De-Identification Tool that uses NLP and language models to remove protected health information (PHI), supporting privacy-compliant medical research. The tool is intended for demonstration only; users who need de-identification at scale can contact Segmed about De-Id as a service. Segmed.ai does not save or store any data, so the tool provides a secure environment for cleaning medical data. Users can try it on sample data and learn about Segmed's de-identified clinical data solutions.
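
Segmed's tool relies on NLP and language models whose internals the listing does not describe. As a much simpler, swapped-in technique, the sketch below shows rule-based redaction with regular expressions: each pattern for a category of identifier is replaced by a placeholder tag. The pattern set and sample note are hypothetical and deliberately incomplete; names and other free-text identifiers generally need NLP models rather than regexes.

```python
import re

# Hypothetical, deliberately small pattern set. Real PHI covers far more
# (names, addresses, ages over 89, device IDs, ...), which regexes alone miss.
PHI_PATTERNS = {
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "MRN": re.compile(r"\bMRN[:\s]*\d+\b", re.IGNORECASE),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b"),
}

def redact(text: str) -> str:
    """Replace every match of each pattern with a [CATEGORY] tag."""
    for label, pattern in PHI_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

note = "Seen 03/14/2023, MRN: 884213. Call 555-123-4567 or jdoe@example.com."
print(redact(note))
```

Replacing identifiers with category tags, rather than deleting them, keeps the sentence structure readable for downstream research use.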

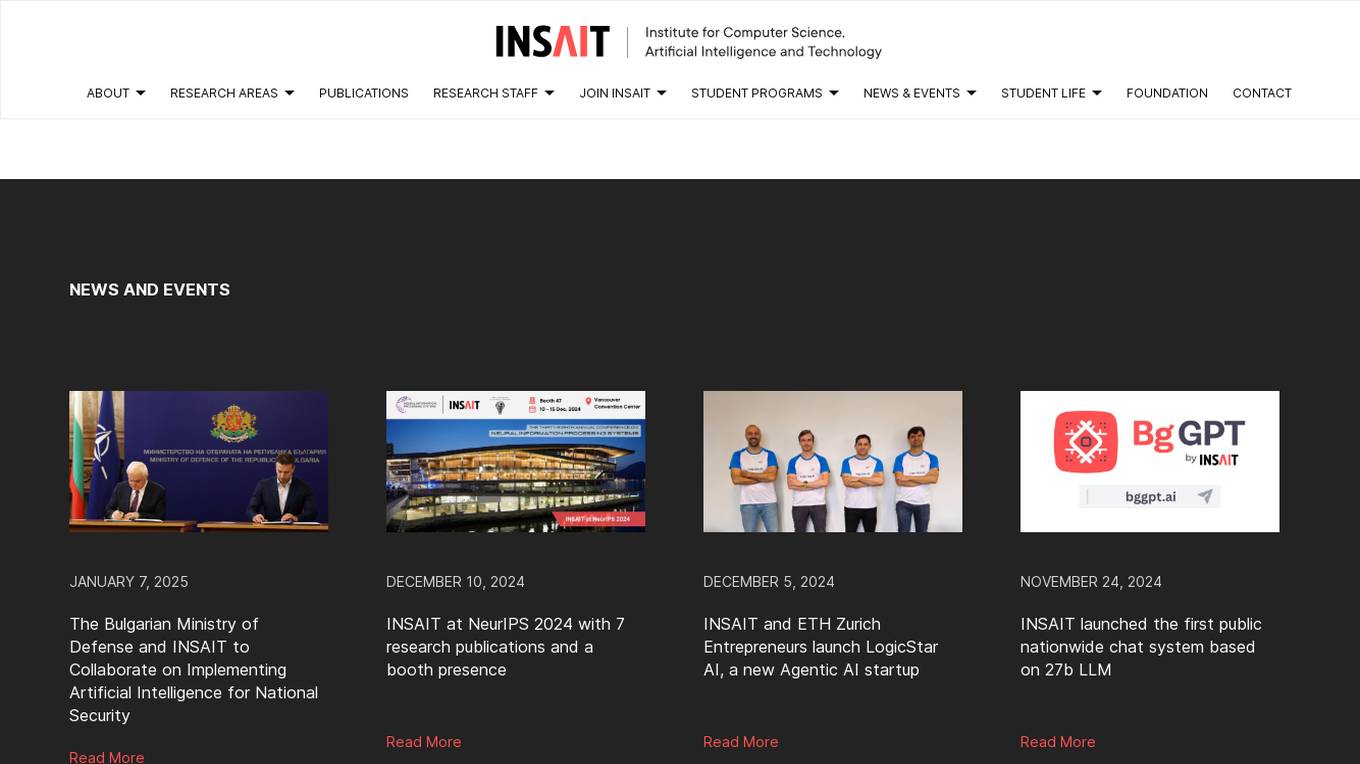

INSAIT

INSAIT (Institute for Computer Science, Artificial Intelligence and Technology) is a research institute in Sofia, Bulgaria. It focuses on research areas such as computer vision, robotics, quantum computing, machine learning, and regulatory AI compliance. INSAIT is known for its collaboration with top universities and organizations and for its commitment to fostering a diverse and inclusive environment for students and researchers.

INMA

INMA (International News Media Association) is a global organization that provides news media companies with resources, networking opportunities, and research on the latest trends in the industry. INMA's mission is to help news media companies succeed in the digital age by providing them with the tools and knowledge they need to adapt to the changing landscape. INMA offers a variety of services to its members, including conferences, webinars, reports, and a member directory. INMA also has a number of initiatives focused on specific areas of the news media industry, such as digital subscriptions, product and technology, and newsroom transformation.

0 Open Source AI Tools

20 OpenAI GPTs

Earth Conscious Voice

Hi ;) Ask me for data & insights gathered from an environmentally aware global community

Biochem Helper: Research's Helper

A helpful guide for biochemical engineers, offering insights and reassurance.

Gas Intellect Pro

Leading-Edge Gas Analysis and Optimization: Adaptable, Accurate, Advanced, developed on OpenAI.

Marine Biologist

Marine biologist studying and conserving ocean life, focusing on ecosystem health and climate effects.

GreenTech Guru

In-depth expertise in green technology, powered by OpenAI.

ClimatePal by Palau

I'm trained on major climate reports from the UN, World Resources Institute, and others. Ask me about climate trends, green energy, and how climate change affects us all. I make complex climate info easy to understand!

IR Spectra Interpreter

Analyzes infrared (IR) spectra, prompts you to upload them, and presents findings in tables.

One atmosphere

I help you evolve your habits and processes to preserve the habitability of the Earth, and much more.

Botanist

Focused on groundbreaking plant biology research for agricultural, medicinal, and environmental advancements.

EcoEconomist

Embrace the economics of ecosystems with EcoEconomist, balancing the scales between economic development and environmental stewardship.

amplio

Expert in global sustainable development topics; provides accurate information with Harvard-style references.