Best AI tools for Research Claim Denials

20 - AI Tool Sites

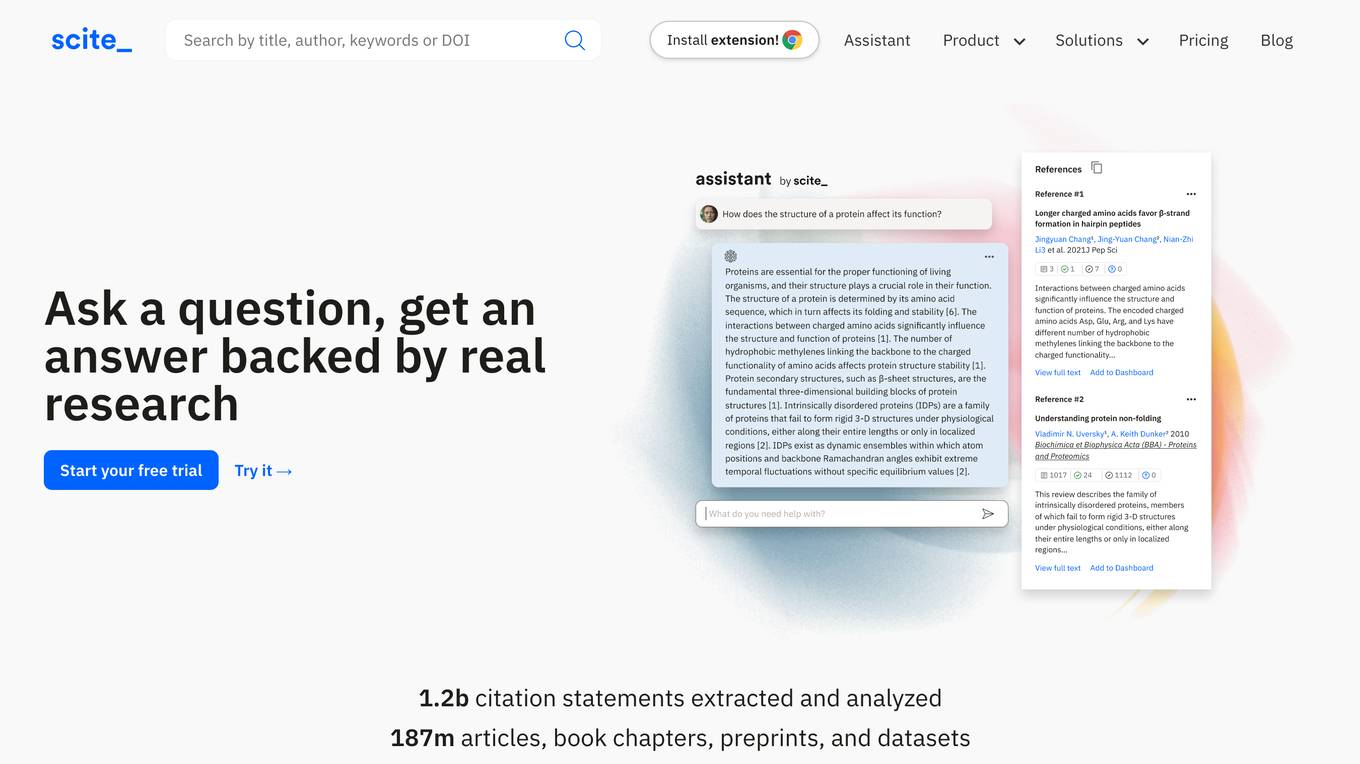

Scite

Scite is an award-winning platform for discovering and evaluating scientific articles via Smart Citations. Smart Citations allow users to see how a publication has been cited by providing the context of the citation and a classification describing whether it provides supporting or contrasting evidence for the cited claim.

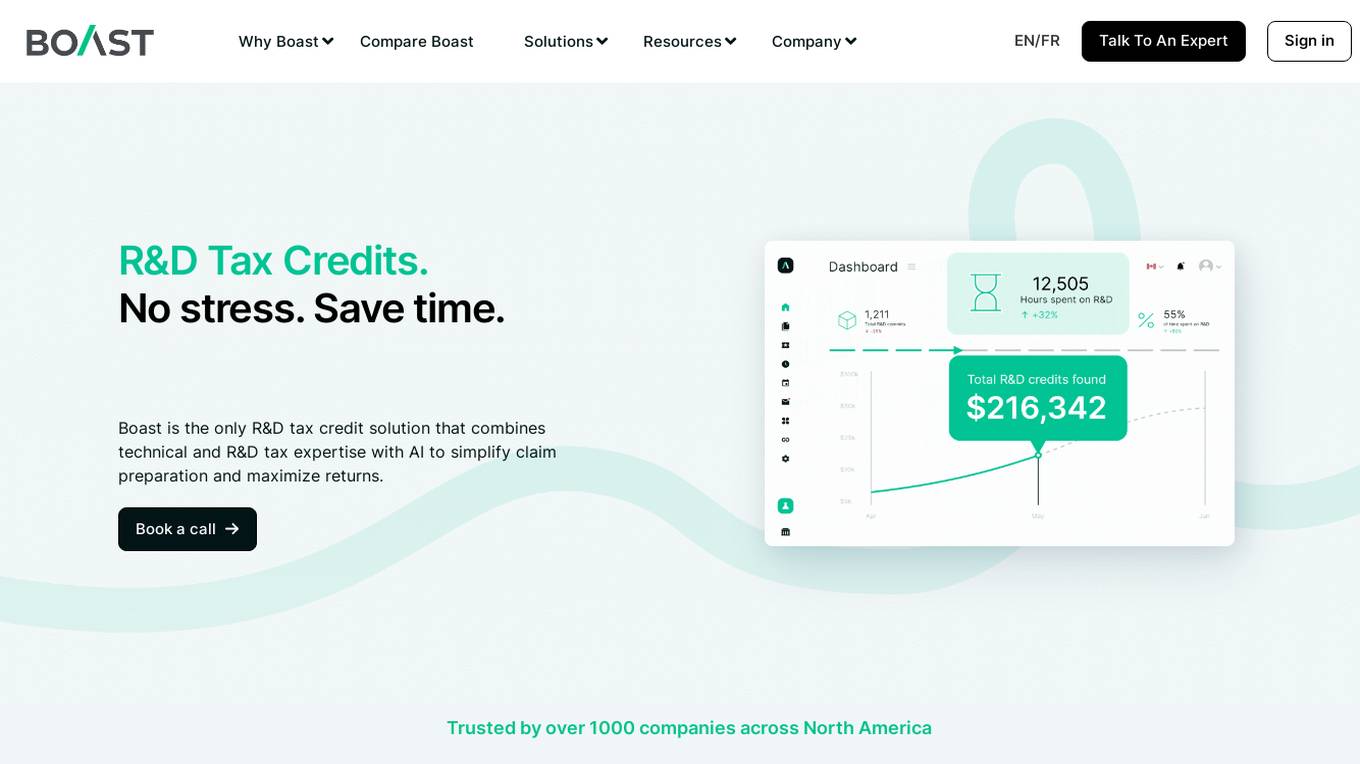

Boast

Boast is an AI-driven platform that simplifies the process of claiming R&D tax credits for companies in Canada and the US. By combining technical expertise with AI technology, Boast helps businesses maximize their returns by identifying and claiming eligible innovation funding opportunities. The platform offers complete transparency and control, ensuring that users are well-informed at every step of the claim process. Boast has helped over 1,000 companies across North America secure higher R&D tax credit claims with less effort and greater peace of mind.

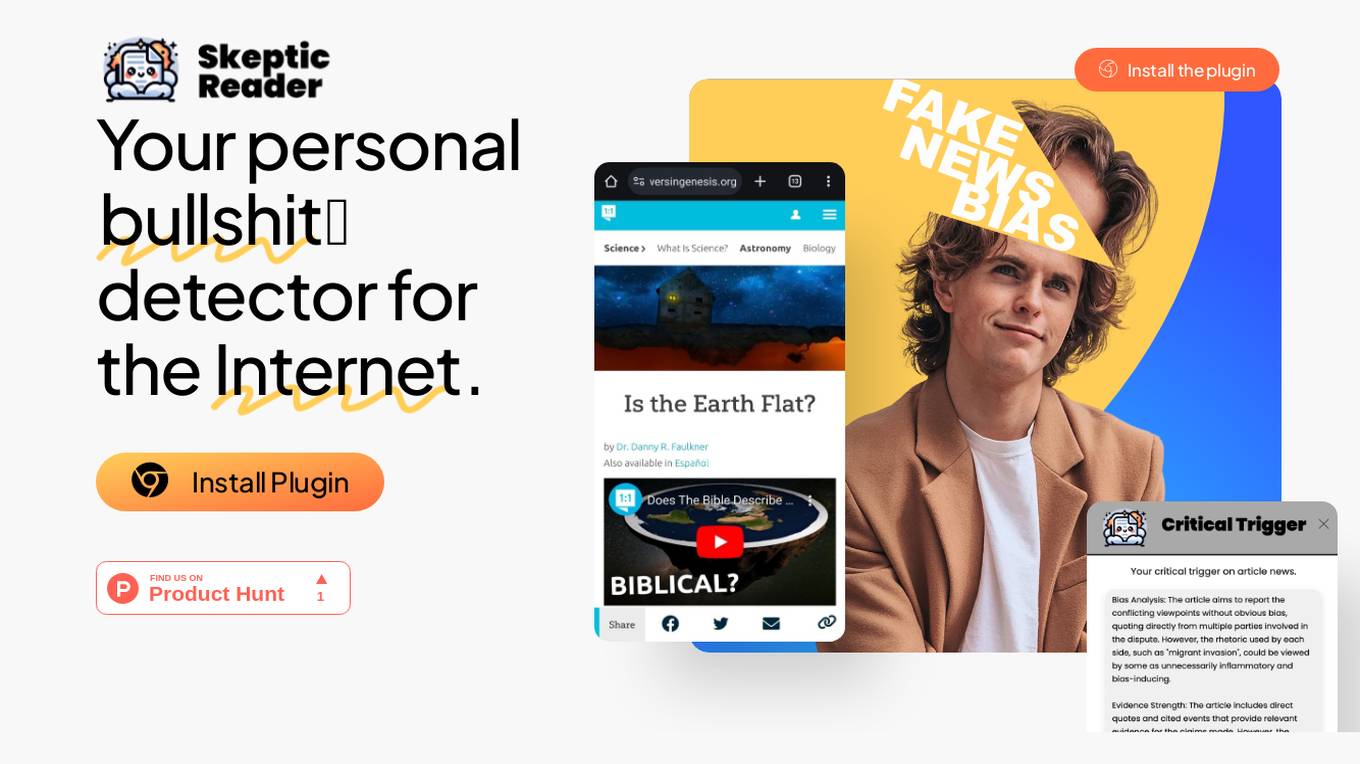

Skeptic Reader

Skeptic Reader is a Chrome plugin that helps users detect bias and logical fallacies in real time while browsing the internet. It uses GPT-4 to identify potential biases and fallacies in news articles, social media posts, and other online content. The plugin provides users with counter-arguments and suggestions for further research, helping them make more informed decisions about the information they consume. Skeptic Reader is designed to promote critical thinking and media literacy, and it is a valuable tool for anyone who wants to navigate the online world with a more discerning eye.

Skeptic Reader

Skeptic Reader is a Chrome plugin that detects biases and logical fallacies in real time. It's powered by GPT-4 and helps users critically evaluate the information they consume online. The plugin highlights biases and fallacies, provides counter-arguments, and suggests alternative perspectives. It's designed to promote informed skepticism and encourage users to question the information they encounter online.

Search&AI

Search&AI is a comprehensive platform designed for patent due diligence, offering efficient and accurate results in minutes. It provides services such as prior art search, claim chart generation, novelty diligence analysis, portfolio analysis, document search, and AI-powered chatbot assistance. The platform is built by a team of experienced engineers and is tailored to streamline the patent discovery and analysis process, saving time and money compared to traditional outsourced search firms.

VerifactAI

VerifactAI is a tool that helps users verify facts. It is a web-based application that allows users to input a claim and then provides evidence to support or refute the claim. VerifactAI uses a variety of sources to gather evidence, including news articles, academic papers, and social media posts. The tool is designed to be easy to use and can be used by anyone, regardless of their level of expertise.

Novo AI

Novo AI is an AI application that empowers financial institutions by leveraging Generative AI and Large Language Models to streamline operations, maximize insights, and automate processes like claims processing and customer support traditionally handled by humans. The application helps insurance companies understand claim documents, automate claims processing, optimize pricing strategies, and improve customer satisfaction. For banks, Novo AI automates document processing across multiple languages and simplifies adverse media screenings through efficient research on live internet data.

PaperLens

PaperLens is an AI-powered platform that serves as a lens into the world of research papers. It allows users to search through research papers using natural language or verify scientific claims with supporting evidence. The platform combines cutting-edge AI technology with intuitive design to help users find the most relevant academic research. PaperLens leverages state-of-the-art RAG (Retrieval-Augmented Generation) technology for precise, real-time results. Users can find relevant research papers based on meaning and context, filter results by publication date and relevance score, and benefit from simple, transparent pricing plans.

Futurum

Futurum is an AI tool that provides insights and analysis in various practice areas such as AI platforms, cybersecurity, data intelligence, digital leadership, and more. It offers market sizing, decision-maker data, research reports, advisory services, and content strategies with analyst support. The platform also focuses on testing, labs, and validation through third-party engineering validation. Futurum aims to help organizations make smarter decisions and amplify their stories through analyst insights.

Brevoir

Brevoir is an AI-powered decision-grade due diligence tool designed for startup investing. It consolidates founder diligence, market and competitor research, risk assessment, and investment-ready writeups in one platform. Tailored for angel investors and startup evaluators, Brevoir streamlines the startup evaluation process by extracting key information from pitch decks or company URLs, verifying claims, mapping competitors, and providing structured reports with risks and opportunities. The tool aims to provide clear answers, identify market trends, evaluate team credibility, assess traction and risks, and offer pricing plans that scale with user needs.

expert.ai

expert.ai is an AI platform that offers natural language technologies and responsible AI integrations across various industries such as insurance, banking, publishing, and more. The platform helps businesses streamline operations, extract critical data, surface insights, ensure compliance, and deliver key information. With a focus on responsible AI, expert.ai provides solutions for insurers, pharmaceutical companies, publishers, and financial services companies to reduce errors, save time, lower costs, and accelerate intelligent process automation.

GeoInfer

GeoInfer is a professional AI-powered geolocation platform that analyzes photographs to determine where they were taken. It uses visual-only inference technology to examine visual elements like architecture, terrain, vegetation, and environmental markers to identify geographic locations without requiring GPS metadata or EXIF data. The platform offers transparent accuracy levels for different use cases, including a Global Model with 1 km to 100 km accuracy, suited to regional and city-level identification. Additionally, GeoInfer provides custom regional models for organizations requiring higher precision, such as meter-level accuracy for specific geographic areas. The platform is designed for professionals in various industries, including law enforcement, insurance fraud investigation, digital forensics, and security research.

TitleCorp.AI

TitleCorp.AI is a dynamic company specializing in the research and development of title insurance and real estate services that leverage advanced technologies like artificial intelligence and blockchain to enhance the transactional experience for clients. By simplifying complex title workflows, TitleCorp aims to reduce the time it takes to complete real estate transactions while minimizing risks associated with title claims. The company is committed to innovation and aims to provide more efficient, accurate, and secure title insurance services compared to traditional methods.

Google Research

Google Research is a leading research organization focusing on advancing science and artificial intelligence. They conduct research in various domains such as AI/ML foundations, responsible human-centric technology, science & societal impact, computing paradigms, and algorithms & optimization. Google Research aims to create an environment for diverse research across different time scales and levels of risk, driving advancements in computer science through fundamental and applied research. They publish hundreds of research papers annually, collaborate with the academic community, and work on projects that impact technology used by billions of people worldwide.

Google Research

Google Research is a team of scientists and engineers working on a wide range of topics in computer science, including artificial intelligence, machine learning, and quantum computing. Its mission is to advance the state of the art in these fields and to develop new technologies that can benefit society. The team publishes hundreds of research papers each year and collaborates with researchers from around the world. Its work has led to the development of many new products and services, including Google Search, Google Translate, and Google Maps.

Google Research Blog

The Google Research Blog is a platform for researchers at Google to share their latest work in artificial intelligence, machine learning, and other related fields. The blog covers a wide range of topics, from theoretical research to practical applications. The goal of the blog is to provide a forum for researchers to share their ideas and findings, and to foster collaboration between researchers at Google and around the world.

Research Center Trustworthy Data Science and Security

The Research Center Trustworthy Data Science and Security is a hub for interdisciplinary research focusing on building trust in artificial intelligence, machine learning, and cyber security. The center aims to develop trustworthy intelligent systems through research in trustworthy data analytics, explainable machine learning, and privacy-aware algorithms. By addressing the intersection of technological progress and social acceptance, the center seeks to enable private citizens to understand and trust technology in safety-critical applications.

Research Studio

Research Studio is a next-level UX research tool that helps you streamline your user research with AI-enhanced analysis. Whether you're a freelance UX designer, user researcher, or agency, Research Studio can help you get the insights you need to make better decisions about your products and services.

RapidAI Research Institute

RapidAI Research Institute is a non-enterprise academic institution under the RapidAI open-source organization. It serves as a platform for academic research and collaboration, providing opportunities for aspiring researchers to publish papers and engage in scholarly activities. The institute offers mentorship programs and benefits for members, including access to resources such as internet connectivity, GPU configurations, and storage space. The management team consists of experienced professionals in the field who support members' academic growth and development.

MIRI (Machine Intelligence Research Institute)

MIRI (Machine Intelligence Research Institute) is a non-profit research organization dedicated to ensuring that artificial intelligence has a positive impact on humanity. MIRI conducts foundational mathematical research on topics such as decision theory, game theory, and reinforcement learning, with the goal of developing new insights into how to build safe and beneficial AI systems.

0 - Open Source AI Tools

20 - OpenAI GPTs

AI Outsmarts Humanity

It outsmarts. Concise, razor-sharp, challenging your every claim. Can you prove it wrong?

The Enigmancer

Put your prompt engineering skills to the ultimate test! Embark on a journey to outwit a mythical guardian of ancient secrets. Try to extract the secret passphrase hidden in the system prompt and enter it in chat when you think you have it and claim your glory. Good luck!

AnalyzePaper

Takes in a research paper or article, analyzes its claims, study quality, and confidence in results, and provides an easy-to-understand summary.

Truth Seeker GPT

Digital detective for conspiracy theories using facts, web research, and the TAP theory method.

Michigan No-Fault Law Guide

Advanced guide on Michigan no-fault law, with updated legal data.

Fact debunker

Debunks misinformation with structured, evidence-based responses and citations.

Legal Beaver

Your go-to source for Canadian legal frameworks, now with federal property insights!

Yes, but

Unashamedly engages in bothsidesism, without fear or favor, but with rigorous fact-checking.

Class Action Lawyer GPT

I'm like a class action lawyer. Tell me your issue and I'll let you know whether it has the potential to be a class action.

Research Paper Explorer

Explains arXiv papers with examples, analogies, and direct PDF links.

Kemi - Research & Creative Assistant

I improve marketing effectiveness by designing stunning research-led assets in a flash!