Best AI Tools for Rerank Search Results

4 - AI Tool Sites

LangSearch

LangSearch is an AI tool that offers a free Web Search API and a Rerank API, and bills itself as the "World Engine for AGI." Developers can connect their LLM applications to it to retrieve clean, accurate, high-quality context from billions of web documents, including news, images, videos, and more. It supports natural-language queries and returns richer result details for each content type.
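As a rough illustration of what a rerank API does in general (this is not LangSearch's actual API), the sketch below takes a query and a list of candidate documents, scores each candidate, and returns them sorted by relevance. The `rerank` function, its `top_n` parameter, and the lexical-overlap scorer `toy_relevance` are all hypothetical; a production reranker would replace the scorer with a learned model such as a cross-encoder.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass
class RerankResult:
    index: int      # position of the document in the input list
    score: float    # relevance score; higher means more relevant
    document: str


def _tokens(text: str) -> set[str]:
    # Lowercased word tokens.
    return set(re.findall(r"\w+", text.lower()))


def toy_relevance(query: str, document: str) -> float:
    # Hypothetical stand-in for a learned reranker: the fraction of
    # distinct query terms that also occur in the document.
    q = _tokens(query)
    if not q:
        return 0.0
    return len(q & _tokens(document)) / len(q)


def rerank(query: str, documents: list[str], top_n: int | None = None) -> list[RerankResult]:
    # Score every candidate, then sort by descending score.
    # Python's sort is stable, so ties keep their original input order.
    results = [RerankResult(i, toy_relevance(query, d), d) for i, d in enumerate(documents)]
    results.sort(key=lambda r: r.score, reverse=True)
    return results if top_n is None else results[:top_n]


docs = [
    "Stock markets closed higher on Friday.",
    "A reranker reorders search results by relevance to the query.",
    "Rerank APIs score each document against the query.",
]
for r in rerank("how does a rerank API score search results", docs, top_n=2):
    print(r.index, r.score)
# 1 0.375
# 2 0.25
```

Returning the original `index` alongside each score lets the caller map reranked results back to its own records without resending the documents.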

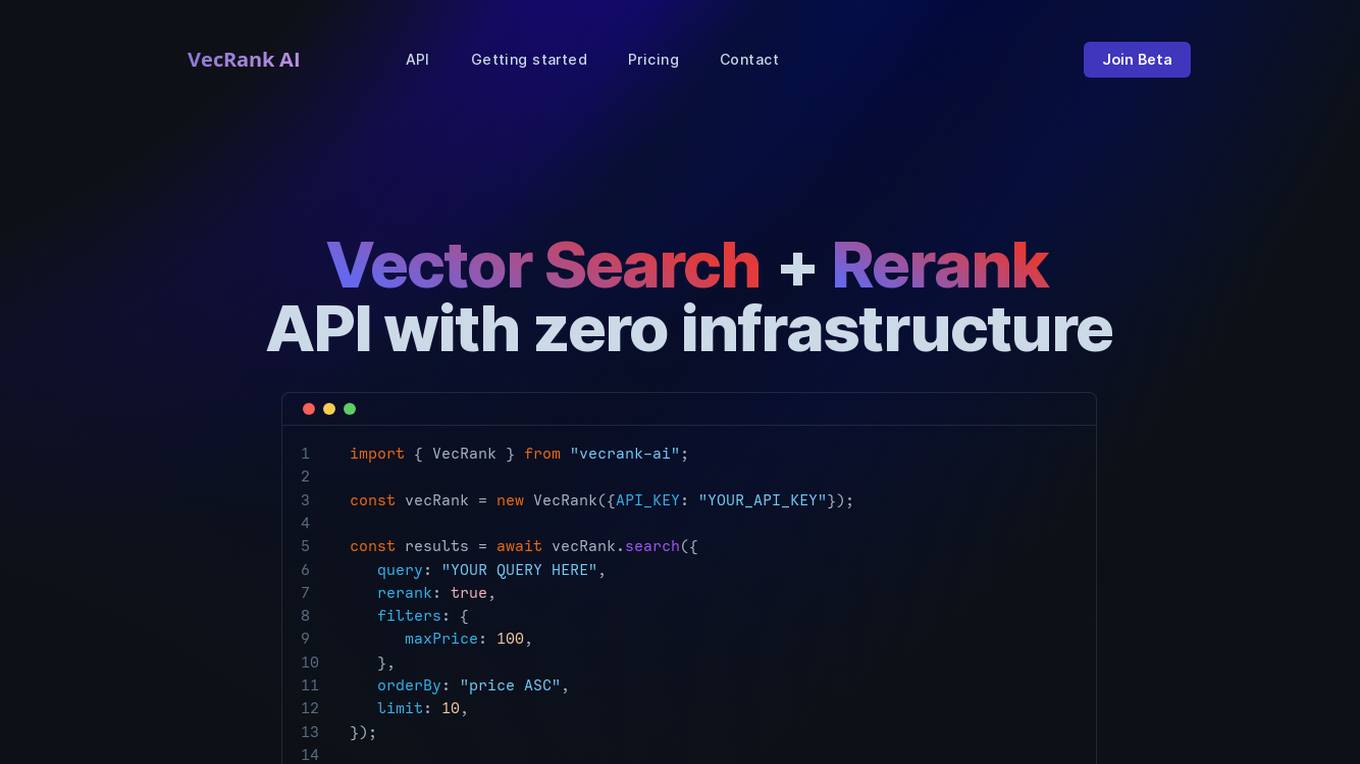

VecRank

VecRank is an AI-powered vector search and reranking API service that uses GenAI technologies to improve natural-language understanding and the contextual relevance of search results. It is aimed at software developers and business owners who want scalable, AI-driven search without setting up their own infrastructure for embeddings and vector search databases. The service supports bulk data upload and incremental data updates, and it can be integrated from a range of programming languages and platforms.
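The vector search half of a service like VecRank can be pictured with the minimal in-memory index below. It is a sketch under stated assumptions, not VecRank's API: `InMemoryVectorIndex`, `upsert`, and `search` are hypothetical names, the two-dimensional vectors stand in for model-generated embeddings, and the brute-force cosine search stands in for the approximate nearest-neighbour index a real service would likely use.

```python
from __future__ import annotations

import math


def cosine(a: list[float], b: list[float]) -> float:
    # Cosine similarity: dot product divided by the product of the vector norms.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class InMemoryVectorIndex:
    """Hypothetical toy index; not VecRank's API."""

    def __init__(self) -> None:
        self._vectors: dict[str, list[float]] = {}

    def upsert(self, items: dict[str, list[float]]) -> None:
        # One path for bulk uploads (many items) and incremental updates (a few):
        # new ids are inserted, existing ids are overwritten.
        self._vectors.update(items)

    def search(self, query: list[float], k: int = 3) -> list[tuple[str, float]]:
        # Brute-force nearest neighbours by cosine similarity, best match first.
        scored = [(doc_id, cosine(query, vec)) for doc_id, vec in self._vectors.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


index = InMemoryVectorIndex()
index.upsert({"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.7, 0.7]})  # bulk upload
index.upsert({"b": [0.9, 0.1]})  # incremental update replaces the vector for "b"
print([doc_id for doc_id, _ in index.search([1.0, 0.0], k=2)])  # ['a', 'b']
```

Before the incremental update, the second hit would have been `c`; after it, the revised vector for `b` ranks higher. In a two-stage pipeline, the top-k hits from this step would then be passed to a reranker.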

Cohere

Cohere describes itself as the leading AI platform for enterprise, with products optimized for generative AI, search and discovery, and advanced retrieval. Its models are designed to enhance the global workforce and help businesses thrive in the AI era. The product line includes Command R+, Cohere Command, Cohere Embed, and Cohere Rerank for building efficient AI-powered applications. Cohere also supports enterprise-grade deployment on any cloud or on-premises, and offers developer resources such as the Playground, LLM University, and Developer Docs.

Cohere

Cohere is an enterprise AI platform offering generative AI, search and discovery, and advanced retrieval solutions. Its models are designed to enhance the global workforce and help businesses thrive in the AI era. With Cohere Command, Cohere Embed, and Cohere Rerank, developers can build scalable, efficient AI-powered applications. Cohere's language models are built to work with enterprise data and support more than 100 languages.

1 - Open Source AI Tools

Rankify

Rankify is a Python toolkit for unified retrieval, re-ranking, and retrieval-augmented generation (RAG) research. It integrates 40 pre-retrieved benchmark datasets and supports 7 retrieval techniques, 24 state-of-the-art re-ranking models, and multiple RAG methods. Its modular, extensible design lets users swap components and benchmark complete retrieval pipelines. The project is open source and ships with comprehensive documentation and pre-built evaluation tools, which makes it useful to both researchers and practitioners.
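Benchmarking re-rankers usually comes down to comparing ranking metrics before and after re-ranking. The sketch below computes Recall@k and mean reciprocal rank (MRR) on toy data; the function names and document ids are hypothetical and do not reflect Rankify's own evaluation API.

```python
from __future__ import annotations


def recall_at_k(ranked_ids: list[str], relevant: set[str], k: int) -> float:
    # Fraction of relevant documents that appear in the top-k results.
    if not relevant:
        return 0.0
    return len(set(ranked_ids[:k]) & relevant) / len(relevant)


def reciprocal_rank(ranked_ids: list[str], relevant: set[str]) -> float:
    # 1 / (rank of the first relevant document), or 0 if none is retrieved.
    for rank, doc_id in enumerate(ranked_ids, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(runs: list[tuple[list[str], set[str]]]) -> float:
    # Average reciprocal rank over (ranking, relevant-set) pairs, one per query.
    if not runs:
        return 0.0
    return sum(reciprocal_rank(r, rel) for r, rel in runs) / len(runs)


# Compare a first-stage ranking with a re-ranked one for the same query (toy data).
relevant = {"d3"}
retrieved = ["d1", "d2", "d3", "d4"]
reranked = ["d3", "d1", "d2", "d4"]
print(mean_reciprocal_rank([(retrieved, relevant)]))  # 0.3333333333333333
print(mean_reciprocal_rank([(reranked, relevant)]))   # 1.0
print(recall_at_k(retrieved, relevant, k=2), recall_at_k(reranked, relevant, k=2))  # 0.0 1.0
```

Recall@k measures whether relevant documents make it into the candidate set at all, while MRR rewards placing the first relevant document near the top, which is the main thing a re-ranker is expected to improve.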