Best AI tools for Refine Data

20 - AI Tool Sites

Basejump AI

Basejump AI is an AI-powered data access tool that allows users to interact with their database using natural language queries. It empowers teams to access data quickly and easily, providing instant insights and eliminating the need to navigate through complex dashboards. With Basejump AI, users can explore data, save relevant information, create custom collections, and refine datapoints to meet their specific requirements. The tool ensures data accuracy by allowing users to compare datapoints side by side. Basejump AI caters to various industries such as healthcare, HR, and software, offering real-time insights and analytics to streamline decision-making processes and optimize workflow efficiency.

Sagehood

Sagehood is an AI-driven platform that provides real-time market intelligence for investors. It offers intelligent analysis, deep-dive analysis, strategic execution, and intelligent synthesis to help users stay ahead in a fast-moving financial market. The platform analyzes large volumes of data to produce actionable insights, with the aim of optimizing investment strategies and improving decision-making. With a focus on minimizing bias while maximizing insight, Sagehood offers tailored intelligence to help users make data-driven investment decisions.

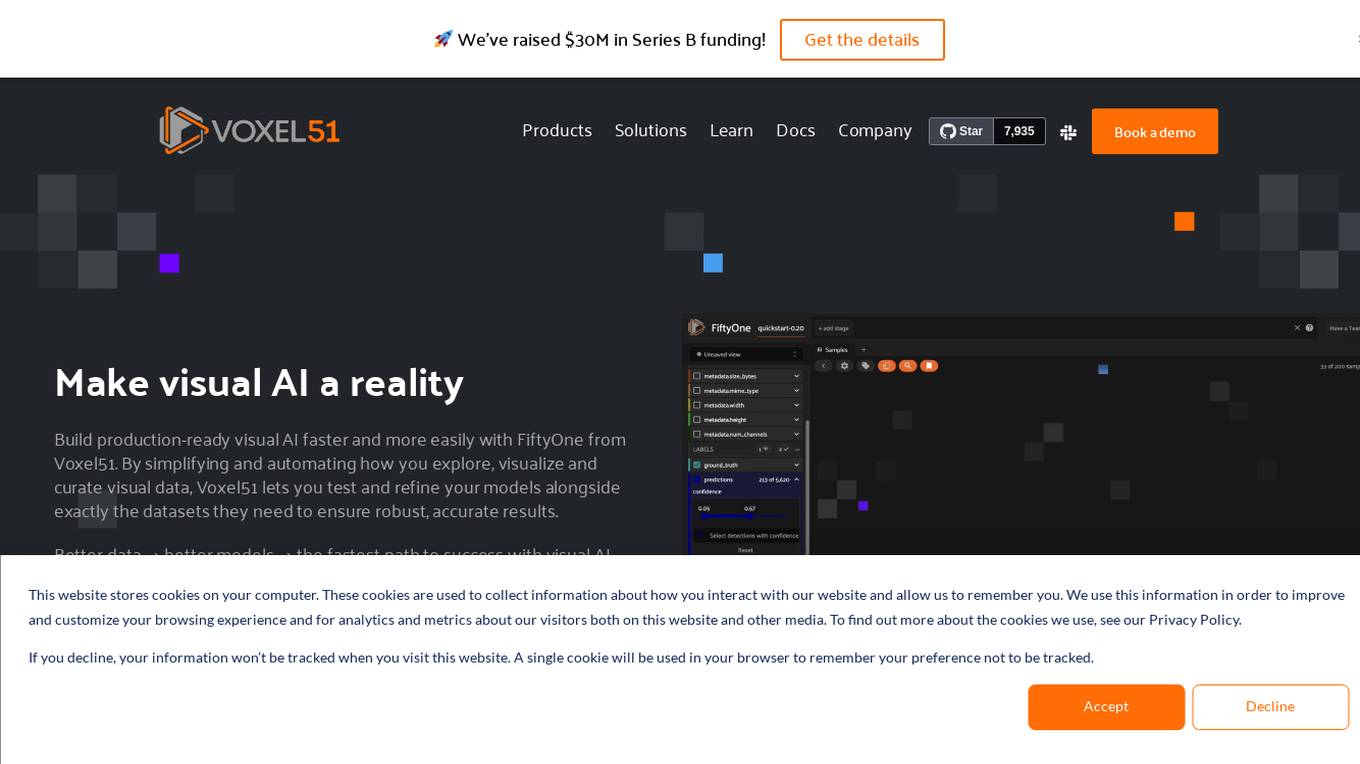

Voxel51

Voxel51 provides open-source computer vision tools for machine learning. It offers solutions for industries such as agriculture, aviation, driving, healthcare, manufacturing, retail, robotics, and security. Voxel51's main product, FiftyOne, helps users explore, visualize, and curate visual data to improve model performance and accelerate the development of visual AI applications. The platform is trusted by thousands of users and companies, and it offers both open-source and enterprise-ready solutions for managing and refining data and models for visual AI.

Globose Technology Solutions

Globose Technology Solutions Pvt Ltd (GTS) is an AI data collection company that provides image, video, text, and speech datasets, among others, for training machine learning models. It offers premium data collection services with a human touch, aiming to refine AI vision and propel AI forward. With more than 25 years of experience, the company specializes in data management, annotation, and effective data collection techniques for AI/ML. It focuses on unlocking high-quality data, understanding AI's transformative impact, and ensuring data accuracy as the backbone of reliable AI.

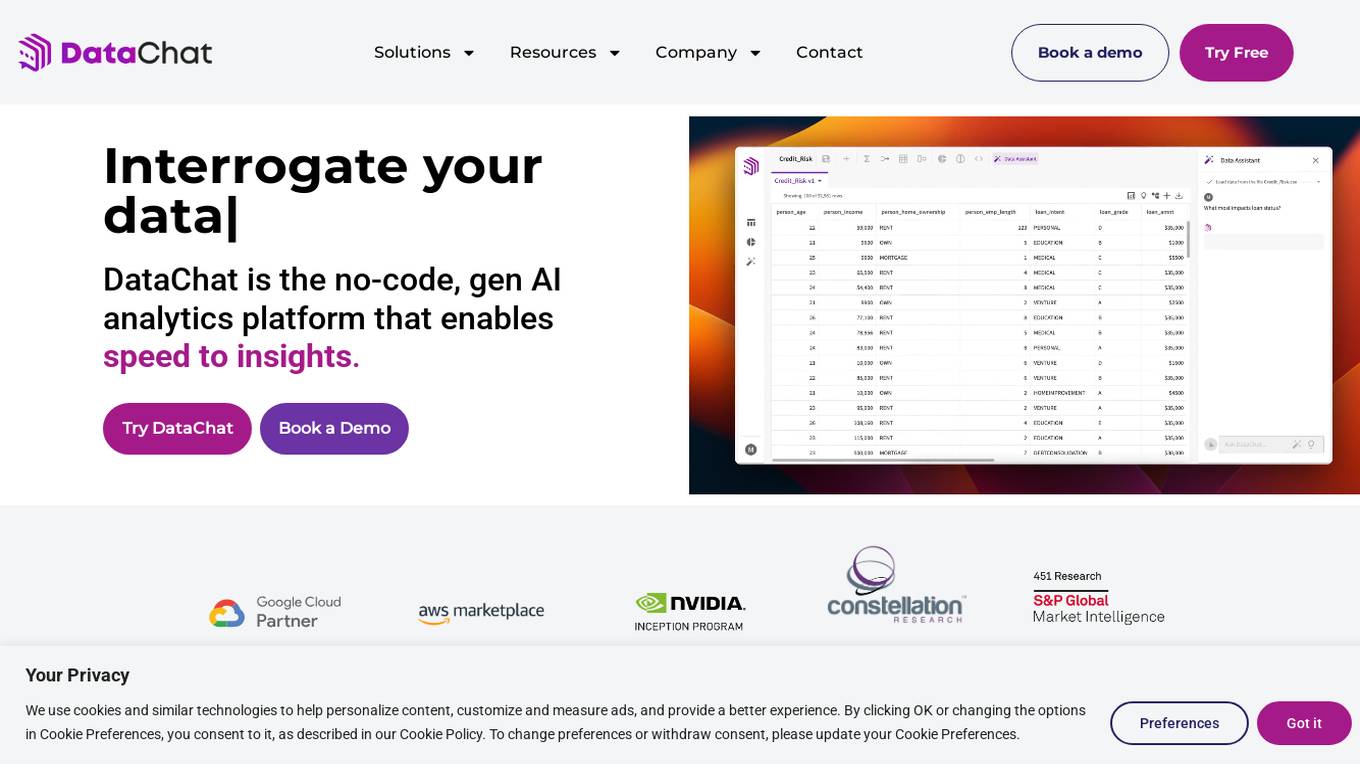

DataChat

DataChat is a no-code, AI analytics platform that enables business users to ask questions of their data in plain English, empowering them to gain insights quickly and efficiently. The platform allows users to refine questions, reshape data, and adjust insights without any coding required. DataChat ensures transparency in the workflow and maintains data security by performing all computations within the user's data warehouse. With DataChat, users can easily access real-time insights and make data-driven decisions to enhance their business operations.

Grro

Grro is an AI-powered platform that provides audience insights for over 550,000 English podcasts. It offers data-driven insights to help podcast creators understand their audience better, identify niche segments, and leverage marketing potential. With weekly updates, Grro helps users refine their content strategy, find partnership opportunities, and analyze viral reach. The platform aims to empower podcasters with valuable information to make informed decisions and enhance their podcasting experience.

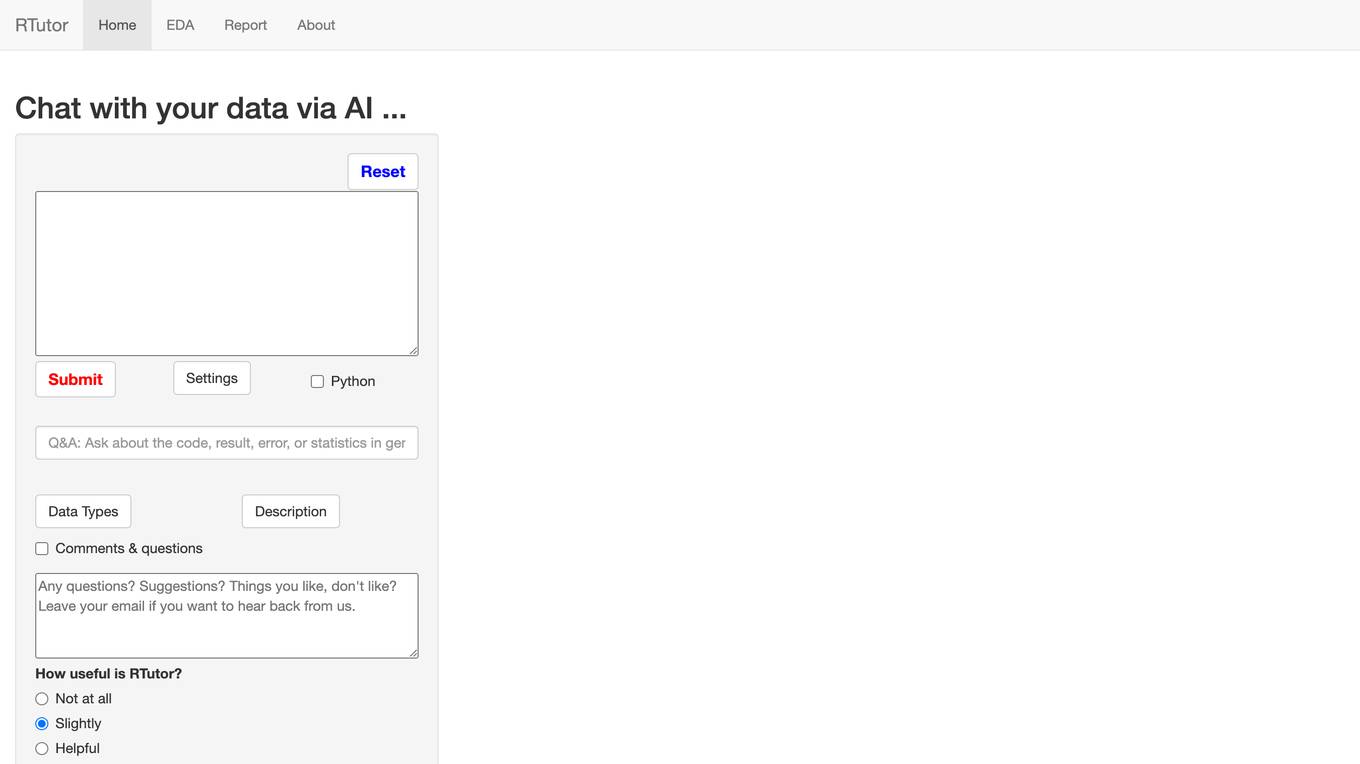

RTutor

RTutor is an AI tool that utilizes OpenAI's large language models to translate natural language into R or Python code for data analysis. Users can upload data in various formats, ask questions, and receive results in plain English. The tool allows for exploring data, generating basic plots, and gradually adding complexity to the analysis. RTutor can only analyze traditional statistics data where rows are observations and columns are variables. It offers a comprehensive EDA (Exploratory Data Analysis) report and provides code chunks for analysis.
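The data layout RTutor expects (one observation per row, one variable per column) can be illustrated with a small sketch. This is not RTutor's code or output; the sample data and the `summarize` helper are hypothetical, and the summary is only a rough stand-in for a first exploratory pass.

```python
import csv
import io
import statistics

# Hypothetical sample: each row is one observation, each column one variable.
RAW = """species,height_cm,weight_kg
oak,120.0,30.5
pine,95.5,22.0
oak,130.5,33.5
"""

def summarize(csv_text):
    """Return a per-column summary of a rows-by-variables table."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    summary = {}
    for column in rows[0].keys():
        values = [row[column] for row in rows]
        try:
            numbers = [float(v) for v in values]
        except ValueError:
            # Non-numeric column: report its distinct levels.
            summary[column] = {"type": "categorical", "levels": sorted(set(values))}
        else:
            # Numeric column: report basic descriptive statistics.
            summary[column] = {
                "type": "numeric",
                "mean": statistics.mean(numbers),
                "min": min(numbers),
                "max": max(numbers),
            }
    return summary

if __name__ == "__main__":
    for name, info in summarize(RAW).items():
        print(name, info)
```

Data that does not fit this shape, such as free text or nested records, would need to be reshaped into a table before a tool with this constraint could use it.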

Namique

Namique is an AI-powered name generator that helps businesses create short, brandable, and memorable names. It utilizes an advanced AI model to generate unique and attention-grabbing names. Namique also offers custom filters to help businesses find the perfect name for their brand. Additionally, Namique provides discounts on domain purchases when a name generated by Namique is used.

CVBee.ai

CVBee.ai is an AI-powered online CV maker that offers a comprehensive solution for creating, optimizing, and refining professional resumes. The platform utilizes artificial intelligence to generate CVs from users' career background, enhance existing CVs with industry-specific keywords, and provide format and structure suggestions. With features like iterative refinement and keyword optimization, CVBee.ai aims to help job seekers craft job-winning resumes that stand out in Applicant Tracking Systems (ATS) and increase their chances of landing interviews.

Penome

Penome is an AI-powered product management platform that empowers users to define clear goals, identify key drivers of success, and build roadmaps based on data and AI insights. It offers features like Penome Copilot for automating tasks, feedback management system, standardized goal framework, idea management with AI refinement, product portfolio management, initiatives & epics for impact analysis, and roadmapping & prioritization tools. Penome focuses on transparency, objectivity, and data-driven decisions to help organizations achieve strategic alignment and maximize productivity.

Mimir

Mimir is an AI-native product management tool that helps users figure out what to build next by importing or uploading feedback, interviews, or metrics. It provides evidence-backed recommendations, refines them in chat, and generates AI agent-ready specs. Mimir stands out by creating GitHub issues from recommendations with complete specs and implementation tasks, enabling users to ship features in hours. The tool extracts structured insights, clusters them into themes, and generates prioritized recommendations based on product management best practices. Mimir learns from every interaction, aligning recommendations with the user's business context over time.

Capitol AI

Capitol AI is an AI tool designed to help users create persuasive content from data. It is currently in beta, and its AI-generated content may be incorrect or misleading. Users supply data inputs, and the platform generates content based on them. Capitol AI aims to streamline the content creation process and provide users with valuable insights to enhance their communication strategies.

Pongo

Pongo is an AI-powered tool that helps reduce hallucinations in Large Language Models (LLMs) by up to 80%. It utilizes multiple state-of-the-art semantic similarity models and a proprietary ranking algorithm to ensure accurate and relevant search results. Pongo integrates seamlessly with existing pipelines, whether using a vector database or Elasticsearch, and processes top search results to deliver refined and reliable information. Its distributed architecture ensures consistent latency, handling a wide range of requests without compromising speed. Pongo prioritizes data security, operating at runtime with zero data retention and no data leaving its secure AWS VPC.
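The general idea of reranking top search results with several similarity signals can be sketched as follows. Pongo's models and ranking algorithm are proprietary, so nothing here reflects its actual implementation: the two scorers (`token_jaccard`, `trigram_overlap`) are simple lexical stand-ins for semantic similarity models, and the plain average in `rerank` is an assumption made for illustration.

```python
def token_jaccard(query: str, doc: str) -> float:
    """Jaccard similarity over lowercase whitespace tokens."""
    q, d = set(query.lower().split()), set(doc.lower().split())
    union = q | d
    return len(q & d) / len(union) if union else 0.0

def trigram_overlap(query: str, doc: str) -> float:
    """Jaccard similarity over character trigrams."""
    def grams(text: str) -> set:
        text = text.lower()
        return {text[i:i + 3] for i in range(len(text) - 2)}
    q, d = grams(query), grams(doc)
    union = q | d
    return len(q & d) / len(union) if union else 0.0

def rerank(query, candidates, scorers=(token_jaccard, trigram_overlap), top_k=3):
    """Order candidates by the mean score across all scorers, best first."""
    def combined(doc: str) -> float:
        return sum(score(query, doc) for score in scorers) / len(scorers)
    return sorted(candidates, key=combined, reverse=True)[:top_k]

if __name__ == "__main__":
    # Hypothetical results returned by a first-stage search.
    hits = [
        "Billing and invoices",
        "Password reset email not arriving",
        "How to reset your password",
    ]
    for doc in rerank("reset password", hits, top_k=2):
        print(doc)
```

In a pipeline of this kind, the first-stage search (a vector database or Elasticsearch) would supply `candidates`, and only the reranked top results would be passed to the LLM.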

ExcelMaster

ExcelMaster is an AI-powered Excel formula and VBA assistant that aims to provide human-level expertise. It can generate formulas, fix or explain existing formulas, teach formula skills, and draft and refine VBA scripts. ExcelMaster describes itself as the first product of its kind to handle real-world Excel structure and solve complicated formula and VBA assignments, and it claims to outperform other “toy” formula bots, Copilot, and ChatGPT.

CHAPTR

CHAPTR is an innovative AI solutions provider that aims to redefine work and fuel human innovation. They offer AI-driven solutions tailored to empower, innovate, and transform work processes. Their products are designed to enhance efficiency, foster creativity, and anticipate change in the modern workforce. CHAPTR's solutions are user-centric, secure, customizable, and backed by the Holtzbrinck Publishing Group. They are committed to relentless innovation and continuous advancement in AI technology.

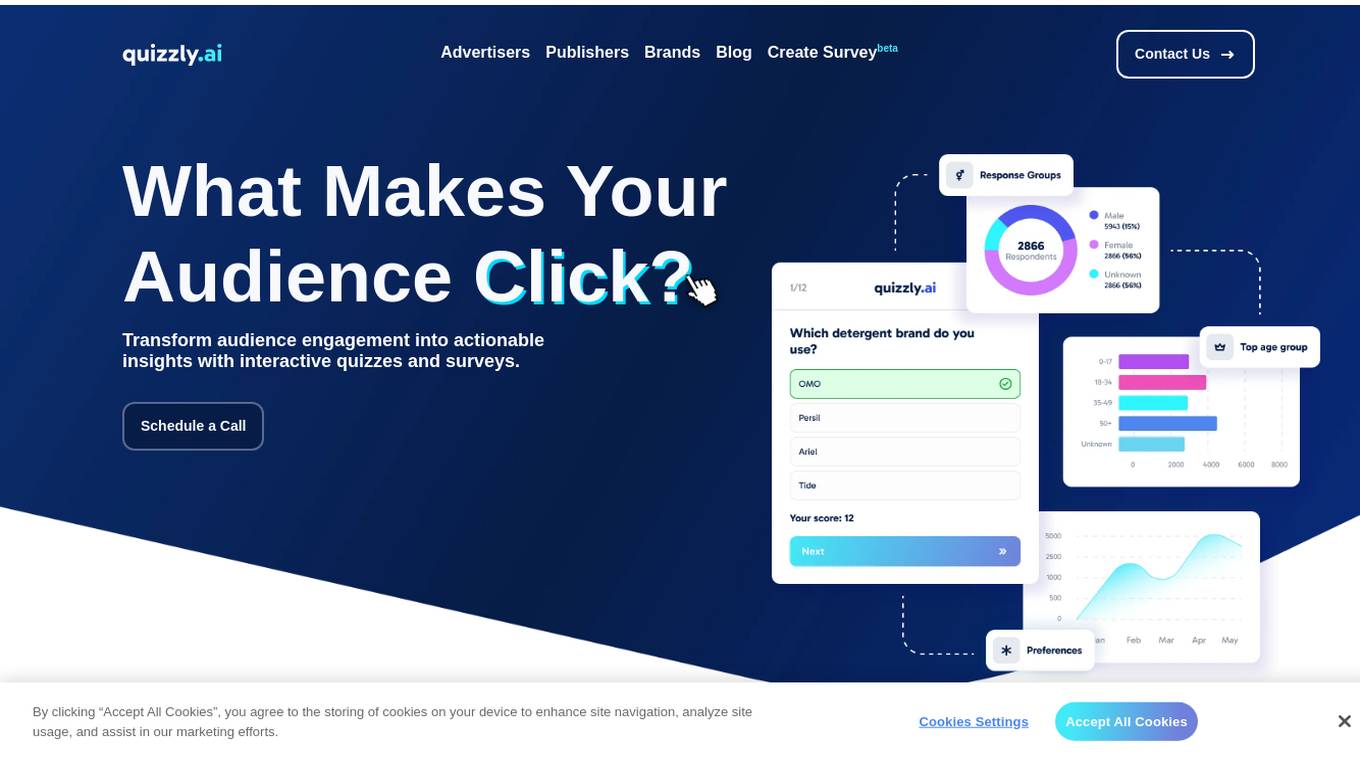

Quizzly

Quizzly is an AI-powered platform that revolutionizes audience engagement and data insights for advertisers and publishers. It offers interactive quizzes and surveys to help businesses understand their audience better, drive conversions, and refine marketing strategies. With a patented quiz format and contextual quizzes, Quizzly delivers actionable insights that lead to real performance marketing results. Trusted by global brands and publishers, Quizzly is designed to enhance engagement, reduce bounce rates, and monetize effectively.

NeoPrompts

NeoPrompts is an AI-powered prompt optimization tool designed to help businesses enhance their efficiency by providing tailored prompts for various industries. With a vast library of 25,000 optimized prompts, NeoPrompts ensures clear and precise instructions to achieve accurate results in AI applications. The tool reduces ambiguity, enhances clarity, and offers prompt customization for image and video generation. NeoPrompts aims to be the best copilot for ChatGPT users, offering prompt refinement and boosting productivity by up to 35%. Users can access free trials and advanced features to optimize prompts, chat with ChatGPT-4o, and enroll in courses for enhanced AI capabilities.

Inworld

Inworld is an AI framework designed for games and media, offering a production-ready framework for building AI agents with client-side logic and local model inference. It provides tools optimized for real-time data ingestion, low latency, and massive scale, enabling developers to create engaging and immersive experiences for users. Inworld allows for building custom AI agent pipelines, refining agent behavior and performance, and seamlessly transitioning from prototyping to production. With support for C++, Python, and game engines, Inworld aims to future-proof AI development by integrating 3rd-party components and foundational models to avoid vendor lock-in.

Pixis

Pixis is a codeless AI infrastructure designed for growth marketing, offering purpose-built AI solutions to scale demand generation. The platform leverages transparent AI infrastructure to optimize campaign results across platforms, with features such as targeting AI, creative AI, and performance AI. Pixis helps reduce customer acquisition cost, generate creative assets quickly, refine audience targeting, and deliver contextual communication in real-time. The platform also provides an AI savings calculator to estimate the returns from leveraging its codeless AI infrastructure for marketing. With success stories showcasing significant improvements in various marketing metrics, Pixis aims to empower businesses to unlock the capabilities of AI for enhanced performance and results.

BERA.ai

BERA.ai is an advanced brand management tracking software that offers solutions for brand positioning, tracking, competitive intelligence, and conversion funnel analysis. It connects brand strategy to business outcomes, enabling users to measure, predict, and optimize the financial impact of their brand. With AI-powered insights, census-matched data, and predictive analytics, BERA.ai helps users make smarter decisions, prioritize high-value audiences, and drive measurable growth. The platform integrates brand data into the marketing ecosystem, providing intelligence to outmaneuver competitors and maximize ROI.

1 - Open Source AI Tools

dataformer

Dataformer is a robust framework for creating high-quality synthetic datasets for AI, offering speed, reliability, and scalability. It empowers engineers to rapidly generate diverse datasets grounded in proven research methodologies, enabling them to prioritize data excellence and achieve superior standards for AI models. Dataformer integrates with multiple LLM providers using one unified API, allowing parallel async API calls and caching responses to minimize redundant calls and reduce operational expenses. Leveraging state-of-the-art research papers, Dataformer enables users to generate synthetic data with adaptability, scalability, and resilience, shifting their focus from infrastructure concerns to refining data and enhancing models.
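The combination of parallel async calls and response caching described above can be sketched in a few lines. This is not Dataformer's API: `fake_llm_call` and `CachedAsyncClient` are hypothetical names, and the provider call is simulated so the example runs without network access.

```python
import asyncio
import hashlib

# Hypothetical stand-in for a real LLM provider call; not part of Dataformer.
async def fake_llm_call(prompt: str) -> str:
    await asyncio.sleep(0.01)  # simulate network latency
    return f"response to: {prompt}"

class CachedAsyncClient:
    """Runs prompts concurrently and reuses responses for repeated prompts."""

    def __init__(self, call, max_concurrency: int = 4):
        self._call = call
        self._max_concurrency = max_concurrency
        self._cache = {}    # prompt hash -> response
        self.api_calls = 0  # calls that actually reached the provider

    @staticmethod
    def _key(prompt: str) -> str:
        return hashlib.sha256(prompt.encode("utf-8")).hexdigest()

    async def _fetch(self, prompt: str, semaphore: asyncio.Semaphore) -> str:
        async with semaphore:  # cap the number of in-flight requests
            self.api_calls += 1
            return await self._call(prompt)

    async def generate(self, prompts):
        semaphore = asyncio.Semaphore(self._max_concurrency)
        # Collect each uncached prompt once, even if it appears several times.
        pending = {}
        for prompt in prompts:
            key = self._key(prompt)
            if key not in self._cache and key not in pending:
                pending[key] = prompt
        # Issue all outstanding requests concurrently.
        responses = await asyncio.gather(
            *(self._fetch(p, semaphore) for p in pending.values())
        )
        for key, response in zip(pending, responses):
            self._cache[key] = response
        # Return responses in the same order as the input prompts.
        return [self._cache[self._key(p)] for p in prompts]

if __name__ == "__main__":
    client = CachedAsyncClient(fake_llm_call)
    print(asyncio.run(client.generate(["a", "b", "a"])))
    print("provider calls:", client.api_calls)
```

The cache here lives in memory for the lifetime of the client; a persistent cache would be needed to avoid repeated calls across separate runs.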

20 - OpenAI GPTs

Data Analysis Prompt Engineer

Specializes in creating, refining, and testing data analysis prompts based on user queries.

Complex Knowledge Atomizer

I refine complex knowledge into granular, integrated solutions.

GPT Builder V2.4 (by GB)

Craft and refine GPTs. Join our Reddit community: https://www.reddit.com/r/GPTreview/

Neural Network Creator

Assists with creating, refining, and understanding neural networks.

Search Helper with Henk van Ess and Translation

Refines search queries with specific terms and includes Google links.

Acronym Finder

I'm an acronym finder, ranking possibilities and refining with context. I can also suggest acronyms given a term or phrase.

GPT Search & Finderr

Optimized with advanced search operators for refined results. Specializing in finding and linking top custom GPTs from builders around the world. Version 0.3.0

Refine Product Management Enhancement Document

I help refine product enhancements. Logic - Essential Details - Business Value

Startup Business Validator

Refine your startup strategy with Startup Business Validator: Dive into SWOT, Business Model Canvas, PESTEL, and more for comprehensive insights. Got just an idea? We'll craft the details for you.

SCI论文润色修改ByZZJ

I refine academic writing, list edits in a table, and provide the final paragraph.

Prompt Hero

Write prompts like a professional! I refine user prompts for optimal ChatGPT responses. Type "Start" to begin.