Best AI tools for Pre-training

20 - AI tool Sites

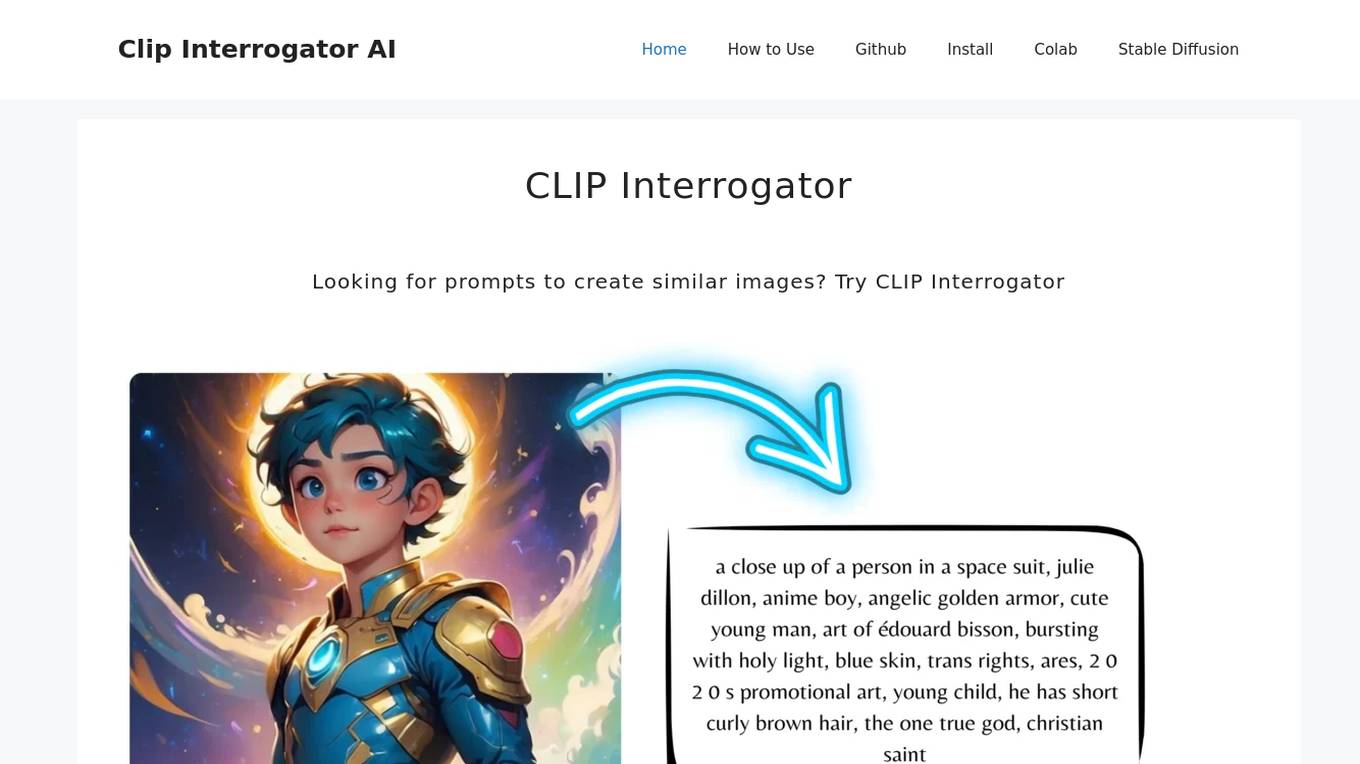

CLIP Interrogator

CLIP Interrogator is a tool that uses the CLIP (Contrastive Language–Image Pre-training) model to analyze images and generate descriptive text or tags. It effectively bridges the gap between visual content and language by interpreting the contents of images through natural language descriptions. The tool is particularly useful for understanding or replicating the style and content of existing images, as it helps in identifying key elements and suggesting prompts for creating similar imagery.
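The matching idea behind this kind of tool can be sketched in a few lines. The example below is a minimal illustration, not the tool's actual code: it assumes an image and a set of candidate phrases have already been mapped to embedding vectors by a CLIP-style model, and it ranks the phrases by cosine similarity to the image. The function names `cosine` and `rank_prompts`, the three-dimensional vectors, and the phrases are all hypothetical; real CLIP embeddings have hundreds of dimensions.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_prompts(image_embedding, text_embeddings, top_k=2):
    """Return the top_k phrases whose embeddings are closest to the image."""
    scored = [
        (cosine(image_embedding, vec), phrase)
        for phrase, vec in text_embeddings.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [phrase for _, phrase in scored[:top_k]]

# Toy embeddings (assumed values, for illustration only).
image_embedding = [0.9, 0.1, 0.3]
text_embeddings = {
    "a watercolor landscape": [0.8, 0.2, 0.4],
    "a photo of a cat": [0.1, 0.9, 0.2],
    "an oil painting of mountains": [0.7, 0.0, 0.6],
}

best = rank_prompts(image_embedding, text_embeddings)
print(best)
```

With these toy values the two landscape-style phrases score highest, which is the sense in which the best-matching phrases can be suggested as a prompt for similar imagery.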

FinetuneFast

FinetuneFast is an AI tool designed to help developers, indie makers, and businesses to efficiently finetune machine learning models, process data, and deploy AI solutions at lightning speed. With pre-configured training scripts, efficient data loading pipelines, and one-click model deployment, FinetuneFast streamlines the process of building and deploying AI models, saving users valuable time and effort. The tool is user-friendly, accessible for ML beginners, and offers lifetime updates for continuous improvement.

Viso Suite

Viso Suite is a no-code computer vision platform that enables users to build, deploy, and scale computer vision applications. It provides a comprehensive set of tools for data collection, annotation, model training, application development, and deployment. Viso Suite is trusted by leading Fortune Global companies and has been used to develop a wide range of computer vision applications, including object detection, image classification, facial recognition, and anomaly detection.

WowTo

WowTo is an all-in-one support video platform that helps businesses create how-to videos, host video knowledge bases, and provide in-app video help. With WowTo's AI-powered video creator, businesses can easily create step-by-step how-to videos without any prior design expertise. WowTo also offers a variety of pre-made video knowledge base layouts to choose from, making it easy to create a professional-looking video knowledge base that matches your brand. In addition, WowTo's in-app video widget allows businesses to provide contextual video help to their visitors, improving the customer support experience.

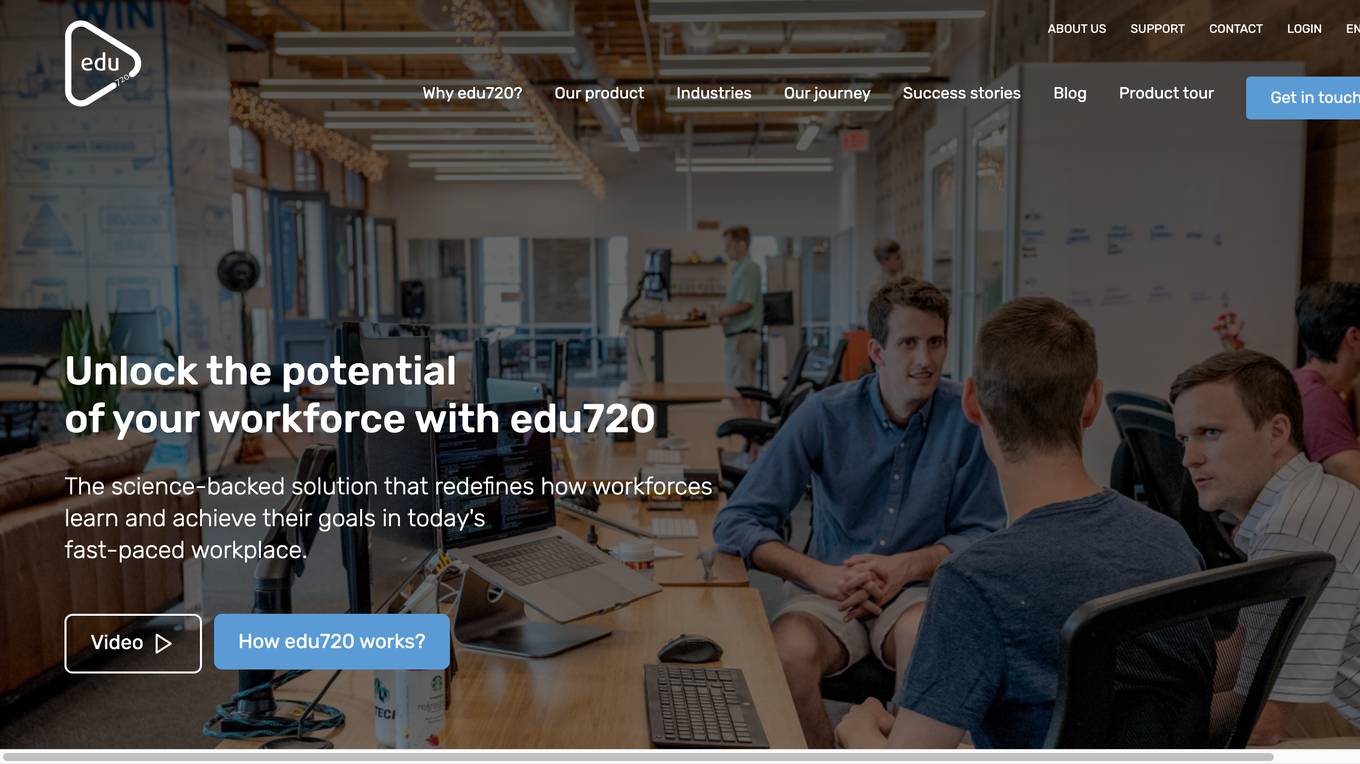

edu720

edu720 is a science-backed learning platform that uses AI and nanolearning to redefine how workforces learn and achieve their goals. It provides pre-built learning modules on various topics, including cybersecurity, privacy, and AI ethics. edu720's 360-degree approach ensures that all employees, regardless of their status or location, fully understand and absorb the knowledge conveyed.

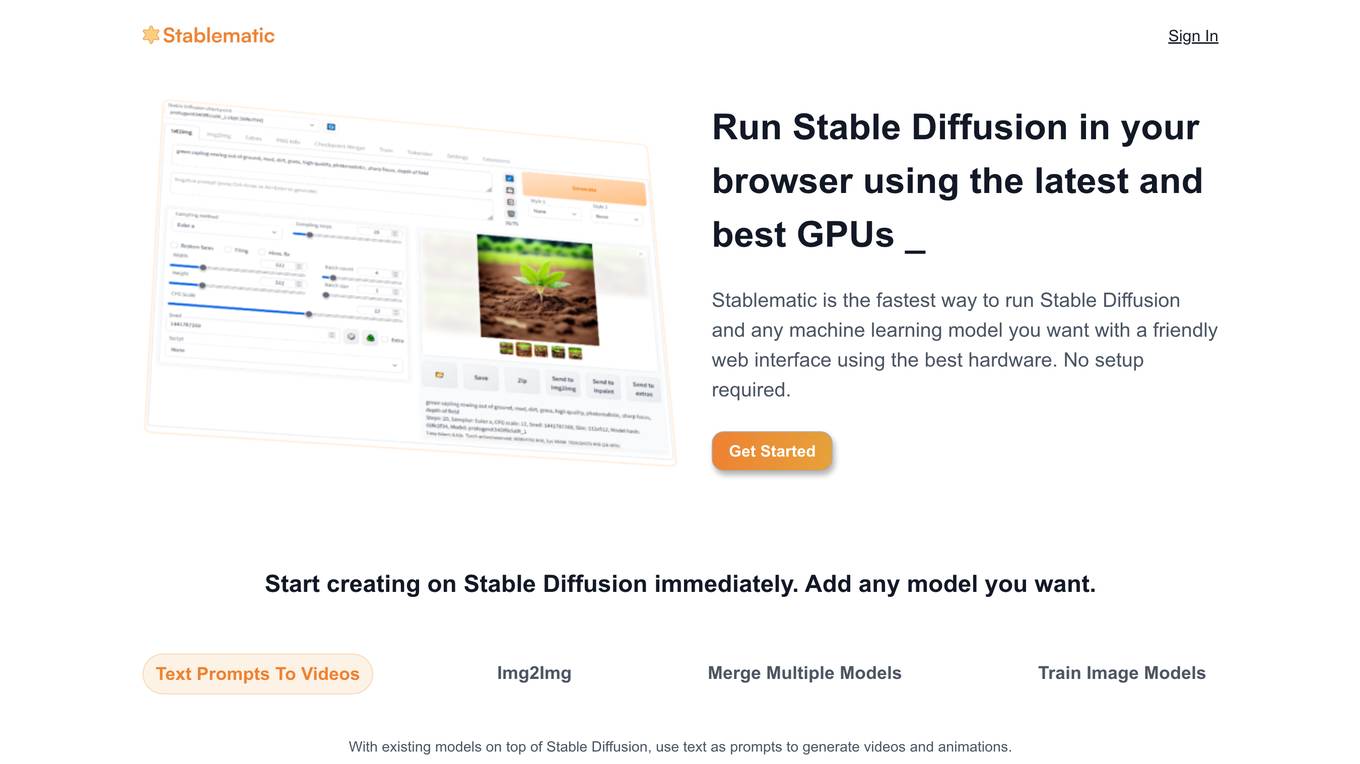

Stablematic

Stablematic is a web-based platform that allows users to run Stable Diffusion and other machine learning models without the need for local setup or hardware limitations. It provides a user-friendly interface, pre-installed plugins, and dedicated GPU resources for a seamless and efficient workflow. Users can generate images and videos from text prompts, merge multiple models, train custom models, and access a range of pre-trained models, including Dreambooth and CivitAi models. Stablematic also offers API access for developers and dedicated support for users to explore and utilize the capabilities of Stable Diffusion and other machine learning models.

ClearCompany

ClearCompany is a leading recruiting and talent management software platform designed to optimize employee experience and HR initiatives for maximizing employee and company success. The platform offers solutions for applicant tracking, onboarding, employee engagement, performance management, analytics & reporting, and background checks. ClearCompany leverages automation, AI in HR, and workforce planning to streamline recruiting processes and enhance candidate experiences. The platform aims to build community, connect with employees, and promote inclusivity through talent management tools like employee surveys and peer recognition. ClearCompany provides pre-built reports and analytics to help interpret HR data and optimize talent management strategies.

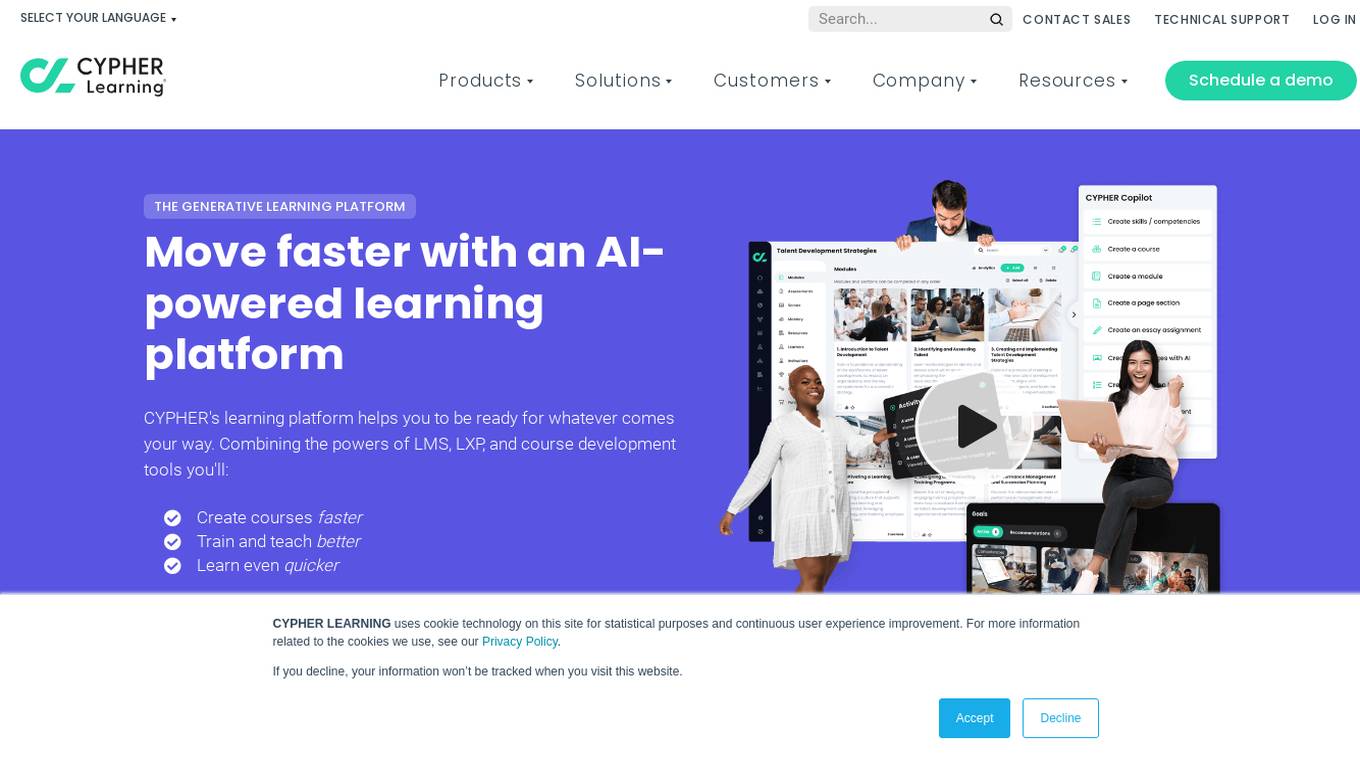

CYPHER Learning

CYPHER Learning is a leading AI-powered learning platform offering solutions for academia, business, and entrepreneurs. The platform provides features such as course development, AI media options, personalized skills development, gamification, automation, integrations, reporting & analytics, and more. CYPHER Learning focuses on human-centric learning, offers enterprise-class connections, supports over 50 languages, and provides customizable and pre-built courses. The platform aims to enhance learning experiences through AI innovation and automation.

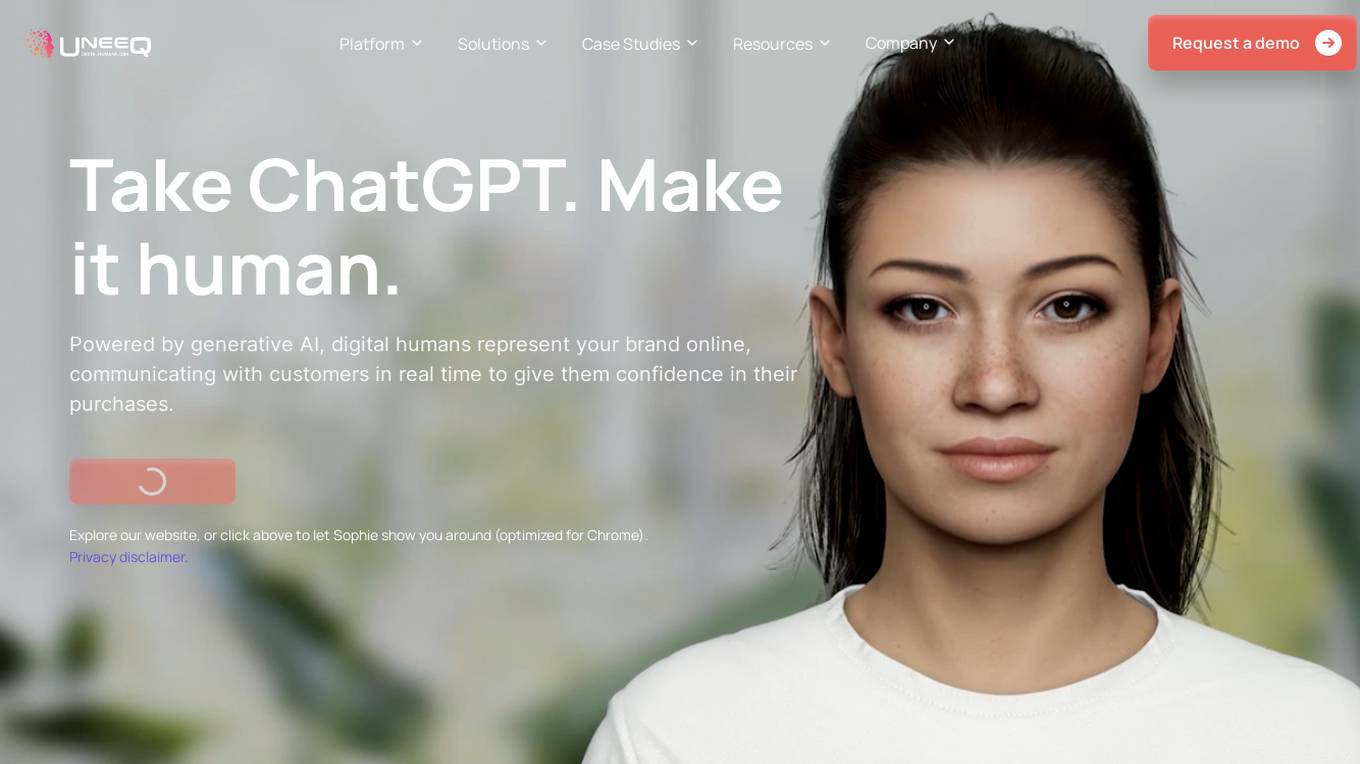

UneeQ Digital Humans Platform

The UneeQ Digital Humans Platform is an AI application that offers intelligent animation and a real-time, lifelike animation engine. It provides solutions for marketing, sales, customer service, and training across various industries. The platform allows for personalized and enjoyable customer interactions through digital human avatars, enhancing customer journeys and boosting conversions. With features like dialog-based marketing, personalized AI experiences, and multilingual support, UneeQ aims to simplify complex subject matters, empower users, and provide memorable interactions. The platform is developer-friendly with open APIs and pre-built SDKs, making it easy to integrate and work with. UneeQ's digital humans have been proven to significantly increase engagement, web traffic, conversion rates, and customer satisfaction.

Synthesia

Synthesia is an AI-powered video creation platform that allows users to create professional-quality videos with AI avatars and voiceovers in over 130 languages. It is designed to be easy to use, with a drag-and-drop interface and a library of pre-built templates. Synthesia is used by businesses of all sizes to create training videos, sales videos, marketing videos, and more.

EyePop.ai

EyePop.ai is a hassle-free AI vision partner designed for innovators to easily create and own custom AI-powered vision models tailored to their visual data needs. The platform simplifies building AI-powered vision models through a fast, intuitive, and fully guided process without the need for coding or technical expertise. Users can define their target, upload data, train their model, deploy and detect, and iterate and improve to ensure effective AI solutions. EyePop.ai offers a pre-trained model library, a self-service training platform, and future-ready solutions to help users innovate faster, offer unique solutions, and make real-time decisions effortlessly.

OpenArt

OpenArt is an AI-powered art platform that offers a free AI image generator and editor. It allows users to create images using pre-built models or by training their own models. The platform provides an intuitive AI drawing tool and editing suite to transform artistic concepts into reality. OpenArt stands out for its boundary-free AI drawing, advanced AI art tools, diverse artistic styles, and the ability to train custom AI models. It caters to both amateur and professional artists, offering high-quality art creation and comprehensive support. Users can experiment with various styles, receive detailed feedback, and collaborate on artistic projects through the platform.

RunPod

RunPod is a cloud platform specifically designed for AI development and deployment. It offers a range of features to streamline the process of developing, training, and scaling AI models, including a library of pre-built templates, efficient training pipelines, and scalable deployment options. RunPod also provides access to a wide selection of GPUs, allowing users to choose the optimal hardware for their specific AI workloads.

Landing AI

Landing AI is a computer vision platform and AI software company that provides a cloud-based platform for building and deploying computer vision applications. The platform includes a library of pre-trained models, a set of tools for data labeling and model training, and a deployment service that allows users to deploy their models to the cloud or edge devices. Landing AI's platform is used by a variety of industries, including automotive, electronics, food and beverage, medical devices, life sciences, agriculture, manufacturing, infrastructure, and pharma.

Runway

Runway is a platform that provides tools and resources for artists and researchers to create and explore artificial intelligence-powered creative applications. The platform includes a library of pre-trained models, a set of tools for building and training custom models, and a community of users who share their work and collaborate on projects. Runway's mission is to make AI more accessible and understandable, and to empower artists and researchers to create new and innovative forms of creative expression.

micro1

micro1 is an AI recruitment tool that leverages human data produced by subject matter experts to help companies identify and hire top talent efficiently. The platform offers end-to-end post-training solutions, high-quality data for model training, pre-vetted AI trainers, and enterprise-grade LLM evaluations. With a focus on tech startups, staffing agencies, and enterprises, micro1 aims to streamline the recruitment process and save costs for businesses.

Viddyoze

Viddyoze is a powerful video creation software that empowers users to create professional-looking videos for their businesses or brands in minutes. With its extensive library of pre-built video templates, users can choose from a variety of options to achieve their desired goals, such as lead generation, Facebook ads, and brand awareness. Viddyoze also provides exclusive training courses and learning materials to help users build effective video marketing strategies. Additionally, users can access a brand library to add their own branding elements, auto-populate templates, and integrate TrustPilot reviews into their videos.

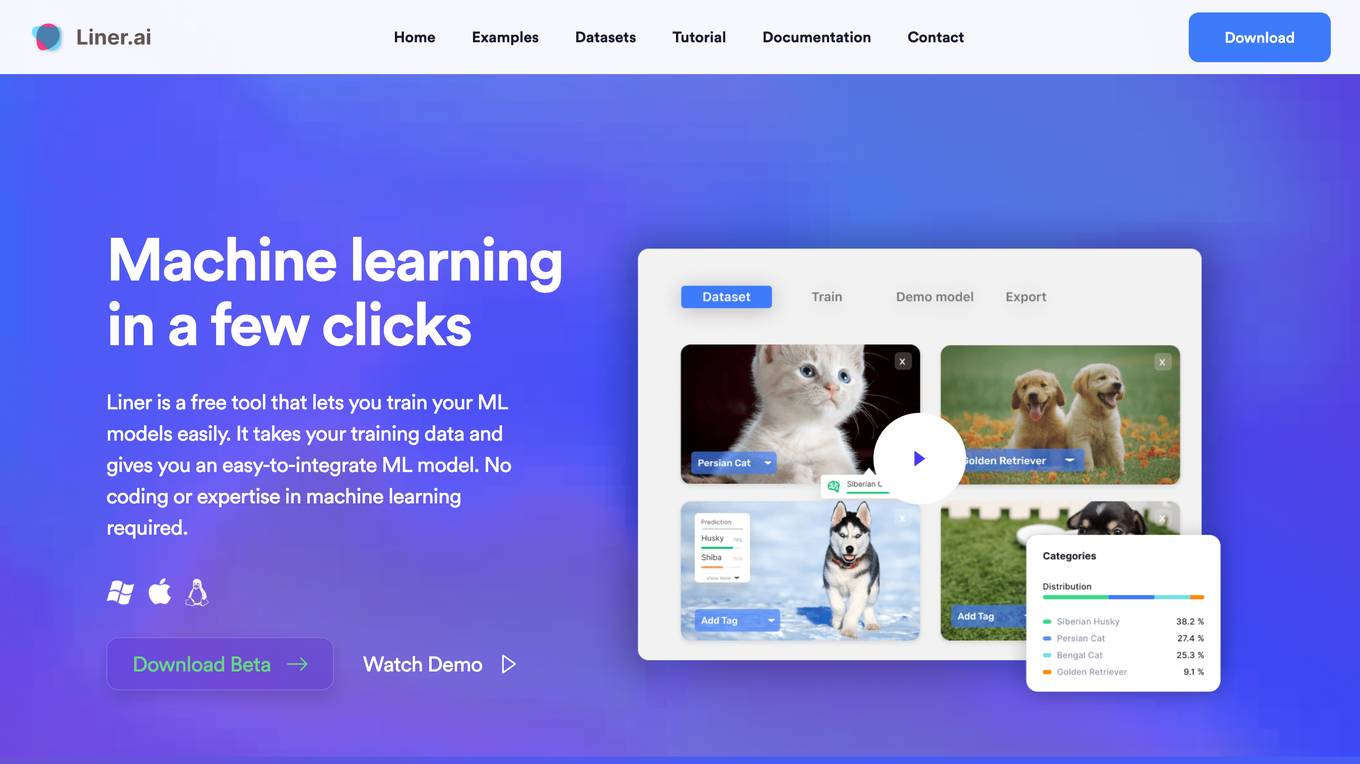

Liner.ai

Liner is a free and easy-to-use tool that allows users to train machine learning models without writing any code. It provides a user-friendly interface that guides users through the process of importing data, selecting a model, and training the model. Liner also offers a variety of pre-trained models that can be used for common tasks such as image classification, text classification, and object detection. With Liner, users can quickly and easily create and deploy machine learning applications without the need for specialized knowledge or expertise.

Baseten

Baseten is a machine learning infrastructure platform that gives data scientists and engineers a unified place to build, train, and deploy machine learning models. It offers a range of features to simplify the ML lifecycle, including data preparation, model training, and deployment. Baseten also provides a marketplace of pre-built models and components that can be used to accelerate the development of ML applications.

SentiSight.ai

SentiSight.ai is a machine learning platform for image recognition solutions, offering services such as object detection, image segmentation, image classification, image similarity search, image annotation, computer vision consulting, and intelligent automation consulting. Users can access pre-trained models, background removal, NSFW detection, text recognition, and an image recognition API. The platform provides tools for image labeling, project management, and training tutorials for various image recognition models. SentiSight.ai aims to streamline the image annotation process, empower users to build and train their own models, and deploy them for online or offline use.

1 - Open Source AI Tools

Steel-LLM

Steel-LLM is a project to pre-train a Chinese large language model from scratch, using over 1T of data to reach a size of around 1B parameters, similar to TinyLlama. The project aims to share the entire process, including data collection, data processing, pre-training framework selection, and model design, and to open-source all the code. The goal is to make the work reproducible even with limited resources. The name 'Steel' is inspired by the band '万能青年旅店' and signifies the desire to create a strong model despite limited conditions. The project involves continuous collection of data on various cultural elements, trivia, lyrics, niche literature, and personal secrets to train the LLM. The ultimate aim is to fill the model with diverse data and leave room for individual input, fostering collaboration among users.

20 - OpenAI GPTs

Dune x Farcaster (GPT)

A GPT pre-trained on duneSQL Farcaster tables, complex examples, and duneSQL syntax. Please reference the dune docs or contact @shoni.eth for errors.

Y Combinator Co-Pilot

Expert in YC applications, pre-trained on insights from real application data.

Seabiscuit Launch Lander

Startup Strong Within 180 Days: Tailored advice for launching, promoting, and scaling businesses of all types. It covers all stages from pre-launch to post-launch and develops strategies including market research, branding, promotional tactics, and operational planning unique to your business. (v1.8)

Travel Buddy

Detailed trip planner offering destination ideas, itineraries, budgeting, and pre-trip advice.

Marina Medical

A guide for aspiring med students, aiding in interviews, MCAT prep, and application advice.

AVA 1.0 for Aspiring Medics | Medicine Interviews

A trial version of AVA 2.0, the world's first AI Interview Platform for Medical School Interviews - https://ai.theaspiringmedics.co.uk/

MCAT Mentor

AI MCAT tutor with a focus on challenging topics, structured learning, and supportive guidance.

Afford This Home

I help simplify and realistically calculate how much house you can buy. Real Estate, Personal Finance, Mortgages, & more.

SBA Loan Advisor

Using public SBA data to help you find the best-fit lender for your small business.

Mobile Home Mortgage Calculator

Get the most up-to-date information about mortgages for a mobile home.