Best AI Tools to Optimize System Performance

20 - AI Tool Sites

BottleneckCalculator.biz

BottleneckCalculator.biz is an AI tool designed to optimize system performance for AI workloads, specifically focusing on AI photo generation. The website provides a comprehensive guide on creating stunning visual content using AI technology, covering key concepts, essential tools, advanced techniques, system requirements, and future trends in AI photo generation.

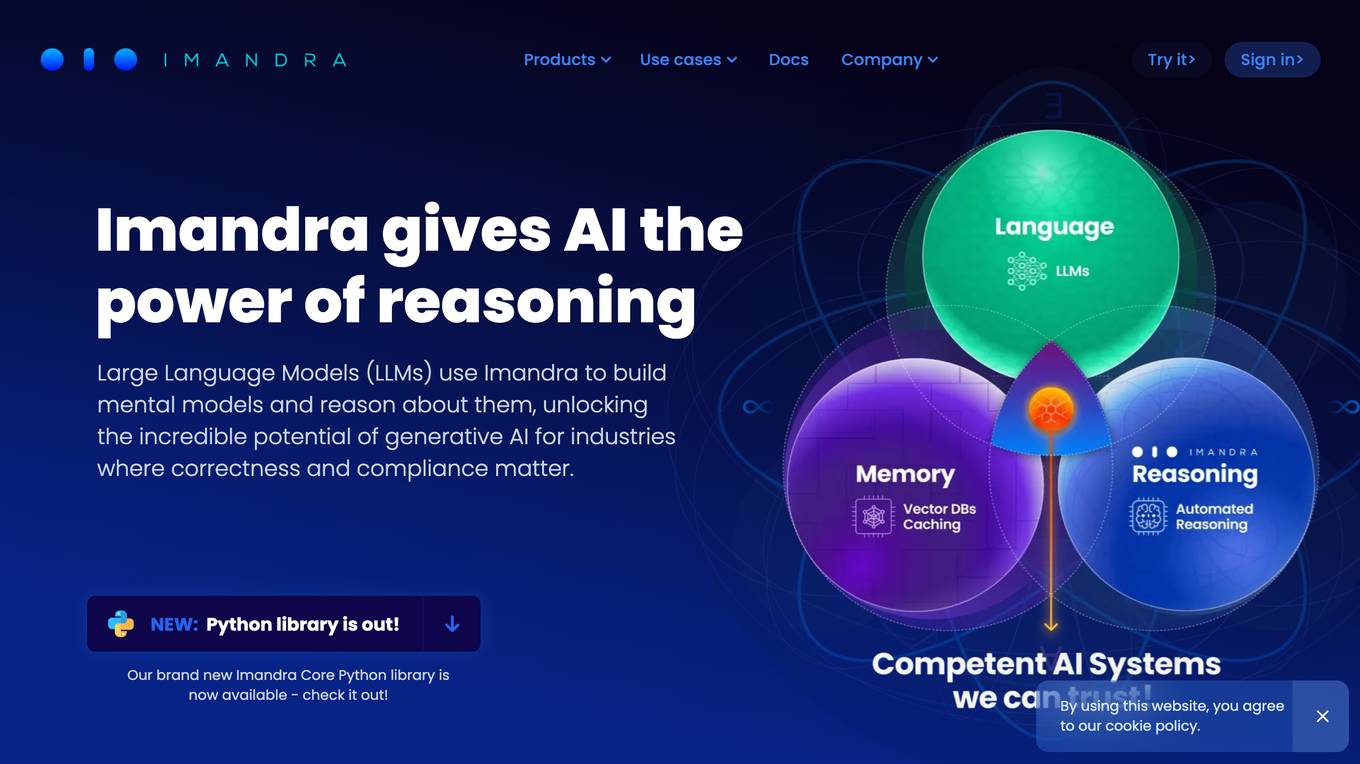

Imandra

Imandra is a company that provides automated logical reasoning for Large Language Models (LLMs). Imandra's technology allows LLMs to build mental models and reason about them, unlocking the potential of generative AI for industries where correctness and compliance matter. Imandra's platform is used by leading financial firms, the US Air Force, and DARPA.

Offline for Maintenance

The website is currently offline for maintenance. It is undergoing updates and improvements to enhance user experience. Please check back later for the latest information and services.

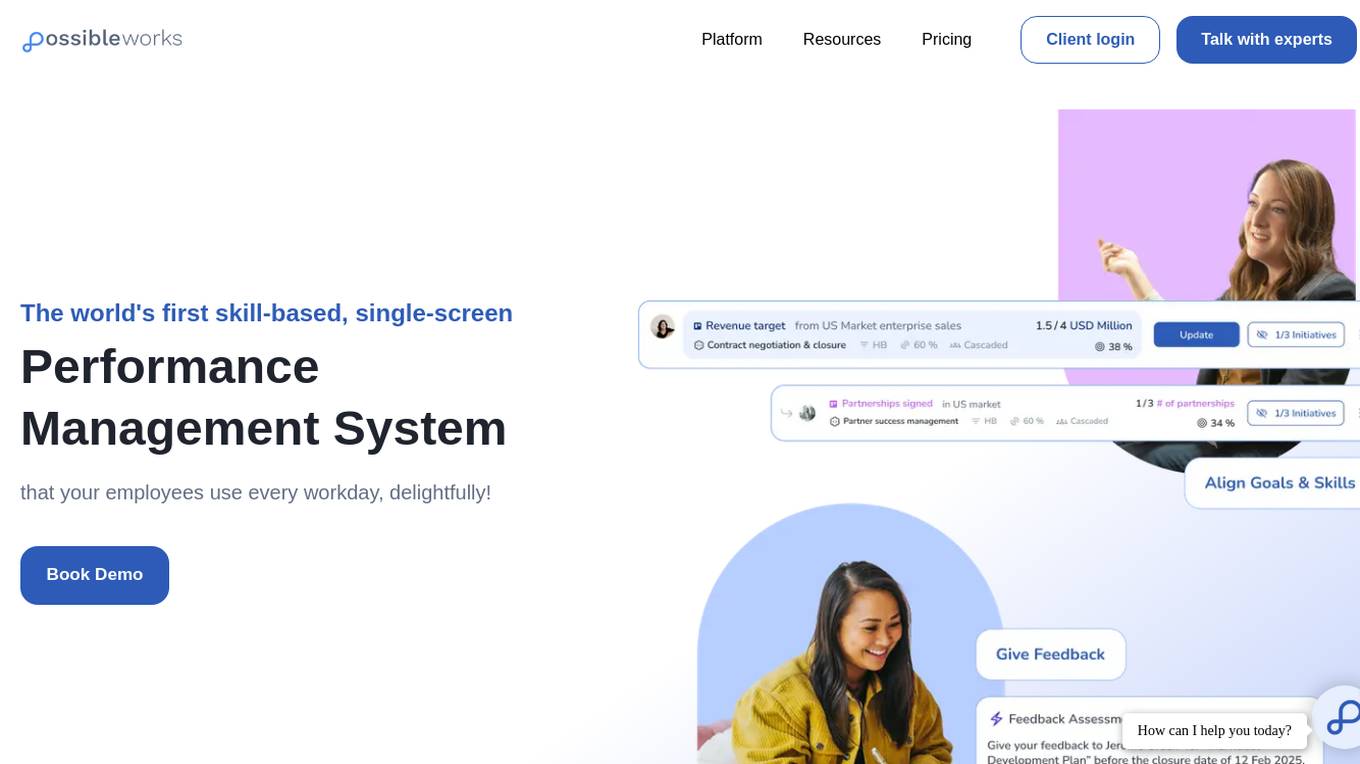

PossibleWorks

PossibleWorks is a skill-based performance management platform that reimagines traditional performance management by linking individual employee skills with organizational KPIs. It offers a single-screen Talent Management System, API automation, and AI integration to enhance performance tracking and decision-making. The platform streamlines HR processes and eliminates manual work so that teams can focus on high-value tasks, ultimately boosting productivity and efficiency.

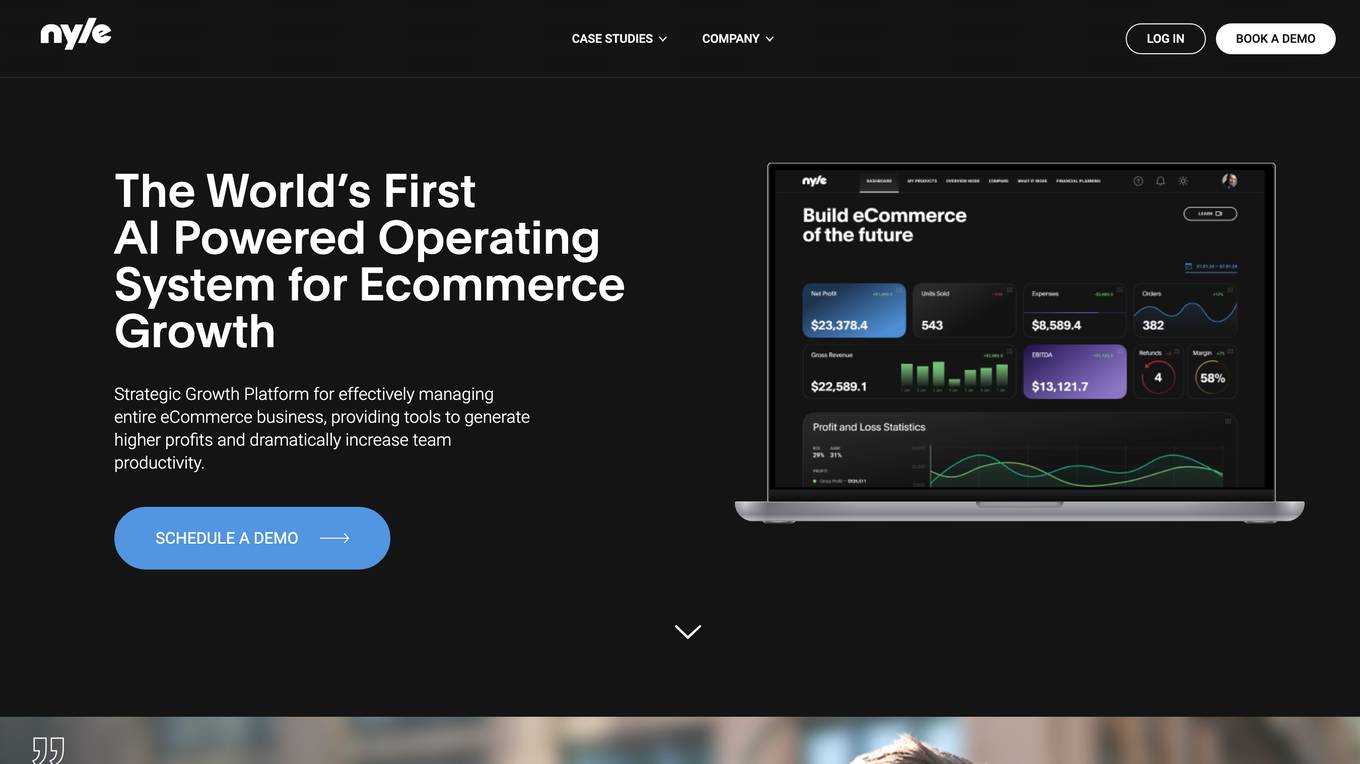

Nyle

Nyle is an AI-powered operating system for e-commerce growth. It provides tools to generate higher profits and increase team productivity. Nyle's platform includes advanced market analysis, quantitative assessment of customer sentiment, and automated insights. It also offers collaborative dashboards and interactive modeling to facilitate decision-making and cross-functional alignment.

Vindey

Vindey is an AI-powered CRM platform designed for property management and sales. It offers real-time smart suggestions and intelligent sales automation to transform customer experiences. Vindey handles the entire sales pipeline, from lead qualification to deal closing, while providing personalized interactions and seamless lead management. The platform also offers AI-powered payment insights, workflow automation, and tailored solutions for sales teams and for industries such as healthcare, real estate, education, and manufacturing.

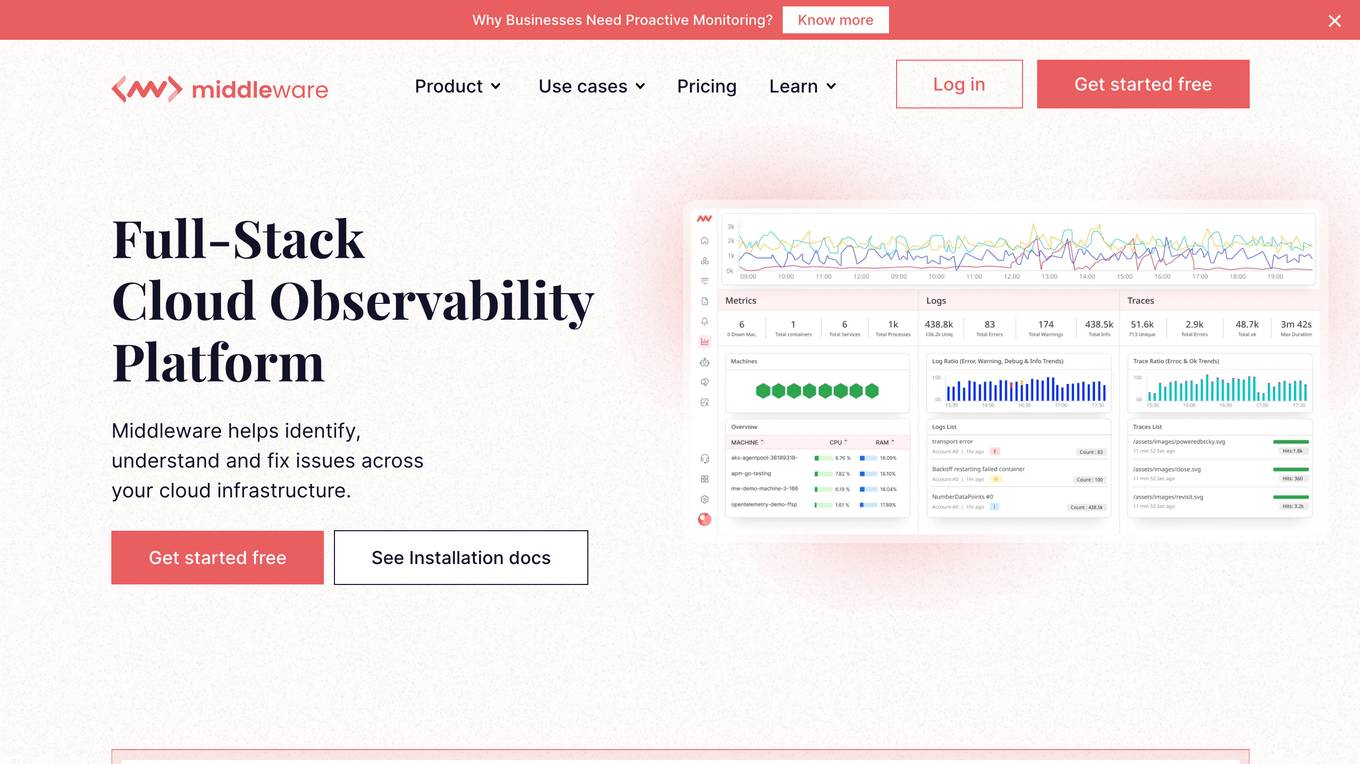

Cloud Observability Middleware

The website provides Full-Stack Cloud Observability services with a focus on Middleware. It offers comprehensive monitoring and analysis tools to help businesses optimize their cloud infrastructure performance. The platform enables users to gain insights into their middleware applications, identify bottlenecks, and improve overall system efficiency.

OpenResty

The website is currently displaying a '403 Forbidden' error, which indicates that the server understood the request but refuses to fulfill it. This error is often caused by insufficient permissions or misconfiguration on the server side. The 'openresty' mentioned in the error message is a web platform based on NGINX and LuaJIT, commonly used for building high-performance web applications. It is designed to handle a large number of concurrent connections and provide advanced features for web development.

OpenResty

The website is currently displaying a '403 Forbidden' error message, which indicates that the server understood the request but refuses to fulfill it. This error is often caused by insufficient permissions or misconfiguration on the server side. The 'openresty' mentioned in the message is a web platform based on NGINX and LuaJIT, commonly used for building high-performance web applications. It is designed to handle a large number of concurrent connections and provide a scalable and efficient web server solution.

Pulse

Pulse is an expert support tool for big data stacks, focused on the stability and performance of Elasticsearch and OpenSearch clusters. It offers early issue detection, AI-generated insights, and expert support to optimize performance and reduce costs. Pulse uses AI for issue detection and root-cause analysis, complemented by human experts, making it a strategic ally in search cluster management.

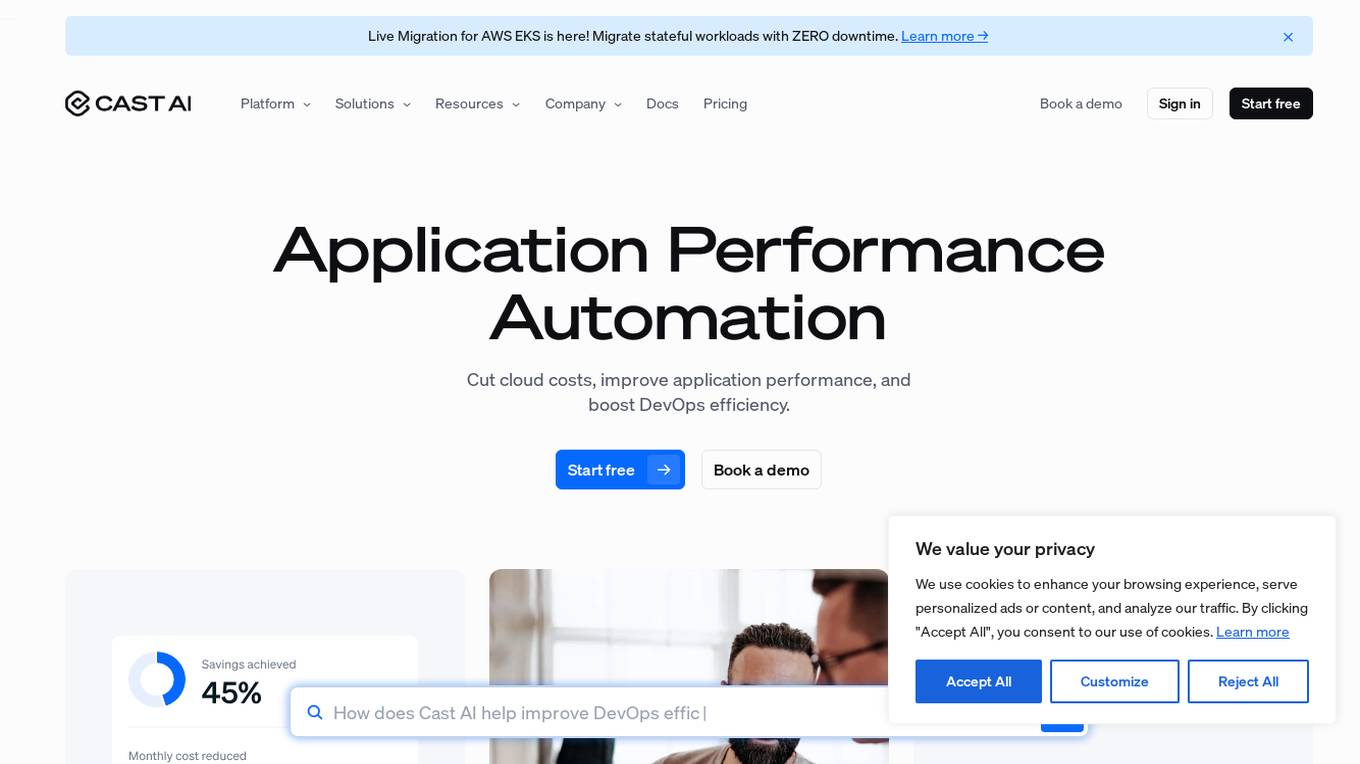

Cast AI

Cast AI is an intelligent Kubernetes automation platform that offers live migration for AWS EKS, enabling users to migrate stateful workloads with zero downtime. The platform provides application performance automation by automating and optimizing the entire application stack, including Kubernetes cluster optimization, security, workload optimization, LLM optimization for AIOps, cost monitoring, and database optimization. Cast AI integrates with various cloud services and tools, offering solutions for migration of stateful workloads, inference at scale, and cutting AI costs without sacrificing scale. The platform helps users improve performance, reduce costs, and boost productivity through end-to-end application performance automation.

GetMerlin

GetMerlin is a website that focuses on security verification and performance optimization. It checks the security of the user's connection before allowing access and verifies the user's identity to prevent unauthorized access, while aiming for a seamless browsing experience. GetMerlin uses Cloudflare for performance and security enhancements, ensuring a safe and efficient online environment for users.

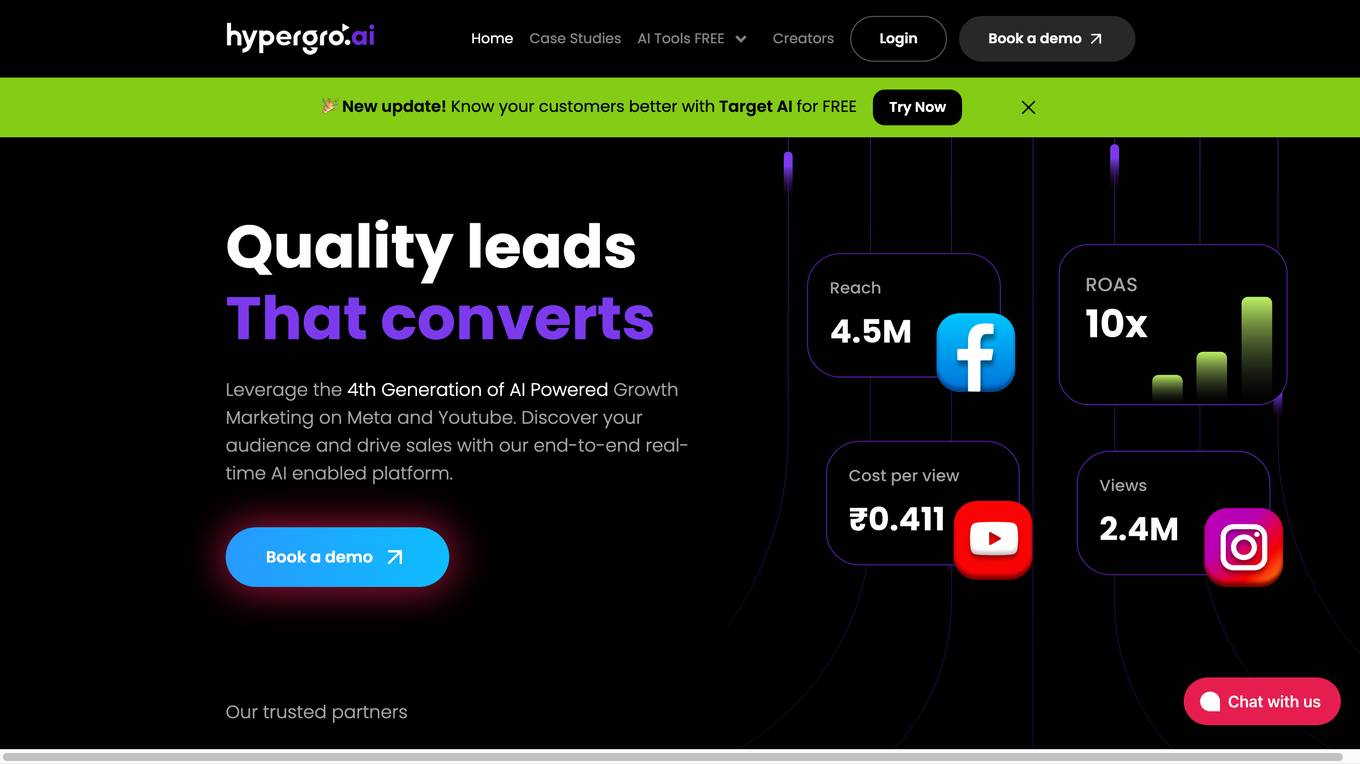

Hypergro

Hypergro is an AI-powered platform that specializes in UGC video ads for smart customer acquisition. Using what it calls fourth-generation AI-powered growth marketing on Meta and YouTube, Hypergro helps businesses discover their audience, drive sales, and increase revenue through real-time AI insights. The platform offers end-to-end solutions for creating impactful short video ads that combine creator authenticity with AI-driven research for compelling storytelling. With a focus on precision targeting, competitor analysis, and in-depth research, Hypergro aims to maximize ROI for brands looking to elevate their growth strategies.

HONO.AI

HONO.AI is conversational, AI-powered HRMS software designed to simplify complex HR tasks for both employees and HR professionals. It offers a chat-first HCM solution with predictive analytics and global payroll capabilities, driving enhanced employee experience, effortless attendance management, quicker employee support, and greater workforce productivity. HONO's human capital management platform lets organizations focus on what matters most by providing a centralized payroll administration system. With features like conversational HRMS, intelligent HR analytics, employee engagement tools, enterprise-grade security, and actionable insights, HONO aims to transform HR for leading organizations worldwide.

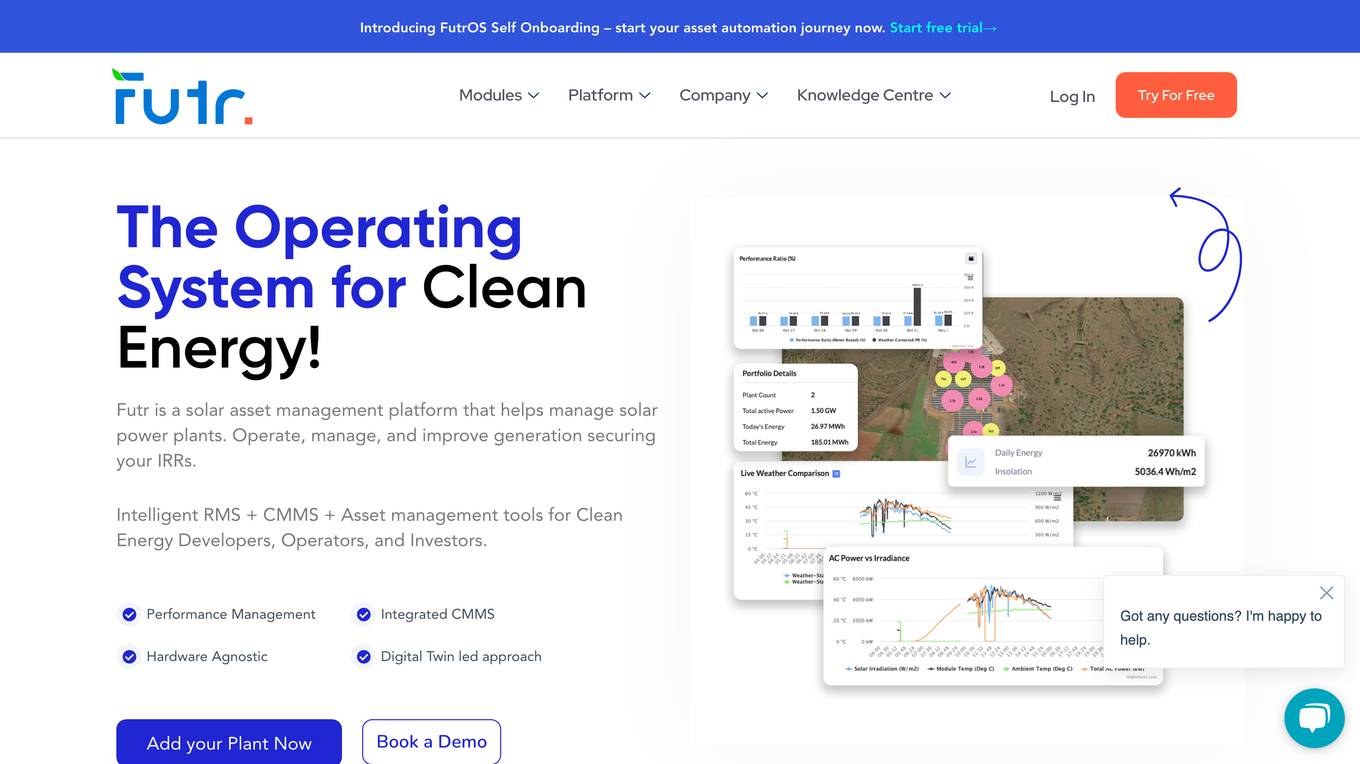

Futr Energy

Futr Energy is a solar asset management platform designed to help manage solar power plants efficiently. It offers a range of tools and features such as remote monitoring, CMMS, inventory management, performance monitoring, and automated reports. Futr Energy aims to provide clean energy developers, operators, and investors with intelligent solutions to optimize the generation and performance of solar assets.

Portkey

Portkey is a control panel for production AI applications that offers an AI Gateway, Prompts, Guardrails, and Observability Suite. It enables teams to ship reliable, cost-efficient, and fast apps by providing tools for prompt engineering, enforcing reliable LLM behavior, integrating with major agent frameworks, and building AI agents with access to real-world tools. Portkey also offers seamless AI integrations for smarter decisions, with features like managed hosting, smart caching, and edge compute layers to optimize app performance.

OpenResty

The website appears to be displaying a '403 Forbidden' error message, which indicates that the server understood the request but refuses to fulfill it. This error is often caused by incorrect permissions on the server or a misconfiguration in the server settings. The 'openresty' string in the message suggests that the server may be running the OpenResty web platform. OpenResty is a web platform based on NGINX and Lua that is commonly used to build high-performance web applications. It provides a powerful and flexible way to extend NGINX with Lua scripts, allowing for advanced web server functionality.

Tensordyne

Tensordyne is a generative AI inference compute tool designed and developed in the US and Germany. It focuses on re-engineering AI math and redefining AI inference so that the biggest AI models can run for thousands of users at a fraction of the rack count, power, and cost. Tensordyne offers custom silicon and systems built on the Zeroth Scaling Law, enabling breakthroughs in AI technology.

FXPredator

FXPredator is a Forex trading bot, delivered as an MT4/MT5 Expert Advisor, that markets itself as the #1 in its category and offers fully automated trading powered by AI. Users can track real-time and historical performance, receive the Expert Advisor quickly, and get responsive customer support. FXPredator's stated advantages include automated trading based on numerical data, passive income generation, proven performance monitoring, and peace of mind in managing trades. With a focus on transparency, customization, and customer support, FXPredator positions itself as a complete solution for automated trading.

Tamarack

Tamarack is a technology company specializing in equipment finance, offering AI-powered applications and data-centric technologies to enhance operational efficiency and business performance. It provides a range of solutions, from business intelligence to professional services, tailored for the equipment finance industry. Tamarack's AI Predictors and DataConsole are designed to streamline workflows and improve outcomes for stakeholders. With a focus on innovation and customer experience, Tamarack aims to empower clients with online functionality and predictive analytics. Its expertise spans origination through portfolio management, delivering industry-specific solutions for better performance.

0 - Open Source AI Tools

20 - OpenAI GPTs

Thermal Engineering Advisor

Guides thermal management solutions for efficient system performance.

Power Systems Advisor

Ensures optimal performance of power systems through strategic advisory.

Pelles GPT for MEP engineers

Specialized assistant for MEP engineers, aiding in calculations and system design.

OPSGPT

A technical encyclopedia for network operations, offering detailed solutions and advice.

Software Architect

Expert in software architecture, ensuring integrity and scalability through best practices.

The Dock - Your Docker Assistant

Technical assistant specializing in Docker and Docker Compose. Let's debug!