Best AI tools for optimizing results

20 AI tool sites

Growcado

Growcado is an AI-powered marketing automation platform that helps businesses unify data and content to personalize customer experiences and improve engagement. The platform uses AI analysis to predict customer segments, extract customer preferences, and deliver customized experiences. With features such as AI-powered segmentation, a personalization engine, dynamic segmentation, intelligent content management, and real-time analytics, Growcado helps businesses optimize their marketing strategies and increase customer engagement. The platform also offers integrations, a scalable architecture, and no-code customization for deploying personalized marketing assets across multiple channels.

AI Website & Landing Pages

The AI Website & Landing Pages tool lets users create AI-designed websites and landing pages in just 10 seconds. Its features include AI-generated design and copy, free and custom domains, analytics and insights, A/B testing, an AI sales and support chatbot, SEO optimization, a free image and video library, custom forms, webhook integration, automatic page translation, high-speed streaming, adaptive design, curated playlists, and more. Users can optimize their results through A/B testing, AI-generated page versions, quick experiments, and in-depth reports. The tool also lets users run ads globally and build multilingual landing pages to reach international audiences with fast campaigns. Editing works through one-click edit and publish, with instant previews. No code or drag-and-drop work is required, which keeps website and landing page creation quick and easy.

LINQ Me Up

LINQ Me Up is an AI-powered tool designed to boost .NET productivity by generating and converting LINQ queries. It converts SQL queries to LINQ code, transforms LINQ code into SQL queries, and generates tailored LINQ queries for various datasets. The tool supports C# and Visual Basic, both method and query syntax, and provides AI-powered analysis for optimized results. LINQ Me Up aims to boost productivity by simplifying migrations and letting developers focus on the essential parts of their code.

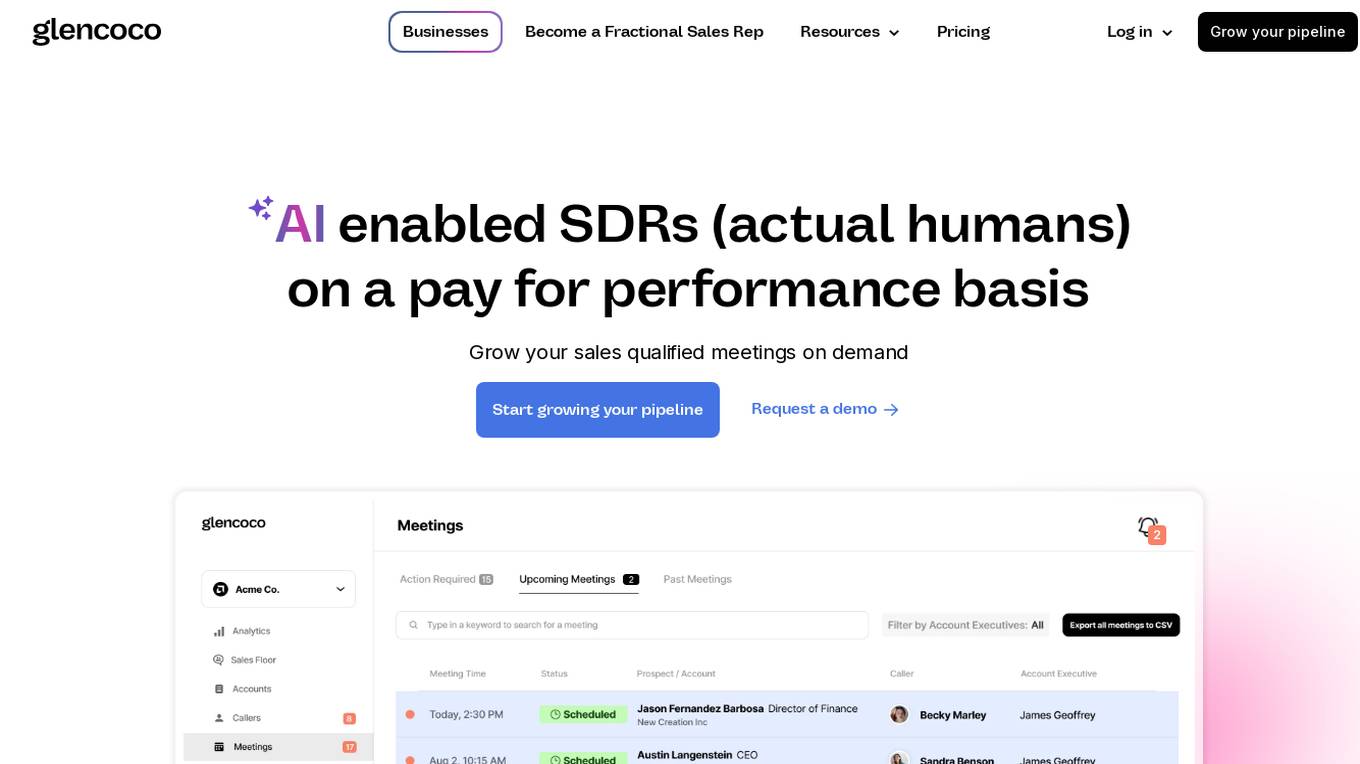

Glencoco

Glencoco is a tech-enabled sales marketplace that connects businesses with fractional sales representatives. The platform provides AI-enabled SDRs (sales development representatives) on a pay-for-performance basis, helping businesses grow their pipeline by finding the right prospects and maximizing ROI. Glencoco provides insights into prospect responses, integrates dialing and email tools, and lets users set up campaigns, select SDRs, and optimize results. The platform combines human contractors with AI workflows to run outbound sales motions.

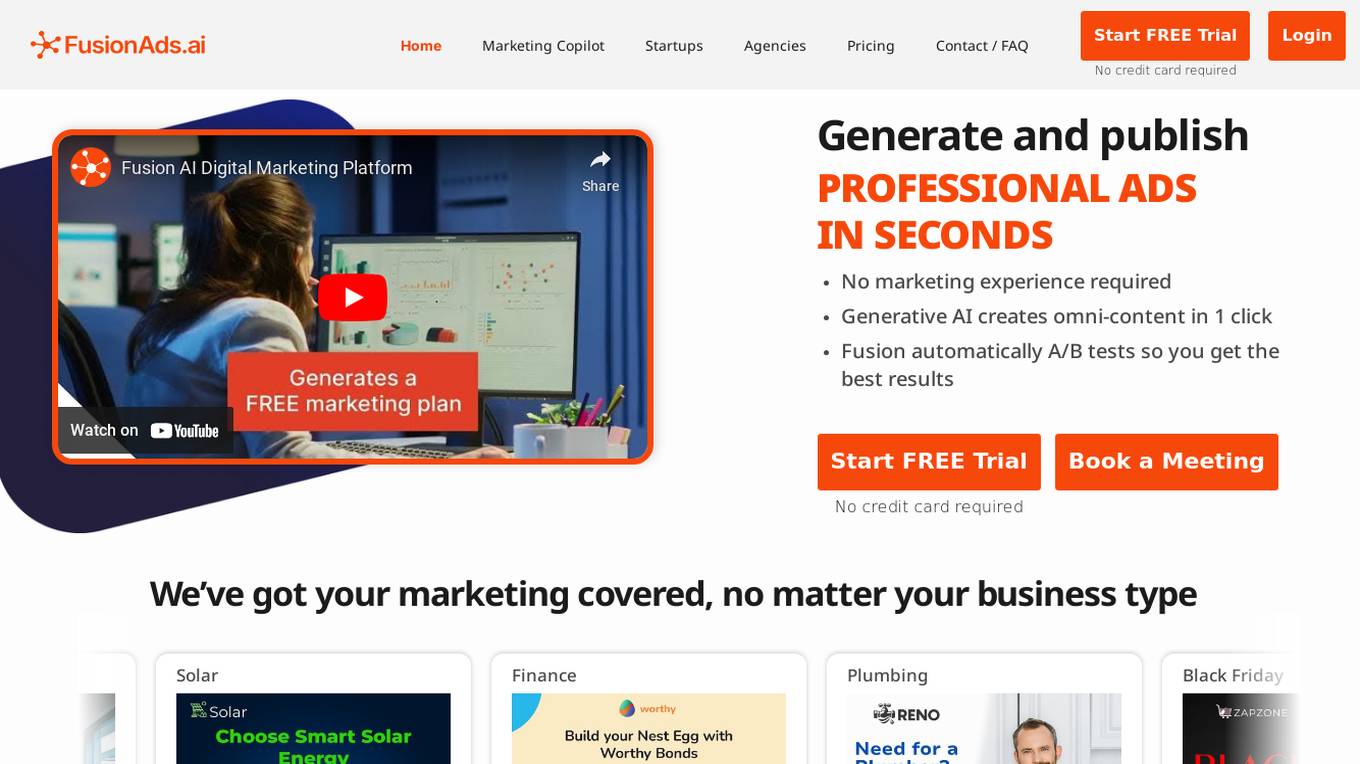

FusionAds.ai

FusionAds.ai is a generative AI advertising platform for businesses and their agencies. Its interface lets users generate and publish professional ads in seconds without prior marketing experience. The platform uses generative AI to create omnichannel content in one click and automatically runs A/B tests to optimize results. FusionAds.ai covers social media posts, paid ads, emails, SMS, and more, making it a comprehensive solution for businesses of all types.

Videco

Videco is an AI-driven personalized and interactive video platform designed for sales and marketing teams to enhance customer engagement and boost conversions. It offers features such as AI voice cloning, interactive buttons, lead generation, in-video calendars, and dynamic video creation. With Videco, users can personalize videos, distribute them through various channels, analyze performance, and optimize results. The platform aims to help businesses 10x their pipeline with video content and improve sales outcomes through personalized interactions.

Landingi

Landingi is a landing page builder and platform for marketers, offering a suite of tools to create, publish, and optimize digital marketing assets. With features such as AI landing page refinement, A/B testing, event tracking, integrations, and e-commerce capabilities, Landingi helps users drive sales, generate leads, and optimize campaign results. The platform also provides resources such as a blog, help center, landing page academy, and design services to help users master digital marketing. With its focus on data-driven decision-making and user experience optimization, Landingi suits digital marketers looking to strengthen their online presence and improve conversion rates.

Pixis

Pixis is a codeless AI infrastructure for growth marketing, offering purpose-built AI solutions to scale demand generation. The platform uses transparent AI infrastructure to optimize campaign results across platforms, with components such as targeting AI, creative AI, and performance AI. Pixis helps reduce customer acquisition cost, generate creative assets quickly, refine audience targeting, and deliver contextual communication in real time. The platform also provides an AI savings calculator to estimate the returns from using its codeless AI infrastructure for marketing. Pixis publishes success stories reporting improvements across various marketing metrics and aims to help businesses apply AI to improve campaign performance.

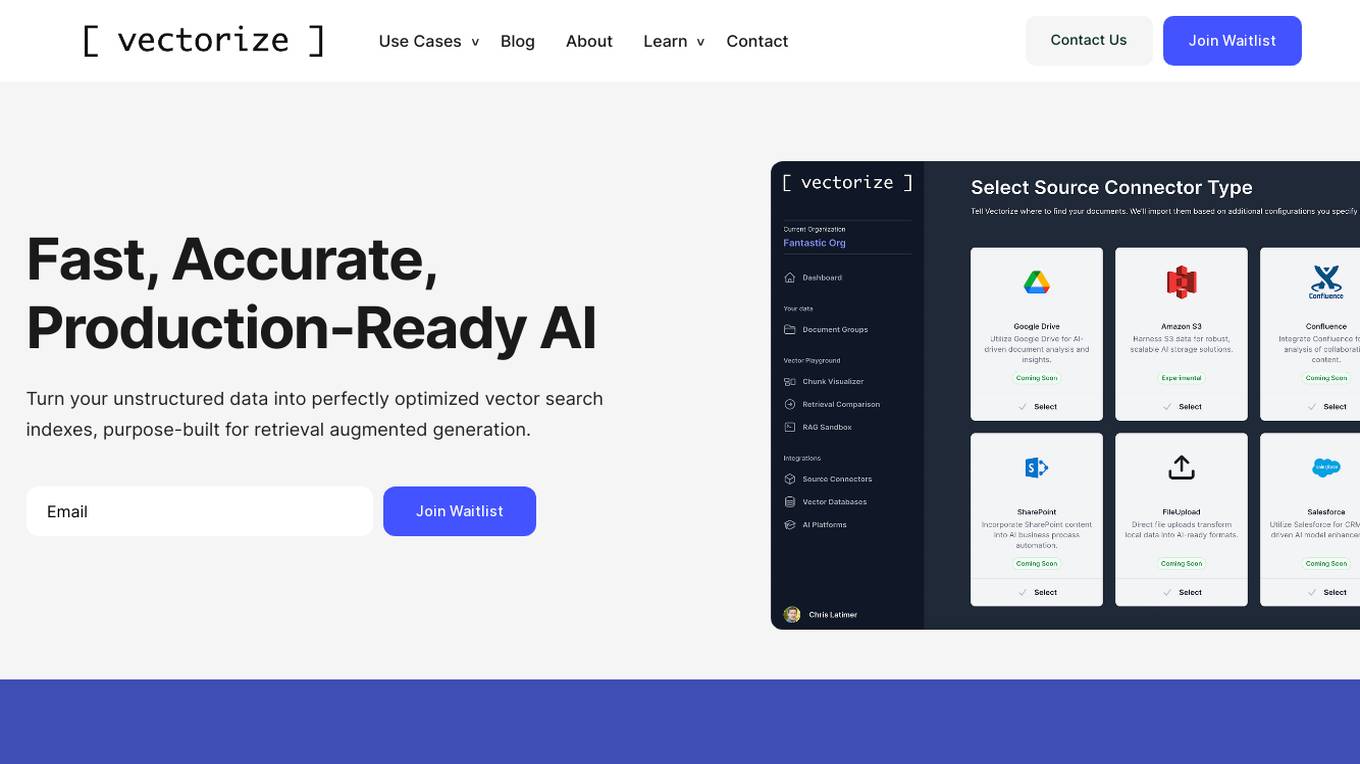

Vectorize

Vectorize is a fast, accurate, production-ready AI tool that helps users turn unstructured data into optimized vector search indexes. It supports building Large Language Model (LLM) applications, such as copilots and customer-facing experiences, by extracting text from various sources. With built-in support for leading AI platforms and a choice of embedding models and chunking strategies, Vectorize lets users deploy real-time vector pipelines that return accurate search results. The tool also offers out-of-the-box connectors to popular knowledge repositories and collaboration platforms, making it easy to put existing knowledge to work in AI-generated content.
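
Vectorize's own chunking strategies are not documented in this listing. As a generic illustration of what a chunking strategy is, here is a minimal Python sketch of fixed-size chunking with overlap, one common way to split text before it is embedded into a vector index. The function name `chunk_text` and its default sizes are hypothetical and not part of Vectorize's API.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character windows that overlap.

    Hypothetical helper for illustration only; real pipelines often chunk
    by tokens, sentences, or document structure instead of raw characters.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    step = chunk_size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a window reaches the end, so no trailing fragment
        # is emitted that is already fully contained in the last chunk.
        if start + chunk_size >= len(text):
            break
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, overlap=2))
```

Here the call prints `['abcd', 'cdef', 'efgh', 'ghij']`. The overlap means text near a boundary appears in two neighboring chunks, which reduces the chance that related content is split across separate embeddings.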

Trieve

Trieve is an AI-first infrastructure API that offers search, recommendations, and retrieval-augmented generation (RAG) by combining language models with tools for fine-tuning ranking and relevance. It aims to help companies build a competitive advantage through their discovery experiences and powers over 30,000 discovery experiences across various categories. Trieve supports semantic vector search, BM25 and SPLADE full-text search, hybrid search, merchandising and relevance tuning, and sub-sentence highlighting. The platform is built on open-source models, which supports data privacy, and offers self-hosting for sensitive data and maximum performance.
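
The listing names BM25 and hybrid search without further detail. The sketch below shows the textbook Okapi BM25 scoring function plus reciprocal rank fusion (RRF), one common way to merge a full-text ranking with a vector-search ranking into a single hybrid result. It is not Trieve's API and not necessarily how Trieve combines results; the function names and defaults (`k1=1.5`, `b=0.75`, `k=60`) are illustrative assumptions.

```python
import math
from collections import Counter


def bm25_scores(query: list[str], docs: list[list[str]],
                k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Okapi BM25 score of each tokenized document for a tokenized query."""
    if not docs:
        return []
    n = len(docs)
    avgdl = sum(len(d) for d in docs) / n  # average document length
    # Document frequency: the number of documents containing each term.
    df = Counter(term for d in docs for term in set(d))

    scores = []
    for d in docs:
        tf = Counter(d)  # term frequencies within this document
        score = 0.0
        for term in query:
            if term not in tf:
                continue
            # IDF variant with +1 inside the log so it never goes negative.
            idf = math.log((n - df[term] + 0.5) / (df[term] + 0.5) + 1)
            freq = tf[term]
            # Saturating term frequency, normalized by document length.
            score += idf * freq * (k1 + 1) / (freq + k1 * (1 - b + b * len(d) / avgdl))
        scores.append(score)
    return scores


def reciprocal_rank_fusion(rankings: list[list[int]], k: int = 60) -> list[int]:
    """Merge several ranked lists of document ids into one hybrid ranking."""
    fused: Counter = Counter()
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] += 1.0 / (k + rank)
    # Highest fused score first.
    return [doc_id for doc_id, _ in fused.most_common()]


docs = [["cheap", "red", "shoes"], ["red", "dress"], ["running", "shoes", "sale"]]
print(bm25_scores(["red", "shoes"], docs))
# Fuse a hypothetical lexical ranking with a hypothetical vector ranking.
print(reciprocal_rank_fusion([[0, 1, 2], [2, 0, 1]]))
```

In this toy example the document containing both query terms scores highest, and RRF returns `[0, 2, 1]`, rewarding documents that rank consistently well across both lists. RRF is attractive for hybrid search because it uses only ranks, so BM25 scores and vector similarities never need to be put on a common scale.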

Bloomreach

Bloomreach is an AI-powered ecommerce personalization platform that integrates real-time customer and product data to enable personalized marketing, product discovery, content, and conversational shopping. It offers solutions for marketers, merchandisers, and data-driven executives, helping businesses optimize performance, increase profitability, and enhance customer experiences.

Plato

Plato is an AI-powered platform that provides data intelligence for the digital world. It offers an immersive user experience through a proprietary hashtagging algorithm optimized for search. With over 5 million users since its beta launch in April 2020, Plato organizes public and private data sources to deliver authentic and valuable insights. The platform connects users to sector-specific applications, offering real-time data intelligence in a secure environment. Plato's vertical search and AI capabilities streamline data curation and provide contextual relevancy for users across various industries.

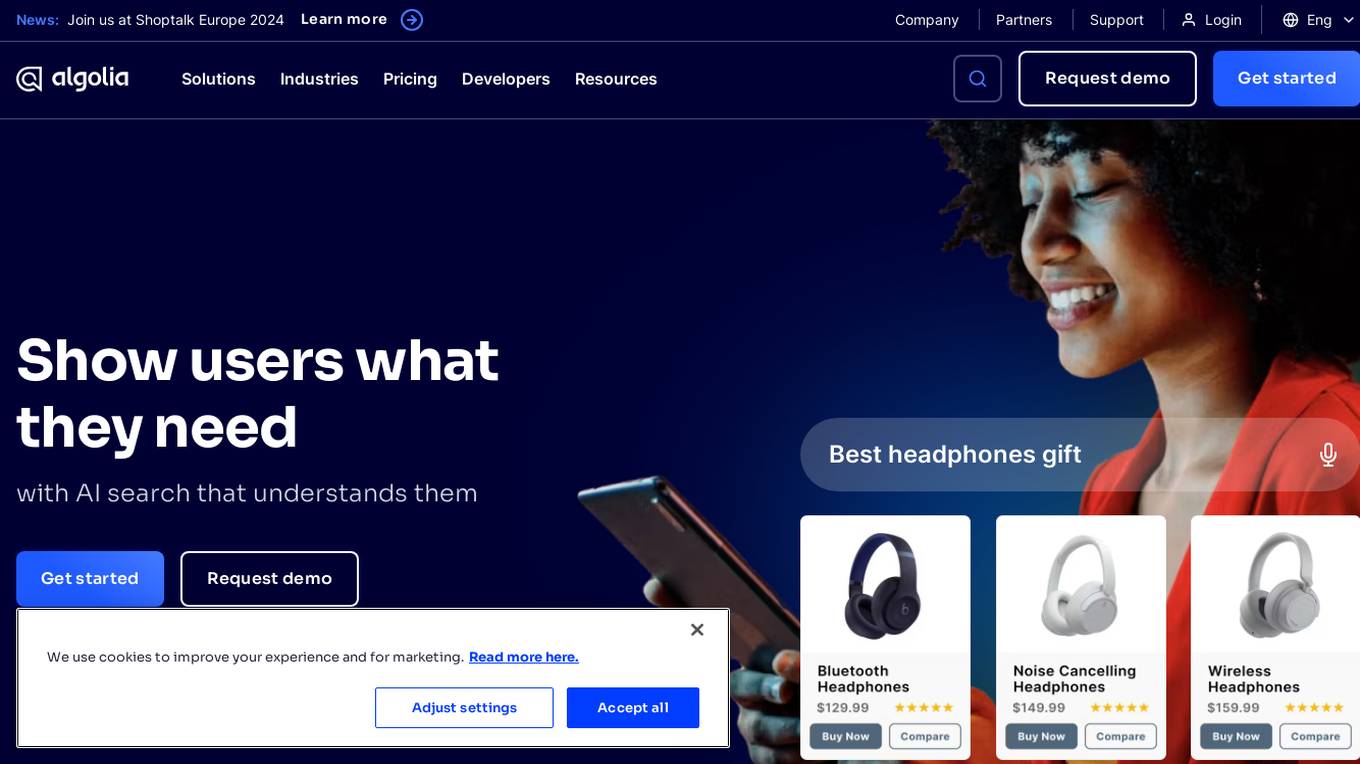

Algolia

Algolia is an AI search tool that returns the results users need to see, builds category and collection pages with AI, surfaces recommendations throughout the user journey, enhances customer experiences with data through its Merchandising Studio, and brings insights together in one Analytics dashboard. It offers pre-built UI components for custom journeys and integrates with platforms such as Adobe Commerce, BigCommerce, commercetools, Salesforce Commerce Cloud, and Shopify. Algolia is used across industries including retail, e-commerce, B2B e-commerce, marketplaces, and media, and is known for its ease of use, speed, scalability, and ability to handle a high volume of queries.

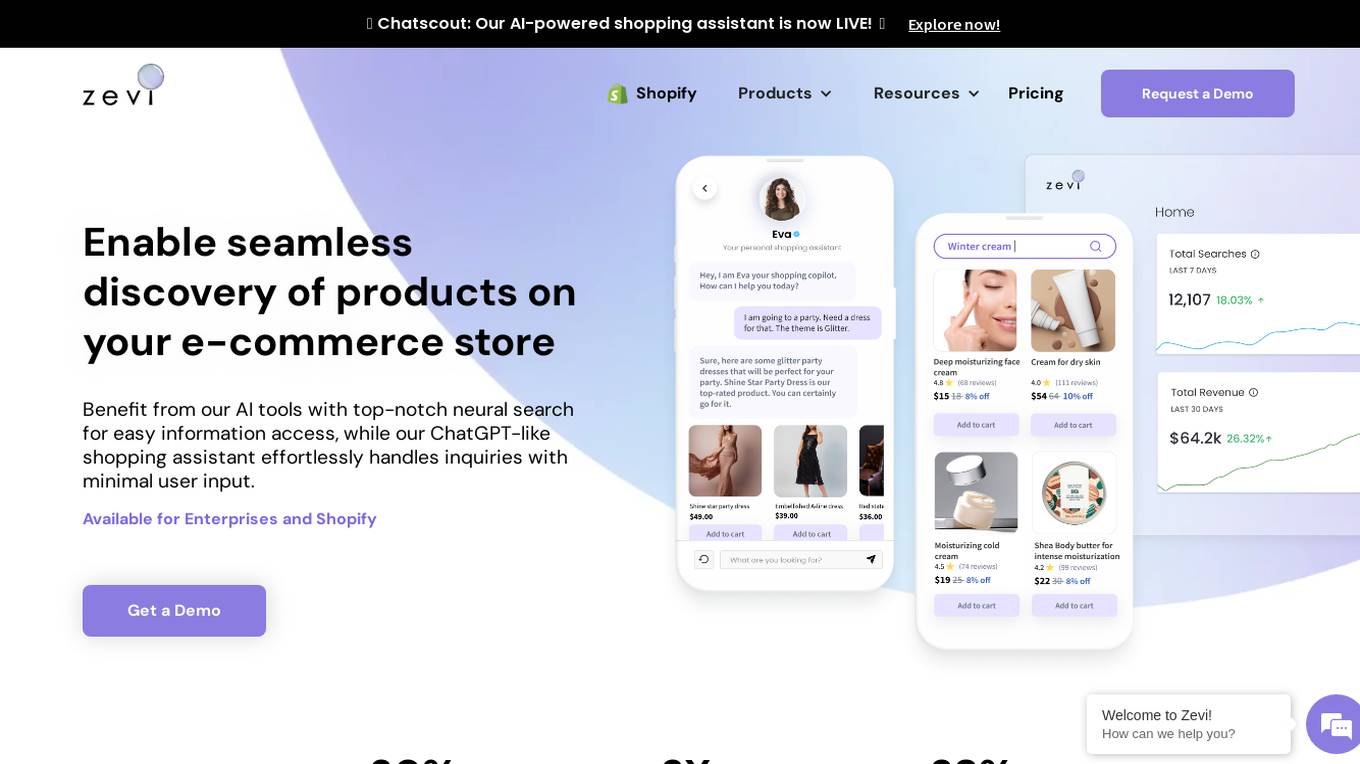

Zevi

Zevi is an AI-powered site search and discovery platform designed for D2C commerce. It offers a chat assistant and neural search capabilities to enhance the shopping experience for users. The platform helps businesses improve engagement and sales by guiding prospects from discovery to conversions with intent-focused search and chat solutions. Zevi is available for enterprises and Shopify users, providing seamless product discovery and personalized shopping assistance.

Biotech Newswire

The Biotech Newswire is a specialized PR distribution service for the life science and biotech industry. Drawing on over 20 years of experience, it promises extensive media coverage and engagement, documented in detailed post-release reports. Founded by Dr. Sabine Duntze, a PR expert with a strong life science background, the service optimizes press releases for AI-based searches using MeSH terms and ChatGPT. It offers cost-effective, service-oriented solutions with fast email response times, proofreading, and consulting.

Miros

Miros is an AI-powered ecommerce search tool that provides shoppers with a seamless and efficient search experience. It utilizes visual and semantic AI algorithms to understand shopper preferences and behavior, delivering relevant search results without the need for text entry. Miros offers innovative solutions such as Wordless Search, Tagless Discovery, and Discovery Bar to enhance product discovery and improve the overall customer experience. With fast API response speeds and easy integration options, Miros is a versatile tool trusted by top retailers worldwide to drive growth through AI-powered product discovery.

Roundabout

Roundabout is a micro-influencer marketing platform that helps brands extend their reach and helps agencies work more efficiently. The platform lets users discover influencers across various industry niches, create engaging content, and track campaign performance. With features for finding, controlling, and converting influencers, Roundabout offers a transparent and flexible process for running influencer marketing campaigns.

FineSkyAi

FineSkyAi is an AI application that offers bespoke AI solutions to streamline workflows, enhance creativity, and optimize operations. The platform focuses on developing custom AI tools that improve processes and help users reach their full potential. Using current AI models and technology, FineSkyAi aims to provide high-quality, reliable support that helps users generate unique results and optimize adaptive processes.

TestMarket

TestMarket is an AI-powered sales optimization platform for online marketplace sellers. It offers a range of services to help sellers increase their visibility, boost sales, and improve their overall performance on marketplaces such as Amazon, Etsy, and Walmart. TestMarket's services include product promotion, keyword analysis, Google Ads and SEO optimization, and advertising optimization.

Shopstory

Shopstory is an AI automation tool that helps marketing teams and agencies save time by building scalable, automated processes. It offers a versatile flow builder, Excel-like formulas, and AI actions that generate content and extract structured data. With over 2,600 integrations and efficient flow management, Shopstory helps users automate at scale and drive results across all their clients. The tool provides dedicated onboarding and support, pre-built automations, and guided setups for quick implementation.

0 open source AI tools

20 OpenAI GPTs

Search Query Optimizer

Create the most effective database or search engine queries using keywords, truncation, and Boolean operators!
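
Boolean syntax differs between databases and search engines (some require `AND NOT`, some use `-` for exclusion, and truncation symbols vary), so the following Python sketch assumes a generic convention: `OR` within a group of synonyms, `AND` between concepts, double quotes for phrases, `*` for truncation, and `NOT` for exclusions. The helper `build_boolean_query` is hypothetical and not part of the GPT.

```python
from typing import Optional


def build_boolean_query(concepts: list[list[str]],
                        exclude: Optional[list[str]] = None) -> str:
    """Combine synonym groups into a Boolean search string.

    Terms within a concept are joined with OR, concepts are joined with AND,
    multi-word terms are quoted as phrases, and excluded terms get NOT.
    A trailing '*' (truncation) is passed through unchanged.
    """
    def fmt(term: str) -> str:
        # Quote multi-word terms so they are searched as exact phrases.
        return f'"{term}"' if " " in term else term

    groups = []
    for synonyms in concepts:
        joined = " OR ".join(fmt(t) for t in synonyms)
        # Parentheses keep OR groups from mixing with the surrounding ANDs.
        groups.append(f"({joined})" if len(synonyms) > 1 else joined)

    query = " AND ".join(groups)
    for term in exclude or []:
        query += f" NOT {fmt(term)}"
    return query


print(build_boolean_query([["teen*", "adolescent*"], ["social media"]],
                          exclude=["adult*"]))
```

This prints `(teen* OR adolescent*) AND "social media" NOT adult*`. Grouping synonyms in parentheses matters because many engines give `AND` higher precedence than `OR`, and an ungrouped query can silently change meaning.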

A/B Test GPT

Calculate the results of your A/B test and check whether the result is statistically significant or due to chance.
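
The listing does not say which statistical test the GPT uses. A common way to check whether a difference in conversion rates is statistically significant is the two-proportion z-test, sketched below in Python with only the standard library. The function name and the example counts are hypothetical, and the test relies on a normal approximation that assumes reasonably large samples.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    if n_a <= 0 or n_b <= 0:
        raise ValueError("sample sizes must be positive")
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero (all or no visitors converted)")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


z, p = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With 200 conversions out of 5,000 visitors for A and 250 out of 5,000 for B, this gives z ≈ 2.41 and p ≈ 0.016, below the conventional 0.05 threshold, so the difference is unlikely to be due to chance alone.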

Website Conversion by B12

I'll help you optimize your website for more conversions, and compare your site's CRO potential to competitors’.

SEO Magic

A GPT created by Max Del Rosso that specialises in analysing and optimising SEO content. It develops targeted content strategies and advises on best practices for structuring articles to maximise visibility and ranking in search engine results pages (SERPs).

CV Optimiser

ATS-Optimised CVs that get results. Upload a general CV and job description, get a specific, optimised CV.

Data Analysis - SERP

It helps me analyze SERPs and data from certain websites in order to create an outline for the writer.

International SEO and UX Expert Guide

Guides on optimizing websites for international audiences

SEARCHLIGHT

Script Examples and Resource Center for Helping with LAMMPS Input Generation and High-quality Tutorials (SERCHLIGHT)

Programmatic Advertising Expert (ENG/GER)

All you need to know, from the basics to the latest developments, e.g. the post-cookie landscape in 2024, the rise of DOOH, CTV, in-housing, and much more.