Best AI Tools for Optimizing Parallelism

20 AI Tool Sites

Webflow Optimize

Webflow Optimize is a website optimization and personalization tool for creating, editing, and managing web content. It offers A/B testing, personalized site experiences, and AI-powered optimization, so users can experiment with different variations and deliver personalized content to visitors. The platform integrates with Webflow's visual development environment, allowing users to create and launch variations without writing complex code.

Qualtrics XM

Qualtrics XM is a leading Experience Management Software that helps businesses optimize customer experiences, employee engagement, and market research. The platform leverages specialized AI to uncover insights from data, prioritize actions, and empower users to enhance customer and employee experience outcomes. Qualtrics XM offers solutions for Customer Experience, Employee Experience, Strategy & Research, and more, enabling organizations to drive growth and improve performance.

Jobscan

Jobscan is a job search tool that helps job seekers optimize their resumes, cover letters, and LinkedIn profiles to improve their chances of getting interviews. It uses artificial intelligence and machine learning to analyze job descriptions and identify the skills and keywords recruiters are looking for, then provides personalized suggestions for tailoring application materials to each specific job. Beyond resume and cover letter optimization, Jobscan also offers a job tracker, a LinkedIn optimization tool, and a career change tool. With this suite of features, Jobscan aims to help job seekers land the jobs they want.

TestMarket

TestMarket is an AI-powered sales optimization platform for online marketplace sellers. It offers a range of services to help sellers increase their visibility, boost sales, and improve their overall performance on marketplaces such as Amazon, Etsy, and Walmart. TestMarket's services include product promotion, keyword analysis, SEO, and optimization of Google Ads and other advertising.

VWO

VWO is an experimentation platform that helps businesses optimize their digital experiences and increase conversions. Its suite of products covers the entire optimization program: understanding user behavior, validating optimization hypotheses, personalizing experiences, and delivering tailored content to specific audience segments. VWO positions the platform as enterprise-ready and scalable, with strong security, accessibility, and performance. According to VWO, thousands of brands use it to grow through experimentation loops that improve the customer experience.

Botify AI

Botify AI is an AI-powered tool that helps users improve their website's performance and search engine rankings. Using machine learning, it provides insights and recommendations for improving website visibility and driving organic traffic. Users can analyze aspects of their website such as content quality, site structure, and keyword optimization to refine their SEO strategy, and use the results to make data-driven decisions about their online presence.

Siteimprove

Siteimprove is an AI-powered platform that offers digital governance, analytics, and SEO tools to help businesses optimize their online presence. It covers digital accessibility, quality assurance, content analytics, search engine marketing, and cross-channel advertising. With AI-powered insights, automated analysis, and machine learning, Siteimprove aims to help users improve their website's reach, reputation, revenue, and returns. Because it addresses many digital requirements in a single platform, it suits businesses looking to improve their overall online performance.

SiteSpect

SiteSpect is an AI-driven platform that offers A/B testing, personalization, and optimization solutions for businesses. Its capabilities include analytics, a visual editor, mobile support, and AI-driven product recommendations. SiteSpect helps businesses validate ideas, deliver personalized experiences, manage feature rollouts, and make data-driven decisions. Focused on conversion and revenue, it serves marketers, product managers, developers, network operations teams, retailers, and media and entertainment companies. SiteSpect says the platform delivers faster site performance, better data accuracy, scalability, and expert support, with secure and certified optimization.

EverSQL

EverSQL is an AI-powered tool for SQL query optimization, database observability, and cost reduction for PostgreSQL and MySQL databases. It automatically optimizes SQL queries using AI-based algorithms, provides ongoing performance insights, and offers optimization recommendations that help reduce monthly database costs. EverSQL reports that over 100,000 professionals use it, and it aims to save time, improve database performance, and increase cost-efficiency without accessing sensitive data.

Attention Insight

Attention Insight is an AI-driven pre-launch analytics tool that predicts how consumers will engage with a design before it launches. Using predictive attention heatmaps and AI-generated attention analytics, users can validate designs, optimize concepts for better performance, and improve user experience. The tool bases its data on psychological research, helping users make informed decisions while saving time and resources. It supports analysis of desktop and mobile designs, marketing materials, posters, packaging, and shelf displays.

Competera

Competera is an AI-powered pricing platform designed for online and omnichannel retailers. It offers a unified workspace with an easy-to-use interface, real-time market data, and AI-powered product matching. Competera focuses on demand-based pricing, customer-centric pricing, and balancing price elasticity against competitive pricing. It provides granular pricing at the SKU level and a smooth adoption and onboarding process. The platform helps retailers optimize pricing strategies, increase margins, and save time on repricing.

Inventoro

Inventoro is a smart inventory forecasting and replenishment tool that helps businesses optimize their inventory management processes. By analyzing past sales data, the tool predicts future sales, recommends order quantities, reduces inventory size, identifies profitable inventory items, and ensures customer satisfaction by avoiding stockouts. Inventoro offers features such as sales forecasting, product segmentation, replenishment, system integration, and forecast automations. The tool is designed to help businesses decrease inventory, increase revenue, save time, and improve product availability. It is suitable for businesses of all sizes and industries looking to streamline their inventory management operations.
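The forecast-then-replenish loop described above can be illustrated with a textbook periodic-review (order-up-to) policy. This is a minimal sketch under stated assumptions, not Inventoro's method: demand is forecast as a simple moving average, safety stock uses a normal approximation with an assumed service factor `z`, and the function names are hypothetical.

```python
import math
from statistics import mean, pstdev


def forecast_daily_demand(daily_sales: list[float], window: int = 7) -> float:
    """Hypothetical forecast: mean of the most recent `window` days of sales."""
    return mean(daily_sales[-window:])


def order_quantity(
    daily_sales: list[float],
    on_hand: float,
    lead_time_days: int,
    review_days: int,
    z: float = 1.65,  # assumed service factor (roughly a 95% cycle service level)
    window: int = 7,
) -> int:
    """Hypothetical order-up-to calculation for a single SKU."""
    demand = forecast_daily_demand(daily_sales, window)
    sigma = pstdev(daily_sales[-window:])  # day-to-day demand variability
    horizon = lead_time_days + review_days  # days this order must cover
    safety_stock = z * sigma * math.sqrt(horizon)
    target_level = demand * horizon + safety_stock
    # Order only the shortfall below the target level, never a negative amount.
    return max(0, math.ceil(target_level - on_hand))
```

For example, with a steady 10 units per day, 30 units on hand, a 3-day lead time, and a 4-day review cycle, `order_quantity([10] * 7, 30, 3, 4)` returns 40. More volatile sales raise the safety stock and therefore the order, which is the trade-off between stockouts and excess inventory that replenishment tools try to balance.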

Vic.ai

Vic.ai is an AI-powered accounting software designed to streamline invoice processing, purchase order matching, approval flows, payments, analytics, and insights. The platform offers autonomous finance solutions that optimize accounts payable processes, achieve lasting ROI, and enable informed decision-making. Vic.ai leverages AI technology to enhance productivity, accuracy, and efficiency in accounting workflows, reducing manual tasks and improving overall financial operations.

Paro

Paro is a professional business finance and accounting solutions platform that matches businesses and accounting firms with skilled finance experts. It offers a wide range of services including accounting, bookkeeping, financial planning, budgeting, business analysis, data visualization, strategic advisory, growth strategy consulting, startup and fundraising consulting, transaction advisory, tax and compliance services, AI consulting services, and more. Paro aims to help businesses optimize faster by providing expert solutions to bridge gaps in finance and accounting operations. The platform also offers staff augmentation services, talent acquisition, and custom solutions to enhance operational efficiency and maximize ROI.

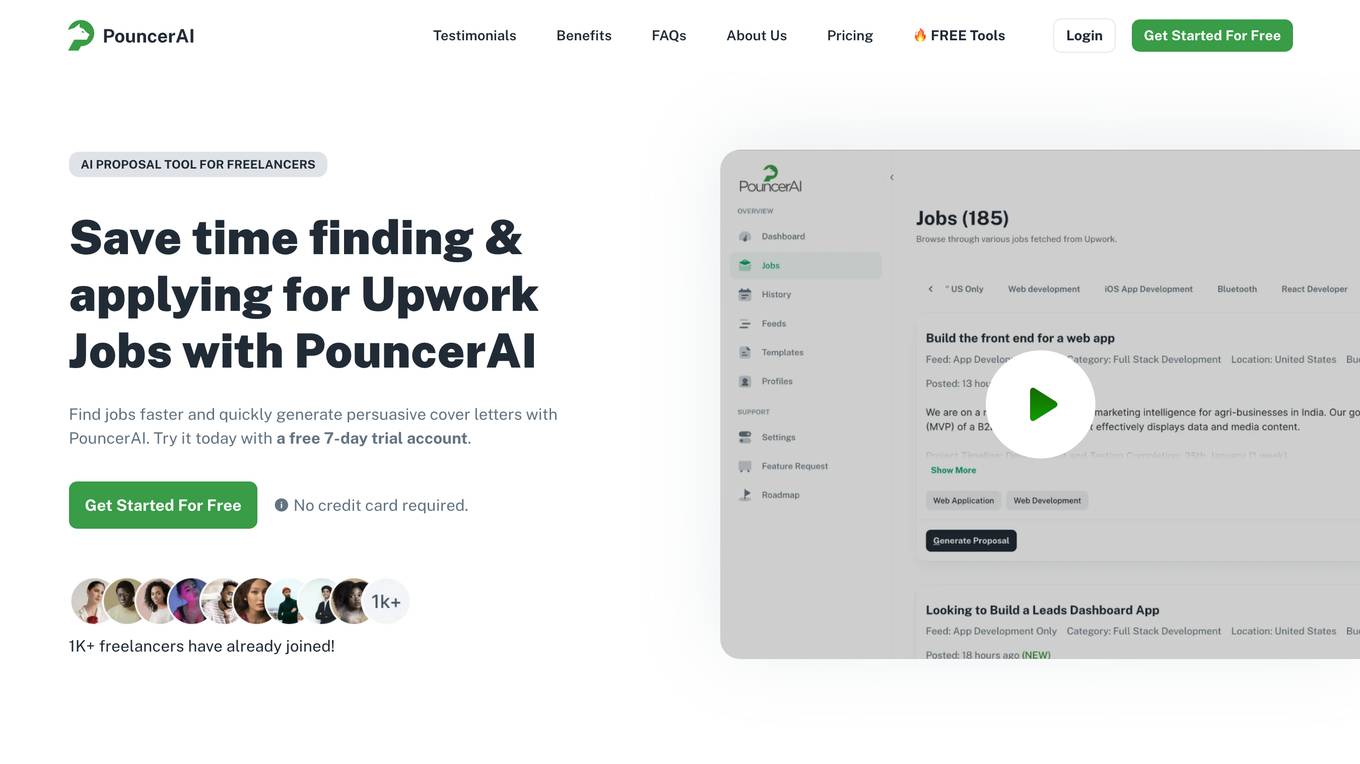

PouncerAI

PouncerAI is an AI tool designed for Upwork freelancers to enhance their profiles and proposals using AI-guided insights. It offers a Profile Optimizer to create client-attracting profiles and a Proposal Generator to simplify cover letter creation. The tool aims to help freelancers stand out in the competitive market by leveraging AI technology to optimize their Upwork presence and increase job opportunities.

Seventh Sense

Seventh Sense is AI software for HubSpot and Marketo users that optimizes email delivery times. It helps email marketers improve engagement and conversions by personalizing each email's delivery time based on the individual recipient's behavior. The tool addresses the challenges of email marketing in a competitive digital landscape by using AI to increase deliverability, engagement, and conversions. According to the company, Seventh Sense has helped hundreds of companies improve their email marketing performance and stand out in crowded inboxes.
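Per-recipient send-time personalization can be sketched as picking the hour at which each recipient has most often opened past emails, falling back to the audience-wide pattern when a recipient has little history. This is a hypothetical illustration, not Seventh Sense's algorithm; the function name `best_send_hour`, the hour-of-day granularity, the `min_opens` threshold, and the `default_hour` fallback are all assumptions.

```python
from collections import Counter


def best_send_hour(
    recipient_open_hours: list[int],
    audience_open_hours: list[int],
    min_opens: int = 3,
    default_hour: int = 9,
) -> int:
    """Return the hour (0-23) with the most historical opens.

    Uses the recipient's own open history when it has at least `min_opens`
    events; otherwise falls back to the whole audience's history, and to
    `default_hour` when there is no data at all.
    """
    if len(recipient_open_hours) >= min_opens:
        counts = Counter(recipient_open_hours)
    else:
        counts = Counter(audience_open_hours)
    if not counts:
        return default_hour
    # Most frequent hour wins; ties go to the earliest hour.
    return min(counts, key=lambda hour: (-counts[hour], hour))
```

For example, `best_send_hour([9, 9, 14, 20], [8, 8, 12])` returns 9, while `best_send_hour([22], [8, 8, 12])` has too little individual data and returns the audience favorite, 8. A production system would likely weight recent opens more heavily and account for time zones.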

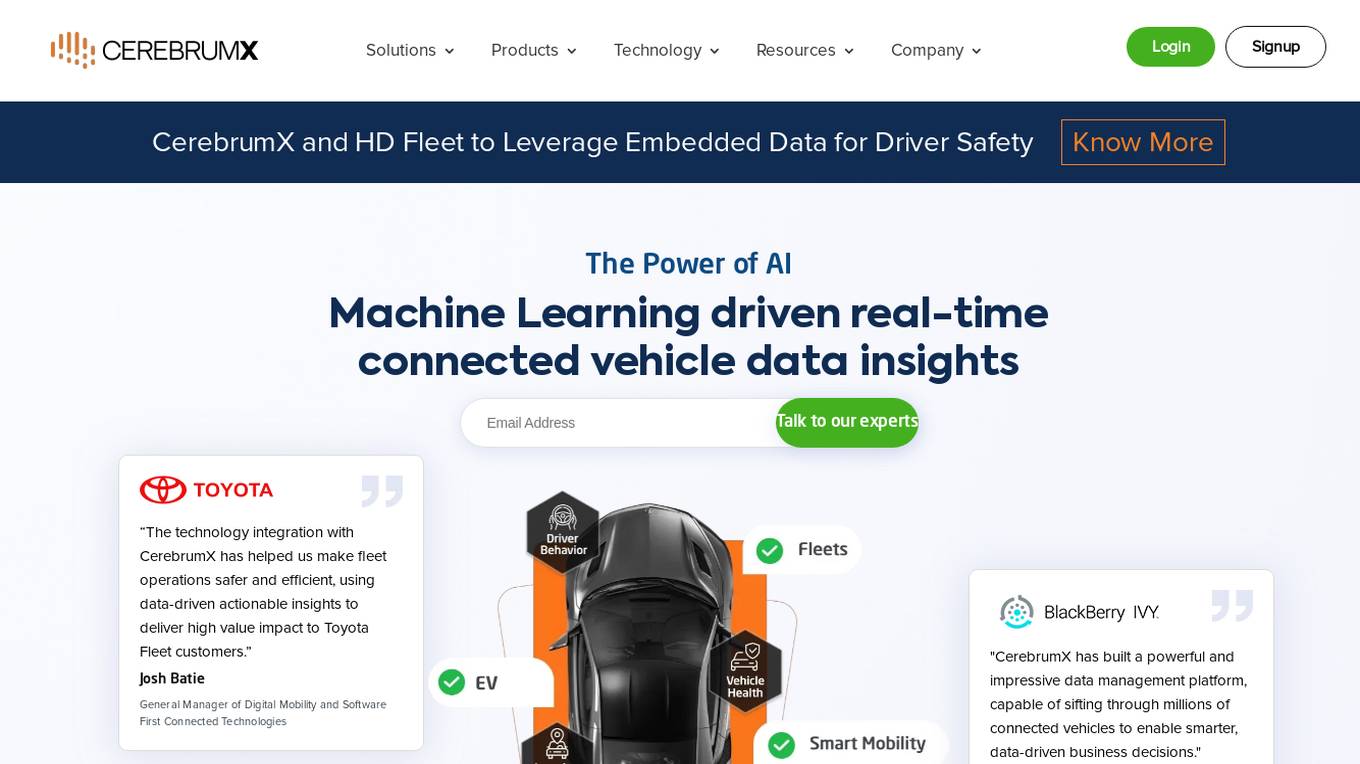

CEREBRUMX

CEREBRUMX is an AI-powered platform that offers telematics solutions for preventive car maintenance to industries such as fleet management, vehicle service contracts, electric vehicles, smart cities, and media. The platform provides data insights and features such as driver safety, EV charging, predictive maintenance, roadside assistance, and traffic flow management. Using real-time connected vehicle data, CEREBRUMX aims to optimize fleet operations and improve efficiency for its customers.

CloudEagle.ai

CloudEagle.ai is a modern SaaS procurement and management platform that offers AI/ML capabilities. It helps optimize SaaS stacks, manage contracts, streamline procurement workflows, and ensure cost savings by identifying unused licenses. The platform also assists in vendor research, renewal management, and automating provisioning processes. CloudEagle.ai is recognized for its AI/ML capabilities in the 2024 Gartner Magic Quadrant.

Rewatch

Rewatch is an AI-powered meeting assistant and video hub that captures meetings and generates summaries, transcriptions, and action items. It centralizes meeting videos, notes, and discussions in one place, letting teams replace repetitive in-person meetings with asynchronous, collaborative video series. Rewatch also offers screen recording, integrations with other tools, and conversation intelligence that gives organizations actionable insights. Rewatch aims to make necessary meetings more effective, eliminate unnecessary ones, and improve cross-functional collaboration in a single hub.

Sellozo

Sellozo is an AI-driven automation platform for optimizing Amazon advertising and boosting sales. Its features include AI Technology, Dayparting, Campaign Studio, Autopilot Repricer, and more. Sellozo offers flat-fee pricing without long-term contracts and says it increases users' ad profit by an average of 70%. The platform uses AI to automate advertising strategies, lower costs, and maximize profits. Campaign Studio lets users design and refine their PPC campaigns, while the full PPC management service handles advertising so businesses can focus on growth. Sellozo states that its platform is powered by billions of transactions and positions it as a trusted option for Amazon sellers seeking better advertising performance.

1 Open Source AI Tool

ReaLHF

ReaLHF is a distributed system for efficient RLHF training of large language models (LLMs). It introduces parameter reallocation, which dynamically redistributes LLM parameters across the cluster so that each computation workload gets its own optimized allocation and parallelism strategy. ReaLHF minimizes redundant communication while maximizing GPU utilization, achieving significantly higher Proximal Policy Optimization (PPO) training throughput than other systems. It supports large-scale training with various parallelism strategies and enables memory-efficient training through parameter and optimizer offloading. The system integrates with HuggingFace checkpoints and inference frameworks, making it easy to launch local or distributed experiments. ReaLHF offers flexible configuration and supports various RLHF algorithms, including DPO, PPO, and RAFT, and lets users add custom algorithms that retain the same high efficiency.
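The idea of giving each RLHF workload (for example, response generation versus policy training) its own parallelism layout can be illustrated with a brute-force search over data-, tensor-, and pipeline-parallel degrees. This is a toy sketch, not ReaLHF's planner or API: the `Workload` fields, the cost model (ideal compute plus a pipeline-bubble term and linear communication penalties), and all numbers are made-up assumptions chosen only to show why different workloads can prefer different layouts on the same GPUs.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of one RLHF computation (all units arbitrary)."""
    name: str
    flops: float    # total compute for one step
    mem_gb: float   # memory footprint: weights, plus grads/optimizer state if training
    tp_comm: float  # assumed cost per extra tensor-parallel rank
    dp_comm: float  # assumed cost per extra data-parallel replica (gradient sync)


def layouts(n_gpus: int):
    """Yield every (dp, tp, pp) degree triple whose product is n_gpus."""
    for dp, tp in product(range(1, n_gpus + 1), repeat=2):
        if n_gpus % (dp * tp) == 0:
            yield dp, tp, n_gpus // (dp * tp)


def step_cost(w: Workload, dp: int, tp: int, pp: int, n_gpus: int,
              microbatches: int = 8) -> float:
    """Toy cost model: ideal compute + pipeline bubble + communication penalties."""
    compute = w.flops / n_gpus
    bubble = compute * (pp - 1) / microbatches
    return compute + bubble + w.tp_comm * (tp - 1) + w.dp_comm * (dp - 1)


def best_layout(w: Workload, n_gpus: int, gpu_mem_gb: float) -> tuple[int, int, int]:
    """Pick the cheapest layout whose model shard fits in one GPU's memory."""
    candidates = [
        (step_cost(w, dp, tp, pp, n_gpus), (dp, tp, pp))
        for dp, tp, pp in layouts(n_gpus)
        # tp and pp shard the model across GPUs; dp replicates each shard.
        if w.mem_gb / (tp * pp) <= gpu_mem_gb
    ]
    if not candidates:
        raise ValueError(f"{w.name} does not fit on {n_gpus} GPUs")
    return min(candidates)[1]


if __name__ == "__main__":
    generate = Workload("generate", flops=800, mem_gb=140, tp_comm=5, dp_comm=0)
    train = Workload("train", flops=2400, mem_gb=280, tp_comm=5, dp_comm=20)
    for w in (generate, train):
        print(w.name, best_layout(w, n_gpus=8, gpu_mem_gb=40))
```

With these invented numbers on 8 GPUs with 40 GB each, generation picks `(2, 4, 1)`, two replicas of a 4-way tensor-parallel model, while training's larger memory footprint forces a single 8-way tensor-parallel replica, `(1, 8, 1)`. A real system would also have to weigh the cost of moving parameters between the two layouts, which is the overhead parameter reallocation is meant to keep low.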

20 OpenAI GPTs

CV & Resume ATS Optimize + 🔴Match-JOB🔴

Professional Resume & CV Assistant 📝 Optimize for ATS 🤖 Tailor to Job Descriptions 🎯 Compelling Content ✨ Interview Tips 💡

Website Conversion by B12

I'll help you optimize your website for more conversions, and compare your site's CRO potential to competitors’.

Thermodynamics Advisor

Advises on thermodynamic processes to optimize system efficiency.

Cloud Architecture Advisor

Guides cloud strategy and architecture to optimize business operations.

International Tax Advisor

Advises on international tax matters to optimize a company's global tax position.

Investment Management Advisor

Provides strategic financial guidance on investment decisions to optimize an organization's wealth.

ESG Strategy Navigator 🌱🧭

Optimize your business with sustainable practices! ESG Strategy Navigator helps integrate Environmental, Social, Governance (ESG) factors into corporate strategy, ensuring compliance, ethical impact, and value creation. 🌟

Floor Plan Optimization Assistant

Helps optimize floor plans. For a better experience, please visit collov.ai.

AI Business Transformer

Top AI for business automation, data analytics, content creation. Optimize efficiency, gain insights, and innovate with AI Business Transformer.

Business Pricing Strategies & Plans Toolkit

A variety of business pricing tools and strategies! Optimize your price strategy and tactics with AI-driven insights. Critical pricing tools for businesses of all sizes looking to strategically navigate the market.

Purchase Order Management Advisor

Manages purchase orders to optimize procurement operations.

E-Procurement Systems Advisor

Advises on e-procurement systems to optimize purchasing processes.

Contract Administration Advisor

Advises on contract administration to optimize procurement processes.