Best AI tools for: Optimize CPU

20 - AI tool Sites

Modular

Modular is a fast, scalable generative AI inference platform that offers a suite of tools and resources for AI development and deployment. It covers model development, deployment, inference, and research, and provides documentation, models, tutorials, and step-by-step guides. Modular targets both GPU and CPU performance, scales intelligently to any cluster, and is available in several editions with different deployment options. The platform lets users build agent workflows, use AI retrieval and controlled generation, develop chatbots, generate code, and improve resource utilization through batch processing.

Rafay

Rafay is an AI-powered platform that accelerates cloud-native and AI/ML initiatives for enterprises. It provides automation for Kubernetes clusters, cloud cost optimization, and AI workbenches as a service. Rafay enables platform teams to focus on innovation by automating self-service cloud infrastructure workflows.

Wallaroo.AI

Wallaroo.AI is an AI inference platform that offers production-grade AI inference microservices optimized on OpenVINO for cloud and Edge AI application deployments on CPUs and GPUs. It provides hassle-free AI inferencing for any model, any hardware, anywhere, with ultrafast turnkey inference microservices. The platform enables users to deploy, manage, observe, and scale AI models effortlessly, reducing deployment costs and time-to-value significantly.

Anycores

Anycores is an AI tool designed to optimize the performance of deep neural networks and reduce the cost of running AI models in the cloud. It offers automated tuning, inference consultation, a zoo of optimized networks, and a platform for reducing AI model cost. Anycores focuses on faster execution, claiming inference time reductions of more than 10x, and on a smaller footprint at deployment. It is device agnostic, supporting Nvidia and AMD GPUs; Intel, ARM, and AMD CPUs; servers; and edge devices. The tool aims to provide highly optimized, low-footprint networks tailored to specific deployment scenarios.

Pixian.AI

Pixian.AI is an AI tool that specializes in removing backgrounds from images. It offers a free service with no signup required, as well as a paid option for higher resolution images. The tool uses powerful GPUs and multi-core CPUs to analyze images and provide high-quality results. Pixian.AI aims to provide efficient and cost-effective AI image processing solutions to users, with a focus on quality and value.

AMD AI Solutions

AMD AI Solutions is a leading AI innovation platform with a broad portfolio, open ecosystem, and cutting-edge technology for data centers, edge computing, and clients. The platform offers end-to-end solutions powered by CPUs, GPUs, accelerators, networking, and open software, delivering unmatched flexibility and performance. AMD enables accelerated AI outcomes, sustained AI success, and is recognized as a trusted AI partner. With a commitment to minimizing costs, prioritizing security, and staying flexible, AMD empowers businesses and consumers to scale AI deployments effectively and efficiently.

ONNX Runtime

ONNX Runtime is a production-grade AI engine designed to accelerate machine learning training and inferencing in various technology stacks. It supports multiple languages and platforms, optimizing performance for CPU, GPU, and NPU hardware. ONNX Runtime powers AI in Microsoft products and is widely used in cloud, edge, web, and mobile applications. It also enables large model training and on-device training, offering state-of-the-art models for tasks like image synthesis and text generation.

Caffe

Caffe is a deep learning framework developed by Berkeley AI Research (BAIR) and community contributors. It is designed for speed, modularity, and expressiveness, allowing users to define models and optimization through configuration without hard-coding. Caffe supports both CPU and GPU training, making it suitable for research experiments and industry deployment. The framework is extensible, actively developed, and tracks the state-of-the-art in code and models. Caffe is widely used in academic research, startup prototypes, and large-scale industrial applications in vision, speech, and multimedia.

Modal

Modal is a high-performance cloud platform designed for developers and for AI, data, and ML teams. It offers a serverless environment for running generative AI models, large-scale batch jobs, job queues, and more. With Modal, users can bring their own code and rely on the platform's optimized container file system for fast cold boots and seamless autoscaling. The platform is engineered for large-scale workloads, allowing users to scale to hundreds of GPUs, pay only for what they use, and deploy functions to the cloud in seconds without YAML or Dockerfiles. Modal also provides features for job scheduling, web endpoints, observability, and security compliance.

Deep Live Cam

Deep Live Cam is a cutting-edge AI tool that enables real-time face swapping and one-click video deepfakes. It harnesses advanced AI algorithms to deliver high-quality face replacement with just a single image. The tool supports multiple execution platforms, including CPU, NVIDIA CUDA, and Apple Silicon, providing users with flexibility and optimized performance. Deep Live Cam promotes ethical use by incorporating safeguards to prevent processing of inappropriate content. Additionally, it benefits from an active open-source community, ensuring ongoing support and improvements to stay at the forefront of technology.

Webflow Optimize

Webflow Optimize is a website optimization and personalization tool that empowers users to create, edit, and manage web content with ease. It offers features such as A/B testing, personalized site experiences, and AI-powered optimization. With Webflow Optimize, users can enhance their digital experiences, experiment with different variations, and deliver personalized content to visitors. The platform integrates seamlessly with Webflow's visual development environment, allowing users to create and launch variations without complex code implementations.

Qualtrics XM

Qualtrics XM is a leading Experience Management Software that helps businesses optimize customer experiences, employee engagement, and market research. The platform leverages specialized AI to uncover insights from data, prioritize actions, and empower users to enhance customer and employee experience outcomes. Qualtrics XM offers solutions for Customer Experience, Employee Experience, Strategy & Research, and more, enabling organizations to drive growth and improve performance.

Jobscan

Jobscan is a job search tool that helps job seekers optimize their resumes, cover letters, and LinkedIn profiles to increase their chances of getting interviews. It uses artificial intelligence and machine learning to analyze job descriptions and identify the skills and keywords that recruiters are looking for. Jobscan then provides personalized suggestions on how to tailor application materials to each specific job. In addition to its resume and cover letter optimization tools, Jobscan offers a job tracker, a LinkedIn optimization tool, and a career change tool.

TestMarket

TestMarket is an AI-powered sales optimization platform for online marketplace sellers. It offers a range of services to help sellers increase their visibility, boost sales, and improve their overall performance on marketplaces such as Amazon, Etsy, and Walmart. TestMarket's services include product promotion, keyword analysis, Google Ads and SEO optimization, and advertising optimization.

VWO

VWO is a comprehensive experimentation platform that enables businesses to optimize their digital experiences and maximize conversions. With a suite of products designed for the entire optimization program, VWO empowers users to understand user behavior, validate optimization hypotheses, personalize experiences, and deliver tailored content and experiences to specific audience segments. VWO's platform is designed to be enterprise-ready and scalable, with top-notch features, strong security, easy accessibility, and excellent performance. Trusted by thousands of leading brands, VWO has helped businesses achieve impressive growth through experimentation loops that shape customer experience in a positive direction.

Botify AI

Botify AI is an AI-powered tool designed to assist users in optimizing their website's performance and search engine rankings. By leveraging advanced algorithms and machine learning capabilities, Botify AI provides valuable insights and recommendations to improve website visibility and drive organic traffic. Users can analyze various aspects of their website, such as content quality, site structure, and keyword optimization, to enhance overall SEO strategies. With Botify AI, users can make data-driven decisions to enhance their online presence and achieve better search engine results.

Siteimprove

Siteimprove is an AI-powered platform that offers a comprehensive suite of digital governance, analytics, and SEO tools to help businesses optimize their online presence. It provides solutions for digital accessibility, quality assurance, content analytics, search engine marketing, and cross-channel advertising. With features like AI-powered insights, automated analysis, and machine learning capabilities, Siteimprove empowers users to enhance their website's reach, reputation, revenue, and returns. The platform transcends traditional boundaries by addressing a wide range of digital requirements and impact-drivers, making it a valuable tool for businesses looking to improve their online performance.

SiteSpect

SiteSpect is an AI-driven platform that offers A/B testing, personalization, and optimization solutions for businesses. It provides capabilities such as analytics, visual editor, mobile support, and AI-driven product recommendations. SiteSpect helps businesses validate ideas, deliver personalized experiences, manage feature rollouts, and make data-driven decisions. With a focus on conversion and revenue success, SiteSpect caters to marketers, product managers, developers, network operations, retailers, and media & entertainment companies. The platform ensures faster site performance, better data accuracy, scalability, and expert support for secure and certified optimization.

EverSQL

EverSQL is an AI-powered tool designed for SQL query optimization, database observability, and cost reduction for PostgreSQL and MySQL databases. It automatically optimizes SQL queries using smart AI-based algorithms, provides ongoing performance insights, and helps reduce monthly database costs by offering optimization recommendations. With over 100,000 professionals trusting EverSQL, it aims to save time, improve database performance, and enhance cost-efficiency without accessing sensitive data.

Attention Insight

Attention Insight is an AI-driven pre-launch analytics tool that provides crucial insights into consumer engagement with designs before the launch. By using predictive attention heatmaps and AI-generated attention analytics, users can optimize their concepts for better performance, validate designs, and improve user experience. The tool offers accurate data based on psychological research, helping users make informed decisions and save time and resources. Attention Insight is suitable for various types of analysis, including desktop, marketing material, mobile, posters, packaging, and shelves.

1 - Open Source AI Tools

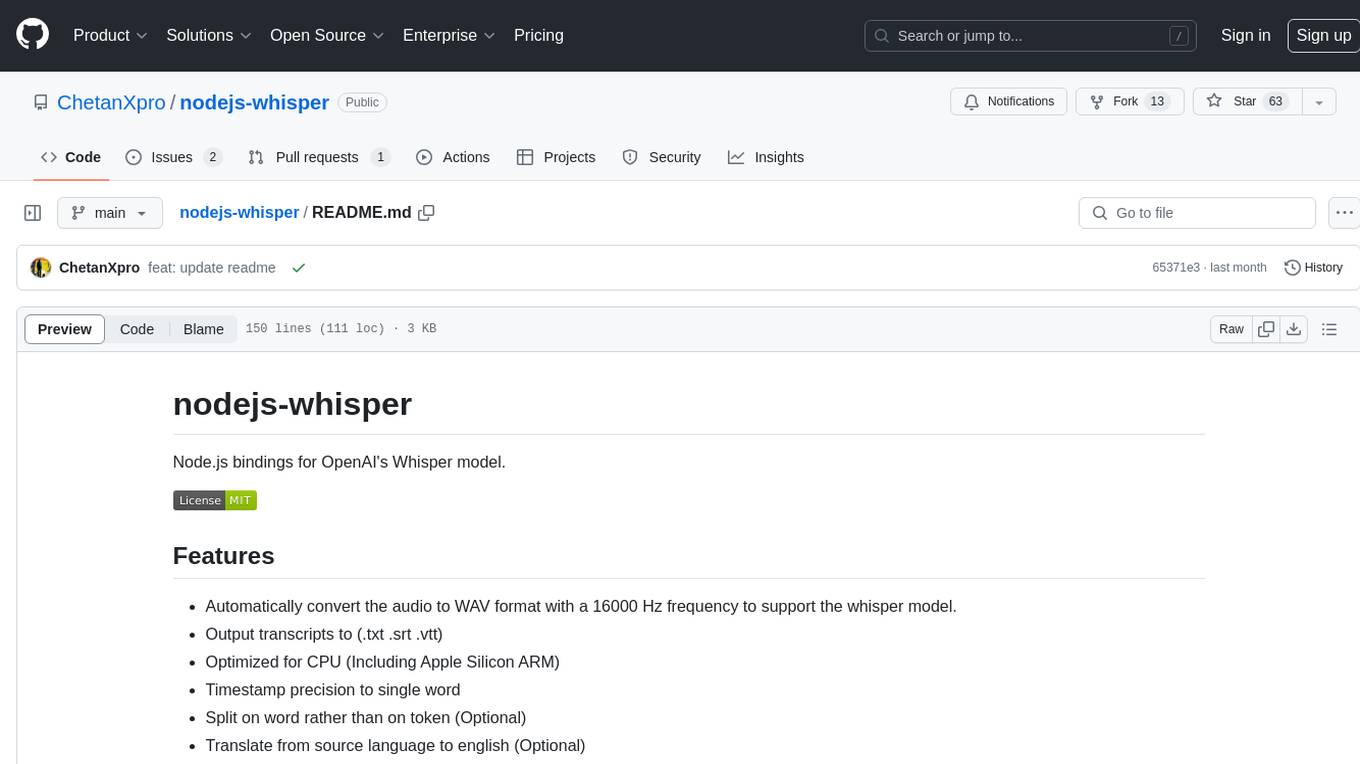

nodejs-whisper

Node.js bindings for OpenAI's Whisper model. The package automatically converts input audio to WAV format at a 16000 Hz sample rate, as the Whisper model requires. It outputs transcripts in various formats, is optimized for CPU (including Apple Silicon ARM), provides timestamps with single-word precision, can split on words rather than tokens, and can translate from the source language to English.
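
The 16000 Hz requirement in the description above can be illustrated with a small check. What follows is a minimal Python sketch and not part of nodejs-whisper: it uses only the standard `wave` module, and the helper names `make_silent_wav` and `needs_resampling` are hypothetical, introduced here for illustration. It only detects whether a WAV file already has the target sample rate; the actual conversion is left to whatever tool the package uses.

```python
import io
import wave

# Sample rate named in the description above; everything else is illustrative.
TARGET_RATE_HZ = 16000


def make_silent_wav(rate_hz: int, seconds: float = 0.1) -> bytes:
    """Build a short mono, 16-bit WAV of silence in memory (hypothetical fixture)."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(1)        # mono (assumption for the fixture only)
        w.setsampwidth(2)        # 16-bit samples (assumption for the fixture only)
        w.setframerate(rate_hz)
        w.writeframes(b"\x00\x00" * int(rate_hz * seconds))
    return buf.getvalue()


def needs_resampling(wav_bytes: bytes, target_hz: int = TARGET_RATE_HZ) -> bool:
    """Return True when the WAV's sample rate differs from the target rate."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as w:
        return w.getframerate() != target_hz


if __name__ == "__main__":
    for rate in (44100, 16000):
        print(rate, needs_resampling(make_silent_wav(rate)))
```

A file recorded at 44100 Hz would be flagged for conversion, while one already at 16000 Hz would pass through unchanged.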

20 - OpenAI GPTs

CV & Resume ATS Optimize + 🔴Match-JOB🔴

Professional Resume & CV Assistant 📝 Optimize for ATS 🤖 Tailor to Job Descriptions 🎯 Compelling Content ✨ Interview Tips 💡

Website Conversion by B12

I'll help you optimize your website for more conversions, and compare your site's CRO potential to competitors’.

Thermodynamics Advisor

Advises on thermodynamic processes to optimize system efficiency.

Cloud Architecture Advisor

Guides cloud strategy and architecture to optimize business operations.

International Tax Advisor

Advises on international tax matters to optimize a company's global tax position.

Investment Management Advisor

Provides strategic financial guidance on investment behavior to optimize an organization's wealth.

ESG Strategy Navigator 🌱🧭

Optimize your business with sustainable practices! ESG Strategy Navigator helps integrate Environmental, Social, Governance (ESG) factors into corporate strategy, ensuring compliance, ethical impact, and value creation. 🌟

Floor Plan Optimization Assistant

Helps optimize floor plans. For a better experience, please visit collov.ai

AI Business Transformer

Top AI for business automation, data analytics, content creation. Optimize efficiency, gain insights, and innovate with AI Business Transformer.

Business Pricing Strategies & Plans Toolkit

A variety of business pricing tools and strategies! Optimize your price strategy and tactics with AI-driven insights. Critical pricing tools for businesses of all sizes looking to strategically navigate the market.

Purchase Order Management Advisor

Manages purchase orders to optimize procurement operations.

E-Procurement Systems Advisor

Advises on e-procurement systems to optimize purchasing processes.